Amazon Bedrock AgentCore is changing how developers build and deploy AI agents in enterprise environments. This platform addresses the growing need to standardize AI agent development, making it easier for teams to create consistent, scalable solutions without reinventing the wheel for every project.

This guide is for AI developers, machine learning engineers, and enterprise teams who want to streamline conversational AI development with AWS tools. If you’re tired of fragmented agent architectures or struggle to keep different AI projects consistent, AgentCore offers a structured path forward.

We’ll walk through the essential components of AI agent architecture within the Amazon Bedrock ecosystem and show how AgentCore can change your development workflow. You’ll also see the key benefits that make standardizing AI agents a sound business decision, from shorter development time to better maintainability. Finally, we’ll cover practical steps for setting up your first AI agent with AgentCore, along with the practices that help it hold up in production.

Understanding Amazon Bedrock AgentCore Architecture

Core Components and Framework Overview

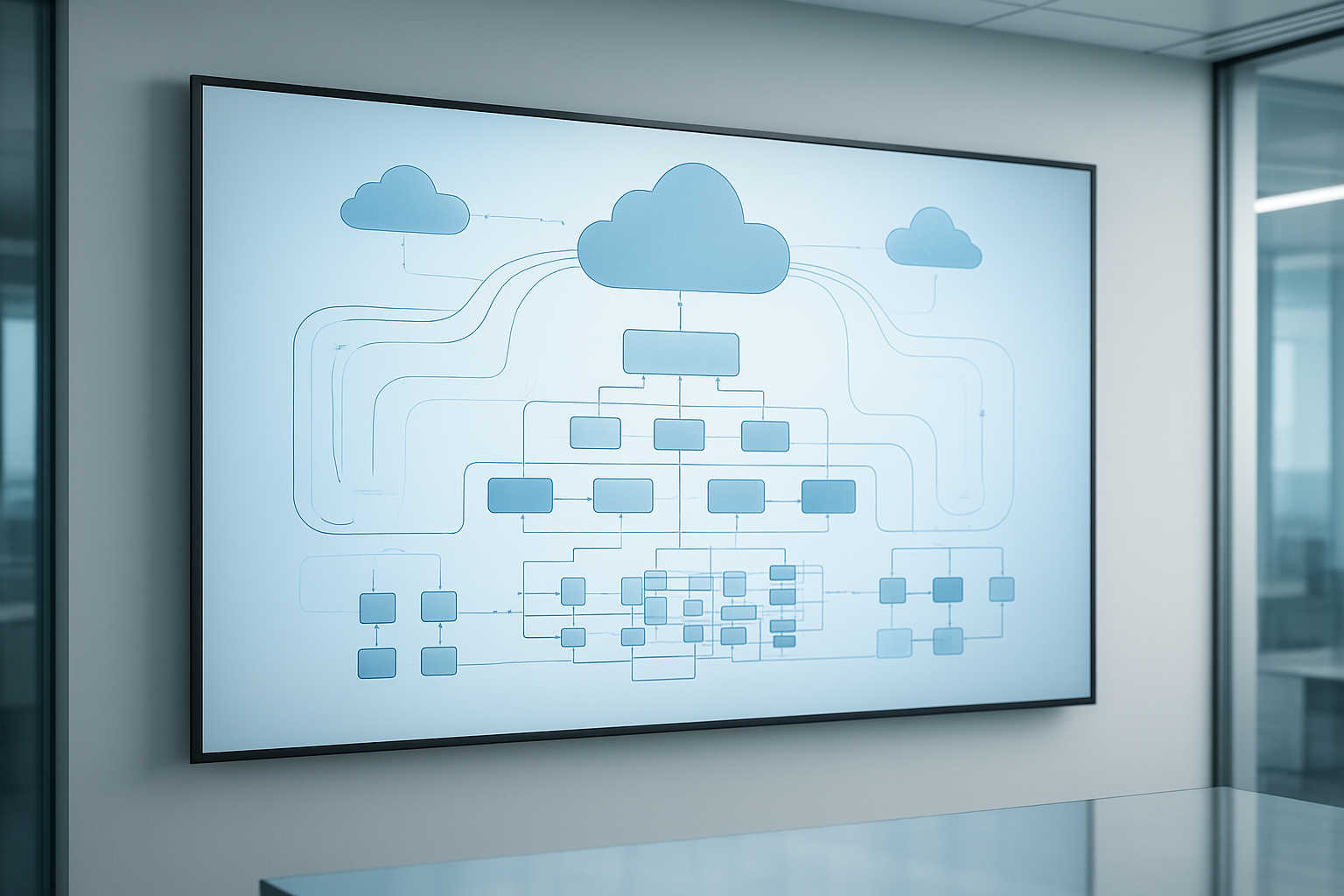

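Amazon Bedrock AgentCore provides a unified set of modular, managed services that streamline AI agent development through standardized components. The core pieces are AgentCore Runtime, which hosts agents and runs each session in isolation; AgentCore Memory, for short-term and long-term conversational memory; AgentCore Gateway, which exposes APIs and Lambda functions as tools an agent can call; AgentCore Identity, which manages how agents authenticate users and access external services on their behalf; and AgentCore Observability, for tracing and monitoring. Retrieval-augmented generation, for example against Amazon Bedrock Knowledge Bases, is typically wired in as a tool. Because the services are modular and work with popular agent frameworks, developers can build sophisticated conversational AI systems without managing the underlying infrastructure, while keeping a consistent architecture across enterprise AI solutions.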

Integration Capabilities with AWS Services

AgentCore connects with the broader AWS ecosystem. Through AgentCore Gateway, Lambda functions and APIs can be exposed to agents as tools, and those tools can in turn read from and write to services such as Amazon S3 and Amazon DynamoDB or query Amazon Kendra for enterprise search. AgentCore Identity manages the credentials these calls need, and Amazon CloudWatch collects monitoring data. This integration reduces the need for custom middleware, letting teams build on existing AWS investments while giving agents access to real-time data and business logic across multiple services.
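A Lambda function is a common place to put the business logic behind an agent tool. The sketch below shows a handler that looks up an order status. It assumes the tool arguments arrive as top-level keys of the event (for example `{"order_id": "A-100"}`); the real event shape depends on how the tool target is configured. The in-memory `ORDERS` table is a hypothetical stand-in for a DynamoDB table.

```python
import json

# Hypothetical in-memory data standing in for a DynamoDB table of orders.
ORDERS = {
    "A-100": {"status": "shipped", "carrier": "UPS"},
    "A-101": {"status": "processing", "carrier": None},
}


def lambda_handler(event, context):
    """Look up an order's status for an agent tool call.

    Assumes tool arguments are top-level keys of `event`; adjust to the
    event format your tool integration actually delivers.
    """
    order_id = event.get("order_id")
    if not order_id:
        # Return a structured error the agent can relay to the user or recover from.
        return {"error": "missing required argument: order_id"}

    order = ORDERS.get(order_id)
    if order is None:
        return {"error": f"order {order_id} not found"}

    # Keep the response small and JSON-serializable so it fits in the model context.
    return {"order_id": order_id, **order}


if __name__ == "__main__":
    print(json.dumps(lambda_handler({"order_id": "A-100"}, None)))
```

Returning errors as data rather than raising lets the agent explain the problem to the user instead of failing the whole turn.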

Built-in Tools and Resource Management

The platform includes built-in capabilities for common AI agent tasks, such as memory management and session handling, along with managed tools like a code interpreter and a web browser that agents can call. Each session runs in isolation, and compute scales with conversation volume instead of being provisioned by hand. Amazon Bedrock Guardrails can be applied to help enforce responsible AI behavior, and agent deployments can be versioned so changes can be rolled out, compared, and rolled back in a controlled way. These capabilities reduce development overhead and help keep performance consistent across deployment scenarios.
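To make the idea of session-scoped memory concrete, here is a minimal in-process sketch that keeps only the most recent turns for each session. The `SessionMemory` class and its methods are hypothetical and are not the AgentCore Memory API; a managed memory service would persist this state outside the process.

```python
from collections import defaultdict, deque


class SessionMemory:
    """Keeps the most recent conversation turns for each session (illustrative only)."""

    def __init__(self, max_turns: int = 6):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.max_turns = max_turns
        # Each session gets its own bounded deque; the oldest turns fall off the front.
        self._turns = defaultdict(lambda: deque(maxlen=self.max_turns))

    def add(self, session_id: str, role: str, text: str) -> None:
        self._turns[session_id].append({"role": role, "text": text})

    def context(self, session_id: str) -> list:
        # Return a copy so callers cannot mutate stored history; unknown sessions are empty.
        return list(self._turns.get(session_id, ()))

    def clear(self, session_id: str) -> None:
        self._turns.pop(session_id, None)


memory = SessionMemory(max_turns=2)
memory.add("session-1", "user", "What is my order status?")
memory.add("session-1", "assistant", "Order A-100 has shipped.")
memory.add("session-1", "user", "Which carrier?")
# Only the two most recent turns remain in the context window.
recent = memory.context("session-1")
```

Bounding the window per session keeps prompts short and prevents one long conversation from crowding out the context budget.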

Scalability and Performance Features

Because AgentCore Runtime is serverless, it scales agent sessions with demand, so traffic spikes do not require manual capacity planning. Deploying agents in multiple AWS Regions can keep latency low for global applications, and application-level caching of frequently accessed knowledge, along with pre-loading relevant context, can shorten response times further. Observability dashboards in Amazon CloudWatch provide near-real-time insight into agent behavior, enabling proactive optimization and supporting the reliability that mission-critical AI agent deployments require.
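Caching frequently accessed knowledge can be as simple as a time-to-live cache in front of the retrieval call. This sketch assumes a hypothetical `retrieve_fn` that queries a knowledge source; the clock is injectable so expiry can be tested without waiting.

```python
import time
from typing import Callable, Dict, List, Tuple


class TTLCache:
    """Caches retrieval results per normalized query for `ttl_seconds`.

    `retrieve_fn` is a hypothetical stand-in for a knowledge-base query.
    Stale entries are refreshed on access; there is no size-based eviction.
    """

    def __init__(self, retrieve_fn: Callable[[str], List[str]], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._retrieve = retrieve_fn
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, List[str]]] = {}

    def get(self, query: str) -> List[str]:
        # Normalize so trivially different phrasings of the same query share an entry.
        key = query.strip().lower()
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]
        results = self._retrieve(query)
        self._entries[key] = (now, results)
        return results
```

A short TTL trades a little freshness for fewer retrieval calls; for data that changes often, keep it short or invalidate entries when the source is re-synced.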

Key Benefits of Standardizing AI Agent Development

Reduced Development Time and Costs

Amazon Bedrock AgentCore can shorten development cycles by providing pre-built components and standardized workflows. Because teams do not have to build hosting, memory, identity, and monitoring from scratch, agents can reach production sooner, and shared resources with automated scaling can lower infrastructure costs. The platform removes much of the repetitive plumbing code and accelerates time-to-market for enterprise AI solutions.

Consistent Quality and Reliability Standards

Standardizing AI agent development on AgentCore helps keep performance consistent across deployments. Shared quality gates, automated testing, and uniform error handling make conversational AI experiences more reliable. Teams benefit from proven architectural patterns that support high availability and predictable response times, reducing production issues and support overhead.

Enhanced Team Collaboration and Knowledge Sharing

AgentCore creates a common development language that breaks down silos between data scientists, developers, and operations teams. Standardized templates, shared component libraries, and unified documentation enable seamless knowledge transfer. New team members onboard faster with consistent tooling, while experienced developers can easily contribute to any AI agent project across the organization.

Setting Up Your First AI Agent with AgentCore

Prerequisites and Account Configuration

First, you’ll need an active AWS account with IAM permissions for Amazon Bedrock and Amazon Bedrock AgentCore. Choose a region where AgentCore is available and make sure your account has access to the foundation models you plan to use. Configure AWS CLI credentials and install an AWS SDK for your development environment, such as boto3 for Python; for Python agents, the bedrock-agentcore package provides the AgentCore SDK. Review your billing settings, since model invocations and agent usage are billed on a pay-as-you-go basis.

Creating Agent Templates and Configurations

Amazon Bedrock AgentCore streamlines AI agent development through pre-built templates that handle common conversational patterns. Create your agent configuration by selecting appropriate foundation models like Claude or Titan, then define conversation flows using JSON schemas. Templates include customer service bots, document analysis agents, and task automation helpers. Configure memory persistence settings and conversation context windows to match your specific use case requirements.
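One way to keep agent configurations consistent is to load them from JSON and validate them before deployment. This is a minimal sketch with a hypothetical schema; the field names and the `example-model-id` placeholder are illustrative, not an AgentCore file format.

```python
import json
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class AgentConfig:
    """Hypothetical agent configuration schema; field names are illustrative."""
    name: str
    model_id: str
    instructions: str
    memory_enabled: bool = True
    max_context_turns: int = 10
    tools: List[str] = field(default_factory=list)

    def validate(self) -> None:
        if not self.name or not self.model_id:
            raise ValueError("name and model_id are required")
        if self.max_context_turns < 1:
            raise ValueError("max_context_turns must be at least 1")


def load_config(raw_json: str) -> AgentConfig:
    data = json.loads(raw_json)
    # Reject unknown keys so typos in a template surface before deployment.
    unknown = set(data) - {f.name for f in fields(AgentConfig)}
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    config = AgentConfig(**data)
    config.validate()
    return config


TEMPLATE = """
{
  "name": "customer-service",
  "model_id": "example-model-id",
  "instructions": "Answer billing questions politely and concisely.",
  "max_context_turns": 8,
  "tools": ["lookup_order"]
}
"""

config = load_config(TEMPLATE)
```

Failing fast on unknown keys is a deliberate choice: a misspelled setting that is silently ignored is harder to debug than one that stops the pipeline.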

Defining Agent Roles and Permissions

Security starts with proper role definition in your AI agent architecture. Create IAM roles that limit agent access to necessary AWS services and data sources. Define permission boundaries for external API calls, database connections, and file system access. Use resource-based policies to control which users can interact with your agents. Implement least-privilege principles by granting only essential permissions for agent functionality and data processing tasks.
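Least privilege is easiest to review when the policy is generated from explicit inputs. The sketch below builds an IAM policy document that allows invoking only named foundation models and reading one DynamoDB table. The table name, account ID, and model IDs are placeholders, and your agent may need different actions; models reached through cross-region inference profiles use different ARNs.

```python
def agent_policy(region: str, account_id: str, model_ids, table_name: str) -> dict:
    """Builds a least-privilege IAM policy document for a hypothetical agent.

    The agent may invoke only the listed foundation models and read one
    DynamoDB table. No wildcard actions or resources are used.
    """
    model_arns = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids
    ]
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModels",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": model_arns,
            },
            {
                "Sid": "ReadOrdersTable",
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": [table_arn],
            },
        ],
    }


policy = agent_policy("us-east-1", "123456789012", ["example-model-id"], "orders")
```

Generating policies in code also makes them easy to diff in review and to check automatically for wildcards.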

Testing and Validation Procedures

Test your AI agent thoroughly before production deployment. Start with unit tests for individual agent functions, then move to integration tests against external services. Write test conversations that cover edge cases, error handling, and unexpected user inputs. Use Amazon CloudWatch and AgentCore Observability to track latency, errors, and token consumption, and measure response accuracy by scoring agent answers against expected results for a fixed set of test conversations. Set up automated testing pipelines that validate agent behavior after every configuration change.
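Test conversations can be expressed as data: each case pairs an input with a check on the reply, and the same table runs against any agent callable. In this sketch, `stub_agent` is a hypothetical offline stand-in; in a pipeline you would pass a function that calls your deployed agent instead.

```python
from typing import Callable, List, Tuple


def stub_agent(message: str) -> str:
    """Hypothetical stand-in for a deployed agent so the tests run offline."""
    text = message.strip().lower()
    if not text:
        return "Could you tell me a bit more about what you need?"
    if "refund" in text:
        return "Refunds are processed within 5 business days."
    return "I'm not sure. Let me connect you with a specialist."


# Each case pairs a user input with a predicate on the agent's reply.
TEST_CASES: List[Tuple[str, Callable[[str], bool]]] = [
    ("How do refunds work?", lambda r: "refund" in r.lower()),
    ("", lambda r: r.endswith("?")),                        # empty input: ask a clarifying question
    ("asdf qwerty", lambda r: "specialist" in r.lower()),   # gibberish: fall back to a human
]


def run_conversation_tests(agent: Callable[[str], str], cases) -> List[str]:
    """Runs every case and returns descriptions of the failures."""
    failures = []
    for prompt, check in cases:
        try:
            reply = agent(prompt)
        except Exception as exc:  # an exception counts as a failed case, not a crash
            failures.append(f"{prompt!r}: raised {exc!r}")
            continue
        if not check(reply):
            failures.append(f"{prompt!r}: unexpected reply {reply!r}")
    return failures
```

Predicates rather than exact-match strings tolerate harmless wording changes in model output while still catching behavioral regressions.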

Advanced Configuration and Customization Options

Custom Knowledge Base Integration

Amazon Bedrock AgentCore lets you connect external data sources such as S3 buckets, databases, and document repositories to your AI agents, typically through Amazon Bedrock Knowledge Bases, which handle vector embeddings and retrieval for you. This makes it straightforward to inject domain-specific knowledge into agent responses. You can use multiple knowledge bases at once, choose a chunking strategy (fixed-size, hierarchical, semantic, or custom chunking through a Lambda function), and discard retrieved results whose relevance score falls below a threshold you set. Re-synchronize data sources when the underlying documents change so agents work with current information, and consider caching results for frequently asked queries to improve response times.
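To show what a fixed-size chunking strategy with overlap and a retrieval threshold do, here is a small sketch. It splits on words as a simple approximation (managed services typically count tokens), and the threshold filter represents application-side post-processing of scored results; both functions are hypothetical.

```python
from typing import List, Tuple


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Splits text into fixed-size word chunks that overlap by `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def filter_by_score(results: List[Tuple[str, float]], min_score: float = 0.5) -> List[str]:
    """Keeps retrieved chunks whose relevance score meets the threshold, best first."""
    kept = [r for r in results if r[1] >= min_score]
    return [text for text, _ in sorted(kept, key=lambda r: r[1], reverse=True)]
```

Overlap keeps a sentence that straddles a boundary retrievable from either side, at the cost of storing some text twice.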

Multi-Model Support and Selection

AgentCore is model-agnostic, so you can use foundation models from different providers and pick the best one for each task. You can route requests based on query complexity, cost, or performance requirements, and switching between models such as Anthropic Claude, Amazon Nova, and Meta Llama is often a matter of changing the model identifier rather than rewriting code. Advanced users can run A/B tests to compare model performance and route traffic to the best-performing option for each use case.
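Model routing can start as a simple heuristic. This sketch sends short, simple queries to a cheaper model and complex ones to a more capable model unless a cost cap applies. The model identifiers are placeholders, and the length and keyword heuristics are illustrative assumptions; production routers usually rely on evaluation data or a trained classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    model_id: str
    reason: str


# Placeholder identifiers; substitute model IDs available in your account and region.
FAST_MODEL = "example-small-model"
CAPABLE_MODEL = "example-large-model"

# Hypothetical phrases suggesting multi-step reasoning.
REASONING_HINTS = ("compare", "analyze", "explain why", "step by step", "trade-off")


def route(query: str, max_cost_tier: str = "high") -> ModelChoice:
    """Chooses a model from query length, keyword hints, and a cost cap."""
    text = query.lower()
    looks_complex = len(text.split()) > 40 or any(h in text for h in REASONING_HINTS)
    if looks_complex and max_cost_tier == "high":
        return ModelChoice(CAPABLE_MODEL, "complex query")
    if looks_complex:
        return ModelChoice(FAST_MODEL, "complex query, but cost tier capped")
    return ModelChoice(FAST_MODEL, "simple query")
```

Returning the reason alongside the model ID makes routing decisions easy to log and audit when comparing models.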

Fine-tuning Agent Behavior Parameters

Control your agent’s personality and response style through configurable parameters including temperature, top-p sampling, and response length limits. AgentCore provides granular controls for conversation flow management, including turn-taking rules, context window management, and response formatting options. You can adjust reasoning chains, set confidence thresholds for different response types, and configure fallback behaviors when the agent encounters ambiguous queries or reaches knowledge boundaries.
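Sampling parameters are easiest to manage as named presets. The sketch below builds dictionaries in the shape of the Bedrock Converse API's `inferenceConfig` parameter (`temperature`, `topP`, `maxTokens`, `stopSequences`), so a preset could be passed as `inferenceConfig=` to a `bedrock-runtime` client's `converse` call. The 0 to 1 ranges checked here are a conservative assumption; valid ranges vary by model.

```python
def build_inference_config(temperature: float = 0.2, top_p: float = 0.9,
                           max_tokens: int = 512, stop_sequences=None) -> dict:
    """Builds and validates an inference configuration dictionary.

    Range checks are conservative assumptions; consult each model's
    documentation for its actual limits.
    """
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    if max_tokens < 1:
        raise ValueError("max_tokens must be positive")
    config = {"temperature": temperature, "topP": top_p, "maxTokens": max_tokens}
    if stop_sequences:
        config["stopSequences"] = list(stop_sequences)
    return config


# Two hypothetical presets: focused support answers vs. more varied drafting.
PRESETS = {
    "support": build_inference_config(temperature=0.1, max_tokens=300),
    "brainstorm": build_inference_config(temperature=0.8, top_p=0.95, max_tokens=800),
}
```

Low temperature suits factual support answers where consistency matters; higher values suit drafting tasks where varied output is useful.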

Implementing Custom Actions and Workflows

Extend agent capabilities by creating custom actions that integrate with external APIs, databases, and business systems. AgentCore Gateway can turn Lambda functions and REST APIs described by OpenAPI specifications into tools the agent can call, and AgentCore Identity manages the credentials those integrations require. You can build complex multi-step workflows with conditional logic, error handling, and rollback mechanisms. AgentCore Runtime supports long-running sessions for processes that take time to complete, and AgentCore Memory can retain state across multiple interaction sessions.
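Rollback in a multi-step workflow is often implemented with the saga pattern: each step has a compensating action, and when a step fails, the completed steps are undone in reverse order. This is a minimal in-process sketch; the step names and actions are hypothetical, and it assumes a failing step leaves no partial changes of its own.

```python
from typing import Callable, List, Tuple

# (step name, action, compensating action); each callable mutates shared state.
Step = Tuple[str, Callable[[dict], None], Callable[[dict], None]]


def run_workflow(steps: List[Step], state: dict) -> bool:
    """Runs steps in order; on failure, undoes completed steps in reverse."""
    log = state.setdefault("log", [])
    completed = []
    for name, action, compensate in steps:
        try:
            action(state)
        except Exception as exc:
            log.append(f"{name} failed: {exc}")
            for done_name, undo in reversed(completed):
                undo(state)
                log.append(f"rolled back {done_name}")
            return False
        completed.append((name, compensate))
        log.append(f"{name} ok")
    return True


# Hypothetical order workflow: reserve stock, then charge the card.
def reserve(s): s["reserved"] = True
def unreserve(s): s["reserved"] = False
def refund(s): s["charged"] = False


def charge(s):
    if s.get("card_declined"):
        raise RuntimeError("card declined")
    s["charged"] = True


ORDER_STEPS = [("reserve_stock", reserve, unreserve), ("charge_card", charge, refund)]
```

Keeping a log of each step and rollback gives the agent, and the humans reviewing it, a clear account of what happened when a workflow is aborted.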

Security and Compliance Settings

Configure comprehensive security controls including data encryption at rest and in transit, role-based access controls, and audit logging for all agent interactions. AgentCore supports VPC deployment options, private endpoint connections, and integration with AWS identity services. Compliance features include data residency controls, conversation logging with retention policies, and automated content filtering to prevent sensitive information leakage. Advanced monitoring capabilities track usage patterns and detect potential security anomalies.
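To illustrate the idea behind automated filtering of sensitive information, here is a deliberately simple regex-based redactor for email addresses and card-like digit runs. The patterns are illustrative assumptions that will miss some formats and may over-match others; a managed filter such as the sensitive information filters in Amazon Bedrock Guardrails is the more robust option.

```python
import re

# Simplified patterns for illustration only; not a complete PII detector.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replaces every match of each pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Redacting before text is logged or sent to a model keeps sensitive values out of conversation logs that may be retained under a retention policy.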

Best Practices for Production Deployment

Version Control and Release Management

Successful Amazon Bedrock AgentCore deployments require robust version control strategies that track agent configurations, prompt templates, and knowledge base updates. Implement semantic versioning for your AI agents, maintaining separate branches for development, staging, and production environments. Use infrastructure-as-code tools like AWS CloudFormation or Terraform to manage AgentCore resources consistently across environments. Create automated CI/CD pipelines that validate agent responses before deployment, ensuring quality control through comprehensive testing frameworks. Tag releases with detailed changelogs documenting model updates, configuration changes, and performance improvements to maintain deployment transparency.
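Semantic versioning only helps if everyone bumps versions the same way. This sketch parses `MAJOR.MINOR.PATCH` strings and bumps them by change type. The mapping of agent changes to bump levels in the docstring is a suggested convention, not a standard, and pre-release or build tags are not handled.

```python
import re
from typing import Tuple

SEMVER = re.compile(r"^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)$")


def parse(version: str) -> Tuple[int, int, int]:
    """Parses MAJOR.MINOR.PATCH into a tuple of ints (tuples compare correctly)."""
    match = SEMVER.match(version)
    if not match:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    return tuple(int(part) for part in match.groups())


def bump(version: str, change: str) -> str:
    """Returns the next version for a change type.

    Suggested convention: 'breaking' (e.g. a changed tool schema) -> major,
    'feature' (e.g. a new tool or knowledge base) -> minor,
    'fix' (e.g. prompt wording or a parameter tweak) -> patch.
    """
    major, minor, patch = parse(version)
    if change == "breaking":
        return f"{major + 1}.0.0"
    if change == "feature":
        return f"{major}.{minor + 1}.0"
    if change == "fix":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")
```

Comparing parsed tuples rather than raw strings avoids the classic mistake of ordering "10.0.1" before "9.9.9".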

Monitoring and Performance Optimization

Effective monitoring of Amazon Bedrock AgentCore requires observability across multiple dimensions. Set up CloudWatch dashboards to track key metrics such as response latency, token consumption, and error rates, plus custom metrics such as user satisfaction scores. Configure alarms that detect anomalies in conversation patterns and resource utilization. Implement distributed tracing to identify bottlenecks in multi-agent workflows and knowledge retrieval. Regular performance audits should analyze conversation logs to improve prompt engineering and refine retrieval-augmented generation strategies. Use AWS X-Ray for deeper performance insight, and establish baseline metrics so you can measure improvement over time.
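One low-friction way to publish custom metrics is CloudWatch Embedded Metric Format (EMF): structured JSON log lines that CloudWatch extracts into metrics when they land in a CloudWatch Logs log group, for example when printed from a Lambda function. The sketch formats one agent invocation as an EMF record and compares current p95 latency with a baseline. The namespace, dimension, and metric names are hypothetical.

```python
import json
import math
import time
from typing import List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def emf_record(agent_name: str, latency_ms: float, input_tokens: int, output_tokens: int) -> str:
    """Formats one invocation as an Embedded Metric Format log line."""
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [{
                "Namespace": "ExampleAgents",
                "Dimensions": [["AgentName"]],
                "Metrics": [
                    {"Name": "LatencyMs", "Unit": "Milliseconds"},
                    {"Name": "InputTokens", "Unit": "Count"},
                    {"Name": "OutputTokens", "Unit": "Count"},
                ],
            }],
        },
        "AgentName": agent_name,
        "LatencyMs": latency_ms,
        "InputTokens": input_tokens,
        "OutputTokens": output_tokens,
    }
    return json.dumps(record)


def breaches_baseline(latencies_ms: List[float], baseline_p95_ms: float, tolerance: float = 1.2) -> bool:
    """True when current p95 latency exceeds the baseline by more than `tolerance` times."""
    return percentile(latencies_ms, 95) > baseline_p95_ms * tolerance
```

Alarming on p95 rather than the mean catches slow tail responses that averages hide.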

Error Handling and Troubleshooting Strategies

Robust error handling in AgentCore deployments requires multi-layered strategies addressing both technical failures and conversational breakdowns. Implement graceful fallback mechanisms that redirect users to human agents when confidence scores drop below predefined thresholds. Create comprehensive error logging systems that capture context around failures, including user inputs, agent states, and external service responses. Build retry logic with exponential backoff for transient AWS service issues while maintaining conversation continuity. Establish clear escalation paths for different error types and maintain updated troubleshooting runbooks. Regular analysis of error patterns helps identify systemic issues and opportunities for improving overall system reliability and user experience.
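The retry and fallback ideas above combine naturally. This sketch retries a call on a hypothetical `TransientError` using exponential backoff with full jitter, then hands off to a human if retries are exhausted or the reply's confidence score is below a threshold. It assumes the agent call returns a dict with `text` and a hypothetical `confidence` field. AWS SDKs already retry individual API calls (boto3 offers standard and adaptive retry modes), so this layer is for application-level retries around a whole agent turn.

```python
import random
import time
from typing import Callable


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as throttling or timeouts."""


def call_with_backoff(fn: Callable[[], dict], max_attempts: int = 4, base_delay: float = 0.5,
                      max_delay: float = 8.0, sleep: Callable[[float], None] = time.sleep) -> dict:
    """Retries `fn` on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            # Full jitter: wait a random time up to the capped exponential delay.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, delay))
    raise RuntimeError("not reached when max_attempts >= 1")


def answer_or_escalate(fn: Callable[[], dict], min_confidence: float = 0.6,
                       sleep: Callable[[float], None] = time.sleep) -> dict:
    """Returns the agent's answer, or a human-handoff response on failure or low confidence."""
    try:
        result = call_with_backoff(fn, sleep=sleep)
    except TransientError:
        return {"handoff": True, "reason": "service unavailable"}
    if result.get("confidence", 0.0) < min_confidence:
        return {"handoff": True, "reason": "low confidence"}
    return {"handoff": False, "text": result["text"]}
```

Jitter spreads retries from many concurrent sessions over time, so a brief throttling event does not turn into synchronized waves of repeated requests.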

Amazon Bedrock AgentCore transforms how teams build and deploy AI agents by providing a unified architecture that eliminates the guesswork from development. The standardized approach means your team can focus on creating smart, responsive agents instead of wrestling with complex infrastructure setup. From the initial configuration to advanced customization options, AgentCore streamlines every step while maintaining the flexibility needed for unique business requirements.

The real power comes from following production-ready best practices right from the start. When you standardize your AI agent development process, you’re not just building one agent – you’re creating a foundation for scalable, reliable AI solutions across your organization. Start with your first agent today and experience how AgentCore can accelerate your AI initiatives while reducing development complexity and costs.