Building AI systems doesn’t have to be complicated. Minimal AI pipeline design focuses on creating streamlined, efficient machine learning workflows that get your models from development to production fast.

This guide is for software developers, ML engineers, and tech leads who want to cut through the complexity and build AI pipelines that actually work in the real world. You don’t need massive infrastructure or years of ML experience to create powerful AI applications.

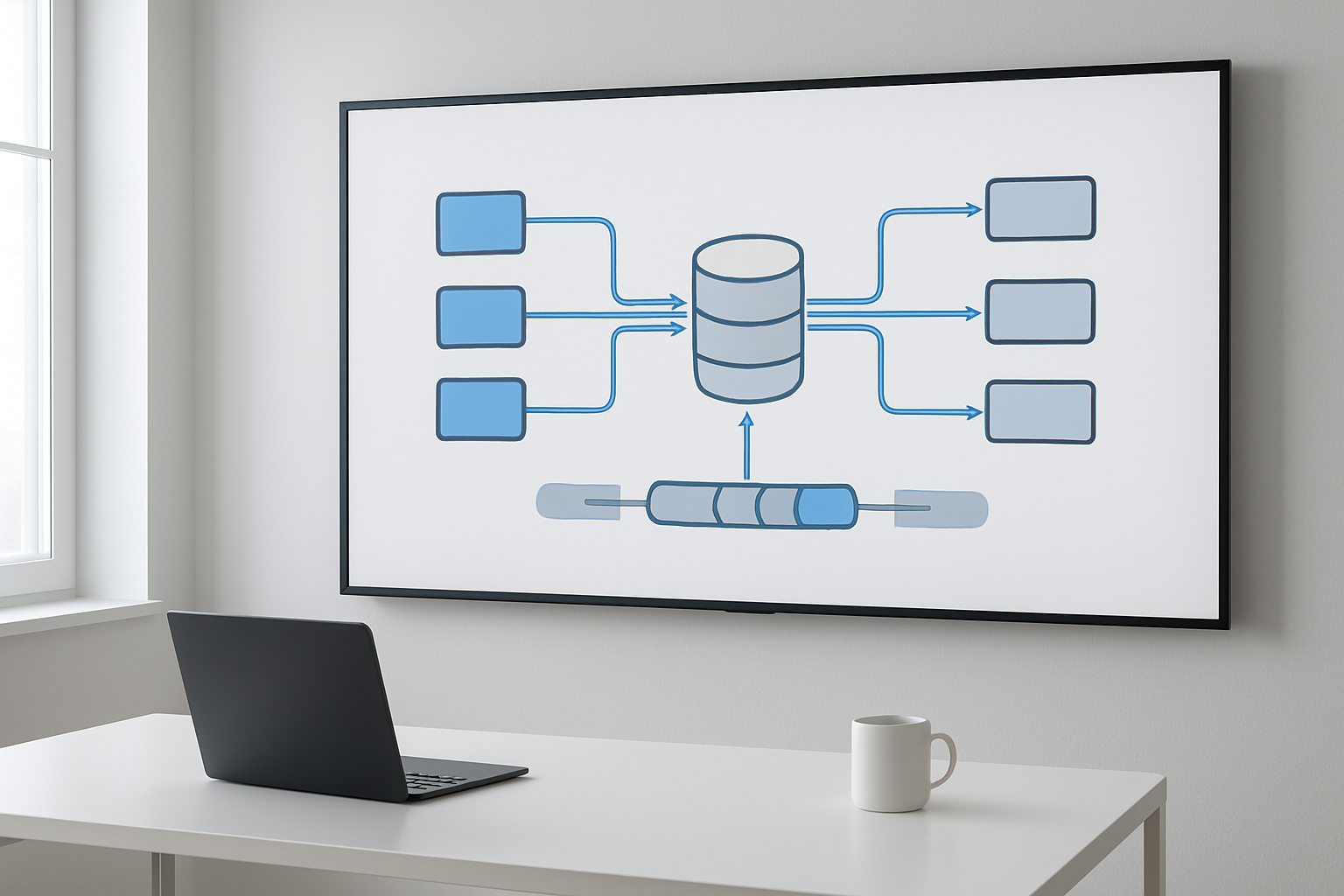

We’ll walk through the essential components every minimal pipeline needs, including the core data processing steps and model serving architecture that form your foundation. You’ll discover streamlined development tools and frameworks that eliminate bloat while maintaining flexibility – think lightweight alternatives to heavy enterprise solutions. Finally, we’ll cover production deployment best practices that keep your AI systems running smoothly without breaking your budget or your sleep schedule.

Ready to build AI pipelines that are simple, fast, and reliable? Let’s dive in.

Essential Components of Minimal AI Pipelines

Data ingestion and preprocessing fundamentals

Building an effective minimal AI pipeline starts with smart data handling. Your data ingestion layer should focus on three core capabilities: collection, validation, and transformation. Start with simple batch processing for most use cases – streaming can wait until you actually need real-time capabilities.

Choose formats that balance simplicity with efficiency. JSON works great for small datasets and prototyping, while Parquet excels when dealing with larger volumes. Avoid complex ETL frameworks initially; Python’s pandas or simple scripts often handle the job perfectly.

Data validation prevents downstream headaches. Implement basic checks for:

- Required fields and data types

- Value ranges and format consistency

- Missing or corrupted entries

- Schema compliance
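The checks above can be sketched with nothing but the standard library. The schema, field names, and ranges below are hypothetical, chosen only for illustration; a real pipeline would derive them from its own data contract.

```python
# Hypothetical schema for illustration: field name -> (expected type, required flag).
SCHEMA = {
    "user_id": (str, True),
    "age": (int, True),
    "email": (str, False),
}

# Hypothetical allowed ranges for numeric fields.
RANGES = {"age": (0, 120)}


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    for field, (expected_type, required) in SCHEMA.items():
        value = record.get(field)
        if value is None:
            # Missing entry: only an error when the field is required.
            if required:
                errors.append(f"missing required field: {field}")
            continue
        # bool is a subclass of int; this sketch has no boolean fields,
        # so booleans are rejected outright.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            errors.append(f"wrong type for {field}: expected {expected_type.__name__}")
            continue
        if field in RANGES:
            low, high = RANGES[field]
            if not low <= value <= high:
                errors.append(f"{field} out of range [{low}, {high}]")
    # Schema compliance: flag fields the schema does not know about.
    for field in sorted(record.keys() - SCHEMA.keys()):
        errors.append(f"unexpected field: {field}")
    return errors
```

Returning a list of problems rather than raising on the first one makes it easier to log everything wrong with a record in a single pass.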

For preprocessing, focus on the essentials your model actually needs. Common transformations include normalization, encoding categorical variables, and handling missing values. Keep preprocessing steps lightweight and reversible when possible.
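As a rough sketch of those three transformations, the helpers below use plain Python lists. The function names are illustrative, not from any library. The normalizer returns the bounds it used so the step can be reversed later.

```python
from statistics import mean


def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def min_max_normalize(values):
    """Scale values to [0, 1]; also return the bounds needed to undo the scaling."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values], (lo, hi)
    return [(v - lo) / (hi - lo) for v in values], (lo, hi)


def min_max_denormalize(scaled, bounds):
    """Invert min_max_normalize using the stored bounds."""
    lo, hi = bounds
    return [s * (hi - lo) + lo for s in scaled]


def one_hot(values):
    """Encode categories as 0/1 vectors; columns follow sorted category order."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]
```

In practice pandas or scikit-learn provide equivalents; the point here is only that each step is small and that keeping the fitted parameters makes it reversible.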

Model selection for lightweight deployments

Selecting the right model for your minimal AI pipeline means balancing accuracy with resource constraints. Start with proven, lightweight architectures before exploring complex alternatives.

Consider these model categories based on your use case:

- Linear models for straightforward prediction tasks

- Decision trees for interpretable classification

- Small neural networks for pattern recognition

- Pre-trained models fine-tuned for specific domains

Model size matters in production environments. For many tasks a compact model comes close to the accuracy of a far larger one while loading faster and consuming less memory, so measure the gap on your own data before assuming you need the bigger model. Tools like ONNX give you a portable model format that deployment runtimes can optimize.

Quantizing 32-bit float weights to 8-bit integers cuts weight storage by roughly 75%, usually with only a small accuracy loss that you should verify on a held-out set. Most frameworks now support 8-bit quantization out of the box. Start with post-training quantization – it requires no retraining and works with existing models.

Output formatting and delivery mechanisms

Your pipeline’s output layer determines how users interact with your AI system. Design this component with both current needs and future scalability in mind.

REST APIs provide the most flexible delivery mechanism for most applications. FastAPI and Flask offer lightweight solutions that handle JSON serialization automatically. Structure your responses consistently:

    {
      "prediction": "result_value",
      "confidence": 0.95,
      "metadata": {
        "model_version": "1.2",
        "processing_time": "120ms"
      }
    }
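One way to keep that structure consistent is to build every response through a single helper. This is a minimal sketch; `MODEL_VERSION` and `build_response` are hypothetical names, and the `now` parameter exists only to make the function easy to test.

```python
import json
import time

MODEL_VERSION = "1.2"  # hypothetical; would normally come from configuration


def build_response(prediction, confidence, started_at, now=None):
    """Assemble the response shape shown above from a prediction and a start time."""
    now = time.perf_counter() if now is None else now
    elapsed_ms = round((now - started_at) * 1000)
    return {
        "prediction": prediction,
        "confidence": round(float(confidence), 4),
        "metadata": {
            "model_version": MODEL_VERSION,
            "processing_time": f"{elapsed_ms}ms",
        },
    }


# Typical use: record the start time, run inference, then serialize the result.
start = time.perf_counter()
body = json.dumps(build_response("result_value", 0.95, start))
```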

For batch processing scenarios, consider file-based outputs. CSV works well for tabular results, while JSON handles complex nested structures. Include timestamps and processing metadata to help with debugging and monitoring.

Asynchronous and high-volume applications might benefit from a message broker like RabbitMQ, or Redis used as a lightweight queue. These systems decouple your AI processing from client applications, improving reliability and allowing for better load management.

Response caching dramatically improves user experience for repeated queries. Implement simple in-memory caching for frequently requested predictions, especially when your model processes the same inputs regularly.

Streamlined Development Tools and Frameworks

Python libraries for rapid prototyping

Building minimal AI pipelines starts with choosing the right Python libraries that accelerate development without adding unnecessary complexity. Scikit-learn remains the go-to choice for traditional machine learning tasks, offering consistent APIs and excellent documentation. For deep learning projects, PyTorch provides intuitive model building with dynamic computation graphs that make debugging straightforward.

Pandas and NumPy handle data manipulation efficiently, while Streamlit creates quick web interfaces for pipeline testing. These lightweight AI frameworks integrate seamlessly, allowing developers to prototype ideas rapidly without wrestling with heavy enterprise solutions.

Consider using Kedro for structured pipeline development – it organizes code, data, and configurations in a maintainable way while keeping overhead minimal. FastAPI serves as an excellent choice for creating REST endpoints around your AI models, offering automatic documentation and validation.

Container-based deployment solutions

Docker containers solve the “works on my machine” problem that plagues AI pipeline deployment. Creating lightweight containers for your minimal AI pipeline ensures consistent behavior across development, staging, and production environments.

Start with slim base images like python:3.9-slim, or the slim tag of whichever supported Python version you target, to reduce container size and the attack surface. Multi-stage builds optimize container layers – use one stage for installing dependencies and another for the runtime environment.

Docker Compose orchestrates multi-container setups during development, while Kubernetes handles production scaling. For simpler deployments, consider using cloud-native container services like AWS Fargate or Google Cloud Run, which eliminate infrastructure management overhead.

Key container strategies include:

- Separate containers for model training and inference

- Volume mounts for persistent model storage

- Health check endpoints for monitoring

- Resource limits to contain the impact of memory leaks and runaway processes

Version control strategies for AI models

Traditional Git workflows fall short when managing AI models due to large file sizes and binary formats. Git LFS (Large File Storage) handles model artifacts up to reasonable sizes, but dedicated tools like DVC (Data Version Control) provide better solutions for AI development.

DVC tracks datasets, models, and experiment metrics while integrating with existing Git repositories. It stores large files in cloud storage while maintaining lightweight references in your codebase. This approach enables reproducible experiments and easy model rollbacks.

MLflow offers comprehensive experiment tracking with model versioning capabilities. It logs parameters, metrics, and artifacts automatically, making it easy to compare different pipeline configurations and deploy specific model versions.

Model versioning best practices include:

- Semantic versioning for model releases (1.0.0, 1.1.0, 2.0.0)

- Automated tagging based on performance metrics

- Clear documentation of model changes and improvements

- Separate branches for experimental features

Automated testing frameworks for pipeline validation

Robust testing prevents production failures and ensures pipeline reliability. Start with unit tests for individual pipeline components using pytest, focusing on data transformation functions and model prediction methods.

Integration tests validate end-to-end pipeline behavior. Create test datasets that cover edge cases and expected input variations. Mock external dependencies like databases or APIs to ensure tests run consistently.

Data validation forms a critical testing layer. Great Expectations provides declarative data testing – define expectations about data quality, schema, and distributions, then automatically validate incoming data against these rules.

Pipeline monitoring tests check for data drift and model performance degradation. Implement automated alerts when accuracy drops below thresholds or when input data distributions shift significantly from training data.
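A very simple drift check, sketched below, compares the mean of a live feature against its training mean, measured in training standard deviations. The function names and the 0.5 threshold are assumptions for illustration. Production systems often use richer statistics, but the alerting shape is the same.

```python
from statistics import mean, stdev


def mean_shift(train_values, live_values):
    """Distance between live and training means, in units of training std dev."""
    sigma = stdev(train_values)
    if sigma == 0:
        raise ValueError("training values have zero variance")
    return abs(mean(live_values) - mean(train_values)) / sigma


def drift_alert(train_values, live_values, threshold=0.5):
    """True when the live mean has moved more than `threshold` std devs."""
    return mean_shift(train_values, live_values) > threshold
```

Running a check like this per feature on a schedule gives an early signal before accuracy metrics, which need labels, become available.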

Essential testing components include:

- Property-based tests for data transformation functions

- Smoke tests for model loading and prediction

- Performance benchmarks to catch regression

- A/B testing frameworks for model comparison

Efficient Data Flow Architecture

Input Data Validation and Sanitization

Building a robust minimal AI pipeline starts with bulletproof input handling. Your pipeline needs to catch bad data before it wreaks havoc downstream. Create validation schemas that check data types, ranges, and formats at the entry point. Think of this as your bouncer – nothing gets through without proper credentials.

Implement multi-layered sanitization that strips harmful characters, normalizes formats, and handles encoding issues. For text inputs, remove or escape special characters that could break your models. For numerical data, check for NaN values, outliers, and impossible ranges. Always validate against expected schemas and reject malformed requests immediately.
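The sanitization steps above might look like the following sketch. The helper names and the default length limit are hypothetical. The text helper normalizes Unicode and drops control characters; the numeric helper rejects NaN, infinities, and out-of-range values.

```python
import math
import unicodedata


def sanitize_text(text, max_length=1000):
    """Normalize encoding variants, drop control characters, trim, and cap length."""
    text = unicodedata.normalize("NFKC", text)
    # Unicode category "Cc" covers control characters; keep newline and tab.
    cleaned = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    return cleaned.strip()[:max_length]


def check_number(value, low, high):
    """Return value unchanged if it is a finite number within [low, high]."""
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise TypeError(f"expected a number, got {type(value).__name__}")
    if math.isnan(value) or math.isinf(value):
        raise ValueError("value must be finite")
    if not low <= value <= high:
        raise ValueError(f"value {value} outside [{low}, {high}]")
    return value
```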

Consider using lightweight validation libraries like Pydantic for Python or Joi for Node.js. These tools let you define validation rules once and apply them consistently across your AI pipeline architecture. Cache validation results when possible to avoid repeated processing of identical inputs.

Real-time vs Batch Processing Decisions

The choice between real-time and batch processing shapes your entire pipeline design. Real-time processing works best for applications requiring immediate responses – chatbots, fraud detection, or recommendation engines. Batch processing shines when you can group requests and optimize for throughput over latency.

Real-time systems demand lower memory footprints and faster inference times. Use streaming frameworks like Apache Kafka or Redis Streams to handle continuous data flow. Implement connection pooling and keep models loaded in memory to minimize startup overhead.

Batch processing allows for more sophisticated optimization techniques. Group similar requests together, use larger batch sizes for better GPU utilization, and implement smart scheduling based on resource availability. Consider hybrid approaches where you batch requests over small time windows (micro-batching) to balance latency and efficiency.
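To make the micro-batching idea concrete, here is a small offline sketch that groups timestamped requests into batches. A batch closes when its time window has elapsed or it is full. The function name and default values are assumptions; a live server would drive the same logic with a timer rather than a pre-sorted list.

```python
def micro_batches(requests, window_seconds=0.05, max_batch_size=32):
    """Group (arrival_time, payload) pairs, sorted by arrival time, into batches.

    A batch is emitted when the window since its first request has elapsed
    or when it already holds max_batch_size payloads.
    """
    batch, batch_start = [], None
    for arrival, payload in requests:
        window_over = batch and arrival - batch_start >= window_seconds
        batch_full = len(batch) >= max_batch_size
        if batch and (window_over or batch_full):
            yield batch
            batch = []
        if not batch:
            # This request opens a new batch and starts its window.
            batch_start = arrival
        batch.append(payload)
    if batch:
        yield batch
```

A smaller window lowers worst-case latency; a larger one yields bigger batches and better hardware utilization.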

Monitor your usage patterns to make informed decisions. If 80% of your requests can wait 30 seconds, batch processing might deliver better resource utilization and lower costs.

Memory Optimization Techniques

Memory management can make or break your minimal AI pipeline performance. Start by profiling your memory usage patterns and identifying bottlenecks. Use tools like memory_profiler for Python or built-in Node.js profilers to track allocation patterns.

Implement model quantization to reduce memory footprint without significant accuracy loss. Convert 32-bit floats to 16-bit or even 8-bit representations where appropriate. Runtimes like ONNX Runtime and, on NVIDIA GPUs, TensorRT offer built-in quantization tooling.

Use memory pooling for frequent allocations and deallocations. Pre-allocate buffers for common data sizes and reuse them across requests. This prevents memory fragmentation and reduces garbage collection overhead.

Consider model pruning techniques to remove unnecessary weights and connections. Tools like PyTorch’s pruning utilities can help you identify and remove redundant parameters while maintaining model performance.

Implement lazy loading for large models or datasets. Load components only when needed and unload them when idle. Use memory mapping for large files to let the operating system handle caching efficiently.
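Lazy loading can be as small as a wrapper that defers the expensive load until first use. In this sketch, `LazyModel` and its `loader` argument are illustrative names; the loader stands in for whatever function reads your model from disk.

```python
import threading


class LazyModel:
    """Load a model on first access and allow it to be dropped when idle."""

    def __init__(self, loader):
        self._loader = loader  # callable that returns the loaded model
        self._model = None
        self._lock = threading.Lock()

    def get(self):
        # Double-checked locking: the common path avoids taking the lock.
        if self._model is None:
            with self._lock:
                if self._model is None:
                    self._model = self._loader()
        return self._model

    def unload(self):
        """Release the reference so the memory can be reclaimed."""
        with self._lock:
            self._model = None
```

The lock matters when several request threads hit a cold model at once; without it each thread could trigger its own load.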

Error Handling and Fallback Mechanisms

Robust error handling separates production-ready pipelines from experimental code. Design your error handling with the assumption that everything will eventually fail. Network connections drop, models crash, and hardware fails – your pipeline should gracefully handle these scenarios.

Implement circuit breakers that stop making requests to failing services after a threshold of failures. This prevents cascade failures and gives struggling components time to recover. Libraries like Resilience4j for Java (Netflix’s Hystrix is now in maintenance mode) or pybreaker for Python provide proven circuit breaker implementations.
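To show the mechanics, here is a minimal hand-rolled circuit breaker. It is a teaching sketch, not a substitute for a maintained library. The class names, default thresholds, and injectable `clock` are assumptions made for illustration and testability.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```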

Create fallback mechanisms that provide degraded but functional service when primary systems fail. This might mean using a simpler model, returning cached results, or providing default responses. Always inform users when you’re operating in fallback mode.

Use proper logging and monitoring to track errors and performance metrics. Structure your logs with consistent formats that make debugging easier. Include correlation IDs to trace requests through your entire pipeline.

Design retry mechanisms with exponential backoff and jitter to avoid overwhelming recovering services. Not every error deserves a retry – distinguish between transient failures (network timeouts) and permanent failures (invalid input format).
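A sketch of that retry policy follows. `TransientError` is a hypothetical marker exception; in real code you would map timeouts and similar failures onto it. The `sleep` and `rng` parameters are injectable so the behavior can be tested without waiting.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def retry(func, max_attempts=4, base_delay=0.1, max_delay=5.0,
          sleep=time.sleep, rng=random.random):
    """Call func, retrying only TransientError with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise
            # Backoff doubles each attempt, capped at max_delay. "Full jitter":
            # sleep a random fraction of the backoff so clients do not retry in sync.
            backoff = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng() * backoff)
```

Any other exception type, such as a validation error, propagates immediately because it is never caught here.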

Set up health checks that monitor critical components and provide early warning of potential issues. These checks should be lightweight but comprehensive enough to catch real problems before they affect users.

Performance Optimization Strategies

Model Compression and Quantization Methods

Keeping your minimal AI pipeline lightweight starts with smart model compression techniques. Pruning removes unnecessary neural network weights while maintaining accuracy – think of it as decluttering your model’s brain. Start with magnitude-based pruning, removing weights with the smallest absolute values. Most frameworks like PyTorch and TensorFlow make this straightforward with built-in pruning utilities.

Quantization converts your model’s weights from 32-bit floating point to 8-bit integers, cutting weight storage by about 75% and often speeding up inference on hardware with fast integer arithmetic. Post-training quantization works well for most use cases and requires minimal code changes. Dynamic quantization, a variant that quantizes weights ahead of time and activations on the fly during inference, needs no calibration data and is a common starting point for CPU-served models.
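The arithmetic behind 8-bit quantization is simple enough to sketch by hand. The example below uses symmetric per-tensor quantization, one of several schemes; framework implementations add per-channel scales, calibration, and activation handling. The function names are illustrative.

```python
from array import array


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.31, 0.0, 0.42, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Storage per weight: 4 bytes as float32 versus 1 byte as int8.
float_bytes = array("f", weights).itemsize * len(weights)
int8_bytes = array("b", quantized).itemsize * len(quantized)
saving = 1 - int8_bytes / float_bytes  # fraction of weight storage saved
```

The rounding error per weight is at most half the scale, which is why accuracy usually degrades only slightly when weights are well distributed.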

Knowledge distillation creates smaller “student” models that learn from larger “teacher” models. This approach works particularly well for AI pipeline optimization where you need to balance performance with resource constraints. The student model captures the teacher’s decision-making patterns in a more compact form.

Caching Mechanisms for Repeated Operations

Smart caching transforms your pipeline’s performance by avoiding redundant computations. Implement memory-based caching for frequently accessed model predictions using Redis or simple in-memory dictionaries. This approach shines when processing similar inputs or batch operations with overlapping data.

Feature caching prevents recalculating expensive preprocessing steps. Store intermediate results from data transformations, embeddings, or feature extractions. The payoff is largest when the same raw data gets processed multiple times.

Model output caching works wonders for deterministic models. Hash input parameters and cache corresponding outputs. This strategy proves especially valuable for inference APIs serving repeated requests.
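Here is one way the hash-and-cache approach could look, assuming inputs are JSON-serializable dictionaries. `PredictionCache` is a hypothetical wrapper, with a small least-recently-used eviction policy so the cache cannot grow without bound.

```python
import hashlib
import json
from collections import OrderedDict


class PredictionCache:
    """Cache outputs of a deterministic predict function, keyed by input hash."""

    def __init__(self, predict_fn, max_entries=1024):
        self._predict_fn = predict_fn
        self._max_entries = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(features):
        # sort_keys makes the hash independent of dictionary key order.
        payload = json.dumps(features, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def predict(self, features):
        key = self._key(features)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = self._predict_fn(features)
        self._store[key] = result
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result
```

Remember to clear or version the cache when a new model is deployed, since cached outputs belong to the model that produced them.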

Database query caching reduces data retrieval overhead. Use query result caching for static or slowly-changing datasets. Combine this with connection pooling to minimize database connection overhead in your machine learning pipeline.

Resource Monitoring and Scaling Approaches

Real-time monitoring keeps your minimal AI pipeline running smoothly. Track GPU utilization, memory consumption, and inference latency using tools like Prometheus or built-in cloud monitoring services. Set up alerts for resource spikes or performance degradation.

Horizontal scaling distributes workload across multiple instances. Container orchestration platforms like Kubernetes can spin up new instances based on demand once an autoscaler, such as the Horizontal Pod Autoscaler, is configured. This approach works particularly well for lightweight services that start quickly.

Vertical scaling adjusts resources for individual instances. Auto-scaling based on CPU or memory thresholds keeps costs down during low-traffic periods. Cloud providers offer managed scaling solutions that integrate seamlessly with production AI deployment workflows.

Load balancing ensures even distribution across your scaled instances. Use health checks to route traffic away from struggling nodes. This maintains consistent response times even as your pipeline scales up or down.

Production Deployment Best Practices

Environment Configuration Management

Successful production AI deployment starts with solid environment configuration practices. Container-based deployments using Docker provide consistent environments across development, staging, and production systems. Define your minimal AI pipeline dependencies in a requirements file and version-lock everything to prevent surprises during deployment.

Environment variables keep configuration such as API keys, database connections, and model paths out of your source code. Never hardcode these values. Use Kubernetes ConfigMaps for non-sensitive settings, and Kubernetes Secrets or a cloud-native secret management service for credentials, to maintain clean separation between code and configuration.
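Reading configuration from the environment can be centralized in one small function that fails fast when something is missing. The variable names `MODEL_PATH`, `API_KEY`, and `MAX_BATCH_SIZE` below are hypothetical examples, not a standard.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    model_path: str
    api_key: str
    max_batch_size: int = 32


def load_settings(env=None):
    """Build Settings from environment variables, failing fast on missing ones."""
    env = os.environ if env is None else env
    missing = [name for name in ("MODEL_PATH", "API_KEY") if not env.get(name)]
    if missing:
        raise RuntimeError(
            f"missing required environment variables: {', '.join(missing)}"
        )
    return Settings(
        model_path=env["MODEL_PATH"],
        api_key=env["API_KEY"],
        max_batch_size=int(env.get("MAX_BATCH_SIZE", "32")),
    )
```

Calling `load_settings()` once at startup surfaces misconfiguration immediately instead of on the first request.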

Create separate configuration files for each environment stage. Your development setup might use lighter model variants for faster iteration, while production configurations specify optimized model versions and resource allocations. This approach keeps your streamlined ML architecture flexible across different deployment scenarios.

API Endpoint Design for Model Serving

Design your AI pipeline endpoints with simplicity and performance in mind. RESTful APIs work well for most ML serving scenarios, offering familiar patterns that frontend and backend developers understand quickly. Keep endpoint structures clean with predictable paths like /predict for inference and /health for system monitoring.

Input validation protects your models from malformed requests. Implement schema validation using tools like Pydantic for Python-based systems. This catches data type mismatches and missing fields before they reach your model, preventing crashes and improving error messages.

Response formatting should include prediction results alongside confidence scores and processing metadata. Structure your JSON responses consistently:

- prediction: The model’s output

- confidence: Certainty score when available

- processing_time: Request duration for performance tracking

- model_version: Currently serving model identifier

Batch processing endpoints handle multiple predictions efficiently when clients need to process several inputs at once. Sending one request with many inputs reduces network overhead and improves throughput.

Monitoring and Logging Implementation

Comprehensive monitoring catches issues before they impact users. Track key metrics including request latency, error rates, and prediction accuracy drift. Set up alerts for unusual patterns like sudden increases in prediction confidence or response times.

Application logs capture request details, model predictions, and error traces. Structure logs as JSON for easier parsing and analysis. Include correlation IDs to trace individual requests through your entire pipeline. This proves invaluable when debugging complex issues across distributed systems.
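With the standard `logging` module, JSON-structured logs with correlation IDs need only a custom formatter. This is a minimal sketch; the field names and the `new_correlation_id` helper are assumptions, and the ID is passed through the `extra` argument that `logging` already supports.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            # Set via logger.info(..., extra={"correlation_id": ...}).
            "correlation_id": getattr(record, "correlation_id", None),
        }
        return json.dumps(entry)


def new_correlation_id():
    """Generate an ID to attach to every log line for one request."""
    return uuid.uuid4().hex


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("pipeline")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
```

A request handler would create one ID on entry and log with `logger.info("prediction served", extra={"correlation_id": cid})`, passing the same ID to any downstream service it calls.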

Model performance monitoring goes beyond technical metrics. Track business-relevant indicators like conversion rates or user engagement when your AI drives product features. Sudden drops in these metrics might indicate model drift or data quality issues that technical monitoring alone won’t catch.

Use centralized logging solutions like ELK stack or cloud-native options to aggregate logs from multiple service instances. This gives you complete visibility into your production AI deployment across all running containers or serverless functions.

Security Considerations for AI Endpoints

API authentication prevents unauthorized access to your ML models. Implement API key validation or OAuth tokens depending on your use case. Rate limiting protects against abuse and ensures fair resource usage across clients.
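Rate limiting is commonly implemented with a token bucket, sketched below. The class name and parameters are illustrative; a real service would typically keep one bucket per API key, often in a shared store rather than process memory.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)
        self._updated = clock()

    def allow(self, cost=1.0):
        """Return True and consume tokens if the request fits the budget."""
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = now - self._updated
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._updated = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

When `allow()` returns False, an HTTP API would usually respond with status 429.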

Input sanitization becomes critical when your models process user-generated content. Text inputs might contain injection attempts or adversarial examples designed to manipulate model outputs. Validate and clean all incoming data before processing.

Network security includes HTTPS encryption for all API communications and proper firewall configurations. Consider implementing IP whitelisting for internal services or high-security deployments.

Model protection involves preventing unauthorized model extraction or reverse engineering. Avoid exposing detailed model architecture information through API responses. Monitor for suspicious query patterns that might indicate someone trying to reconstruct your model through systematic probing.

Rollback and Update Procedures

Blue-green deployment strategies minimize downtime during model updates. Maintain two identical production environments and switch traffic between them during releases. This allows instant rollbacks if new model versions show problems.

Canary releases gradually expose new models to production traffic. Start with a small percentage of requests going to the updated model while monitoring performance metrics. Increase traffic gradually as confidence builds, or roll back immediately if issues arise.
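One simple way to split traffic for a canary is to hash a stable request or user identifier into a bucket. The sketch below assumes such an identifier exists; `route_to_canary` is a hypothetical helper. Hashing keeps routing deterministic, so the same caller consistently sees the same model version.

```python
import hashlib


def route_to_canary(request_id: str, canary_percent: float) -> bool:
    """Return True if this request should be served by the canary model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the hash onto 10,000 buckets so percentages resolve to 0.01%.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < canary_percent * 100
```

Raising `canary_percent` only adds callers to the canary group; those already routed there stay there, which keeps comparisons between versions stable.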

Version management keeps track of model artifacts, code changes, and configuration updates as atomic units. Use semantic versioning for model releases and maintain rollback packages containing everything needed to restore previous versions quickly.

Automated rollback triggers respond to predefined failure conditions without human intervention. Set thresholds for error rates, response times, or accuracy metrics that automatically revert to stable model versions when crossed. This prevents extended outages during off-hours or when teams aren’t actively monitoring deployments.
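The decision logic behind such a trigger can be a plain function over recent metrics. In this sketch the metric names and default thresholds are assumptions for illustration; the actual revert step depends on your deployment tooling and is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    max_error_rate: float = 0.05
    max_p95_latency_ms: float = 500.0
    min_accuracy: float = 0.90


def rollback_reasons(metrics, thresholds=Thresholds()):
    """Return the list of violated thresholds; empty means the release is healthy."""
    reasons = []
    if metrics["error_rate"] > thresholds.max_error_rate:
        reasons.append("error rate above threshold")
    if metrics["p95_latency_ms"] > thresholds.max_p95_latency_ms:
        reasons.append("p95 latency above threshold")
    if metrics["accuracy"] < thresholds.min_accuracy:
        reasons.append("accuracy below threshold")
    return reasons


def should_roll_back(metrics, thresholds=Thresholds()):
    """True when any threshold is violated."""
    return bool(rollback_reasons(metrics, thresholds))
```

Returning the reasons alongside the decision gives the on-call engineer an immediate explanation in the alert or deployment log.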

Health checks validate both technical functionality and model quality after deployments. Run prediction tests against known inputs to verify outputs match expectations before declaring deployments successful.

Building minimal AI pipelines doesn’t have to be overwhelming when you focus on the essentials. Start with the core components that actually matter – clean data ingestion, simple processing steps, and reliable model serving. Choose lightweight frameworks that get the job done without unnecessary complexity, and design your data flow to be as straightforward as possible. Remember that optimization comes after you have something working, not before.

The best AI pipeline is the one you can actually ship and maintain. Keep your architecture simple enough that your team can debug issues quickly and add features without breaking everything. Start small, test often, and scale only when you need to. Your users care about results, not how sophisticated your backend looks on paper.