Ever spent weeks building a data pipeline only to have compliance officers shut it down because they can’t verify where sensitive data is going? You’re not alone. At enterprise scale, 68% of data leaders report governance as their biggest blocker to innovation.

Unity Catalog in Databricks promises to solve this tension between innovation and control. But like any powerful tool, implementation matters more than features.

This guide unpacks battle-tested Unity Catalog best practices for organizing, securing, and governing your data without suffocating your analytics teams. We’ve compiled these recommendations from dozens of successful enterprise implementations.

The difference between a data governance program that empowers versus one that restricts often comes down to a few critical design decisions most teams overlook until it’s too late.

Understanding Unity Catalog Fundamentals

Key Components and Architecture Explained

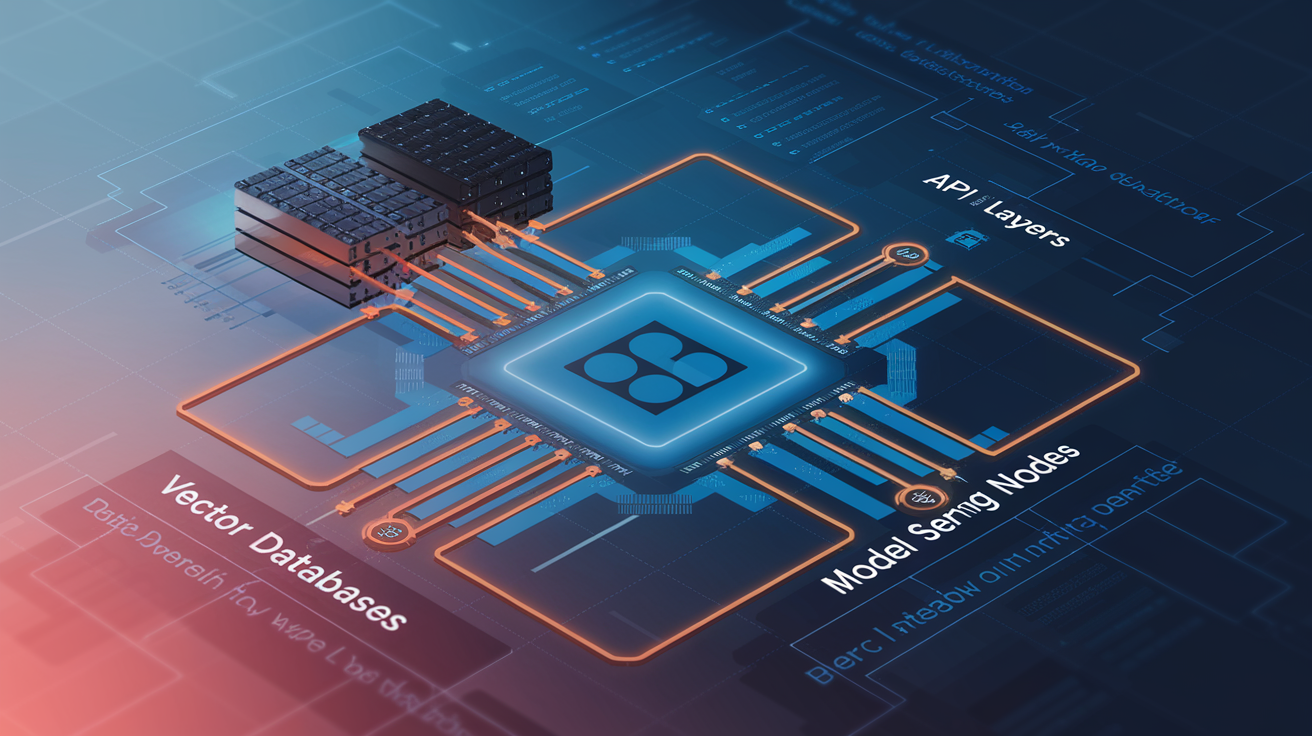

Unity Catalog transforms how you handle data in Databricks through a metastore and a three-level namespace: catalog, schema, and object (a table, view, volume, function, or model). Think of it as your data’s organizational system: the metastore sits at the top as the container for metadata and permissions, catalogs organize related data assets, and schemas group tables logically. Every object is addressed as catalog.schema.object, which makes finding and managing your data assets far simpler.
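To make the hierarchy concrete, here is a minimal sketch of how a table is addressed by catalog, schema, and object name. The helper `qualified_name` and the example names are hypothetical, and rejecting dots and backticks is a simplification chosen for this sketch.

```python
def qualified_name(catalog: str, schema: str, obj: str) -> str:
    """Build a backtick-quoted three-level name: catalog.schema.object."""
    parts = (catalog, schema, obj)
    for part in parts:
        # Keep the sketch simple: refuse empty names and characters that
        # would break the quoting or the dotted path.
        if not part or "`" in part or "." in part:
            raise ValueError(f"invalid identifier: {part!r}")
    return ".".join(f"`{p}`" for p in parts)


# Hypothetical names, for illustration only.
print(qualified_name("prod_finance", "billing", "invoices"))
```

The resulting string can be dropped into SQL wherever a table reference is expected.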

Setting Up Effective Data Organization Structures

A. Designing Your Catalog Hierarchy for Scalability

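Smart catalog design isn’t just about today’s needs. Think multi-tier approach: one metastore per region, catalogs for environments and business units (for example dev_finance and prod_finance), schemas for projects. A metastore per environment sounds tidy, but Databricks generally recommends a single metastore per region with environments separated at the catalog level. This pyramid structure prevents future headaches when your data ecosystem explodes. Keep related assets grouped but maintain clear boundaries between domains.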
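As a rough sketch of a scalable layout, the helper below generates `CREATE CATALOG` and `CREATE SCHEMA` statements from a small plan. It assumes one naming convention among many, with the environment encoded in the catalog name as `<env>_<unit>`. The function name and all example names are hypothetical.

```python
from itertools import product


def hierarchy_ddl(environments: list[str],
                  business_units: list[str],
                  schemas: dict[str, list[str]]) -> list[str]:
    """Return DDL for one catalog per (environment, business unit) pair."""
    statements = []
    for env, unit in product(environments, business_units):
        catalog = f"{env}_{unit}"  # assumed convention: <env>_<unit>
        statements.append(f"CREATE CATALOG IF NOT EXISTS `{catalog}`;")
        # Each business unit gets the same project schemas in every environment.
        for schema in schemas.get(unit, []):
            statements.append(
                f"CREATE SCHEMA IF NOT EXISTS `{catalog}`.`{schema}`;"
            )
    return statements


for stmt in hierarchy_ddl(["dev", "prod"], ["finance"], {"finance": ["billing"]}):
    print(stmt)
```

Generating the layout from a plan keeps dev and prod structurally identical, which makes promoting pipelines between them less error-prone.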

Implementing Robust Security Controls

A. Role-Based Access Control Best Practices

Security in Databricks isn’t something you bolt on later. Start with properly defined roles and map each one to a group. Grant privileges to groups rather than to individual users, and assign the minimum privileges needed, nothing more. Document your permission structure and review it quarterly. Don’t make everyone an admin just because it’s easier. That’s how data breaches happen.
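A least-privilege, read-only grant might look like the sketch below. It builds the three statements a group typically needs to query specific tables: `USE CATALOG`, `USE SCHEMA`, and `SELECT`. The helper name, the group name, and the table names are hypothetical.

```python
def read_only_grants(group: str, catalog: str, schema: str,
                     tables: list[str]) -> list[str]:
    """Return the minimal GRANT statements for read access to given tables."""
    principal = f"`{group}`"
    statements = [
        # Access to the containers is required before any table can be read.
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO {principal};",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO {principal};",
    ]
    # Grant SELECT table by table instead of on the whole schema.
    statements += [
        f"GRANT SELECT ON TABLE `{catalog}`.`{schema}`.`{t}` TO {principal};"
        for t in tables
    ]
    return statements


for stmt in read_only_grants("finance-analysts", "prod_finance", "billing", ["invoices"]):
    print(stmt)
```

Granting to a group rather than a user means onboarding and offboarding become group membership changes, not permission changes.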

B. Row and Column-Level Security Implementation

Row and column security lets you get surgical with permissions. Engineers see infrastructure data, finance sees cost data, and nobody sees everything. Unity Catalog privileges stop at the table level, so there is no per-column grant. Implement fine-grained access with row filters and column masks, both defined as SQL functions, or with dynamic views. Test thoroughly before production. The extra setup pays off when sensitive data stays exactly where it should.
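One way to implement this is with a row filter function and a column mask function attached to the table. The sketch below only assembles the SQL. The helper names, the table, the function names, and the groups are hypothetical, and plain string formatting is used on the assumption that all inputs are trusted.

```python
def row_filter_sql(table: str, filter_fn: str, column: str,
                   group: str, allowed_value: str) -> list[str]:
    """Members of `group` see every row; others see rows matching one value."""
    return [
        f"CREATE OR REPLACE FUNCTION {filter_fn}({column} STRING) "
        f"RETURN is_account_group_member('{group}') OR {column} = '{allowed_value}';",
        f"ALTER TABLE {table} SET ROW FILTER {filter_fn} ON ({column});",
    ]


def column_mask_sql(table: str, mask_fn: str, column: str, group: str) -> list[str]:
    """Members of `group` see the real value; everyone else sees '***'."""
    return [
        f"CREATE OR REPLACE FUNCTION {mask_fn}({column} STRING) "
        f"RETURN CASE WHEN is_account_group_member('{group}') "
        f"THEN {column} ELSE '***' END;",
        f"ALTER TABLE {table} ALTER COLUMN {column} SET MASK {mask_fn};",
    ]


table = "prod_finance.billing.invoices"  # hypothetical table
statements = (
    row_filter_sql(table, "prod_finance.billing.region_filter",
                   "region", "finance-admins", "US")
    + column_mask_sql(table, "prod_finance.billing.iban_mask",
                      "iban", "finance-admins")
)
for stmt in statements:
    print(stmt)
```

Running the same queries as a member and as a non-member of the group is a quick way to confirm the filter and mask behave as intended before production.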

C. Managing Service Principals and Account Access

Service principals are your automation workhorses—treat them seriously. Create dedicated principals for each workflow, never share credentials, rotate secrets regularly, and monitor usage patterns. Remove unused accounts immediately. One forgotten service account with broad permissions can undermine your entire security model.
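A periodic hygiene review can be sketched as below. It works on a hypothetical inventory of service principals that you would export yourself. The `ServicePrincipal` record, its fields, and the 90-day and 30-day thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ServicePrincipal:
    name: str
    secret_created: datetime
    last_used: Optional[datetime]  # None means no recorded usage


def review(principals: list[ServicePrincipal], now: datetime,
           max_secret_age: timedelta = timedelta(days=90),
           max_idle: timedelta = timedelta(days=30)) -> dict[str, list[str]]:
    """Map each principal that needs attention to a list of findings."""
    findings: dict[str, list[str]] = {}
    for sp in principals:
        issues = []
        if now - sp.secret_created > max_secret_age:
            issues.append("rotate secret")
        if sp.last_used is None or now - sp.last_used > max_idle:
            issues.append("unused: consider removing")
        if issues:
            findings[sp.name] = issues
    return findings


now = datetime(2024, 6, 1)
inventory = [
    ServicePrincipal("etl-nightly", datetime(2024, 1, 1), datetime(2024, 5, 30)),
    ServicePrincipal("legacy-export", datetime(2024, 5, 1), None),
    ServicePrincipal("ml-scoring", datetime(2024, 5, 1), datetime(2024, 5, 31)),
]
print(review(inventory, now))
```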

D. Audit Logging and Security Monitoring

No security system survives first contact with users. Set up comprehensive audit logging capturing who did what and when. Monitor for unusual access patterns, failed login attempts, and permission changes. Configure real-time alerts for suspicious activities. Without monitoring, you’re just hoping nobody’s misbehaving with your data.
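The monitoring idea can be sketched as a small scan over audit events. The flattened record shape (`user`, `action`, `status_code`), the action name in `PERMISSION_ACTIONS`, and the threshold are assumptions for this example. Real audit records carry more fields and would need mapping into this shape first.

```python
from collections import Counter

# Assumed action name that indicates a permission change.
PERMISSION_ACTIONS = {"updatePermissions"}


def scan_audit_events(events: list[dict], denied_threshold: int = 3) -> dict:
    """Flag users with repeated denied requests and collect permission changes."""
    denied = Counter(e["user"] for e in events if e["status_code"] == 403)
    repeated = sorted(u for u, n in denied.items() if n >= denied_threshold)
    changes = [e for e in events if e["action"] in PERMISSION_ACTIONS]
    return {"repeated_denials": repeated, "permission_changes": changes}


events = [
    {"user": "a@example.com", "action": "getTable", "status_code": 403},
    {"user": "a@example.com", "action": "getTable", "status_code": 403},
    {"user": "a@example.com", "action": "getTable", "status_code": 403},
    {"user": "b@example.com", "action": "getTable", "status_code": 403},
    {"user": "c@example.com", "action": "updatePermissions", "status_code": 200},
]
report = scan_audit_events(events)
print(report["repeated_denials"], len(report["permission_changes"]))
```

A scheduled job could feed a report like this into whatever alerting channel the team already uses.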

Data Governance Workflows and Processes

A. Establishing Clear Ownership and Stewardship

Data governance in Databricks isn’t just about tools—it’s about people. Who owns what data? Who’s responsible when things go wrong? Set up clear data owners for each catalog and schema. Then appoint stewards who handle day-to-day management. This clarity prevents the all-too-common “not my problem” syndrome.
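Ownership can be recorded in the catalog itself rather than in a spreadsheet. The sketch below turns a hypothetical ownership map into `ALTER ... OWNER TO` statements. The helper name and the group names are made up, and assigning ownership to groups instead of individuals is a design choice assumed here.

```python
def ownership_sql(owners: dict[str, str]) -> list[str]:
    """owners maps 'catalog' or 'catalog.schema' to the owning group."""
    statements = []
    for securable, group in sorted(owners.items()):
        parts = securable.split(".")
        if len(parts) == 1:
            kind = "CATALOG"
        elif len(parts) == 2:
            kind = "SCHEMA"
        else:
            raise ValueError(f"expected catalog or catalog.schema: {securable!r}")
        quoted = ".".join(f"`{p}`" for p in parts)
        statements.append(f"ALTER {kind} {quoted} OWNER TO `{group}`;")
    return statements


for stmt in ownership_sql({
    "prod_finance": "finance-data-owners",
    "prod_finance.billing": "billing-stewards",
}):
    print(stmt)
```

Owning through a group means a steward leaving the company does not orphan the schema.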

Integration with Enterprise Data Ecosystems

A. Connecting with External Data Sources

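Unity Catalog shines when connecting to your existing data sources. For cloud object storage such as AWS S3 or Azure Data Lake Storage, storage credentials and external locations bring the paths under the same permission model as your tables. For external databases such as SQL Server, Lakehouse Federation exposes them as foreign catalogs. Either way, access is governed in one place, which cuts down on custom connectors and authentication wrangling between systems.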
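For cloud object storage, registering a path usually comes down to a `CREATE EXTERNAL LOCATION` statement that ties a URL to a storage credential. The sketch below builds that statement. The helper name, the location name, the bucket, and the credential name are hypothetical, the credential is assumed to exist already, and the accepted URL schemes are a choice made for this example.

```python
ALLOWED_SCHEMES = ("s3://", "abfss://", "gs://")  # schemes accepted by this sketch


def external_location_sql(name: str, url: str, credential: str) -> str:
    """Return DDL registering a cloud storage path as an external location."""
    if not url.startswith(ALLOWED_SCHEMES):
        raise ValueError(f"unsupported storage URL: {url!r}")
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS `{name}` "
        f"URL '{url}' "
        f"WITH (STORAGE CREDENTIAL `{credential}`);"
    )


print(external_location_sql(
    "finance_landing", "s3://example-bucket/finance/landing", "finance_s3_cred"
))
```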

B. Integrating with Business Intelligence Tools

Your BI tools need reliable access to properly governed data. Unity Catalog works directly with Tableau, Power BI, and Looker through standard connectors. Analysts get self-service access while you maintain visibility into who’s using what data – keeping both teams happy.

C. Supporting MLOps and Data Science Workflows

Data scientists hate governance roadblocks. Unity Catalog gets this. It integrates directly with MLflow to track model lineage, features, and datasets without slowing down innovation. Models built on properly governed data mean fewer compliance headaches downstream when deploying to production.

D. Syncing with Enterprise Governance Frameworks

Unity Catalog doesn’t exist in isolation. It plays nicely with enterprise tools like Collibra, Alation, and Atlan. Synchronizing metadata with these tools keeps business glossaries, data dictionaries, and quality metrics consistent across your organization without manual updates. Check each vendor’s connector to confirm which direction the metadata flows.

E. Interoperability with Cloud Data Platforms

Cloud sprawl is real. Unity Catalog bridges your multi-cloud reality by offering the same governance model across AWS, Azure, and GCP deployments. Because a metastore is scoped to a region, policies are not shared automatically between them. Define policies once as code and apply them to every metastore, regardless of which cloud your data lives in.

Performance Optimization and Scaling

Managing Large-Scale Metadata

Ever tried organizing thousands of datasets? It’s a nightmare without the right approach. Unity Catalog handles this with dynamic metadata caching and parallel processing. Stop your metadata operations from crawling by implementing batch updates and prioritizing critical paths first.

Query Performance Best Practices

Monitoring and Maintenance

A. Building Effective Dashboards for Governance Metrics

Tracking Unity Catalog usage shouldn’t feel like searching for a needle in a haystack. Good dashboards show you exactly who’s accessing what, when permissions change, and where security gaps might exist. Build yours with key metrics that actually matter—like failed access attempts and privilege escalation patterns—not vanity stats that look pretty but tell you nothing.

B. Implementing Automated Compliance Checks

Waiting for audit day to discover compliance issues? Big mistake. Set up automated checks that run daily against your governance policies. These silent guardians catch drift before it becomes a problem—flagging orphaned accounts, excessive permissions, and unusual access patterns without you having to remember to look. Your future self will thank you when the auditors come knocking.
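A daily check of this kind can be sketched as a pure function over an exported list of grants. The record shape (`principal`, `privilege`, `securable`), the helper name, and the rule that only admin groups may hold `ALL PRIVILEGES` are assumptions for this example, not a built-in policy.

```python
def find_violations(grants: list[dict], active_principals: set[str],
                    admin_groups: set[str]) -> list[tuple[str, str, str]]:
    """Return (rule, principal, securable) for every grant that breaks a rule."""
    violations = []
    for g in grants:
        if g["principal"] not in active_principals:
            # The grant points at a user or group that no longer exists.
            violations.append(("orphaned_principal", g["principal"], g["securable"]))
        elif g["privilege"] == "ALL PRIVILEGES" and g["principal"] not in admin_groups:
            violations.append(("excessive_privilege", g["principal"], g["securable"]))
    return violations


grants = [
    {"principal": "finance-analysts", "privilege": "SELECT",
     "securable": "prod_finance.billing.invoices"},
    {"principal": "interns", "privilege": "ALL PRIVILEGES",
     "securable": "prod_finance"},
    {"principal": "old-contractor", "privilege": "SELECT",
     "securable": "prod_finance.billing.invoices"},
]
active = {"finance-analysts", "interns", "platform-admins"}
print(find_violations(grants, active, admin_groups={"platform-admins"}))
```

Because the function has no side effects, it is easy to run on a schedule and to extend with more rules as policies evolve.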

C. Troubleshooting Common Issues

Unity Catalog hiccups happen to everyone. The difference between a minor blip and a major headache? Knowing where to look. Start with permission inheritance chains—they’re usually the culprit. Check sync status between external systems next. Don’t waste hours digging through logs randomly. Follow the pattern: permissions → sync → metadata → query performance. You’ll solve problems in half the time.
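The first step, walking the permission chain, can be sketched as follows. Reading a table generally requires `USE CATALOG`, `USE SCHEMA`, and `SELECT`, and a privilege granted on a parent is inherited by its children. The helper name and the grant representation, a set of `(privilege, securable)` pairs for one principal, are assumptions for this example.

```python
def missing_privileges(grants: set[tuple[str, str]], table: str) -> list[str]:
    """List the privileges a principal still lacks to read `table`."""
    catalog, schema, _ = table.split(".")
    schema_path = f"{catalog}.{schema}"
    # For each required privilege, the securables where a grant would count,
    # taking inheritance from parent objects into account.
    required = {
        "USE CATALOG": [catalog],
        "USE SCHEMA": [catalog, schema_path],
        "SELECT": [catalog, schema_path, table],
    }
    return [
        privilege
        for privilege, securables in required.items()
        if not any((privilege, s) in grants for s in securables)
    ]


grants = {
    ("USE CATALOG", "prod_finance"),
    ("SELECT", "prod_finance.billing.invoices"),
}
print(missing_privileges(grants, "prod_finance.billing.invoices"))
```

In this example the table-level `SELECT` is present, yet the query would still fail because the schema-level privilege is missing, which is a typical shape for this class of problem.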

D. Keeping Up with Platform Updates and Features

Databricks moves fast. Skip two release notes and suddenly everyone’s talking about features you’ve never heard of. Make it a weekly habit to scan the changelog. The small updates often pack the biggest governance improvements. Join the Databricks community Slack where the real inside tips get shared before they hit official docs. The platform’s evolving—make sure your governance approach does too.

Adopting Unity Catalog in Databricks revolutionizes how organizations govern their data assets. By implementing well-structured organization hierarchies, robust security controls, and streamlined governance workflows, businesses can achieve true data democratization while maintaining compliance. The integration capabilities with existing enterprise ecosystems ensure seamless data management across platforms, while performance optimization techniques guarantee efficient scaling as data volumes grow.

We encourage data leaders to leverage these best practices as a foundation for their Unity Catalog implementation journey. Start with foundational elements like metastore configuration and permission models, then gradually build toward more advanced governance capabilities. Remember that effective data governance is an ongoing process—regular monitoring, maintenance, and adaptation to evolving business needs will ensure your Databricks environment remains secure, compliant, and valuable to your organization’s data consumers.