Kubernetes (K8s) has become the go-to solution for managing containerized applications at scale, but understanding its core concepts can feel overwhelming for developers and DevOps teams new to container orchestration.

This guide is designed for software developers, system administrators, and IT professionals who want to grasp Kubernetes fundamentals and see how it can transform their application deployment strategy. You’ll discover why companies from startups to enterprises rely on K8s to run their cloud native applications and microservices deployment pipelines.

We’ll break down Kubernetes architecture and its essential components, explore the key concepts that every developer needs to master, and examine the concrete benefits that make Kubernetes worth the learning curve. You’ll also see real-world Kubernetes use cases across different industries and get practical insights for your own Kubernetes implementation journey.

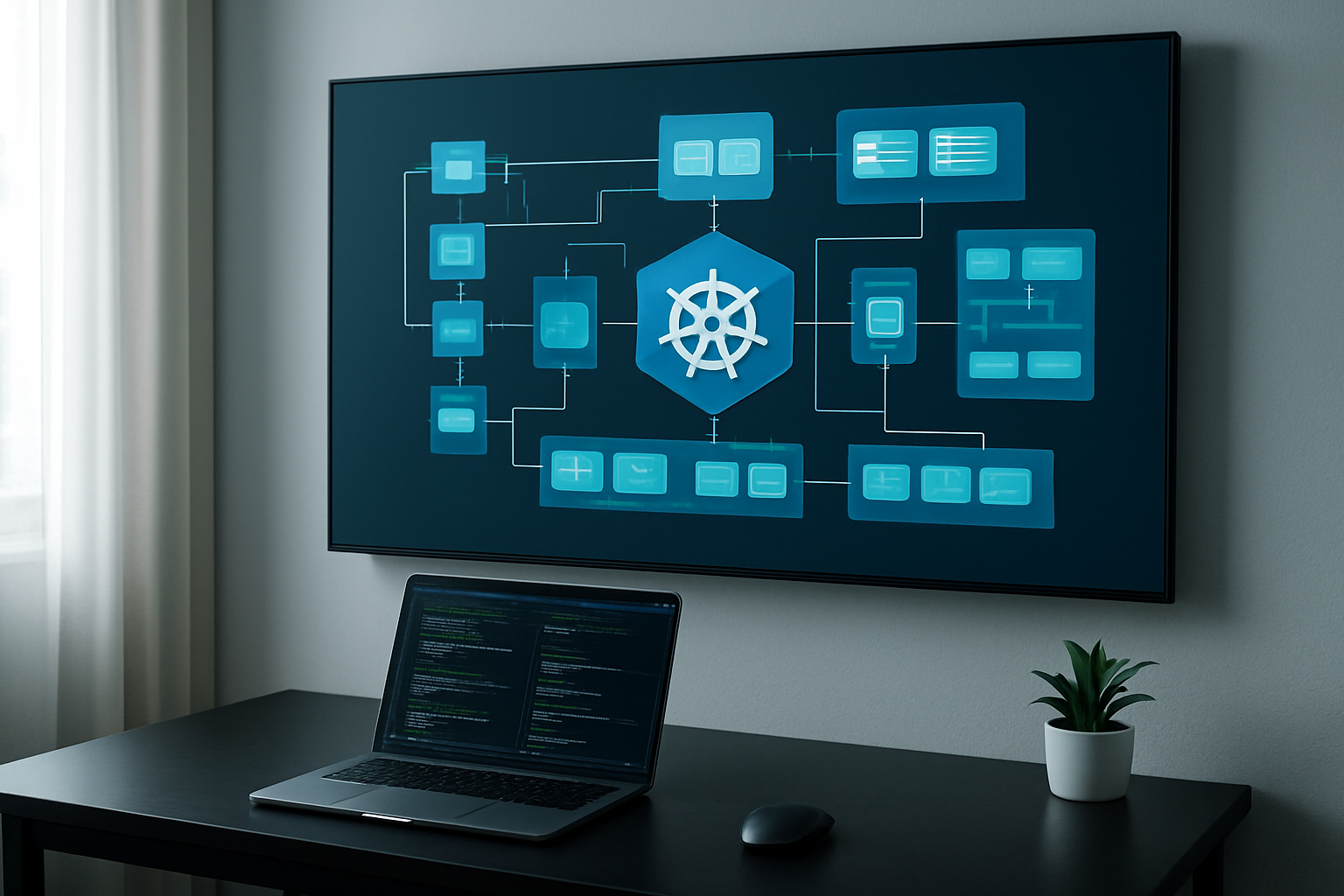

Essential Kubernetes Architecture and Core Components

Control Plane Components That Manage Your Cluster

The control plane acts as Kubernetes’ brain, managing every aspect of your cluster through specialized components. The API server (kube-apiserver) is the central hub: every request from users, tools such as kubectl, and the other components goes through it. etcd, a consistent and highly available key-value store, holds the cluster’s configuration and state. The scheduler (kube-scheduler) assigns newly created pods to worker nodes based on their resource requests, constraints, and policies. The controller manager (kube-controller-manager) runs control loops that watch cluster state through the API server and make changes whenever the actual state drifts from the desired state.
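To make the “watch and correct” idea concrete, here is a toy reconcile loop in Python. It is a hypothetical simulation, not code from kube-controller-manager: `ClusterState` and `reconcile` are invented names, and a plain dictionary stands in for the API server and etcd. What it shares with real controllers is that each pass compares desired and observed state and acts only on the difference, so running it repeatedly is safe.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Hypothetical in-memory stand-in for state stored via the API server."""
    desired_replicas: dict = field(default_factory=dict)  # app name -> replica count
    running_pods: dict = field(default_factory=dict)      # app name -> list of pod names
    next_id: int = 0                                      # used to generate unique pod names


def reconcile(state: ClusterState) -> list:
    """One pass of a level-based control loop; returns the actions it took."""
    actions = []
    for app, desired in state.desired_replicas.items():
        pods = state.running_pods.setdefault(app, [])
        # Too few pods: create new ones until observed matches desired.
        while len(pods) < desired:
            name = f"{app}-{state.next_id}"
            state.next_id += 1
            pods.append(name)
            actions.append(f"create {name}")
        # Too many pods: remove the newest ones.
        while len(pods) > desired:
            actions.append(f"delete {pods.pop()}")
    return actions


if __name__ == "__main__":
    state = ClusterState(desired_replicas={"web": 3})
    print(reconcile(state))                     # creates web-0, web-1, web-2
    state.running_pods["web"].remove("web-1")   # simulate a pod crash
    print(reconcile(state))                     # creates one replacement
    print(reconcile(state))                     # nothing to do: state already matches
```

Because the loop reacts to the current state rather than to individual events, a missed notification does no lasting harm: the next pass still sees the gap and closes it.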

Worker Node Elements That Execute Your Applications

Worker nodes run the actual application workloads through three key components. The kubelet agent communicates with the control plane and manages the lifecycle of pods on its node. Kube-proxy maintains network rules on each node so that traffic sent to a Service reaches one of its backend pods. The container runtime (such as containerd or CRI-O) pulls images and runs your containers; Kubernetes talks to it through the Container Runtime Interface (CRI), and built-in support for Docker Engine (dockershim) was removed in Kubernetes 1.24. Together, these components keep your applications running and reachable.
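In its iptables mode, kube-proxy spreads Service traffic by writing one rule per backend pod, matching with probability 1/n, then 1/(n-1), and so on, with the last rule always matching. The Python sketch below (hypothetical helper names, exact fractions instead of real packets) checks that this cascade gives every endpoint the same overall chance of being picked. Other proxy modes, such as IPVS, balance traffic differently.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Match probability of each of n rules, tried top to bottom (the last always matches)."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_selection(n: int) -> list:
    """Overall probability that each endpoint receives a given connection."""
    remaining = Fraction(1)  # probability that no earlier rule has matched yet
    result = []
    for p in rule_probabilities(n):
        result.append(remaining * p)
        remaining *= 1 - p
    return result


if __name__ == "__main__":
    print(rule_probabilities(3))   # 1/3, 1/2, 1
    print(effective_selection(3))  # 1/3 for every endpoint
```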

Pod Structure and Container Management Fundamentals

Pods are the smallest deployable units in Kubernetes, wrapping one or more containers that share resources. Each pod gets its own IP address and can define storage volumes that every container inside it can mount. Containers in the same pod share a network namespace, so they can reach each other over localhost. This design suits tightly coupled helper containers (sidecars) while keeping each pod isolated from other pods. In practice you rarely create pods directly: controllers such as Deployments use a pod template to define how new instances are created and configured.
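A pod with a helper container can be written as a manifest. The sketch below builds one as a Python dictionary and prints it as JSON, which kubectl apply -f accepts as well as YAML. The pod name, the nginx and busybox image tags, and the sidecar command are illustrative assumptions. The sidecar writes into a shared emptyDir volume that nginx serves, and it checks nginx over localhost because both containers share the pod’s network namespace.

```python
import json


def two_container_pod(name: str) -> dict:
    """Build a Pod manifest whose two containers share a volume and a network namespace."""
    web_mount = {"name": "shared-html", "mountPath": "/usr/share/nginx/html"}
    sidecar_mount = {"name": "shared-html", "mountPath": "/html"}
    sidecar_script = (
        "while true; do "
        "date > /html/index.html; "                                # content nginx will serve
        "wget -qO- http://localhost:80/ > /dev/null && echo ok; "  # reaches nginx via localhost
        "sleep 5; done"
    )
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # emptyDir lives as long as the pod and is visible to every container that mounts it.
            "volumes": [{"name": "shared-html", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [web_mount],
                },
                {
                    "name": "content-writer",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", sidecar_script],
                    "volumeMounts": [sidecar_mount],
                },
            ],
        },
    }


if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    print(json.dumps(two_container_pod("demo"), indent=2))
```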

Services and Networking That Connect Everything

Kubernetes uses a flat network model in which every pod can reach every other pod by IP address without NAT; a Container Network Interface (CNI) plugin implements this model. Services provide stable endpoints (a virtual IP and a DNS name) for groups of pods selected by labels, even as individual pods come and go. ClusterIP Services handle internal communication, while NodePort and LoadBalancer Services expose applications outside the cluster. Network policies restrict traffic between pods, but they only take effect if the installed network plugin enforces them. The cluster DNS add-on (usually CoreDNS) resolves Service names, making service discovery straightforward for your microservices.
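How a Service finds its pods, and the name other pods use to reach it, can be sketched in a few lines. This is a simplified model with hypothetical helper names: it covers only equality-based label selectors, treats readiness as a boolean, and assumes the default cluster domain cluster.local, which a cluster administrator can change.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict, pods: list) -> list:
    """IPs of ready pods that a Service with this selector would route to."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [p["ip"] for p in pods if p["ready"] and selector_matches(selector, p["labels"])]


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified name the cluster DNS add-on serves for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    pods = [
        {"ip": "10.244.1.5", "ready": True, "labels": {"app": "web", "tier": "frontend"}},
        {"ip": "10.244.2.7", "ready": False, "labels": {"app": "web", "tier": "frontend"}},
        {"ip": "10.244.1.9", "ready": True, "labels": {"app": "db"}},
    ]
    print(service_endpoints({"app": "web"}, pods))  # ['10.244.1.5']
    print(service_dns_name("web", "shop"))          # web.shop.svc.cluster.local
```

Within the same namespace, pods can usually use the short name (web) because the cluster DNS search path fills in the rest.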

Critical Kubernetes Concepts Every Developer Should Master

Deployments for Scalable Application Management

Deployments are the standard way to run stateless applications in Kubernetes, providing declarative updates and straightforward scaling. A Deployment manages ReplicaSets for you, keeping the desired number of pod replicas running and performing rolling updates that, with readiness probes and more than one replica, avoid downtime. You scale a Deployment by changing its replica count, either manually or through a HorizontalPodAutoscaler when traffic spikes, and the new pods are spread across available nodes. If a pod fails or its node disappears, the ReplicaSet creates a replacement, and if an update misbehaves, the kubectl rollout undo command reverts it to a previous revision. Automating these routine tasks lets developers spend more time on code and less on infrastructure management.
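How far a rolling update may deviate from the desired replica count is controlled by the Deployment’s maxSurge and maxUnavailable settings, both 25% by default. When given as percentages, maxSurge is rounded up and maxUnavailable is rounded down, which is why a single-replica Deployment still keeps one pod available during an update. The Python helper below is a sketch of that arithmetic with hypothetical function names, not the controller’s actual code.

```python
def scale_value(value, replicas: int, round_up: bool) -> int:
    """Resolve an absolute number or a percentage string like '25%' against replicas."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer ceiling/floor division avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (maximum total pods, minimum available pods) during a rolling update."""
    surge = scale_value(max_surge, replicas, round_up=True)
    unavailable = scale_value(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Both resolved to zero (possible through rounding down): allow one
        # unavailable pod so the rollout can still make progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))  # (13, 8)
    print(rolling_update_bounds(1))   # (2, 1)
```

With 10 replicas and the defaults, the rollout may briefly run 13 pods but never drops below 8 available ones.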

ConfigMaps and Secrets for Configuration Control

ConfigMaps separate configuration data from application code, so you can change settings without rebuilding container images. They store non-sensitive information such as database URLs, feature flags, and environment-specific settings that applications read at runtime. Secrets hold sensitive data such as passwords, API keys, and certificates. Secret values are only base64-encoded, which is not encryption, so you should enable encryption at rest and restrict access with RBAC. Both resources can be injected into pods as environment variables or mounted files, and environment variables can in turn be referenced in container command-line arguments. Environment variables are read only when a container starts, whereas mounted files are eventually updated after the ConfigMap or Secret changes. This separation makes the same image portable across environments and lets teams change configuration independently of their release cycles.
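Because Secret values are only base64-encoded, anyone who can read the Secret object can recover them. The short Python sketch below builds a Secret manifest the way its data field expects and decodes it again; the Secret name and password are made-up examples, and the helper names are hypothetical.

```python
import base64


def secret_manifest(name: str, values: dict) -> dict:
    """Build an Opaque Secret; values under 'data' must be base64-encoded strings."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in values.items()},
    }


def decode_secret(manifest: dict) -> dict:
    """Reverse the encoding: no key is needed, which is why base64 offers no protection."""
    return {k: base64.b64decode(v).decode("utf-8") for k, v in manifest["data"].items()}


if __name__ == "__main__":
    secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
    print(secret["data"])         # {'password': 'czNjcjN0'}
    print(decode_secret(secret))  # {'password': 's3cr3t'}
```

If you prefer to write plain values, the Secret API also accepts a stringData field, which the API server encodes into data for you.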

Persistent Volumes for Data Storage Solutions

Persistent Volumes (PVs) abstract the underlying storage infrastructure and provide durable storage that survives pod restarts and rescheduling. Because their lifecycle is independent of any pod, data persists even when containers terminate unexpectedly. A PersistentVolumeClaim (PVC) is a request for storage of a given size, access mode, and storage class; Kubernetes binds it to a matching existing PV or, if the StorageClass supports dynamic provisioning, creates a new volume on demand. StorageClasses describe the kinds of storage available, such as different performance tiers, through a provisioner, provider-specific parameters, and a reclaim policy. Through Container Storage Interface (CSI) drivers, this abstraction supports many backends, including cloud provider disks, network-attached storage, and local volumes, so application manifests stay largely independent of the storage vendor.
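Binding a claim to an existing volume can be modeled as a filter plus a choice. In this simplified Python sketch (the `Volume` class, the `bind_claim` function, and capacities in whole GiB are assumptions for illustration), a volume qualifies if it is unbound, has the requested storage class, supports every requested access mode, and is large enough. Among the candidates the smallest one wins, so large volumes are not wasted on small claims. When nothing matches, a real cluster would fall back to dynamic provisioning through the StorageClass or leave the claim Pending.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Volume:
    """Hypothetical, simplified PersistentVolume."""
    name: str
    capacity_gi: int
    storage_class: str
    access_modes: frozenset
    bound: bool = False


def bind_claim(storage_class: str, access_modes: set, request_gi: int,
               volumes: list) -> Optional[Volume]:
    """Bind a claim to the smallest unbound volume that satisfies it, or return None."""
    candidates = [
        v for v in volumes
        if not v.bound
        and v.storage_class == storage_class
        and access_modes <= v.access_modes  # every requested mode must be supported
        and v.capacity_gi >= request_gi
    ]
    if not candidates:
        return None  # a real cluster would try dynamic provisioning or keep the claim Pending
    best = min(candidates, key=lambda v: v.capacity_gi)
    best.bound = True
    return best


if __name__ == "__main__":
    rwo = frozenset({"ReadWriteOnce"})
    pool = [
        Volume("pv-small", 5, "standard", rwo),
        Volume("pv-large", 100, "standard", rwo),
        Volume("pv-fast", 20, "ssd", rwo),
    ]
    print(bind_claim("standard", {"ReadWriteOnce"}, 10, pool).name)  # pv-large
```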

Game-Changing Benefits of Adopting Kubernetes

Automatic Scaling That Matches Traffic Demands

Kubernetes can adjust your application’s capacity to traffic through the HorizontalPodAutoscaler (HPA). When demand rises during peak hours, the HPA increases a workload’s replica count and Kubernetes schedules the additional pods across the cluster; during quiet periods, it scales back down to avoid waste. This removes most manual capacity adjustments while keeping applications responsive. The HPA needs a metrics source: CPU and memory usage come from the metrics-server add-on, while custom metrics require an adapter such as the Prometheus Adapter. By default it re-evaluates metrics every 15 seconds, so scaling reacts within seconds to minutes rather than instantly, and adding pods only helps if the cluster has spare capacity or a node autoscaler such as Cluster Autoscaler can add nodes.
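The core of the HorizontalPodAutoscaler’s calculation, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below is a simplified sketch of it: it includes the default 10% tolerance, inside which no scaling happens, and clamps the result to the configured minimum and maximum, but it omits stabilization windows and the special handling of pods that are not yet ready. The function and parameter names are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Replica count the HPA formula suggests for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target give ceil(4 × 1.5) = 6 replicas, while an average of 63% falls inside the tolerance and leaves the count at 4.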

Self-Healing Capabilities That Minimize Downtime

Kubernetes improves resilience through automated failure recovery at several levels. When a container crashes, the kubelet restarts it on the same node according to the pod’s restart policy, backing off exponentially if it keeps failing. When a node fails, the pods on it are evicted after a timeout (about five minutes by default), and their controllers, such as a Deployment’s ReplicaSet, create replacements on healthy nodes. Liveness probes let the kubelet restart containers that hang, and readiness probes remove unready pods from Service endpoints so they stop receiving traffic. Together these mechanisms keep services available through many hardware failures, software crashes, and network disruptions, and they substantially reduce operational overhead.
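The restart back-off the kubelet applies to a repeatedly crashing container (the state kubectl reports as CrashLoopBackOff) is documented as starting at 10 seconds by default and doubling after each failure, capped at five minutes, with the timer reset once a container has run for 10 minutes without problems. The Python sketch below (hypothetical function name) only computes that default delay sequence; it does not model the reset.

```python
def restart_delays(failures: int, initial_s: int = 10, cap_s: int = 300) -> list:
    """Seconds waited before each successive restart of a failing container."""
    delays = []
    delay = initial_s
    for _ in range(failures):
        delays.append(delay)
        delay = min(delay * 2, cap_s)  # exponential growth, capped at five minutes
    return delays


if __name__ == "__main__":
    print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```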

Resource Optimization That Reduces Infrastructure Costs

Kubernetes improves hardware efficiency by placing workloads on nodes according to their declared resource requests. Where traditional deployments often dedicate servers to single applications that run at low utilization, the scheduler packs many workloads onto shared nodes, which can reduce the number of physical or virtual machines you need. Resource requests and limits keep workloads from starving each other, and namespace-level ResourceQuotas cap how much a team or application can consume. By default the scheduler favors less-utilized nodes to spread load, but it can be configured with a bin-packing strategy (MostAllocated) that fills nodes more tightly and lowers infrastructure costs.
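Placement based on resource requests works in two steps: filter out nodes that cannot fit the pod, then score the rest. The Python sketch below models only CPU and memory requests, with hypothetical `Node` fields and function names, and a simple average of utilization as the score. It contrasts the default LeastAllocated idea, which favors emptier nodes, with the MostAllocated strategy that can be configured for bin packing. The real kube-scheduler runs many more filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical node summary: allocatable capacity and resources already requested."""
    name: str
    cpu_alloc_m: int        # allocatable CPU in millicores
    mem_alloc_mi: int       # allocatable memory in MiB
    cpu_requested_m: int = 0
    mem_requested_mi: int = 0


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """Filter step: the node must have enough unrequested capacity left."""
    return (node.cpu_requested_m + cpu_m <= node.cpu_alloc_m
            and node.mem_requested_mi + mem_mi <= node.mem_alloc_mi)


def utilization_after(node: Node, cpu_m: int, mem_mi: int) -> float:
    """Average requested fraction of CPU and memory if the pod were placed here."""
    cpu = (node.cpu_requested_m + cpu_m) / node.cpu_alloc_m
    mem = (node.mem_requested_mi + mem_mi) / node.mem_alloc_mi
    return (cpu + mem) / 2


def schedule(nodes: list, cpu_m: int, mem_mi: int,
             strategy: str = "LeastAllocated") -> Optional[str]:
    """Score step: pick the feasible node with the best score, or None if nothing fits."""
    feasible = [n for n in nodes if fits(n, cpu_m, mem_mi)]
    if not feasible:
        return None  # the pod would stay Pending

    def score(n: Node) -> float:
        u = utilization_after(n, cpu_m, mem_mi)
        return u if strategy == "MostAllocated" else 1 - u

    return max(feasible, key=score).name
```

With one nearly full node and one mostly idle node, the default-style scoring sends a small pod to the idle node, while MostAllocated fills the busy node first and leaves the idle one free to be scaled away.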

Platform Independence That Eliminates Vendor Lock-in

Because Kubernetes exposes the same APIs everywhere, it offers a high degree of portability across public clouds, private data centers, and hybrid environments. The same deployment manifests can run on AWS, Google Cloud, Azure, or on-premises clusters, usually with changes limited to environment-specific pieces such as storage classes, load balancer and ingress settings, and identity integration. This consistency reduces vendor lock-in and makes multi-cloud strategies for disaster recovery and cost optimization more practical. Teams can move workloads between environments with far less rework, which strengthens their position when negotiating pricing and reduces dependence on a single cloud provider.

DevOps Integration That Streamlines Development Workflows

Kubernetes integrates well with CI/CD pipelines, enabling automated testing and deployment workflows. Because everything is described in declarative manifests, GitOps tools such as Argo CD or Flux can watch a Git repository and roll out changes automatically whenever the version-controlled manifests change. Deployments support rolling updates out of the box, blue-green and canary releases can be built on top of them with extra configuration or tooling, and a single command rolls a faulty release back to a previous revision. Built-in service discovery and configuration management replace much of the logic that used to live in custom deployment scripts, so development teams can focus on writing code and ship new features faster.
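Rollbacks work because a Deployment keeps its older ReplicaSets as revision history. The Python class below is a simplified, hypothetical model of the behavior behind kubectl rollout undo: applying a new pod template creates a new revision, and rolling back re-applies an older template, which then reappears under a new, higher revision number rather than its old one. Pod templates are reduced to plain strings here, and the class and method names are invented for illustration.

```python
class RolloutHistory:
    """Hypothetical model of a Deployment's revision history."""

    def __init__(self):
        self.revisions = {}  # revision number -> pod template
        self.current = 0

    def apply(self, template: str) -> int:
        """Roll out a template and return the revision now serving traffic."""
        if self.revisions.get(self.current) == template:
            return self.current  # unchanged template: no new rollout
        next_revision = max(self.revisions, default=0) + 1
        # A template seen before reuses its old ReplicaSet, which moves to the new number.
        for rev, old in list(self.revisions.items()):
            if old == template:
                del self.revisions[rev]
        self.revisions[next_revision] = template
        self.current = next_revision
        return next_revision

    def undo(self, to_revision=None) -> int:
        """Roll back to a given revision, or to the newest one before the current revision."""
        if to_revision is None:
            # Raises ValueError if there is no earlier revision to return to.
            to_revision = max(r for r in self.revisions if r < self.current)
        return self.apply(self.revisions[to_revision])
```

Modeling rollback as "apply an old template" keeps the history linear: the revision that is live is always the highest-numbered one.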

Proven Real-World Applications Across Industries

E-commerce Platforms Managing Peak Traffic Loads

E-commerce companies, Shopify among them, run on Kubernetes to absorb traffic spikes during Black Friday and the holiday season. Autoscaling lets them add capacity ahead of and during peak demand, keeping the shopping experience responsive. A microservices architecture on Kubernetes also lets them scale payment processing, inventory management, and recommendation engines independently, which matters most during peak sales periods, when revenue depends directly on system availability.

Financial Services Ensuring High Availability Systems

Banks and trading platforms use Kubernetes to help meet demanding availability targets, such as 99.99% uptime, for critical financial applications. Running clusters across multiple data centers or availability zones, combined with automated failover, keeps transaction processing going when one site fails. Kubernetes supports real-time trading, fraud detection, and customer banking services, and features such as audit logging, RBAC, and policy enforcement can support regulatory compliance efforts, although they do not guarantee compliance on their own. Automated recovery and rolling updates without service interruption help protect high volumes of daily transactions.

Media Companies Delivering Content at Global Scale

Streaming services such as Spotify run on Kubernetes to serve millions of users worldwide. Kubernetes manages their containerized services across cloud regions and adjusts resources to listening and viewing patterns and geographic demand. The audio and video files themselves are typically delivered through content delivery networks, while Kubernetes runs the backend services around them, which media companies use to personalize recommendations, process user data, and deliver content consistently across devices and time zones.

Implementation Strategies for Kubernetes Success

Cloud Provider Options and Migration Pathways

Managed services such as Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) simplify Kubernetes adoption. The provider operates the control plane for you and integrates the cluster with its networking, storage, load balancing, and identity services. A migration plan typically combines lift-and-shift moves for legacy applications that can be containerized as they are, gradual decomposition of monolithic systems, and careful planning of networking and storage requirements to minimize downtime.

Monitoring and Observability Tools for Operational Excellence

Effective Kubernetes monitoring needs tooling at several layers. Prometheus and Grafana are a common backbone for metrics collection and visualization, while Jaeger and Zipkin provide distributed tracing. Log shippers such as Fluentd or Fluent Bit collect container logs from every node and forward them to a central backend. Together these tools give you visibility into cluster health, application performance, and resource utilization across your environment.

Security Best Practices That Protect Your Infrastructure

Security in Kubernetes needs a layered approach, starting with role-based access control (RBAC) and network policies. Pod Security Standards, enforced by the built-in Pod Security Admission controller, replace PodSecurityPolicy, which was deprecated and then removed in Kubernetes 1.25. Service meshes such as Istio add mutual TLS encryption and authentication between services. Scanning container images for vulnerabilities, using admission controllers to enforce policy, and granting each user and workload only the privileges it needs protect against common attack vectors. Dedicated secrets managers such as HashiCorp Vault can further strengthen your security posture.
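RBAC permissions are purely additive: a request is allowed if any rule in any role bound to the user or service account matches its API group, resource, and verb, and there are no deny rules. The Python sketch below models just that matching step. `Rule` and `is_allowed` are hypothetical names, and real RBAC also covers resource names, subresources, non-resource URLs, and namespace scoping through RoleBindings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified RBAC PolicyRule."""
    api_groups: tuple  # "" is the core API group (pods, services, ...)
    resources: tuple
    verbs: tuple


def _matches(allowed: tuple, value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Allow if any rule grants this verb on this resource; there is no explicit deny."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


if __name__ == "__main__":
    # Least privilege: a role that can only read pods.
    pod_reader = [Rule(api_groups=("",), resources=("pods",), verbs=("get", "list", "watch"))]
    print(is_allowed(pod_reader, "", "pods", "list"))    # True
    print(is_allowed(pod_reader, "", "pods", "delete"))  # False
```

Since nothing can subtract a permission once granted, least privilege in RBAC comes down to writing narrow roles and binding them sparingly.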

Team Training and Skill Development Requirements

Successful Kubernetes adoption requires investing in team education across development and operations roles. Teams need hands-on experience with kubectl, YAML manifests, and debugging techniques, and training should cover container fundamentals, networking concepts, and troubleshooting methods. Certifications such as the Certified Kubernetes Administrator (CKA) and Certified Kubernetes Application Developer (CKAD) validate skills and build confidence. Regular workshops and practice environments help teams keep up with the fast-moving cloud native ecosystem and its deployment patterns.

Kubernetes has changed how teams deploy and manage applications at scale. From its architecture and core components to essential concepts like pods, Services, and Deployments, K8s offers a solid foundation for modern application development. Its main benefits are improved scalability, better resource efficiency, faster deployment cycles, and stronger fault tolerance. Organizations across industries already run demanding workloads on it, from streaming services serving global audiences to financial institutions running critical trading systems.

Getting started with Kubernetes doesn’t have to be overwhelming. Start small with a development cluster, focus on learning the basics first, and gradually expand your implementation as your team gains confidence. The investment in learning Kubernetes pays off quickly when you see how much easier it becomes to manage complex applications and scale your infrastructure. Whether you’re running a startup or managing enterprise systems, Kubernetes gives you the tools to build resilient, scalable applications that can grow with your business.