Ever clicked a button and wondered, “What happens next?” Your simple tap sets off a dizzyingly complex chain reaction across dozens of interconnected systems in milliseconds. And yet, somehow, it all just works.

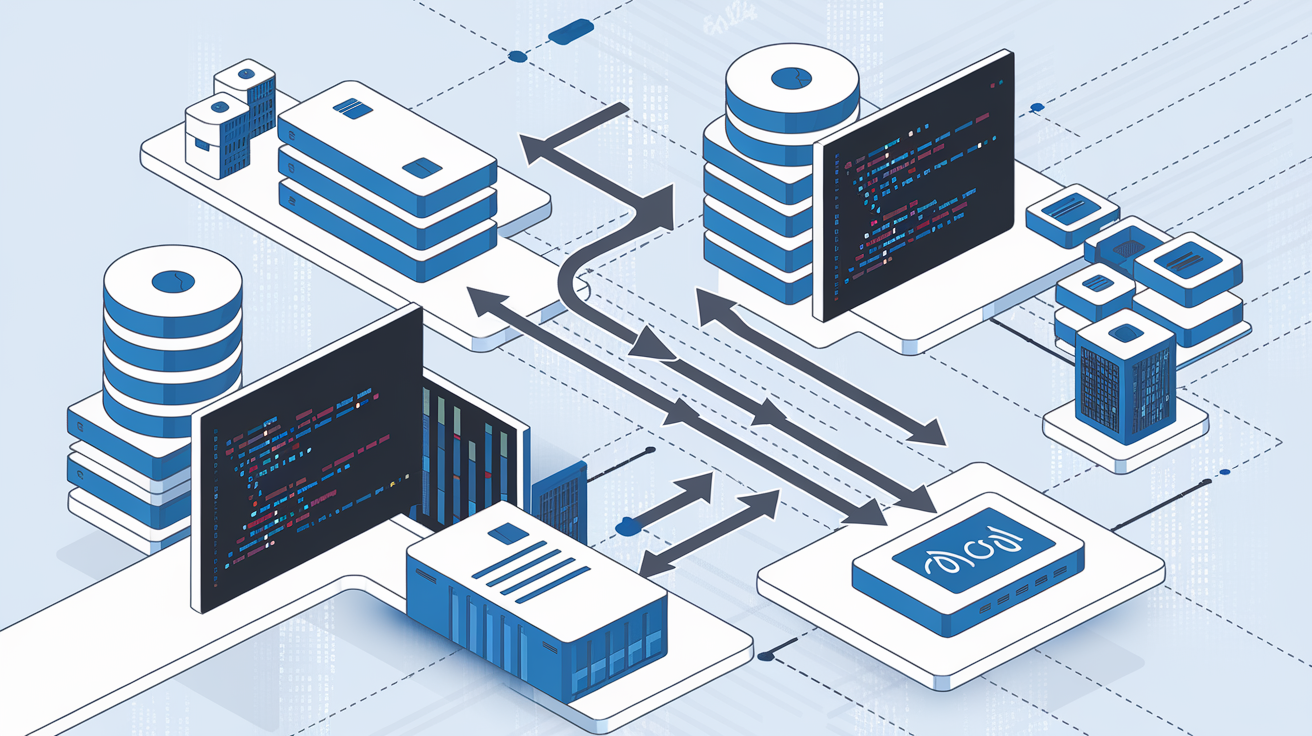

Let me take you behind the digital curtain of modern web architecture, where your requests zip through load balancers, API gateways, and microservices before returning as the webpage you see.

The average web request today touches more cloud infrastructure than most developers could explain on a whiteboard. But understanding this journey isn’t just for engineers anymore.

By the end of this post, you'll be able to follow a single user request through the entire cloud stack and recognize the same building blocks that services like Netflix, Amazon, and Google use to stream video, process orders, and deliver search results at breathtaking speed.

So what actually happens in those 200 milliseconds between click and content?

The Fundamentals of Modern Web Architecture

The Evolution from Monolithic to Cloud-Based Systems

Remember when websites were just HTML files sitting on a single server? Those days are long gone.

Web architecture has undergone a dramatic transformation in the last decade. Monolithic systems, where the entire application was built and deployed as a single unit, were the norm until scaling became a nightmare. Imagine your entire application crashing because one component failed. Not fun.

Today’s cloud-based systems are built differently. They’re distributed, scalable, and fault-tolerant. Companies like Netflix and Airbnb completely rebuilt their architecture from monoliths to microservices because they had no choice—their growth demanded it.

The shift wasn’t just about technology; it changed how teams work. In the monolithic days, everyone worked on the same codebase and shipped together. Now, teams own specific services that they can build, deploy, and scale independently.

Key Components of Modern Web Stacks

Modern web architecture isn’t just a server and some code. It’s a carefully orchestrated symphony of components:

- Front-end layer: What users see and interact with—built with frameworks like React, Vue, or Angular

- API Gateway: The traffic cop directing requests to the right service

- Microservices: Independent services handling specific functions

- Database layer: Often distributed across multiple systems (SQL, NoSQL, caching)

- Infrastructure: Cloud providers like AWS, Azure, or GCP handling the heavy lifting

This separation of concerns makes systems more resilient. If your payment service goes down, your product catalog can still function.

How User Requests Trigger Complex Interactions

When you click “Buy Now” on an e-commerce site, you’re kicking off an incredible chain reaction.

Your request hits a CDN first, then passes through load balancers to an API gateway. The gateway might route parts of your request to different microservices—one checking inventory, another processing payment, a third handling shipping calculations.

Each microservice might call its own database, cache results, publish events to message queues, or trigger serverless functions. All this happens in milliseconds.

The beauty of modern architecture? This complexity is invisible to users. They just see a smooth, responsive experience.

And when traffic spikes during Black Friday? With auto-scaling configured, the system spins up new instances of just the services under pressure. Scaling a monolith means copying the whole application, including the parts nobody is using.

The Journey Begins: From Browser to Internet

What Happens When You Click a Link

Ever wondered what actually happens when you click a link? It’s way more complex than you might think.

When you click that innocent-looking link, your browser springs into action. First, it parses the URL to figure out what protocol to use (HTTP or HTTPS), which server to contact, and what resource to request. Your browser then checks its cache – why download something again if it already has a fresh copy?

If there’s nothing in the cache, your browser prepares an HTTP request. This request includes headers with information about your browser, preferred languages, and what types of responses it can handle.
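Here is a minimal Python sketch of those two steps. It uses the standard library's `urllib.parse.urlsplit` to pull out the protocol, server, and resource, then assembles the plain text of an HTTP/1.1 GET request. The `build_get_request` function and its header values (the `ExampleBrowser/1.0` User-Agent, the Accept values) are illustrative assumptions; real browsers send more headers, and over HTTPS this text travels inside the encrypted TLS channel rather than in the clear.

```python
from urllib.parse import urlsplit


def build_get_request(url: str, user_agent: str = "ExampleBrowser/1.0"):
    """Split a URL into its parts and build a minimal HTTP/1.1 GET request."""
    parts = urlsplit(url)
    # The protocol decides the default port: 443 for HTTPS, 80 for HTTP.
    default_port = 443 if parts.scheme == "https" else 80
    port = parts.port or default_port
    # Which server to contact: the Host header includes the port only if it is non-default.
    host = parts.hostname if port == default_port else f"{parts.hostname}:{port}"
    # Which resource to request: the path plus any query string.
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    lines = [
        f"GET {target} HTTP/1.1",
        f"Host: {host}",
        f"User-Agent: {user_agent}",              # information about the browser
        "Accept: text/html,application/json",      # response types it can handle
        "Accept-Language: en-US,en;q=0.9",         # preferred languages
    ]
    # HTTP/1.1 uses CRLF line endings; an empty line ends the header block.
    return parts.scheme, port, "\r\n".join(lines) + "\r\n\r\n"


scheme, port, request = build_get_request("https://example.com/search?q=cloud")
print(scheme, port)
print(request)
```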

But this is just the first step of an incredible journey that takes milliseconds but involves dozens of sophisticated systems working in perfect harmony.

DNS Resolution and IP Addressing

Your browser knows the website name, but computers don’t communicate with names – they need numbers.

This is where DNS (Domain Name System) comes in – the internet’s phonebook. Your browser asks a DNS resolver: “What’s the IP address for example.com?” This query might go through several servers:

- The browser first checks its local DNS cache

- If not found, it asks your operating system

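- Still no luck? The OS sends the query to a recursive resolver, usually your ISP’s or a public one such as 8.8.8.8 or 1.1.1.1

- If the resolver hasn’t cached the answer, it walks the hierarchy on your behalf: a root server points it to the TLD servers (for .com), which point it to the domain’s authoritative nameservers, which return the address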

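Once your browser gets the IP address back, it knows exactly where to send the request. A cached answer comes back in a few milliseconds or less; a full, uncached lookup through the hierarchy can take tens of milliseconds or more, which is why every layer caches answers for as long as the record’s time-to-live (TTL) allows.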
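To make the resolver’s walk concrete, here is a toy Python model. It assumes three hard-coded, hypothetical “servers” (a root, a .com TLD server, and an authoritative nameserver) instead of real network queries. It shows the referral chain and why caching matters, while skipping the real DNS wire protocol, NS records, and TTL expiry. The address 192.0.2.10 comes from a range reserved for documentation.

```python
# Hypothetical, hard-coded "servers". Each maps a name to either a referral
# (which server to ask next) or a final answer (an IP address).
ROOT = {"com.": ("referral", "tld-com")}
SERVERS = {
    "tld-com": {"example.com.": ("referral", "ns-example")},
    "ns-example": {"example.com.": ("answer", "192.0.2.10")},
}


class ToyResolver:
    """A caching recursive resolver that follows referrals from the root down."""

    def __init__(self):
        self.cache = {}
        self.queries = 0  # upstream servers contacted so far

    def resolve(self, name: str) -> str:
        fqdn = name.rstrip(".") + "."          # normalize to a fully qualified name
        if fqdn in self.cache:                 # cache hit: no upstream traffic at all
            return self.cache[fqdn]
        tld = fqdn.split(".")[-2] + "."        # "example.com." -> "com."
        kind, value = ROOT[tld]                # 1) ask a root server
        self.queries += 1
        while kind == "referral":              # 2) follow TLD, then authoritative referrals
            kind, value = SERVERS[value][fqdn]
            self.queries += 1
        self.cache[fqdn] = value               # real resolvers keep this only for the record's TTL
        return value


resolver = ToyResolver()
print(resolver.resolve("example.com"), resolver.queries)  # three upstream queries
print(resolver.resolve("example.com"), resolver.queries)  # served from cache
```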

TCP/IP Connections Explained

Now your browser knows where to send the request, but how does it actually get there?

TCP/IP is the foundation for this communication. Think of TCP (Transmission Control Protocol) as the reliable shipping service of the internet. It splits your data into segments, numbers them, and makes sure they all arrive intact and in order at the destination.

The process works like this:

- Your browser initiates a TCP connection through a “three-way handshake” (SYN, SYN-ACK, ACK)

- Once established, the connection provides a reliable channel for data exchange

- The IP (Internet Protocol) part handles the routing, making sure packets navigate the complex web of routers between you and the server
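Here is a small Python sketch of the sequence-number bookkeeping behind the three-way handshake. It models only the three segments, not real sockets; `three_way_handshake` and `Segment` are hypothetical names, and the fixed initial sequence numbers in the usage are there for readability (real TCP stacks pick them unpredictably).

```python
from dataclasses import dataclass
from typing import List, Optional

SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


@dataclass
class Segment:
    flags: str
    seq: int
    ack: Optional[int] = None


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    """Model the SYN, SYN-ACK, ACK exchange and its sequence-number arithmetic."""
    # 1) Client -> server: "let's talk; my numbering starts at client_isn".
    syn = Segment("SYN", seq=client_isn)
    # 2) Server -> client: acknowledges the SYN (which consumes one sequence number)
    #    and announces its own starting number.
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(syn.seq + 1) % SEQ_SPACE)
    # 3) Client -> server: acknowledges the server's SYN; the connection is now open.
    ack = Segment("ACK", seq=syn_ack.ack, ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(segment)
```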

What’s amazing is how TCP handles reliability. If packets get lost or corrupted (which happens all the time), TCP automatically resends them. It’s constantly adjusting to network conditions, slowing down when congestion occurs and speeding up when the path is clear.
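That “slow down, then speed up” behavior is classically described as additive increase, multiplicative decrease (AIMD). The sketch below is a deliberately simplified model, assuming a congestion window measured in segments that grows by one per round trip and halves on loss. It ignores slow start, and modern algorithms such as CUBIC or BBR behave differently.

```python
from typing import Iterable, List


def aimd_window(losses: Iterable[bool], start: float = 10.0, increase: float = 1.0,
                factor: float = 0.5, floor: float = 1.0) -> List[float]:
    """Congestion window (in segments) after each round trip under simplified AIMD.

    losses: one boolean per round trip, True if packet loss was detected.
    """
    cwnd = start
    history = []
    for loss in losses:
        if loss:
            cwnd = max(floor, cwnd * factor)   # multiplicative decrease: back off fast
        else:
            cwnd += increase                   # additive increase: probe for bandwidth slowly
        history.append(cwnd)
    return history


print(aimd_window([False, False, True, False]))  # [11.0, 12.0, 6.0, 7.0]
```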

The Role of HTTPS in Securing Requests

Remember when websites just used HTTP? Those days are gone for good reason.

HTTPS adds a critical security layer to your connection using TLS (Transport Layer Security). Before any data is exchanged, your browser and the server perform a “TLS handshake” that does three things:

- Authenticates the server (verifies you’re talking to the real website, not an impostor)

- Establishes encryption keys unique to your session

- Enables encrypted communication that prevents eavesdropping

The key exchange is the mind-boggling part. Using a technique called Diffie-Hellman key exchange (in modern TLS, usually its elliptic-curve variant), your browser and the server derive a shared secret that only they know, even though every message used to create it travels over the public internet where anyone could see it.
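Here is the idea with toy numbers, using classic finite-field Diffie-Hellman in Python. The tiny prime makes this trivially breakable, so treat it as an illustration only; real TLS uses large groups or elliptic curves, and the server’s certificate is what stops an attacker from impersonating the other end.

```python
def dh_public(private: int, g: int, p: int) -> int:
    """Value each side sends over the network: g^private mod p."""
    return pow(g, private, p)


def dh_shared(their_public: int, private: int, p: int) -> int:
    """Shared secret: the other side's public value raised to your own private value."""
    return pow(their_public, private, p)


# Public parameters: anyone watching the network can see these (toy-sized, insecure).
P, G = 23, 5
browser_private, server_private = 4, 3               # never leave their machines

browser_public = dh_public(browser_private, G, P)    # 4, sent in the clear
server_public = dh_public(server_private, G, P)      # 10, sent in the clear

# Each side combines the other's public value with its own secret.
browser_key = dh_shared(server_public, browser_private, P)
server_key = dh_shared(browser_public, server_private, P)
assert browser_key == server_key == 18               # same secret, never transmitted
```

An eavesdropper sees 23, 5, 4, and 10, but recovering 18 from them essentially means solving a discrete logarithm, which is infeasible at real key sizes.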

Once secure, all data – including cookies, form submissions, and the content you receive – is encrypted. This means your banking details, passwords, and personal information remain private as they travel across the internet.

Front-End Processing: The First Line of Defense

Load Balancers and Traffic Distribution

Ever wonder what happens when millions of people hit your favorite streaming site during a season finale? That’s where load balancers come in. These traffic cops stand at the front gates of web architecture, directing incoming requests to the servers best equipped to handle them.

Load balancers use various algorithms to make split-second decisions:

- Round Robin: Sends each new request to the next server in turn

- Least Connection: Sends traffic to servers with the fewest active connections

- IP Hash: Routes users from the same IP to the same server
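Here is a minimal Python sketch of the three strategies, assuming an in-memory list of server names. The class names are hypothetical, and real load balancers also track health checks and weights. The IP hash uses SHA-256 rather than Python’s built-in `hash()`, because the latter is randomized per process for strings, which would break stickiness across restarts.

```python
import hashlib
import itertools
from typing import Dict, List, Optional


class RoundRobin:
    """Hand each new request to the next server in turn."""

    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self, client_ip: Optional[str] = None) -> str:
        server = min(self.active, key=self.active.get)  # ties go to the first listed
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the connection closes


class IPHash:
    """Map each client IP to the same server every time."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


lb = RoundRobin(["app-1", "app-2", "app-3"])
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
```

Plain modulo hashing remaps most clients whenever a server is added or removed; consistent hashing is the usual fix.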

When you type in that URL, your request might bounce through multiple load balancers – some handling TLS termination, others filtering out suspicious traffic. Modern load balancers don’t just direct traffic; they’re becoming intelligent gatekeepers that can detect attacks and throttle excessive requests.

Content Delivery Networks (CDNs) in Action

CDNs are the secret weapon behind smooth streaming and lightning-fast websites. They’re distributed networks of servers that cache content close to users.

Picture this: you’re in Tokyo trying to access a New York-based site. Without a CDN, every request crosses the Pacific and most of North America, then comes all the way back. With a CDN, you’re grabbing that content from a server in or near Tokyo.
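A quick back-of-the-envelope calculation shows why distance matters. The sketch assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and uses an approximate Tokyo to New York great-circle distance of 10,850 km. Real cable routes are longer, so actual round trips are slower still.

```python
FIBER_SPEED_KM_PER_S = 200_000  # assumption: about two-thirds of the speed of light in a vacuum


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone (no routing or processing)."""
    return distance_km * 2 * 1000 / FIBER_SPEED_KM_PER_S


print(min_rtt_ms(10_850))  # Tokyo to a New York origin: 108.5 ms at best
print(min_rtt_ms(50))      # hypothetical edge server 50 km away: 0.5 ms
```

That is a floor of over 100 ms per round trip before any server does any work, and loading a page typically takes several round trips.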

CDNs handle:

- Static assets (images, CSS, JavaScript)

- Video segments for streaming

- Entire static sites

They’re not just caching anymore either. Modern CDNs offer edge computing, bot protection, and web application firewall (WAF) capabilities that transform them into comprehensive front-end defenders.

Edge Computing’s Role in Request Handling

Edge computing pushes processing closer to users, meaning your requests can be handled before they ever reach the main application.

The edge can:

- Authenticate users

- Transform images on-the-fly

- Apply business logic

- Serve customized content

This isn’t just about speed (though milliseconds matter). Edge computing reduces load on your core infrastructure and creates opportunities for real-time processing that wasn’t possible before.

When your request hits an edge location, it might be completely fulfilled without ever touching the origin servers. Think personalized pricing based on location or device-specific content rendering – all happening within milliseconds at the network edge.
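As a sketch of that decision, here is a hypothetical edge function in Python: it answers a price lookup directly from data held at the edge and forwards everything else to the origin. The request shape, the `x-viewer-country` header, and the prices are assumptions for illustration; real edge platforms each have their own runtime and APIs.

```python
from typing import Callable, Dict

# Hypothetical data replicated to every edge location.
EDGE_PRICES = {"JP": "JPY 1,500", "US": "USD 10.00"}


def edge_handler(request: Dict, forward_to_origin: Callable[[Dict], Dict]) -> Dict:
    """Answer what the edge can answer locally; forward everything else to the origin."""
    # Hypothetical header: assumes the CDN tags each request with the viewer's country.
    country = request.get("headers", {}).get("x-viewer-country")
    if request.get("path") == "/price" and country in EDGE_PRICES:
        # Fulfilled at the edge: the origin servers never see this request.
        return {"status": 200, "body": EDGE_PRICES[country], "served_by": "edge"}
    return forward_to_origin(request)


def fake_origin(request: Dict) -> Dict:
    """Stand-in for the real origin servers."""
    return {"status": 200, "body": "...", "served_by": "origin"}


print(edge_handler({"path": "/price", "headers": {"x-viewer-country": "JP"}}, fake_origin))
print(edge_handler({"path": "/cart", "headers": {}}, fake_origin))
```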

Application Layer Processing

Web Servers vs. Application Servers

Ever wondered what’s happening behind the scenes when you click that button on a website? Your request lands in either a web server or an application server – but they’re not the same thing.

Web servers handle the basics – they serve up static content like HTML, CSS, and images. Think of Apache or Nginx as the bouncers at the club, deciding who gets in and directing traffic to the right places. They’re optimized for delivering files fast and can handle tons of concurrent connections.

Application servers, on the other hand, are where the real magic happens. They execute business logic, process dynamic content, and talk to databases. Examples like Tomcat, JBoss, or Node.js environments actually run your code and build responses on the fly.

Here’s the key difference:

| Web Servers | Application Servers |
|---|---|
| Static content | Dynamic processing |
| HTTP handling | Business logic execution |
| File delivery | Database interactions |
| Lightweight | Resource-intensive |

Many modern setups use both – web servers handling the front door and routing, with application servers doing the heavy lifting behind them.

Containerization and Microservices Architecture

Many teams have moved away from monolithic applications where one codebase rules them all. Instead, they break their systems into bite-sized pieces called microservices.

Each microservice handles one specific function – user authentication, payment processing, inventory management – you name it. They communicate through well-defined APIs, allowing teams to work independently and deploy frequently without breaking the whole system.

Containers are the perfect home for these microservices. Docker changed the game by packaging applications with everything they need to run – code, runtime, libraries, environment variables – into standardized units. This solves the infamous “works on my machine” problem.

Kubernetes then stepped in as the orchestra conductor, managing these containers at scale. It handles deployment, scaling, load balancing, and self-healing when things go wrong.
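Kubernetes’ self-healing comes from control loops that keep comparing desired state with actual state and acting on the difference. This toy Python reconciler, built around a hypothetical `ToyCluster`, shows the idea for replica counts only; real controllers watch the API server for changes, handle scheduling, and run health checks.

```python
import itertools
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class ToyCluster:
    desired_replicas: int
    running: List[str] = field(default_factory=list)
    _ids: Iterator[int] = field(default_factory=itertools.count)


def reconcile(cluster: ToyCluster) -> List[str]:
    """One pass of a control loop: make actual state match desired state."""
    actions = []
    while len(cluster.running) < cluster.desired_replicas:
        pod = f"web-{next(cluster._ids)}"
        cluster.running.append(pod)            # too few: start replacements
        actions.append(f"start {pod}")
    while len(cluster.running) > cluster.desired_replicas:
        pod = cluster.running.pop()            # too many: stop the newest
        actions.append(f"stop {pod}")
    return actions


cluster = ToyCluster(desired_replicas=3)
print(reconcile(cluster))        # starts web-0, web-1, web-2
cluster.running.remove("web-1")  # simulate a crashed container
print(reconcile(cluster))        # self-healing: starts web-3
```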

The benefits are massive:

- Deploy many times a day instead of once a quarter

- Scale individual components based on actual demand

- Isolate failures so one broken service doesn’t crash everything

- Let different teams use different tech stacks for different services

API Gateways and Service Mesh Implementations

When your application splits into dozens of microservices, you need traffic cops to manage the chaos. Enter API gateways and service meshes.

API gateways sit at the entrance to your microservices ecosystem. Products like Kong, Amazon API Gateway, or Apigee handle crucial front-door functions:

- Routing requests to the right service

- Load balancing traffic

- Rate limiting to prevent abuse

- Request/response transformation

- Authentication in one central place
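Rate limiting is often implemented with a token bucket: each client gets a bucket that refills at a steady rate, and each request spends one token. This is a minimal single-process Python sketch with hypothetical parameters; production gateways keep these counters per client, often in a shared store so every gateway node enforces the same limits.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity          # start full
        self.clock = clock              # injectable so the logic can be tested without sleeping
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False                    # a gateway would typically answer 429 Too Many Requests


# Hypothetical limit: 5 requests per second per client, bursts of up to 10.
bucket = TokenBucket(rate=5, capacity=10)
print(sum(bucket.allow() for _ in range(20)))  # how many back-to-back requests got through
```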

But what about service-to-service communication inside your network? That’s where service meshes shine. Tools like Istio, Linkerd, or Consul connect services through a network of proxies that handle:

- Secure service-to-service authentication

- Automatic encryption of traffic

- Detailed monitoring of request flows

- Circuit breaking to prevent cascading failures

- Canary deployments and traffic splitting
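Here is the circuit-breaking logic as a minimal Python sketch. In a mesh, the sidecar proxies apply this for you; the class below, its thresholds, and its fallback behavior are hypothetical and single-threaded, meant only to show the closed, open, and half-open states.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Closed -> open after `max_failures` consecutive failures; half-open after `reset_after` seconds."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None   # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after:
            return "half-open"                    # let a trial request through
        return "open"

    def call(self, fn: Callable[[], Any], fallback: Any = None) -> Any:
        if self.state == "open":
            return fallback                       # fail fast: don't pile onto a struggling service
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback
        self.failures = 0                         # any success closes the circuit
        self.opened_at = None
        return result
```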

The best part? All this happens without changing your application code. The mesh operates as a separate infrastructure layer, letting developers focus on business logic while ops teams handle connectivity patterns.

Request Authentication and Authorization

Security isn’t optional in modern web architecture. Every request needs to answer two critical questions:

- Authentication: Are you who you say you are?

- Authorization: Are you allowed to do what you’re trying to do?

For authentication, most systems have moved beyond simple username/password schemes to more robust approaches:

- OAuth 2.0 for delegated authorization, with OpenID Connect layered on top to handle authentication

- JWT (JSON Web Tokens) for stateless authentication

- Multi-factor authentication requiring two or more factors, such as something you know and something you have

- Single Sign-On (SSO) through providers like Okta or Auth0
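To show why JWTs enable stateless authentication, here is a minimal HS256 (HMAC-SHA256) signer and verifier built from Python’s standard library: any service holding the shared secret can verify a token without a session lookup. This is a sketch under simplifying assumptions (one hypothetical shared key, only the `exp` claim checked); in production, prefer a vetted JWT library and validate issuer and audience too.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url without padding, as JWTs use."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode()) for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if signature and expiry check out, otherwise None."""
    now = time.time() if now is None else now
    try:
        header_b64, claims_b64, sig_b64 = token.split(".")
        signing_input = f"{header_b64}.{claims_b64}".encode("ascii")
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return None                   # tampered token or wrong key
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(claims_b64))
    except ValueError:                    # malformed token (bad shape, base64, or JSON)
        return None
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        return None                       # only accept the algorithm we expect
    if not isinstance(claims, dict) or ("exp" in claims and now >= claims["exp"]):
        return None                       # expired, or not a claims object
    return claims


secret = b"hypothetical-shared-secret"
token = sign_jwt({"sub": "user-42", "exp": int(time.time()) + 900}, secret)
print(verify_jwt(token, secret))
```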

Once we know who you are, authorization kicks in. Modern systems implement:

- Role-Based Access Control (RBAC) assigning permissions to roles rather than individuals

- Attribute-Based Access Control (ABAC) making decisions based on user attributes, resource properties, and environmental conditions

- Policy engines like Open Policy Agent (OPA) that centralize authorization rules
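Here is a minimal RBAC check in Python with a hypothetical ABAC-style rule layered on top. The role names, permission strings, and the 500 refund limit are made up for illustration; a policy engine such as OPA would hold rules like these outside application code.

```python
from typing import Dict, Set

# Hypothetical roles and permissions: permissions attach to roles, users get roles.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"orders:read"},
    "support": {"orders:read", "orders:refund"},
    "admin": {"orders:read", "orders:refund", "orders:delete"},
}
USER_ROLES: Dict[str, Set[str]] = {"alice": {"support"}, "bob": {"viewer"}}


def is_allowed(user: str, permission: str) -> bool:
    """RBAC: a user may act if any of their roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in USER_ROLES.get(user, set()))


def can_refund(user: str, order_amount: float, during_business_hours: bool) -> bool:
    """ABAC-style rule: role check plus resource (amount) and environment (time) attributes."""
    return is_allowed(user, "orders:refund") and order_amount <= 500 and during_business_hours


print(is_allowed("alice", "orders:refund"), is_allowed("bob", "orders:refund"))  # True False
```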

The trend is moving toward Zero Trust architectures where nothing is automatically trusted, even inside the network perimeter. Every request is verified, every access is authenticated, and every privilege is minimized.

This layered approach ensures your application only processes requests from legitimate users performing legitimate actions.

Data Processing and Storage

Database Interactions in the Cloud

Ever wondered how Netflix knows exactly what you watched last week? Or how Amazon remembers your shopping cart even after you close the browser? It’s all in the databases.

In modern web architecture, databases have evolved beyond simple storage systems. They’re now distributed powerhouses spanning multiple servers across geographic regions. When a user request hits the application server, it typically needs to fetch or store data.

Cloud platforms offer various database options:

- Relational databases (Amazon RDS, Google Cloud SQL): Perfect for structured data with complex relationships

- NoSQL databases (DynamoDB, MongoDB Atlas): Handle semi-structured or schema-flexible data at massive scale

- NewSQL (CockroachDB, Google Spanner): Combine relational features and ACID transactions with horizontal scalability

The real magic happens in how these databases interact with application logic. Many applications use Object-Relational Mapping (ORM) to translate between application objects and database records, so developers don’t have to write raw SQL queries for every operation.
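To see what an ORM saves you from, here is the mapping done by hand in Python, with the standard `sqlite3` module standing in for a cloud database. The `User` dataclass and helper functions are hypothetical. ORMs such as SQLAlchemy or the Django ORM generate this kind of code for you; either way, the `?` placeholders keep user input out of the SQL text, which prevents SQL injection.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    id: int
    email: str


def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")  # in-memory database standing in for a cloud one
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
    return conn


def save_user(conn: sqlite3.Connection, email: str) -> User:
    # "?" placeholders: the driver sends the value separately from the SQL text.
    cur = conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
    conn.commit()
    return User(id=cur.lastrowid, email=email)


def get_user(conn: sqlite3.Connection, user_id: int) -> Optional[User]:
    row = conn.execute("SELECT id, email FROM users WHERE id = ?", (user_id,)).fetchone()
    return User(*row) if row else None  # map the database row onto an application object


conn = connect()
saved = save_user(conn, "ada@example.com")
print(get_user(conn, saved.id))  # User(id=1, email='ada@example.com')
```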

Caching Strategies for Performance Optimization

Database calls are expensive. That’s why smart developers don’t make them unless absolutely necessary.

Enter caching—the secret weapon for blazing-fast web applications. By storing frequently accessed data in memory, you can drastically cut response times. Here’s what works:

Multi-level caching is the gold standard:

- Browser caches for static assets

- CDN caches for shared content

- Application-level caches (Redis, Memcached) for dynamic data

- Database query caches for expensive computations

The best part? Managed cloud services take over much of the operational work. Amazon ElastiCache and Azure Cache for Redis handle provisioning, patching, and failover, and can be configured to scale as your traffic grows.

But there’s an art to effective caching. Cache invalidation (knowing when to refresh stale data) remains one of computing’s hardest problems. Keep entries too long, and users see outdated information. Invalidate too aggressively, and you lose the performance benefits.
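Here is a minimal Python sketch of the cache-aside pattern with a time-to-live (TTL), plus the common fix of invalidating on write. The `TTLCache` class and the dictionary standing in for a database are hypothetical; Redis offers the same expiry idea natively, for example with `SET key value EX seconds`.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """In-memory cache whose entries expire `ttl` seconds after being written."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._data: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]          # expired: treat as a miss
            return None
        return value

    def set(self, key: Hashable, value: Any) -> None:
        self._data[key] = (value, self.clock() + self.ttl)

    def invalidate(self, key: Hashable) -> None:
        self._data.pop(key, None)


def get_product(cache: TTLCache, db: Dict, product_id: str) -> Any:
    """Cache-aside read: try the cache, fall back to the database, then fill the cache."""
    product = cache.get(product_id)
    if product is None:
        product = db[product_id]         # stands in for an expensive database query
        cache.set(product_id, product)
    return product


def update_price(cache: TTLCache, db: Dict, product_id: str, price: float) -> None:
    """Write to the database, then invalidate so the next read fetches fresh data."""
    db[product_id] = {**db[product_id], "price": price}
    cache.invalidate(product_id)
```

If something updates the database without calling `invalidate`, readers keep seeing the old value until the TTL runs out. A very short TTL shrinks that window but sends most reads back to the database.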

Data Persistence and Storage Options

Not all data is created equal, and neither are storage solutions.

Cloud platforms offer tiered storage options that balance performance, durability, and cost:

| Storage Type | Use Case | Example Services |
|---|---|---|
| Block Storage | Virtual machines, databases | AWS EBS, Google Persistent Disks |
| Object Storage | Media files, backups, static assets | S3, Google Cloud Storage |
| File Storage | Shared application files | EFS, Azure Files |

Smart web architectures leverage multiple storage types. User profile pictures? Object storage. Transaction logs? Block storage with high IOPS. Shared configuration files? Distributed file systems.

Beyond simple storage, modern applications implement sophisticated data lifecycle policies. Hot data stays on premium storage for fast access, while cold data automatically moves to cheaper options. Some systems even implement “warm” tiers as intermediate steps.

Data replication adds another layer of complexity. Critical information often gets copied across regions for disaster recovery—ensuring your favorite apps stay online even if an entire data center goes down.

Behind the Scenes: Infrastructure that Powers It All

Virtual Machines vs. Serverless Computing

Ever wondered what’s actually running your favorite web apps? Two major players dominate the scene: virtual machines and serverless computing.

Virtual machines (VMs) are like getting your own apartment in a building. You control everything inside your space, but you’re paying rent even when you’re not home. VMs give you complete control over your computing environment—operating system, configurations, everything. But they keep running (and charging you) 24/7, even during low traffic periods.

Serverless computing? It’s more like paying for a hotel room only for the exact minutes you use it. Your code only executes when triggered by an event—like when a user submits a form. AWS Lambda, Azure Functions, and Google Cloud Functions handle all the infrastructure details for you.

| Virtual Machines | Serverless Computing |
|------------------|----------------------|
| Always running | Runs only when needed |
| Full OS control | Function-focused |
| Scaling you configure and manage | Automatic scaling |
| Predictable cost | Pay-per-execution |
| Higher maintenance | Minimal infrastructure maintenance |
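For a feel of the serverless model, here is a minimal AWS Lambda handler in Python for the form-submission example. It assumes the function sits behind API Gateway with proxy integration, so the form arrives as a JSON string in `event["body"]` and the returned dictionary becomes the HTTP response. The validation rule and message are hypothetical, and details such as base64-encoded bodies are ignored.

```python
import json


def lambda_handler(event, context):
    """Runs only when invoked, e.g. when API Gateway receives a form submission."""
    try:
        form = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        form = None
    if not isinstance(form, dict):
        return {"statusCode": 400, "body": json.dumps({"error": "expected a JSON object"})}
    email = form.get("email")
    if not email:
        return {"statusCode": 400, "body": json.dumps({"error": "email is required"})}
    # Hypothetical next step: store the signup or publish it to a queue for other services.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Thanks, {email}!"}),
    }


print(lambda_handler({"body": '{"email": "ada@example.com"}'}, context=None))
```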

Auto-scaling and Elastic Resources

Remember that nightmare scenario when your website crashed during a traffic spike? Auto-scaling prevents exactly that.

Auto-scaling dynamically adjusts your computing resources based on real-time demand. When traffic surges, new servers spin up automatically. When things quiet down, excess capacity disappears, saving you money.

Modern cloud platforms use sophisticated metrics to trigger these scaling events:

- CPU utilization crossing thresholds

- Memory consumption spikes

- Request queue length growth

- Network traffic patterns

The magic happens through scaling policies. Horizontal scaling adds more machines to your pool, while vertical scaling upgrades existing machines with more powerful resources.
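Many autoscalers use a target-tracking rule. Kubernetes’ Horizontal Pod Autoscaler, for example, documents its core formula as desired replicas = ceil(current replicas × current metric ÷ target metric). Here is that rule as a Python sketch of horizontal scaling; the min and max bounds are hypothetical, and the 10% tolerance band mirrors the HPA’s default.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Target tracking: scale replicas in proportion to how far the metric is from its target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas              # close enough: avoid flapping on small changes
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds


print(desired_replicas(4, current_metric=90, target_metric=60))  # CPU at 90% vs a 60% target: 6
```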

Cloud Provider Architectures Compared

Not all cloud providers are created equal. Each has architected their infrastructure with different priorities:

AWS emphasizes breadth of services. Their infrastructure spans dozens of regions, each made up of multiple isolated availability zones, with a networking layer that isolates resources while maintaining high availability. Their VPC (Virtual Private Cloud) architecture is widely treated as a reference point for cloud networking.

Google Cloud prioritizes their global network. They’ve built one of the largest private networks on earth, connecting their data centers with ultra-low latency. Their live migration technology lets them update underlying hardware without rebooting your VMs.

Azure bridges the gap for enterprises already invested in Microsoft technologies. Their infrastructure seamlessly integrates with existing Active Directory deployments and offers unique hybrid capabilities.

Infrastructure as Code (IaC) Benefits

Gone are the days of clicking through console interfaces to build servers. Infrastructure as Code transforms how we deploy cloud resources.

With IaC tools like Terraform, CloudFormation, or Pulumi, your entire infrastructure exists as code files in version control. This brings software development practices to infrastructure management:

- Version control tracks every change

- Peer reviews catch potential issues before deployment

- Automated testing validates infrastructure before it goes live

- Consistent environments eliminate configuration drift between development, staging, and production

The productivity gains are massive. Need 50 identical web servers across three regions? That’s a short loop or a count parameter in code, not hours of clicking. Need to update all of them? Change one variable, run one command.
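Tools like Terraform and Pulumi work declaratively: you describe the end state, and the tool computes a plan of what to create, change, or delete. Here is that planning step as a toy Python sketch over plain dictionaries. The resource names, regions, and instance types are hypothetical, and real tools diff against live provider APIs and stored state.

```python
from typing import Dict, List

Resources = Dict[str, Dict[str, str]]


def web_servers(count_per_region: int, regions: List[str], instance_type: str) -> Resources:
    """Declare identical servers in several regions with a loop instead of clicks."""
    return {
        f"web-{region}-{i}": {"region": region, "type": instance_type}
        for region in regions
        for i in range(count_per_region)
    }


def plan(desired: Resources, actual: Resources) -> Dict[str, List[str]]:
    """Compare declared state with what exists and list the changes needed."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(k for k in desired.keys() & actual.keys() if desired[k] != actual[k]),
        "delete": sorted(actual.keys() - desired.keys()),
    }


current = web_servers(2, ["us-east", "eu-west"], "small")
# Change one variable (the instance type) and preview the impact before applying it.
print(plan(web_servers(2, ["us-east", "eu-west"], "large"), current))
```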

But the real game-changer is disaster recovery. When your infrastructure definition lives as code, you can rebuild your entire environment from scratch in minutes or hours, not days or weeks.

Putting It All Together: A Complete Request Lifecycle

Visualizing the End-to-End Journey

Picture this: a user clicks “Login” on your website, and boom—a cascade of events unfolds across dozens of systems in milliseconds. Magic, right? Not really.

Here’s what actually happens:

- Browser Initiates Request: User clicks a button, browser packages up an HTTPS request

- DNS Resolution: Domain name gets translated to an IP address

- Connection Setup: A TCP handshake opens the connection, and a TLS handshake secures it

- CDN Check: Static assets? Serve from edge locations without bothering the origin server

- Load Balancer: Traffic director forwards the request to a healthy server using its balancing algorithm

- Web Server: Accepts the connection, hands off to application logic

- Application Server: Processes the request, applies business rules

- Database Query: Pulls or updates user data

- Response Generation: Assembles the response (HTML, JSON, etc.)

- Return Journey: Data flows back through the stack

- Browser Rendering: Paints the pixels on screen

This journey takes anywhere from 100ms (blazing fast) to several seconds (painfully slow).

Common Bottlenecks and How to Identify Them

You know that frustrating spinning wheel? Yeah, something’s stuck. These are the usual suspects:

- Network Latency: High ping times, especially in cross-continental requests

- Database Overload: Queries taking forever, connections maxed out

- Memory Leaks: Servers gradually slowing down until restart

- Inefficient Algorithms: That O(n²) code you wrote at 2am

- Third-Party Services: External APIs that decide to take a nap

Spotting these issues requires proper instrumentation. Set up distributed tracing (like Jaeger or Zipkin) to visualize request flows. Use application performance monitoring (APM) tools that highlight where time is being spent.

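The dead giveaway? Check your logs for requests whose response times sit far above your typical values. Percentiles such as p95 and p99 expose these outliers better than averages, which blend a few very slow requests into one unremarkable number.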
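Here is a small Python sketch of that check that compares percentiles rather than the mean. The `percentile` helper uses the simple nearest-rank method, and the log format (a list of dicts with an `ms` field) is a hypothetical stand-in for your real logs.

```python
import math
from typing import Dict, List, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)   # 1-based rank
    return ordered[max(rank, 1) - 1]


def slow_requests(log: List[Dict], threshold_ms: float) -> List[Dict]:
    """Entries slower than the threshold, e.g. the current p99."""
    return [entry for entry in log if entry["ms"] > threshold_ms]


latencies = [100] * 19 + [2400]
print(sum(latencies) / len(latencies))  # mean: 215.0, which hides the one 2.4 s request
print(percentile(latencies, 50), percentile(latencies, 99))  # 100 2400
```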

Performance Metrics that Matter

Skip vanity metrics. Focus on these instead:

- Time to First Byte (TTFB): How quickly your server starts responding

- Time to Interactive (TTI): When users can actually use your page

- Error Rate: Percentage of requests that fail

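- Apdex Score: A 0 to 1 measure of user satisfaction, where responses within a target time T count fully, responses between T and 4T count half, and slower ones count zero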
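The Apdex formula is simple enough to compute yourself: given a target threshold T (a value you choose per application), the score is (satisfied + tolerating / 2) / total. A minimal Python version:

```python
from typing import Sequence


def apdex(response_times_ms: Sequence[float], threshold_ms: float) -> float:
    """Apdex = (satisfied + tolerating / 2) / total, for a target threshold T."""
    if not response_times_ms:
        raise ValueError("no samples")
    satisfied = sum(1 for t in response_times_ms if t <= threshold_ms)
    tolerating = sum(1 for t in response_times_ms if threshold_ms < t <= 4 * threshold_ms)
    # Anything slower than 4T counts as frustrated and contributes nothing.
    return (satisfied + tolerating / 2) / len(response_times_ms)


print(apdex([100, 200, 300, 900, 2500], threshold_ms=500))  # 0.7
```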

Here’s a handy breakdown:

| Metric | Good | Acceptable | Poor | Impact |
|---|---|---|---|---|
| TTFB | <200ms | 200-500ms | >500ms | Initial impression |
| Page Load | <2s | 2-4s | >4s | Bounce rate |
| Error Rate | <0.1% | 0.1-1% | >1% | User trust |
| CPU Utilization | <50% | 50-80% | >80% | System stability |

Remember, optimizing the full request lifecycle isn’t about fixing everything—it’s about finding and fixing the slowest parts first. That’s where you’ll get the biggest wins.

Tracing a user request through modern web architecture reveals the incredible complexity behind even the simplest online interactions. From the moment you click a link, your request travels through multiple layers of infrastructure, from DNS resolution and connection setup through load balancers, application servers, and database systems, then back to your browser to be rendered. Each component plays a vital role in delivering the seamless digital experiences we’ve come to expect.

Understanding this end-to-end journey empowers developers and architects to build more resilient, scalable systems. Whether you’re optimizing front-end performance, implementing robust application logic, or designing efficient data storage solutions, seeing the bigger picture helps create better web experiences. Next time you use a web application, take a moment to appreciate the intricate dance of technologies working together across the cloud stack to fulfill your request.