Building a robust data engineering pipeline has become essential for organizations handling massive amounts of data daily. This guide walks you through creating a production-ready ETL pipeline that uses Apache Airflow for orchestration, Apache Spark for data processing, and DynamoDB as the destination store.

This tutorial is designed for data engineers, backend developers, and DevOps professionals who want to master the modern data stack and build scalable data solutions. You’ll get hands-on experience with real-world scenarios and practical implementation strategies.

We’ll dive deep into best practices for integrating Airflow and Spark, showing you how to set up data pipeline orchestration that can handle enterprise-scale workloads. You’ll also discover techniques for optimizing ETL performance and learn how to design a cloud data engineering architecture that grows with your needs.

By the end of this guide, you’ll have a complete understanding of how these three technologies work together to build big data processing solutions that are both reliable and efficient.

Understanding the Modern Data Engineering Stack

Key components of ETL pipelines in today’s data landscape

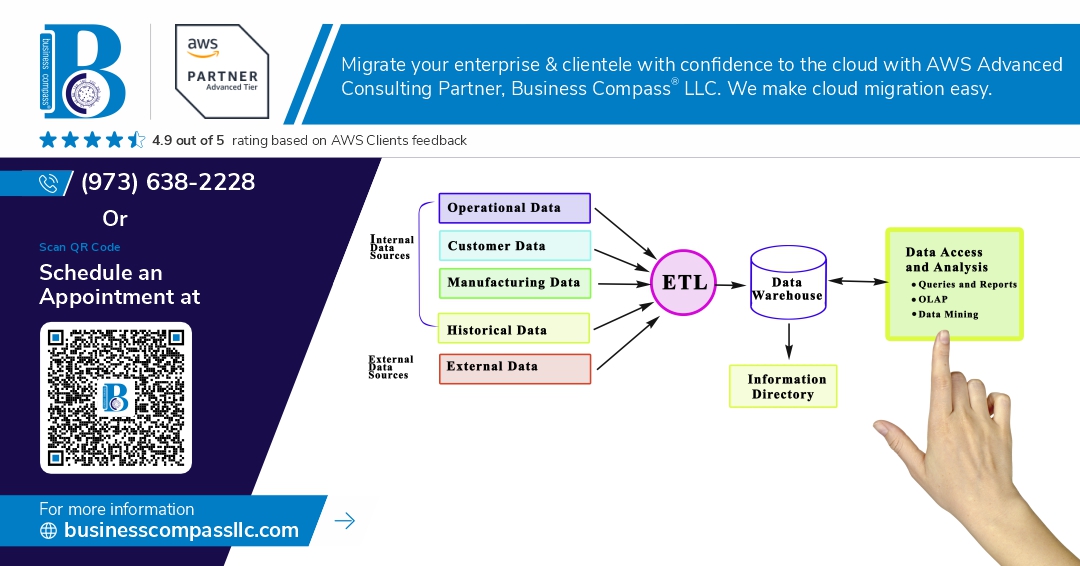

Modern data engineering pipelines rely on three critical components: orchestration tools for workflow management, processing engines for data transformation, and storage systems for data persistence. Orchestrators like Apache Airflow schedule and monitor pipeline execution, while distributed processing frameworks handle large-scale transformations. NoSQL databases and data warehouses serve as endpoints, creating a complete data engineering architecture that supports real-time and batch processing workflows.

Why Airflow, Spark, and DynamoDB form a powerful combination

This trio makes a strong data engineering pipeline by combining Airflow’s orchestration capabilities with Spark’s distributed processing power and DynamoDB’s scalable NoSQL storage. Airflow manages complex workflows and dependencies, Spark handles large data transformations across clusters, and DynamoDB provides low-latency reads and writes with automatic scaling. Together, they support end-to-end ETL orchestrated by Airflow while handling both structured and unstructured data.

Benefits of cloud-native data engineering solutions

Cloud-native data engineering architecture delivers automatic scaling, reduced infrastructure management, and pay-as-you-go pricing models. These solutions eliminate the need for hardware provisioning while providing built-in redundancy and disaster recovery. Modern data stack components integrate with the managed services of the major cloud providers, which makes it quicker to roll out performance improvements to your ETL jobs. Teams can focus on data logic rather than infrastructure maintenance, accelerating time-to-value for data-driven applications and analytics platforms.

Setting Up Apache Airflow for Pipeline Orchestration

Installing and Configuring Airflow in Your Environment

Getting Apache Airflow up and running requires careful environment setup to ensure smooth data pipeline orchestration. Start by creating a dedicated Python virtual environment and installing Airflow with pip, using the constraint file that matches your Airflow and Python versions. Configure the airflow.cfg file to specify your database backend: PostgreSQL or MySQL is a better choice than the default SQLite for production deployments. Initialize the metadata database using airflow db migrate (airflow db init on releases before Airflow 2.7) and create your admin user account. For production deployments, consider using Docker containers or Kubernetes to manage Airflow components including the webserver, scheduler, and workers. Configure your connection settings for external systems like databases and cloud services through the Airflow UI or environment variables.

Creating Your First DAG for Data Pipeline Management

Building your first Directed Acyclic Graph (DAG) starts with importing the necessary modules and defining basic DAG parameters, including the schedule, start date, and retry policy. Create a Python file in your DAGs folder and define tasks using operators like BashOperator, PythonOperator, or custom operators for specific pipeline needs. Structure your DAG with clear task dependencies using the >> operator or the set_upstream and set_downstream methods. Use consistent task names and documentation strings to keep your pipeline maintainable. Test your DAG with the airflow dags test command before deploying to production. Consider using TaskGroups to organize related tasks and improve their visual representation in the Airflow UI.
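
The steps above can be sketched as a small three-task DAG. This is a minimal illustration, assuming Airflow 2.4 or later in the 2.x line; the DAG id, task names, and the `extract`, `transform`, `load`, and `build_dag` functions are hypothetical. The Airflow imports sit inside `build_dag` so the task functions stay importable and unit-testable on a machine without Airflow.

```python
from datetime import datetime, timedelta

# Defaults applied to every task in the DAG (values are illustrative).
DEFAULT_ARGS = {
    "owner": "data-eng",  # hypothetical owner label
    "retries": 2,
    "retry_delay": timedelta(minutes=5),
}


def extract():
    """Stand-in for a real extract step; the return value would be stored in XCom."""
    return {"rows_extracted": 3}


def transform():
    """Stand-in for a transformation step."""
    return "transformed"


def load():
    """Stand-in for a load step."""
    return "loaded"


def build_dag():
    """Wire the three steps into a daily DAG."""
    from airflow import DAG
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id="example_etl",
        start_date=datetime(2024, 1, 1),
        schedule="@daily",  # releases before 2.4 use schedule_interval instead
        catchup=False,      # do not backfill runs between start_date and today
        default_args=DEFAULT_ARGS,
        doc_md="Example extract, transform, load pipeline.",
    ) as dag:
        t_extract = PythonOperator(task_id="extract", python_callable=extract)
        t_transform = PythonOperator(task_id="transform", python_callable=transform)
        t_load = PythonOperator(task_id="load", python_callable=load)

        # >> declares the dependency order: extract, then transform, then load.
        t_extract >> t_transform >> t_load
    return dag


# In a real DAG file, expose the DAG at module level so the scheduler finds it:
# dag = build_dag()
```

Once the file is in your DAGs folder with the last line uncommented, `airflow dags test example_etl 2024-01-01` should run all three tasks locally for that date.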

Best Practices for Scheduling and Monitoring Workflows

Effective scheduling requires understanding Airflow’s cron-based scheduling system and choosing appropriate intervals for your data pipeline orchestration needs. Use catchup=False for DAGs that shouldn’t backfill historical runs and set proper SLA monitoring for critical tasks. Implement comprehensive logging using Airflow’s built-in logging system and consider integrating with external monitoring tools like Prometheus or DataDog. Create meaningful alerts for task failures, SLA misses, and resource constraints. Use Airflow’s Variables and Connections features to manage configuration parameters and credentials securely. Monitor DAG performance through the web interface and set up email notifications for critical pipeline failures. Implement proper resource allocation and concurrency settings to prevent system overload.

Handling Dependencies and Error Management

Robust error handling starts with defining appropriate retry policies, timeout settings, and failure callbacks for each task in your pipeline. Use Airflow’s built-in retry mechanisms with exponential backoff and jitter to handle transient failures gracefully. Implement custom error handling logic using the on_failure_callback and on_retry_callback parameters. Create external task sensors for managing dependencies between different DAGs and use trigger rules like all_success, one_failed, or none_failed to control task execution flow. Set up proper alerting channels through email, Slack, or PagerDuty for immediate notification of critical failures. Use Airflow’s XCom feature carefully for passing small amounts of data between tasks while avoiding large data transfers that can impact performance.
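
As a rough sketch of the retry settings described above, the dictionary below uses real task arguments (`retries`, `retry_delay`, `retry_exponential_backoff`, `max_retry_delay`, `execution_timeout`, `on_failure_callback`) that you could pass as `default_args` to a DAG. The names `notify_failure`, `RETRY_ARGS`, and `nominal_backoff` are hypothetical, the values are illustrative, and the helper only approximates the wait times because Airflow adds jitter to the actual delays.

```python
from datetime import timedelta


def notify_failure(context):
    """Failure callback: Airflow passes the task context dict as the only argument."""
    ti = context.get("task_instance")
    task_id = getattr(ti, "task_id", "unknown")
    message = f"Task {task_id} failed: {context.get('exception')}"
    print(message)  # replace with a call to Slack, PagerDuty, or email
    return message


RETRY_ARGS = {
    "retries": 4,
    "retry_delay": timedelta(minutes=1),         # base delay before the first retry
    "retry_exponential_backoff": True,           # grow the delay on each retry
    "max_retry_delay": timedelta(minutes=10),    # upper bound on any single delay
    "execution_timeout": timedelta(hours=1),     # fail tasks that hang
    "on_failure_callback": notify_failure,
}


def nominal_backoff(retry_delay, max_retry_delay, retries):
    """Approximate wait before each retry: doubling each time, capped at the maximum."""
    return [min(retry_delay * (2 ** n), max_retry_delay) for n in range(retries)]
```

With a one-minute base delay and a ten-minute cap, `nominal_backoff` gives waits of about 1, 2, 4, and 8 minutes, after which every further retry waits the full ten minutes.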

Leveraging Apache Spark for Large-Scale Data Processing

Configuring Spark Clusters for Optimal Performance

Proper Spark cluster configuration determines whether your jobs run smoothly or crash under load. Start by sizing your cluster based on data volume and complexity: as a starting point, allocate 2-4 cores per executor with 4-8GB of memory each. Set spark.sql.adaptive.enabled=true to enable adaptive query execution (on by default since Spark 3.2), which re-optimizes joins at runtime and can reduce the number of shuffle partitions. Set spark.sql.adaptive.coalescePartitions.enabled=true so that small shuffle partitions are merged and per-task overhead stays low. For RDD-based workloads, set spark.default.parallelism to 2-3 times your total cores; DataFrame shuffles are governed by spark.sql.shuffle.partitions instead. Monitor executor memory usage and tune spark.memory.fraction (default 0.6), which sets the share of the heap available for execution and storage, between 0.6 and 0.8 depending on your caching needs.
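
To make the sizing arithmetic concrete, here is a small helper that turns a cluster shape into Spark settings. It is a sketch under stated assumptions: the function name `size_executors` is hypothetical, and reserving one core and 1 GB per node, one executor slot for the driver, and 10% of each container for memory overhead are rules of thumb rather than Spark requirements.

```python
def size_executors(nodes, cores_per_node, mem_gb_per_node,
                   cores_per_executor=4, overhead_fraction=0.10):
    """Derive executor settings from the cluster shape (reservations are assumptions)."""
    usable_cores = cores_per_node - 1      # leave 1 core per node for the OS and daemons
    usable_mem_gb = mem_gb_per_node - 1    # leave 1 GB per node for the OS
    executors_per_node = usable_cores // cores_per_executor
    total_executors = executors_per_node * nodes - 1  # leave one slot for the driver
    if executors_per_node < 1 or total_executors < 1:
        raise ValueError("cluster is too small for the requested executor size")

    # Each executor container holds the JVM heap plus off-heap overhead.
    container_gb = usable_mem_gb / executors_per_node
    heap_gb = int(container_gb * (1 - overhead_fraction))
    total_cores = total_executors * cores_per_executor

    return {
        "spark.executor.instances": str(total_executors),
        "spark.executor.cores": str(cores_per_executor),
        "spark.executor.memory": f"{heap_gb}g",
        "spark.default.parallelism": str(2 * total_cores),  # 2-3x total cores
        "spark.sql.adaptive.enabled": "true",
        "spark.sql.adaptive.coalescePartitions.enabled": "true",
    }


conf = size_executors(nodes=5, cores_per_node=16, mem_gb_per_node=32)
```

For five nodes with 16 cores and 32 GB each, this yields 14 executors with 4 cores and a 9 GB heap apiece, and a default parallelism of 112. Each key and value can be passed to `SparkSession.builder.config(key, value)` or as `--conf key=value` on `spark-submit`.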

Writing Efficient Data Transformation Jobs

Efficient Spark transformations require understanding lazy evaluation and partition awareness. Use built-in functions from pyspark.sql.functions instead of UDFs whenever possible, because the Catalyst optimizer can reason about them while a Python UDF is opaque to it. Cache DataFrames using .cache() or .persist() when they are accessed multiple times, but remember to call .unpersist() afterward. Avoid wide transformations like groupBy and join early in your pipeline. Instead, filter data first using .filter() to reduce dataset size. When joining tables, mark the smaller dataset with broadcast() so Spark can use a broadcast hash join and avoid shuffling the larger table. Apply column pruning throughout your pipeline: select only the required columns before transformations to minimize memory usage and network I/O.
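
The ordering advice above (filter, then prune columns, then join against a broadcast table, then aggregate) might look like the following. This is only a sketch: `build_order_report` and every column name are hypothetical, and both arguments are assumed to be `pyspark.sql.DataFrame` objects. It uses the DataFrame `hint("broadcast")` method, which requests the same broadcast join as wrapping the table in `broadcast()`.

```python
def build_order_report(orders, customers):
    """Aggregate order amounts per region.

    `orders` and `customers` are assumed to be pyspark.sql.DataFrame objects;
    all column names here are illustrative.
    """
    recent = (
        orders
        .filter("order_date >= '2024-01-01'")          # narrow: drop rows first
        .select("order_id", "customer_id", "amount")   # keep only needed columns
    )

    # Ask Spark to ship the small dimension table to every executor
    # instead of shuffling the large fact table.
    small_dim = customers.select("customer_id", "region").hint("broadcast")

    joined = recent.join(small_dim, on="customer_id", how="left")

    # The wide transformation runs last, on the already-reduced data.
    return joined.groupBy("region").sum("amount")
```

Because Spark evaluates lazily, nothing runs until an action such as `.show()` or a write is called on the returned DataFrame; calling `.explain()` on it lets you check whether the plan actually contains a broadcast hash join.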

Memory Management and Optimization Techniques

Memory management makes or breaks Spark performance in production ETL jobs. Enable event logging with --conf spark.eventLog.enabled=true so you can review per-task garbage collection time and memory metrics in the Spark History Server after a job finishes. As a starting point, set spark.executor.memory to roughly 70% of container memory, leaving room for spark.executor.memoryOverhead and other off-heap usage. Use the MEMORY_AND_DISK_SER storage level for large cached datasets to reduce memory pressure (this is a Scala/Java level; PySpark serializes stored Python objects regardless, so MEMORY_AND_DISK is the equivalent choice there). Tune spark.sql.shuffle.partitions based on data size: the default of 200 suits small datasets, while terabyte-scale processing often needs 2000 or more. Enable dynamic allocation with spark.dynamicAllocation.enabled=true, together with the external shuffle service or shuffle tracking, to scale executors with workload demand and reduce costs during idle periods.
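
One way to pick a value for spark.sql.shuffle.partitions is to divide the expected shuffle volume by a target partition size. The helper below is a sketch: `suggest_shuffle_partitions` is a hypothetical name, and the 128 MB target is a common rule of thumb, not a Spark default.

```python
import math


def suggest_shuffle_partitions(shuffle_bytes, target_partition_mb=128, minimum=200):
    """Estimate spark.sql.shuffle.partitions from the expected shuffle volume.

    The 128 MB target is a rule-of-thumb assumption; the minimum matches
    Spark's default of 200 partitions.
    """
    target_bytes = target_partition_mb * 1024 * 1024
    return max(minimum, math.ceil(shuffle_bytes / target_bytes))


small_job = suggest_shuffle_partitions(10 * 1024**3)   # about 10 GB of shuffle data
large_job = suggest_shuffle_partitions(1024**4)        # about 1 TB of shuffle data
```

Here the 10 GB job stays at the default of 200 partitions, while the 1 TB job comes out at 8192, in line with the "2000 or more" guidance for terabyte-scale work. Treat the result as a first guess and adjust it after checking task sizes in the Spark UI.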

Implementing DynamoDB as Your Data Destination

Designing DynamoDB tables for your use case

Creating effective DynamoDB table schemas requires careful partition key selection to distribute data evenly across nodes. Choose high-cardinality attributes that avoid hot partitions, and design composite sort keys to support your query patterns. Consider access patterns early – DynamoDB performs best when you know exactly how data will be retrieved, making single-table design approaches often more efficient than traditional relational modeling.
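
As an illustration of composite keys, the sketch below builds a partition key and sort key for an order item and shows one way to spread a hot logical key over several partition key values (often called write sharding). The attribute names `pk` and `sk`, the `CUSTOMER#` and `ORDER#` prefixes, and both function names are hypothetical conventions, not anything DynamoDB requires.

```python
import hashlib


def order_keys(customer_id, order_date, order_id):
    """Composite keys: all of a customer's orders share a partition, sorted by date."""
    return {
        "pk": f"CUSTOMER#{customer_id}",
        "sk": f"ORDER#{order_date}#{order_id}",
    }


def sharded_pk(base_key, item_id, shards=10):
    """Spread one hot logical key across `shards` partition key values.

    The shard is derived from a hash of the item id, so the same item
    always maps to the same shard.
    """
    digest = hashlib.sha256(item_id.encode("utf-8")).hexdigest()
    shard = int(digest, 16) % shards
    return f"{base_key}#{shard}"


keys = order_keys("42", "2024-05-01", "A1")
```

With this layout, a query on `pk = "CUSTOMER#42"` with a sort key condition such as `begins_with(sk, "ORDER#2024-05")` returns that customer's orders for May 2024 in date order. The trade-off of sharding is that reading everything under the logical key means querying each shard and merging the results.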

Optimizing write operations for high throughput

Batch write operations significantly improve ETL performance by reducing API calls and network overhead. Use the batch_writer() context manager in boto3, which buffers items into batches and automatically resends any unprocessed items. Implement exponential backoff for throttled requests, and consider using DynamoDB’s on-demand billing mode for unpredictable ETL workloads. Parallel writes across multiple processes help you use all of your provisioned capacity, but cap the concurrency so that you do not exceed it and trigger throttling.
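
A minimal sketch of the batch write pattern follows. It assumes `table` is a boto3 DynamoDB `Table` resource (for example `boto3.resource("dynamodb").Table("orders")`, where the table name is hypothetical) and that the table's key attributes are called `pk` and `sk`. The `to_dynamo` helper is included because boto3 rejects Python floats and expects `Decimal` values for numbers.

```python
from decimal import Decimal


def to_dynamo(value):
    """Recursively convert floats to Decimal, which boto3 requires for numbers."""
    if isinstance(value, float):
        return Decimal(str(value))
    if isinstance(value, dict):
        return {k: to_dynamo(v) for k, v in value.items()}
    if isinstance(value, list):
        return [to_dynamo(v) for v in value]
    return value


def write_items(table, items):
    """Write items through boto3's batch writer and return how many were submitted.

    `table` is assumed to be a boto3 DynamoDB Table resource whose key
    attributes are named pk and sk (hypothetical names).
    """
    written = 0
    # overwrite_by_pkeys de-duplicates buffered items that share the same key,
    # which a single BatchWriteItem request would otherwise reject.
    with table.batch_writer(overwrite_by_pkeys=["pk", "sk"]) as batch:
        for item in items:
            batch.put_item(Item=to_dynamo(item))
            written += 1
    return written
```

Passing the table in as an argument keeps the function easy to test with a stand-in object, and lets the calling Airflow task decide which credentials and region to use.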

Setting up proper indexing strategies

Global Secondary Indexes (GSIs) enable flexible query patterns beyond your main table’s primary key structure. Create sparse indexes for optional attributes to reduce storage costs and improve query performance. Local Secondary Indexes work well for alternative sort orders on the same partition key, but they can only be defined when the table is created. Plan your index strategy during ETL pipeline design: adding a GSI to an existing table triggers a backfill of the existing items, which takes time on large tables and can affect production performance.
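
For reference, the request that adds a GSI to an existing table has the shape sketched below. The helper name `gsi_create_request` and the table, index, and attribute names are hypothetical; the sketch assumes string key attributes and an on-demand table, since a provisioned table would also need a `ProvisionedThroughput` entry inside `Create`.

```python
def gsi_create_request(table_name, index_name, hash_attr, range_attr=None,
                       projection="KEYS_ONLY"):
    """Build keyword arguments for the DynamoDB UpdateTable call that adds a GSI.

    Assumes string ("S") key attributes and an on-demand (pay-per-request) table.
    """
    key_schema = [{"AttributeName": hash_attr, "KeyType": "HASH"}]
    attribute_definitions = [{"AttributeName": hash_attr, "AttributeType": "S"}]
    if range_attr:
        key_schema.append({"AttributeName": range_attr, "KeyType": "RANGE"})
        attribute_definitions.append({"AttributeName": range_attr, "AttributeType": "S"})

    return {
        "TableName": table_name,
        "AttributeDefinitions": attribute_definitions,
        "GlobalSecondaryIndexUpdates": [
            {
                "Create": {
                    "IndexName": index_name,
                    "KeySchema": key_schema,
                    # KEYS_ONLY keeps the index small; use ALL or INCLUDE if
                    # queries need more attributes.
                    "Projection": {"ProjectionType": projection},
                }
            }
        ],
    }


request = gsi_create_request("orders", "status-index", "status", "updated_at")
```

The result can be passed to `boto3.client("dynamodb").update_table(**request)`. Only items that actually carry the `status` attribute appear in the index, which is what makes an index on an optional attribute sparse.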

Managing costs and performance monitoring

Enable DynamoDB auto-scaling to handle variable ETL loads without over-provisioning capacity. Use CloudWatch metrics to monitor consumed capacity, throttled requests, and hot partitions. Store an expiry timestamp on each item, as a Number attribute holding Unix epoch seconds, so that DynamoDB’s TTL feature can delete stale data automatically. Regular capacity planning based on historical usage patterns helps optimize costs while maintaining SLA requirements for your pipeline.
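
DynamoDB’s TTL feature expects the expiry time as a number of seconds since the Unix epoch. A minimal sketch of stamping items before they are written is shown below; the attribute name `expires_at` and the function name `with_ttl` are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def with_ttl(item, retention_days, now=None):
    """Return a copy of the item with an expiry timestamp in Unix epoch seconds."""
    now = now or datetime.now(timezone.utc)
    expires = now + timedelta(days=retention_days)
    return {**item, "expires_at": int(expires.timestamp())}


fixed_now = datetime(2024, 1, 1, tzinfo=timezone.utc)
stamped = with_ttl({"pk": "CUSTOMER#42"}, retention_days=30, now=fixed_now)
```

TTL must also be switched on for the table and pointed at the same attribute, for example with the `update_time_to_live` call on the boto3 DynamoDB client. Expired items are removed in the background rather than at the exact expiry second, so readers that need strict cutoffs should still filter on the timestamp.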

Building the Complete ETL Pipeline

Connecting All Components Seamlessly

Building a robust ETL pipeline with Apache Airflow requires careful coordination between Airflow DAGs, Spark jobs, and DynamoDB operations. Use a dedicated Airflow operator for each component: SparkSubmitOperator to trigger Spark jobs, and custom operators for DynamoDB interactions. Store connection details and authentication credentials in Airflow’s connection management system to ensure secure communication between services.
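
A sketch of how the Spark step could be declared is shown below. It assumes the apache-airflow-providers-apache-spark package is installed and that a connection named `spark_default` exists; the task id, the script path, the `--run-date` argument, and both function names are hypothetical. The operator arguments are built by a plain function so they can be inspected without Airflow installed.

```python
def spark_submit_kwargs(run_date):
    """Arguments for SparkSubmitOperator (path and task id are illustrative)."""
    return {
        "task_id": "transform_orders",
        "application": "/opt/jobs/transform_orders.py",  # hypothetical script path
        "conn_id": "spark_default",                      # Airflow connection to the cluster
        "conf": {
            "spark.sql.adaptive.enabled": "true",
            "spark.dynamicAllocation.enabled": "true",
        },
        # Passed to the Spark script as command-line arguments.
        "application_args": ["--run-date", run_date],
    }


def make_spark_task(dag, run_date="{{ ds }}"):
    """Attach the Spark job to an existing DAG.

    The default run_date is an Airflow template that renders to the run's
    logical date, assuming application_args is a templated field in your
    provider version.
    """
    from airflow.providers.apache.spark.operators.spark_submit import SparkSubmitOperator

    return SparkSubmitOperator(dag=dag, **spark_submit_kwargs(run_date))


example = spark_submit_kwargs("2024-05-01")
```

A downstream task that writes to DynamoDB can then be chained after it with the `>>` operator, using credentials taken from an Airflow connection rather than hard-coded in the DAG file.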

Data Validation and Quality Checks

Implement comprehensive data quality checks at each pipeline stage to maintain data integrity in your modern data stack. Create Spark-based validation functions that check for null values, data type consistency, and business rule compliance before writing to DynamoDB. Use Airflow’s XCom feature to pass validation results between tasks, enabling downstream tasks to make decisions based on data quality metrics. Set up automated alerts when data quality thresholds are breached.
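
The decision logic behind such a quality gate can be quite small. In the sketch below, the row count and per-column null counts are assumed to come from a Spark aggregation earlier in the pipeline; the function names, thresholds, and task ids are all hypothetical. The report is a small dictionary, which makes it suitable for passing through XCom.

```python
def evaluate_quality(total_rows, null_counts, max_null_ratio=0.01, min_rows=1):
    """Turn raw counts into a small pass/fail report.

    `null_counts` maps column name to number of nulls; thresholds are illustrative.
    """
    failures = []
    if total_rows < min_rows:
        failures.append(f"row count {total_rows} below minimum {min_rows}")
    for column, nulls in sorted(null_counts.items()):
        ratio = nulls / total_rows if total_rows else 1.0
        if ratio > max_null_ratio:
            failures.append(
                f"{column}: null ratio {ratio:.2%} exceeds {max_null_ratio:.2%}"
            )
    return {"passed": not failures, "total_rows": total_rows, "failures": failures}


def choose_next_task(report):
    """Return the task id to run next, in the style of a BranchPythonOperator callable."""
    return "load_to_dynamodb" if report["passed"] else "alert_data_quality"


report = evaluate_quality(1000, {"customer_id": 0, "amount": 50})
next_task = choose_next_task(report)
```

In this example 5% of the `amount` values are null, which exceeds the 1% threshold, so the report fails and the branch routes to the alerting task instead of the DynamoDB load.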

Error Handling and Retry Mechanisms

Design resilient data engineering pipeline workflows with intelligent error handling and retry logic. Configure Airflow task retries with exponential backoff for transient failures, while implementing custom failure handlers for permanent errors. Create separate failure notification channels for different error types – data quality issues versus infrastructure failures require different response strategies. Use Airflow’s trigger rules to control task execution flow when upstream tasks fail.

Testing Your Pipeline End-to-End

Establish a testing strategy for your Airflow and Spark integration that covers unit, integration, and end-to-end scenarios. Create test datasets that simulate various data conditions and edge cases, and run them through your complete pipeline in isolated environments. Use Airflow’s testing utilities to validate DAG structure and task dependencies, and add automated regression tests that verify data transformations and DynamoDB write operations produce the expected results across different data volumes.

Performance Optimization and Scaling Strategies

Identifying bottlenecks in your data pipeline

Spotting performance issues in your ETL pipeline requires monitoring key metrics across Airflow, Spark, and DynamoDB components. Watch for slow DAG execution times, memory spikes in Spark jobs, and DynamoDB throttling errors. Common bottlenecks include inefficient data partitioning, undersized cluster resources, poorly optimized SQL queries, and network latency between services. Use Airflow’s built-in metrics, Spark UI monitoring, and CloudWatch logs to track resource usage patterns and identify where your pipeline slows down.

Auto-scaling configurations for variable workloads

Modern data engineering pipeline architectures benefit from dynamic scaling to handle fluctuating data volumes efficiently. Run Airflow with the KubernetesExecutor, which launches a separate worker pod for each task, or run workers on a container service such as ECS with autoscaling tied to task queue depth. Set up Spark on EMR with managed scaling or automatic scaling policies that adjust cluster size based on pending applications and resource utilization. For DynamoDB, enable auto-scaling on read and write capacity units to accommodate traffic spikes. These configurations let your pipeline adapt to workload changes without manual intervention.

Cost optimization techniques

Reducing expenses in your data stack involves strategic resource allocation and smart scheduling. Run non-critical, fault-tolerant Spark jobs on spot instances to save up to 90% on compute costs compared with on-demand pricing. Schedule heavy processing during off-peak hours, when spot capacity tends to be cheaper and easier to obtain and batch jobs compete less with interactive workloads. Use DynamoDB on-demand billing for unpredictable workloads and reserved capacity for consistent traffic patterns. Implement data lifecycle policies to automatically archive old data to cheaper storage tiers. Monitor your Spark jobs to right-size clusters and avoid over-provisioning resources that drive up monthly bills.

Building a robust ETL pipeline using Airflow, Spark, and DynamoDB gives you the power to handle massive amounts of data while keeping everything organized and running smoothly. You’ve seen how Airflow acts as your conductor, making sure each step happens at the right time, while Spark does the heavy lifting with lightning-fast data processing. DynamoDB then serves as your reliable destination, storing your transformed data and making it instantly accessible for your applications.

The real magic happens when you put all these pieces together and fine-tune them for your specific needs. Start small with a basic pipeline, test everything thoroughly, and then scale up as your data grows. Remember to monitor your performance metrics and don’t be afraid to adjust your configurations as you learn what works best for your use case. This tech stack isn’t just about handling today’s data – it’s about building a foundation that can grow with your business and adapt to whatever data challenges come next.