Machine learning models that sit unused in notebooks don’t create business value. MLOps bridges the gap between data science experiments and real-world applications by streamlining how you build and deploy machine learning models and keep them running reliably at scale.

This guide is designed for data scientists, ML engineers, and DevOps professionals who want to move beyond proof-of-concepts and create production-ready machine learning operations workflows on Amazon Web Services.

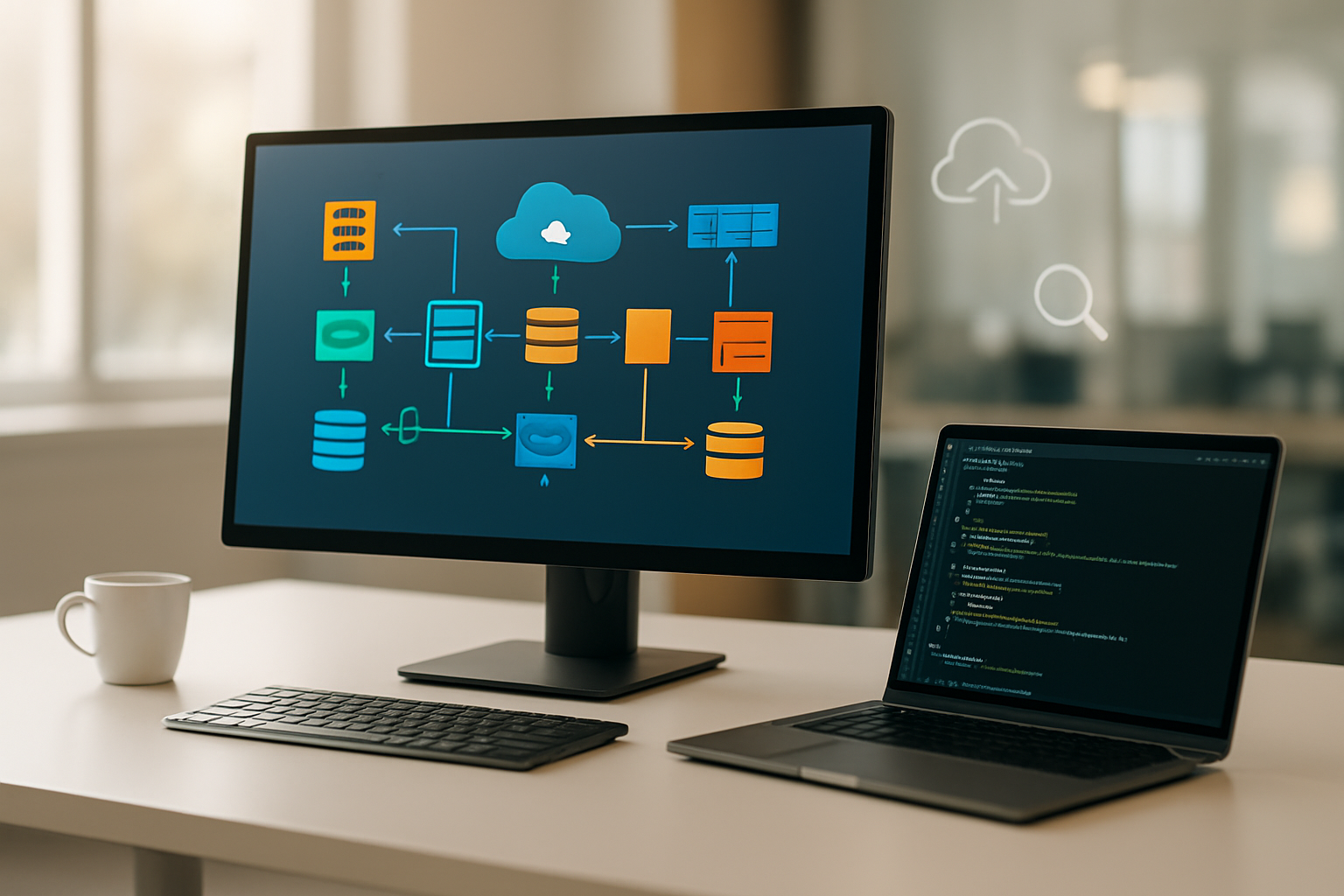

You’ll learn how to build end-to-end MLOps pipelines with Amazon SageMaker and other AWS services to automate model training, validation, and deployment. We’ll walk through creating your first ML pipeline on AWS from scratch, then explore strategies for scaling models to enterprise workloads while keeping them performant and reliable in production.

Understanding MLOps and Its Critical Role in Modern AI

Core principles that differentiate MLOps from traditional DevOps

MLOps brings unique challenges that traditional DevOps wasn’t designed to handle. DevOps focuses on shipping code whose behavior is fully determined by what developers write. Machine learning operations, by contrast, deal with models whose behavior is learned from data and can drift over time. The most fundamental difference lies in data dependency: ML models are only as good as the data they’re trained on, which makes data versioning and quality monitoring critical components of any MLOps pipeline.

Traditional software is usually judged as either correct or incorrect for a given input, but ML models exist on a performance spectrum. A model might work well in development but gradually degrade in production as real-world data patterns shift. This reality demands continuous monitoring and retraining workflows that go well beyond standard application monitoring.

MLOps best practices also emphasize reproducibility in ways that go beyond traditional DevOps. Every model training run must be traceable, from the exact dataset version to hyperparameters and infrastructure configurations. This level of detail ensures that successful experiments can be replicated and poor-performing models can be debugged effectively.

The experimentation aspect also sets MLOps apart. Data scientists may run hundreds of experiments to find a good model, which requires robust experiment tracking and model registry systems. Traditional DevOps rarely involves this much iterative development and side-by-side comparison of alternative approaches.

Key benefits of implementing MLOps in your organization

Organizations that adopt MLOps are typically able to deploy machine learning models faster and more often. The most immediate benefit is speed: teams can often move from model development to production deployment in days rather than months. This acceleration happens because MLOps standardizes the deployment process and eliminates the manual handoffs that typically slow down ML projects.

Risk reduction represents another major advantage. Proper MLOps practices include automated testing for data quality, model performance, and integration issues. These safeguards catch problems before they reach production, preventing costly failures and maintaining user trust in AI-powered features.

Machine learning production environments become more reliable when MLOps principles guide their design. Automated monitoring detects model drift early, triggering retraining workflows before performance degrades noticeably. This proactive approach keeps AI applications running smoothly without constant manual intervention.

Cost optimization emerges naturally from MLOps implementation. Automated resource scaling ensures compute resources match actual demand, while experiment tracking prevents duplicate work. Teams can identify which approaches work best and invest their time accordingly, rather than repeating failed experiments.

Collaboration between data science and engineering teams improves significantly with shared MLOps tools and processes. Everyone speaks the same language when discussing model deployment, monitoring, and maintenance, reducing friction and improving project outcomes.

Common challenges solved by effective MLOps practices

Model drift is one of the most persistent challenges in machine learning production environments. Real-world data constantly evolves, causing even well-performing models to become less accurate over time. An effective MLOps pipeline includes automated drift detection and response mechanisms, which keep models current with far less manual oversight.

Data quality issues plague many ML projects, often going undetected until they cause significant problems. MLOps practices establish data validation pipelines that check for schema changes, missing values, and statistical anomalies before they can corrupt model training or inference. These automated checks prevent garbage-in-garbage-out scenarios that can destroy user trust.

Reproducibility problems largely go away when organizations implement proper MLOps workflows. Teams can recreate any model version with confidence, debug issues systematically, and scale successful experiments. This capability becomes essential when regulatory compliance requires explaining how a model was built and why it made a decision, or when troubleshooting production incidents.

Scaling bottlenecks that limit ML adoption get resolved through MLOps automation. Manual deployment processes that work for one or two models break down completely when organizations try to manage dozens of models across multiple environments. MLOps best practices standardize these processes, enabling teams to scale their ML operations horizontally without proportional increases in operational overhead.

Human error in deployment and monitoring gets minimized through automation and standardized processes. Manual steps in ML workflows create opportunities for mistakes that can be difficult to detect and expensive to fix. MLOps replaces these error-prone manual processes with automated, tested workflows that perform consistently.

Essential Components of a Robust MLOps Pipeline

Version Control Systems for Data, Models, and Code

Managing machine learning projects requires tracking changes across three critical components: datasets, model artifacts, and source code. Traditional Git repositories work well for code versioning, but ML projects demand specialized tools that handle large binary files and complex data lineages.

Data versioning becomes essential when dealing with evolving datasets. Tools like DVC (Data Version Control) and Amazon S3 Versioning help track dataset changes, enabling teams to reproduce experiments with specific data snapshots. This prevents the common scenario where a model behaves differently because someone updated the training data without documenting the change.

Model versioning captures trained model artifacts, hyperparameters, and metadata. MLflow and Amazon SageMaker Model Registry provide centralized storage for model versions, making it easy to compare performance metrics across iterations and roll back to previous versions when needed.

Code versioning extends beyond basic Git practices to include ML-specific configurations, training scripts, and deployment code. MLOps pipelines integrate with Git workflows, automatically triggering builds when code changes occur.

The key is establishing clear naming conventions and tagging strategies that connect data versions with corresponding model versions and code commits. This creates an audit trail that makes debugging and compliance much more manageable.
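Here is a minimal sketch of such a tagging convention. The hypothetical helper below hashes the exact dataset bytes and pairs that hash with a Git commit and a model version. The tag names (`model-version`, `data-sha256`, `git-commit`) are illustrative assumptions, not an AWS or DVC requirement. You could attach the result as S3 object tags or as custom metadata on a registered model.

```python
import hashlib
import json


def sha256_of_bytes(data: bytes) -> str:
    """Content hash that pins the exact dataset snapshot used for training."""
    return hashlib.sha256(data).hexdigest()


def build_lineage_tags(dataset: bytes, git_commit: str, model_version: str) -> dict:
    """Tie one model version to the data snapshot and code commit that produced it.

    Key names are an illustrative convention, not a required format.
    """
    return {
        "model-version": model_version,
        "data-sha256": sha256_of_bytes(dataset),
        "git-commit": git_commit,
    }


tags = build_lineage_tags(b"id,label\n1,0\n2,1\n", "3f2a9c1", "v1.4.0")
print(json.dumps(tags, indent=2))
```

Hashing the content rather than recording a file name means that two runs with the same tags are guaranteed to have seen byte-identical data.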

Automated Testing Frameworks for Machine Learning Models

Machine learning models require different testing approaches compared to traditional software. While unit tests validate individual functions, ML testing focuses on data quality, model behavior, and performance degradation over time.

Data validation tests check for schema consistency, missing values, and distribution shifts. Great Expectations and Deequ (an open-source, Apache Spark-based library from AWS Labs) provide frameworks for defining data quality rules and automatically flagging anomalies in incoming datasets. These tests catch issues before they impact model training or inference.

Model performance tests validate that models meet accuracy thresholds on validation datasets. These tests run automatically during the CI/CD process, preventing underperforming models from reaching production. Setting up regression tests that compare new model versions against baseline performance helps maintain quality standards.
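A regression test like this can be a plain function that CI calls before promotion. The sketch below assumes two thresholds: an accuracy floor of 0.80, and a maximum drop of 0.01 versus the baseline on any tracked metric. The `EvalResult` type and the metric names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Hypothetical container for offline evaluation metrics."""
    accuracy: float
    auc: float


def passes_quality_gate(candidate: EvalResult, baseline: EvalResult,
                        min_accuracy: float = 0.80,
                        max_regression: float = 0.01) -> bool:
    """Fail if the candidate misses an absolute floor or regresses vs. the baseline."""
    if candidate.accuracy < min_accuracy:
        return False
    # Tolerate noise-level drops, but block regressions larger than max_regression.
    for metric in ("accuracy", "auc"):
        if getattr(candidate, metric) < getattr(baseline, metric) - max_regression:
            return False
    return True


print(passes_quality_gate(EvalResult(0.86, 0.91), baseline=EvalResult(0.85, 0.90)))
```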

Integration tests verify that models work correctly within the broader system architecture. This includes testing API endpoints, checking inference latency, and validating output formats. AWS CodePipeline can orchestrate these tests as part of the deployment workflow.

Behavioral tests examine model responses to edge cases and adversarial inputs. These tests help identify potential bias issues and ensure models behave reasonably when encountering unexpected data patterns.

Continuous Integration and Deployment Workflows

MLOps CI/CD workflows differ from traditional software deployment because they involve model training, validation, and deployment steps. The pipeline must handle both code changes and new data, making the process more complex but also more powerful.

Continuous integration starts when developers push code changes or when new training data becomes available. A push to a source repository such as GitHub or AWS CodeCommit, or the arrival of new data in S3, can start a pipeline that runs data validation tests, retrains models if necessary, and executes the full test suite. This ensures that every change maintains system integrity.

Model deployment strategies require careful consideration of downtime and performance impact. Blue-green deployments stand up a new model alongside the production version so it can be validated before traffic switches over, while canary releases gradually shift traffic to new models. Amazon SageMaker endpoints support both patterns natively through deployment guardrails.

Automated rollback mechanisms become critical when model performance degrades in production. The MLOps pipeline should monitor key metrics and automatically revert to previous model versions when performance drops below acceptable thresholds.
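Automated rollback should not react to a single noisy reading. One way to prevent this is to require several consecutive bad windows before reverting. The `RollbackMonitor` class below is a hypothetical sketch of that rule. How the metric is computed and how the actual revert is performed are left to your pipeline.

```python
from collections import deque


class RollbackMonitor:
    """Signal a rollback after `patience` consecutive windows below `threshold`."""

    def __init__(self, threshold: float, patience: int = 3):
        self.threshold = threshold
        # Remembers only the most recent `patience` breach flags.
        self.recent = deque(maxlen=patience)

    def observe(self, metric_value: float) -> bool:
        """Record one window's metric; return True when a rollback is warranted."""
        self.recent.append(metric_value < self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


monitor = RollbackMonitor(threshold=0.90, patience=3)
decisions = [monitor.observe(v) for v in [0.93, 0.88, 0.91, 0.87, 0.86, 0.85]]
print(decisions)  # [False, False, False, False, False, True]
```

The isolated dip to 0.88 does not trigger a revert. The three consecutive readings below 0.90 at the end do.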

Infrastructure as Code principles apply to ML infrastructure, with tools like AWS CloudFormation and Terraform managing compute resources, storage, and networking components. This ensures consistent environments across development, staging, and production.

Model Monitoring and Performance Tracking Systems

Production ML models require continuous monitoring because their performance can degrade over time due to data drift, concept drift, or changing business conditions. Effective monitoring systems track both technical metrics and business outcomes.

Data drift detection compares incoming production data with training data distributions. When the statistical properties of input features change significantly, model performance often suffers. Amazon SageMaker Model Monitor can detect data quality issues and distribution changes automatically once you enable data capture on the endpoint, create a baseline from your training data, and schedule monitoring jobs.
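Model Monitor computes its own baseline statistics and constraints. To illustrate the underlying idea of comparing distributions, here is a sketch of a different, widely used drift score: the Population Stability Index (PSI) for one numeric feature. The binning scheme and the epsilon are assumptions. The often-quoted rule of thumb that a PSI above about 0.2 signals a meaningful shift is a heuristic, not a guarantee.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training (expected) and production (actual) numeric sample.

    Bin edges come from the training sample's range; a small epsilon avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant training feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]  # production values crowd the upper range
print(round(population_stability_index(train, train), 4))  # 0.0
print(population_stability_index(train, shifted) > 0.2)     # True
```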

Model performance monitoring tracks prediction accuracy, precision, recall, and business-specific KPIs. Setting up dashboards that visualize these metrics over time helps teams spot trends before they become critical issues. CloudWatch and custom monitoring solutions provide real-time alerting when metrics fall outside expected ranges.

Prediction quality analysis involves comparing model predictions with actual outcomes when ground truth becomes available. This feedback loop is essential for understanding real-world model performance and identifying areas for improvement.

Resource utilization monitoring ensures that ML inference infrastructure operates efficiently. Tracking CPU usage, memory consumption, and request latency helps optimize costs while maintaining service level agreements. Auto-scaling policies can adjust compute resources based on traffic patterns.

Bias and fairness monitoring examines model predictions across different demographic groups or business segments. Regular audits help identify potential discriminatory behavior and ensure models align with organizational values and regulatory requirements.

Leveraging AWS Services for Machine Learning Operations

Amazon SageMaker for end-to-end ML workflows

Amazon SageMaker serves as the cornerstone of AWS MLOps, providing a comprehensive platform that handles the entire machine learning lifecycle. This fully managed service removes much of the heavy lifting associated with building, training, and deploying ML models at scale.

SageMaker Studio offers a unified development environment where data scientists can collaborate. The platform includes built-in algorithms, pre-configured framework containers, and AutoML capabilities through SageMaker Autopilot, which automatically builds and tunes models without requiring extensive ML expertise.

For MLOps pipeline development, SageMaker Pipelines provides a native workflow orchestration tool. You can define multi-step workflows that include data preprocessing, model training, evaluation, and deployment stages. These pipelines support conditional execution, parallel processing, and automatic retry mechanisms, making them robust for production environments.

SageMaker Model Registry acts as a centralized repository for managing model versions, metadata, and approval workflows. This feature enables teams to track model lineage, compare performance metrics, and implement proper governance controls before promoting models to production.

The platform’s training capabilities include distributed training across multiple instances, Managed Spot Training for cost optimization, and automatic model tuning through hyperparameter tuning jobs. SageMaker also provides managed endpoints for real-time inference and batch transform jobs for processing large datasets.

AWS CodePipeline for automated deployment processes

AWS CodePipeline creates automated workflows that streamline the deployment of machine learning models from development to production. This CI/CD service integrates with other AWS services to build MLOps pipelines that reduce manual intervention and minimize deployment risks.

Pipeline stages can include source code retrieval from repositories like GitHub or CodeCommit, automated testing of ML models using CodeBuild, and deployment actions. AWS CloudFormation stack updates are a common deployment action for creating or updating SageMaker endpoints from a pipeline. Each stage runs only if the previous one succeeds, and you can add manual approval actions, so that only validated models reach production.

CodePipeline can orchestrate blue-green deployments and canary releases for ML models. It typically does this by updating a SageMaker endpoint whose deployment guardrails shift traffic gradually while monitoring performance metrics. This approach minimizes the impact of potential issues and provides quick rollback if problems arise.

Integration with SageMaker enables automated model retraining pipelines that can trigger when new data becomes available or when model performance degrades below acceptable thresholds. These pipelines can automatically retrain models, evaluate their performance, and deploy them if they meet quality criteria.

The service provides detailed logging and monitoring, giving teams visibility into each pipeline execution. Amazon EventBridge (formerly CloudWatch Events) rules can start pipeline executions in response to events such as a new model version being registered or new data arriving. The result is an automated MLOps workflow that responds to changes in data, code, or infrastructure.

Amazon CloudWatch for comprehensive model monitoring

Amazon CloudWatch provides essential monitoring capabilities for machine learning operations, offering real-time insights into model performance, infrastructure health, and business metrics. This monitoring foundation enables proactive issue detection and rapid response to production problems.

CloudWatch collects and tracks key ML metrics including prediction latency, throughput, error rates, and resource utilization. Custom metrics can monitor model-specific performance indicators like accuracy drift, data quality issues, or feature distribution changes that might signal model degradation.

The service’s alarm system can automatically trigger responses when metrics exceed predefined thresholds. These alarms can initiate actions like scaling inference endpoints, sending notifications to operations teams, or triggering automated model retraining pipelines. This proactive approach prevents minor issues from becoming major production problems.

CloudWatch Logs aggregates application logs, model prediction logs, and system logs in a centralized location. Its analysis features help identify patterns, troubleshoot issues, and maintain audit trails for compliance requirements. CloudWatch Logs Insights provides a query language for analyzing large volumes of log data.

Dashboard creation enables teams to visualize MLOps metrics and create custom views for different stakeholders. Operations teams can monitor infrastructure metrics, while data science teams focus on model performance indicators. These dashboards can be shared across organizations and integrated with other monitoring tools.

AWS Lambda for serverless ML inference

AWS Lambda enables serverless machine learning inference, providing a cost-effective and scalable solution for deploying lightweight models and preprocessing functions. This approach eliminates infrastructure management overhead while automatically scaling based on demand.

Lambda functions can host smaller ML models directly or act as preprocessing layers that transform input data before sending it to more complex models hosted on SageMaker endpoints. This hybrid approach optimizes costs by using the most appropriate compute resources for each task.

The serverless model works particularly well for sporadic inference requests, real-time feature engineering, and short batch jobs that fit within Lambda’s 15-minute maximum run time. Lambda scales automatically from zero to thousands of concurrent executions, subject to your account’s concurrency quota. That makes it well suited to variable workloads where always-on infrastructure would be wasteful.

Event-driven architectures using Lambda can create responsive MLOps workflows. Functions can trigger automatically when new data arrives in S3, process the data, run predictions, and store results in databases. This pattern creates efficient data processing pipelines without constant resource consumption.
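Here is a minimal sketch of the S3-triggered pattern. It assumes an S3 `ObjectCreated` notification is configured to invoke the function. The event fields it reads (`Records`, `s3.bucket.name`, `s3.object.key`) follow the S3 event notification format, in which object keys arrive URL-encoded. `process_object` is a hypothetical placeholder for downloading the object, calling your model, and writing the results.

```python
import json
from urllib.parse import unquote_plus


def process_object(bucket: str, key: str) -> dict:
    """Hypothetical placeholder: fetch the object, run inference, store results."""
    return {"bucket": bucket, "key": key, "status": "processed"}


def lambda_handler(event, context):
    """Entry point for an S3 ObjectCreated notification; handles every record."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_object(bucket, key))
    return {"statusCode": 200, "body": json.dumps(results)}


sample_event = {"Records": [{"s3": {"bucket": {"name": "ml-inbox"},
                                    "object": {"key": "incoming/batch+1.csv"}}}]}
print(lambda_handler(sample_event, None))
```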

Lambda’s container image support (images up to 10 GB) lets teams package larger models and custom runtime environments while keeping serverless benefits. This makes serverless deployment possible for some models that previously required dedicated infrastructure. However, Lambda runs on CPUs only, so GPU-dependent models still need SageMaker or other accelerated hosting.

S3 and RDS for scalable data storage solutions

Amazon S3 and RDS form the data foundation for scalable MLOps implementations, providing reliable storage solutions that support the massive data requirements of modern machine learning workflows. These services handle everything from raw training data to model artifacts and prediction results.

S3 serves as the primary data lake for MLOps pipelines, storing training datasets, feature stores, model artifacts, and batch inference results. Its virtually unlimited storage capacity and multiple storage classes enable cost-effective data management strategies. S3’s event notifications can trigger downstream processing when new data arrives.

S3 Versioning supports data lineage and reproducibility, both critical requirements for MLOps workflows. Teams can track changes to training datasets, maintain multiple versions of model artifacts, and implement proper data governance practices. S3’s integration with other AWS services makes it a natural choice for MLOps data storage.

RDS provides structured data storage for MLOps metadata, including experiment tracking, model performance metrics, and operational logs. The managed database service handles backup, patching, and scaling operations, allowing teams to focus on ML workflows rather than database administration.

Data partitioning strategies in both S3 and RDS optimize query performance and reduce costs. S3 prefix organization enables efficient data access patterns, while RDS indexing and partitioning support fast metadata queries. These optimizations become crucial as MLOps implementations scale to handle larger datasets and more frequent model iterations.
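For S3, a common convention is Hive-style `key=value` prefixes. Query engines such as Amazon Athena and AWS Glue can use these prefixes to skip partitions that don't match a filter. The helper below is a hypothetical sketch; the dataset name and file layout are assumptions.

```python
from datetime import date


def partitioned_key(dataset: str, day: date, part: int) -> str:
    """Build a Hive-style S3 object key so date-filtered queries read fewer prefixes."""
    return (f"{dataset}/year={day.year}/month={day.month:02d}/"
            f"day={day.day:02d}/part-{part:05d}.parquet")


print(partitioned_key("clickstream", date(2024, 5, 1), 0))
# clickstream/year=2024/month=05/day=01/part-00000.parquet
```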

Building Your First MLOps Pipeline on AWS

Setting up your development environment and permissions

Getting your AWS environment ready for MLOps requires careful attention to permissions and tooling. Start by creating a dedicated AWS account or isolated environment for your ML workloads to avoid interfering with production systems. Install the AWS CLI and configure credentials. Prefer `aws configure sso`, which issues short-lived credentials through IAM Identity Center, over long-term access keys entered with `aws configure`.

Create specific IAM roles and policies that follow the principle of least privilege. Your MLOps pipeline needs permissions for Amazon SageMaker, S3, Lambda, CloudWatch, and possibly Amazon ECR for custom containers. A typical setup includes separate roles for data scientists, ML engineers, and automated services.
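To make least privilege concrete, here is a sketch of an IAM policy document written as a Python dict. It lets a training role read one dataset prefix and write one model-artifact prefix in a hypothetical bucket. A real SageMaker execution role also needs other permissions, such as writing CloudWatch Logs and, for custom images, pulling from Amazon ECR; those are omitted here.

```python
import json

# Hypothetical bucket name; substitute your own resources.
BUCKET = "example-ml-artifacts"

training_role_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {   # Read access limited to the dataset prefix.
            "Sid": "ReadTrainingData",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": f"arn:aws:s3:::{BUCKET}/datasets/*",
        },
        {   # Write access limited to the model-artifact prefix.
            "Sid": "WriteModelArtifacts",
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": f"arn:aws:s3:::{BUCKET}/models/*",
        },
        {   # Listing is allowed only under the same two prefixes.
            "Sid": "ListOwnPrefixesOnly",
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": f"arn:aws:s3:::{BUCKET}",
            "Condition": {"StringLike": {"s3:prefix": ["datasets/*", "models/*"]}},
        },
    ],
}

print(json.dumps(training_role_policy, indent=2))
```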

Install essential tools on your local machine: a currently supported Python 3 release, Docker, Git, and the AWS SDK for Python (boto3). Consider using AWS Cloud9 or SageMaker Studio for cloud-based development environments that come pre-configured with ML tools. Set up your preferred IDE with AWS extensions such as the AWS Toolkit, and configure environment variables the same way across team members so deployments behave consistently.

Version control becomes critical in MLOps pipelines. Initialize a Git repository structure that separates data processing code, model training scripts, infrastructure configuration, and deployment manifests. This organization helps maintain clean separation of concerns as your pipeline grows in complexity.

Creating reproducible data preprocessing workflows

Data preprocessing consistency makes or breaks ML model reliability in production. AWS offers several services to build robust, repeatable data workflows that handle the messiness of real-world data.

Start with AWS Glue for ETL operations or SageMaker Processing for more ML-focused data transformations. Both services let you containerize your preprocessing logic, ensuring the same transformations run identically across development, staging, and production environments.

Create reusable preprocessing scripts that handle common tasks:

- Data validation and quality checks: Implement schema validation, null value detection, and statistical anomaly identification

- Feature engineering pipelines: Build modular functions for encoding, scaling, and feature selection that can be versioned and tested

- Data partitioning: Set up time-based or stratified splits that maintain consistent train/validation/test distributions
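As a sketch of the first item, the hypothetical `validate_rows` function below checks a batch against an expected schema and a missing-value budget before the batch reaches training. The schema and the 5% null threshold are assumptions. Statistical anomaly checks would sit alongside this function or live in a library such as Great Expectations or Deequ.

```python
from typing import Any

# Hypothetical schema for illustration: column name -> expected Python type.
SCHEMA = {"customer_id": int, "age": int, "monthly_spend": float}


def validate_rows(rows: list[dict[str, Any]], schema: dict[str, type],
                  max_null_fraction: float = 0.05) -> list[str]:
    """Return human-readable problems; an empty list means the batch passed."""
    problems = []
    # Schema check: flag columns the schema doesn't know about.
    for i, row in enumerate(rows):
        extra = set(row) - set(schema)
        if extra:
            problems.append(f"row {i}: unexpected columns {sorted(extra)}")
    for col, expected_type in schema.items():
        values = [row.get(col) for row in rows]
        # Missing-value check: absent keys and explicit None both count as null.
        nulls = sum(v is None for v in values)
        if rows and nulls / len(rows) > max_null_fraction:
            problems.append(f"{col}: {nulls}/{len(rows)} values missing")
        # Type check on the non-null values.
        bad = [v for v in values if v is not None and not isinstance(v, expected_type)]
        if bad:
            problems.append(f"{col}: {len(bad)} values not of type {expected_type.__name__}")
    return problems


batch = [
    {"customer_id": 1, "age": 34, "monthly_spend": 20.5},
    {"customer_id": 2, "age": None, "monthly_spend": "n/a"},
]
for problem in validate_rows(batch, SCHEMA):
    print(problem)
```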

Store preprocessed data in S3 with clear naming conventions and metadata tags. Use SageMaker Feature Store to manage feature definitions and track their lineage. Serving the same feature definitions to both training and inference helps prevent training/serving skew and ensures all team members work with identical data representations.

Parameterize your preprocessing workflows using AWS Step Functions or SageMaker Pipelines. This allows you to easily adjust data sources, transformation parameters, and output locations without modifying core processing logic.

Implementing automated model training and validation

Automated training workflows eliminate manual bottlenecks and ensure consistent model evaluation. AWS SageMaker provides the foundation for building scalable training pipelines that adapt to your specific ML requirements.

Design your training pipeline with these key components:

Hyperparameter optimization: Use SageMaker’s built-in hyperparameter tuning to automatically explore parameter spaces. Define objective metrics, search strategies, and resource constraints that balance performance improvements against training costs.

Cross-validation strategies: Implement k-fold or time-series cross-validation appropriate for your data type. Time-series models need temporal validation splits, while classification problems benefit from stratified sampling approaches.

Model comparison frameworks: Set up automated offline comparisons between different algorithms, feature sets, or hyperparameter configurations, and reserve A/B tests for live traffic. Store experiment results in SageMaker Experiments for easy comparison and reproducibility.

Distributed training capabilities: For large datasets, configure multi-GPU or multi-instance training using SageMaker’s distributed training libraries. This can substantially reduce training time for deep learning models and large-scale algorithms.
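Time-series data needs temporal validation splits, and a minimal expanding-window splitter for them looks like the following. scikit-learn's `TimeSeriesSplit` implements a similar idea. This is a from-scratch sketch that assumes rows are already sorted by time; the fold sizes are illustrative.

```python
def expanding_window_splits(n_samples: int, n_splits: int, min_train: int):
    """Yield (train_indices, validation_indices); validation always follows training.

    Assumes samples are sorted chronologically, so no future rows leak into training.
    """
    fold_size = (n_samples - min_train) // n_splits
    if fold_size < 1:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        train_end = min_train + k * fold_size
        yield list(range(train_end)), list(range(train_end, train_end + fold_size))


for train, val in expanding_window_splits(n_samples=10, n_splits=3, min_train=4):
    print(f"train={train[0]}..{train[-1]}  validate={val}")
# train=0..3  validate=[4, 5]
# train=0..5  validate=[6, 7]
# train=0..7  validate=[8, 9]
```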

Create validation gates that automatically promote or reject models based on predefined performance thresholds. Compare new models against existing baselines using statistical significance tests, not just raw accuracy improvements. This prevents deploying models that perform marginally better due to random variance.
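One example of such a significance test is McNemar's test. It compares two classifiers evaluated on the same examples by looking only at the cases where they disagree. The sketch below uses the chi-square approximation with continuity correction. The choice of this test, and of a significance level such as 0.05, are assumptions rather than anything SageMaker prescribes.

```python
import math


def mcnemar_p_value(baseline_correct, candidate_correct):
    """McNemar's test (chi-square, continuity-corrected) on paired per-example results.

    b = baseline right and candidate wrong; c = baseline wrong and candidate right.
    """
    pairs = list(zip(baseline_correct, candidate_correct))
    b = sum(1 for x, y in pairs if x and not y)
    c = sum(1 for x, y in pairs if y and not x)
    if b + c == 0:
        return 1.0  # the models never disagree, so there is no evidence either way
    stat = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical paired results: 80 both right, 5 only baseline right,
# 25 only candidate right, 10 both wrong.
baseline = [True] * 85 + [False] * 35
candidate = [True] * 80 + [False] * 5 + [True] * 25 + [False] * 10
p = mcnemar_p_value(baseline, candidate)
print(f"p = {p:.4f}")
```

A gate would then promote the candidate only if it is both more accurate and `p` falls below the chosen significance level.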

Establishing deployment pipelines with rollback capabilities

Production deployment requires careful orchestration of model artifacts, infrastructure provisioning, and traffic management. Build deployment pipelines that minimize downtime while providing safety nets for quick recovery.

Blue-green deployment strategy: Maintain parallel production environments where you can deploy new models to the “green” environment while “blue” serves live traffic. Once validation passes, switch traffic routing atomically. This approach enables zero-downtime deployments and near-instant rollbacks, because the previous environment stays available until you retire it.

Canary releases: Gradually shift traffic from existing models to new versions using weighted routing. Start with 5-10% of requests going to the new model, monitor key metrics, and gradually increase traffic if performance meets expectations. SageMaker endpoints support traffic splitting natively.

Model versioning and artifact management: Store model artifacts in S3 with semantic versioning (v1.2.3) and comprehensive metadata including training data versions, hyperparameters, and performance metrics. Use SageMaker Model Registry to track each model version's approval status (PendingManualApproval, Approved, or Rejected) and to drive automated approval workflows.

Infrastructure as code: Define your entire deployment infrastructure using AWS CloudFormation or CDK. This includes SageMaker endpoints, auto-scaling policies, monitoring dashboards, and alerting rules. Version control these templates alongside your model code for complete reproducibility.

Automated rollback triggers: Configure CloudWatch alarms that monitor model performance metrics like latency, error rates, and prediction accuracy. Set up automatic rollback procedures that revert to the previous model version when metrics exceed acceptable thresholds. This safety net prevents degraded user experiences from faulty model deployments.
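SageMaker's deployment guardrails can combine a canary rollout with alarm-based rollback in a single endpoint update. The sketch below only builds the `DeploymentConfig` argument for the `UpdateEndpoint` API as a plain dict. The endpoint, endpoint-config, and alarm names are hypothetical. The 10% canary and 10-minute bake time are assumptions to tune.

```python
def blue_green_canary_config(alarm_names, canary_percent=10, bake_seconds=600):
    """Build DeploymentConfig for SageMaker UpdateEndpoint: canary shift plus auto-rollback.

    Shifts `canary_percent` of capacity to the new fleet, waits `bake_seconds`,
    then shifts the rest. If a listed CloudWatch alarm fires while the rollout
    is baking, SageMaker rolls traffic back to the old fleet.
    """
    return {
        "BlueGreenUpdatePolicy": {
            "TrafficRoutingConfiguration": {
                "Type": "CANARY",
                "CanarySize": {"Type": "CAPACITY_PERCENT", "Value": canary_percent},
                "WaitIntervalInSeconds": bake_seconds,
            },
            # Keep the old fleet briefly after full cutover for a fast manual revert.
            "TerminationWaitInSeconds": 300,
        },
        "AutoRollbackConfiguration": {
            "Alarms": [{"AlarmName": name} for name in alarm_names],
        },
    }


config = blue_green_canary_config(["churn-model-5xx-errors", "churn-model-latency"])
# With boto3 (not called here):
# boto3.client("sagemaker").update_endpoint(
#     EndpointName="churn-model", EndpointConfigName="churn-model-v2",
#     DeploymentConfig=config)
```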

Implement comprehensive logging throughout your deployment pipeline. Capture model predictions, input features, timestamps, and error messages. This audit trail proves invaluable for debugging production issues and understanding model behavior patterns over time.
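Writing one JSON object per line keeps these logs queryable, because CloudWatch Logs Insights discovers fields in JSON log events automatically. The field names below are an illustrative assumption. Avoid logging raw features that contain personal data unless your retention and access policies allow it.

```python
import json
import uuid
from datetime import datetime, timezone


def prediction_log_record(model_version, features, prediction, error=None):
    """Serialize one inference request as a single JSON line."""
    return json.dumps({
        "request_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "error": error,  # None on success, an error message otherwise
    }, sort_keys=True)


line = prediction_log_record("v1.4.0", {"age": 34, "monthly_spend": 20.5}, 0.82)
print(line)
```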

Scaling Machine Learning Models for Production Workloads

Auto-scaling strategies for varying prediction demands

When your machine learning models hit production, request patterns become unpredictable. You might see traffic spike during business hours, drop to nearly zero at night, or experience sudden bursts during promotional campaigns. Amazon SageMaker endpoints handle this through automatic scaling policies that adjust capacity based on near-real-time metrics.

Set up target tracking scaling with invocations per instance as your primary metric. SageMaker exposes this as the predefined metric `SageMakerVariantInvocationsPerInstance`, which counts requests per instance per minute. Target tracking adds or removes instances to keep this metric near your target value. A target of 70-80 invocations per instance per minute is one possible starting point, but load-test your endpoint and adjust based on your model’s complexity and response time requirements.

Application Auto Scaling works with SageMaker endpoint variants and lets you define minimum and maximum instance counts. For production workloads, set a minimum of at least 2 instances so the endpoint stays available during scaling events or if an instance fails. Cooldown periods also matter. A scale-out cooldown of 300 seconds and a longer scale-in cooldown of 600 seconds are reasonable starting values that help prevent thrashing during temporary traffic spikes.
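These settings map onto two Application Auto Scaling calls. This sketch builds their arguments as dicts so the values are easy to review. The endpoint and variant names, the maximum of 10 instances, and the target of 70 invocations per instance are assumptions.

```python
# Hypothetical endpoint and variant names.
ENDPOINT, VARIANT = "churn-model", "AllTraffic"
RESOURCE_ID = f"endpoint/{ENDPOINT}/variant/{VARIANT}"
DIMENSION = "sagemaker:variant:DesiredInstanceCount"

register_target_kwargs = {
    "ServiceNamespace": "sagemaker",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": DIMENSION,
    "MinCapacity": 2,   # keep at least two instances for availability
    "MaxCapacity": 10,  # assumed ceiling; size it from load tests and budget
}

scaling_policy_kwargs = {
    "PolicyName": f"{ENDPOINT}-invocations-target",
    "ServiceNamespace": "sagemaker",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": DIMENSION,
    "PolicyType": "TargetTrackingScaling",
    "TargetTrackingScalingPolicyConfiguration": {
        "TargetValue": 70.0,  # invocations per instance per minute
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
        },
        "ScaleOutCooldown": 300,
        "ScaleInCooldown": 600,  # scale in more cautiously than out
    },
}
# With boto3 (not called here):
# client = boto3.client("application-autoscaling")
# client.register_scalable_target(**register_target_kwargs)
# client.put_scaling_policy(**scaling_policy_kwargs)
```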

Consider scheduled scaling for workloads with known patterns. If your model serves e-commerce recommendations that peak during lunch hours, schedule capacity increases before the expected surge. New endpoint instances can take several minutes to come into service. Scaling ahead of the peak spares users the added latency they would otherwise see while reactive scaling catches up.
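With Application Auto Scaling, a scheduled action can raise an endpoint's minimum capacity ahead of a known peak. The sketch below assumes a weekday lunchtime peak and uses hypothetical names. Schedules are evaluated in UTC unless you specify a time zone. You would add a second action to lower the floor again after the peak.

```python
scheduled_action_kwargs = {
    "ServiceNamespace": "sagemaker",
    "ScheduledActionName": "churn-model-lunch-peak",  # hypothetical name
    "ResourceId": "endpoint/churn-model/variant/AllTraffic",
    "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    # Raise the floor at 11:30 UTC on weekdays, ahead of an assumed noon peak.
    "Schedule": "cron(30 11 ? * MON-FRI *)",
    "ScalableTargetAction": {"MinCapacity": 4, "MaxCapacity": 12},
}
# With boto3 (not called here):
# boto3.client("application-autoscaling").put_scheduled_action(**scheduled_action_kwargs)
```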

Load balancing techniques for high-availability inference

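High-availability inference requires distributing requests across multiple model instances and Availability Zones. SageMaker real-time endpoints load-balance requests across all instances behind each production variant, so adding instances adds both capacity and redundancy. Multi-model endpoints host many models behind a single endpoint and attempt to route each request to an instance that already has the target model loaded.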

Run at least two instances per production variant, because SageMaker attempts to distribute an endpoint's instances across Availability Zones within the region. This protects against zone-level failures and keeps the service available. During deployments, use weighted routing between production variants to shift traffic gradually, enabling safe rollouts without service interruption.

Health checks play a critical role in maintaining inference reliability. Configure custom health check endpoints that validate model readiness beyond simple HTTP responses. Your health checks should verify model loading status, memory usage, and basic inference capability with test inputs.
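For custom SageMaker inference containers, health checks arrive as GET requests to the container's `/ping` route, and anything other than HTTP 200 marks the container as unhealthy. The readiness logic can be sketched without any web framework. The hypothetical `ModelServer.ping` method below returns the status that route would send. It reports healthy only after the model has loaded and produced a finite score for a canned test input. The loader and the test input are assumptions, and memory checks are omitted for brevity.

```python
import math


class ModelServer:
    """Sketch of the readiness logic behind a container's GET /ping route."""

    def __init__(self, load_model):
        self.load_model = load_model  # hypothetical callable returning a predict function
        self.model = None

    def start(self):
        self.model = self.load_model()

    def ping(self) -> int:
        """Return 200 only if the model is loaded and scores a known input sensibly."""
        if self.model is None:
            return 503  # still loading: report not ready
        try:
            score = self.model([0.0, 1.0, 2.0])  # hypothetical canned test input
        except Exception:
            return 503
        return 200 if isinstance(score, float) and math.isfinite(score) else 503


server = ModelServer(load_model=lambda: (lambda xs: sum(xs) / len(xs)))
print(server.ping())  # 503 before the model is loaded
server.start()
print(server.ping())  # 200 once a finite score comes back
```

Because SageMaker calls `/ping` repeatedly, keep the canned inference cheap.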

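If you self-host inference on EC2 or Amazon ECS behind an Application Load Balancer, target group health checks provide similar protection. When an instance fails its health checks, the load balancer removes it from rotation, and an Auto Scaling group configured to use ELB health checks provisions a replacement. A health check interval of 30 seconds with an unhealthy threshold of 3 consecutive failures is a reasonable starting point that balances responsiveness with stability. Health checks alone are not a circuit breaker. For that, add logic in the calling service that stops sending requests to a failing endpoint for a short period instead of piling up retries.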
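A circuit breaker in the calling service complements health checks. After repeated failures, it stops sending requests to the endpoint for a cool-off period and serves a fallback instead. Here is a minimal, single-threaded sketch. The failure count, reset time, and fallback value are assumptions, and the injectable clock exists only to make the behavior testable.

```python
import time


class CircuitBreaker:
    """Fail fast for `reset_after` seconds once `max_failures` consecutive calls fail."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so behavior can be tested deterministically
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, fallback=None):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback  # open: skip the failing dependency entirely
            self.opened_at = None  # half-open: let a trial request through
        try:
            result = fn(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback
        self.failures = 0  # a success closes the circuit
        return result
```

In practice, `fn` would wrap the endpoint invocation (for example, a boto3 `sagemaker-runtime` `invoke_endpoint` call), and `fallback` might be a cached or default prediction.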

Cost optimization methods for large-scale deployments

Running machine learning models at scale can generate significant AWS bills, but targeted optimization can substantially reduce costs without sacrificing performance. Start by rightsizing your instances based on actual usage patterns rather than peak capacity planning.

SageMaker Savings Plans offer up to 64% discounts for consistent usage patterns. Analyze your historical usage data to identify baseline capacity that runs 24/7, then purchase Savings Plans to cover this foundation. Use On-Demand instances only for variable capacity above your baseline.

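Spot capacity works well for interruption-tolerant work in your MLOps pipeline, especially model training. SageMaker Managed Spot Training runs training jobs on Spot capacity. If your training script saves and reloads checkpoints, an interrupted job can resume from its last checkpoint instead of starting over. For batch inference on your own EC2 fleet, mixed instances policies in Auto Scaling groups combine Spot instances for cost savings with On-Demand instances for guaranteed capacity. Design those workers to handle the two-minute Spot interruption notice by checkpointing or requeuing in-flight work.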
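On the training side, you enable Managed Spot Training through a few fields of the `CreateTrainingJob` request. This sketch builds just those fields as a dict to merge into a full request. The runtime limits and the checkpoint URI are hypothetical. For resumption to work, your training script must write checkpoints to the local checkpoint directory (by default `/opt/ml/checkpoints`) and reload them on restart.

```python
def spot_training_settings(max_runtime_s, max_wait_s, checkpoint_uri):
    """Fields to merge into a SageMaker CreateTrainingJob request for Managed Spot Training."""
    if max_wait_s < max_runtime_s:
        raise ValueError("MaxWaitTimeInSeconds must be >= MaxRuntimeInSeconds")
    return {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_s,
            # Total budget including time spent waiting for Spot capacity.
            "MaxWaitTimeInSeconds": max_wait_s,
        },
        # SageMaker syncs the local checkpoint directory to this S3 location.
        "CheckpointConfig": {"S3Uri": checkpoint_uri},
    }


settings = spot_training_settings(3600, 7200, "s3://example-ml-artifacts/checkpoints/churn/")
print(settings)
```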

Multi-model endpoints reduce costs by sharing infrastructure across multiple models. Instead of deploying each model on dedicated instances, consolidate models with similar performance characteristics onto shared endpoints. This approach works particularly well when models serve different customer segments or time-based traffic patterns.

Monitor costs continuously using AWS Cost Explorer and set up billing alerts. Create custom metrics that track cost per prediction, allowing you to identify expensive models and optimize them specifically.
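You can approximate cost per prediction from instance price, instance count, and invocation volume over the same time window. The sketch below assumes a hypothetical hourly price; check current SageMaker pricing for your instance type and region. It ignores storage, data transfer, and other charges. You could publish the result as a CloudWatch custom metric with `put_metric_data`.

```python
def cost_per_1k_predictions(hourly_price_usd, instance_count, hours, invocations):
    """Approximate endpoint compute cost per 1,000 predictions over one window."""
    if invocations <= 0:
        raise ValueError("need at least one invocation in the window")
    compute_cost = hourly_price_usd * instance_count * hours
    return compute_cost / invocations * 1000


# Hypothetical day: 2 instances at an assumed $0.23/hour serving 1.2M requests.
print(round(cost_per_1k_predictions(0.23, 2, 24, 1_200_000), 4))
```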

Multi-region deployment for global accessibility

Global users expect low-latency responses regardless of their location. Multi-region deployment brings your ML model deployment closer to users while providing disaster recovery capabilities for your MLOps infrastructure.

Deploy primary inference endpoints in regions closest to your major user populations. For applications serving North American and European users, consider US East (Virginia) and Europe (Ireland) as primary regions, with Asia Pacific (Singapore) for Asian traffic. This geographic distribution typically covers most global users within acceptable latency thresholds.

Cross-region replication keeps models consistent across deployments. SageMaker loads model artifacts from an S3 bucket in the same region as the model and endpoint, so store your trained models in S3 with Cross-Region Replication enabled to make each new version available in every target region. Then use AWS CodePipeline to automate model deployments across regions, maintaining version synchronization and configuration consistency.
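Replication is configured on the source bucket with the S3 `put_bucket_replication` API. This sketch builds the `ReplicationConfiguration` argument for a single rule that copies everything under a model prefix to a bucket in another region. The IAM role ARN, the destination bucket ARN, and the `models/` prefix are assumptions, and versioning must already be enabled on both buckets.

```python
def replication_configuration(role_arn, dest_bucket_arn, prefix="models/"):
    """ReplicationConfiguration for s3.put_bucket_replication.

    Copies new objects under `prefix` (e.g. models/churn/v7/model.tar.gz)
    to the destination bucket, which lives in the target region.
    """
    return {
        "Role": role_arn,  # IAM role S3 assumes to replicate objects
        "Rules": [
            {
                "ID": "replicate-model-artifacts",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                # Required when a Filter is used; keep deletions local.
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": dest_bucket_arn},
            }
        ],
    }
```

You would apply it with `s3.put_bucket_replication(Bucket="source-model-bucket", ReplicationConfiguration=replication_configuration(...))`, one rule or destination per target region.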

Amazon Route 53 geolocation routing sends users to the regional endpoint you assign to their location, while latency-based routing instead picks the region with the lowest measured latency for each user. Configure health checks for each regional endpoint so Route 53 fails over to healthy regions when problems occur. This setup provides both performance optimization and high availability without user intervention.
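As a sketch of the DNS side, the function below builds a Route 53 `ChangeBatch` of latency-based records, one per region, each tied to a health check so an unhealthy region drops out of rotation. It assumes each region exposes its model behind a regional HTTPS front end (for example an API Gateway custom domain) with its own hostname; all hostnames and health check IDs here are hypothetical. For geolocation routing, replace the `Region` key with a `GeoLocation` block such as `{"ContinentCode": "EU"}` and add a default record for unmatched locations.

```python
def latency_record(name, region, target, health_check_id, ttl=60):
    """One UPSERT change for a latency-based CNAME record."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "CNAME",
            "SetIdentifier": f"inference-{region}",  # unique per record
            "Region": region,  # enables latency-based routing
            "TTL": ttl,
            "ResourceRecords": [{"Value": target}],
            "HealthCheckId": health_check_id,
        },
    }


def change_batch(name, regional_targets):
    """regional_targets maps region -> (regional hostname, health check ID)."""
    return {
        "Comment": "Latency-based routing for regional inference endpoints",
        "Changes": [
            latency_record(name, region, target, hc)
            for region, (target, hc) in sorted(regional_targets.items())
        ],
    }
```

The result is passed as `route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch=change_batch(...))`.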

Data residency requirements often dictate deployment regions, especially for applications handling sensitive information. Plan your multi-region architecture to comply with local data protection regulations while maintaining optimal performance. Some regions may require dedicated deployments with restricted data flows between regions.

Consider implementing a hub-and-spoke model for model updates, where your primary region serves as the source for trained models that propagate to other regions. This approach simplifies model governance while ensuring consistent behavior across all deployments.

Monitoring and Maintaining ML Models in Production

Real-time performance metrics and alerting systems

Keeping your machine learning models healthy in production requires constant vigilance. Amazon CloudWatch becomes your best friend here, offering monitoring capabilities that go far beyond basic system metrics. You can publish custom metrics for model-specific indicators such as response times, throughput, and prediction accuracy (once ground-truth labels are available to compare predictions against).

Setting up effective alerting starts with defining what "normal" looks like for your model. Create CloudWatch alarms for key performance indicators such as prediction latency exceeding 500 ms, error rates climbing above 1%, or request volumes dropping unexpectedly. Amazon SageMaker publishes built-in endpoint metrics such as Invocations, Invocation5XXErrors, and ModelLatency, so you can monitor request volume, errors, and latency without additional instrumentation.
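A latency alarm like the one described above can be expressed as keyword arguments to `put_metric_alarm` on a boto3 CloudWatch client. One detail worth encoding: SageMaker reports `ModelLatency` in microseconds, so a 500 ms threshold becomes 500,000. The endpoint, variant, and SNS topic names are placeholders, and the three one-minute evaluation periods are an illustrative choice.

```python
def latency_alarm(endpoint_name, variant_name, threshold_ms, sns_topic_arn):
    """put_metric_alarm kwargs: alert when average ModelLatency stays above
    threshold_ms for three consecutive one-minute periods."""
    return {
        "AlarmName": f"{endpoint_name}-{variant_name}-model-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 60,
        "EvaluationPeriods": 3,
        # ModelLatency is reported in microseconds.
        "Threshold": threshold_ms * 1000.0,
        "ComparisonOperator": "GreaterThanThreshold",
        # No traffic should not by itself trigger a latency alarm.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }
```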

Real-time dashboards give you instant visibility into your MLOps pipeline health. Use CloudWatch dashboards to visualize model performance trends, resource utilization, and error patterns. Route alarm notifications through Amazon SNS to AWS Lambda functions for custom alerting logic that can trigger automated responses when specific thresholds are breached.
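When CloudWatch alarms notify an SNS topic that a Lambda function subscribes to, the alarm details arrive as a JSON string inside each SNS record. The handler below is a minimal sketch of custom alerting logic: it ignores transitions other than `ALARM` and maps alarm names to actions. The alarm-naming convention and the action labels are hypothetical; a real handler would page someone, post to a chat channel, or start a rollback instead of returning a list.

```python
import json


def handler(event, context):
    """Lambda entry point for CloudWatch alarm notifications delivered via SNS."""
    actions = []
    for record in event.get("Records", []):
        message = json.loads(record["Sns"]["Message"])
        if message.get("NewStateValue") != "ALARM":
            continue  # ignore OK and INSUFFICIENT_DATA transitions
        actions.append(decide_action(message["AlarmName"]))
    return {"actions": actions}


def decide_action(alarm_name):
    """Hypothetical routing rules keyed on the alarm-name suffix."""
    if alarm_name.endswith("-model-latency"):
        return {"alarm": alarm_name, "action": "page-on-call"}
    if alarm_name.endswith("-5xx-errors"):
        return {"alarm": alarm_name, "action": "start-rollback"}
    return {"alarm": alarm_name, "action": "notify-channel"}
```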

Data drift detection and model retraining triggers

Data drift can silently kill your model's performance over time. AWS offers several approaches to detect when your input data starts looking different from what your model was trained on. SageMaker Model Monitor detects data quality issues and statistical drift by comparing endpoint traffic, collected with data capture enabled, against baseline statistics and constraints computed from your training dataset.
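Model Monitor analyzes requests and responses captured from the endpoint, so data capture has to be enabled in the endpoint configuration. This sketch builds the `DataCaptureConfig` argument for `create_endpoint_config`; the S3 destination and the 20% sampling rate are assumptions to adjust for your traffic volume and storage budget.

```python
def data_capture_config(destination_s3_uri, sampling_percent=20):
    """DataCaptureConfig for create_endpoint_config (captures inputs and outputs)."""
    if not 0 < sampling_percent <= 100:
        raise ValueError("sampling_percent must be in (0, 100]")
    return {
        "EnableCapture": True,
        "InitialSamplingPercentage": sampling_percent,
        "DestinationS3Uri": destination_s3_uri,  # placeholder bucket/prefix
        "CaptureOptions": [{"CaptureMode": "Input"}, {"CaptureMode": "Output"}],
    }
```

It is passed alongside the variants, as in `sagemaker.create_endpoint_config(EndpointConfigName=..., ProductionVariants=[...], DataCaptureConfig=data_capture_config("s3://example-bucket/capture/"))`.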

Set up automated drift detection by configuring monitoring schedules that analyze prediction inputs and outputs. When statistical properties like mean, standard deviation, or distribution shape change significantly, your system should flag potential drift. You can customize sensitivity thresholds based on your specific use case and business requirements.
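To illustrate what a distribution-shift check does, here is a population stability index (PSI) computation for a single numeric feature in plain Python. PSI is a swapped-in technique chosen for clarity, not the statistic Model Monitor computes internally. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be calibrated on your own data.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (`expected`) and live data (`actual`).

    Equal-width bin edges come from the baseline's range; live values outside
    that range are clipped into the first or last bin. Empty bins get a small
    floor (`eps`) so the logarithm stays defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```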

Creating automated retraining triggers saves hours of manual monitoring. Use Amazon EventBridge to start retraining workflows when drift alarms fire, for example by targeting a SageMaker Pipelines pipeline (or a Lambda function that starts a training job) that retrains your model on fresh data, maintaining performance without manual intervention.
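Wiring an alarm to retraining takes an EventBridge rule plus a target. This sketch builds the event pattern for CloudWatch alarms entering the `ALARM` state and a target that starts a SageMaker Pipelines pipeline. The alarm names, pipeline ARN, IAM role, and the `InputDataUri` pipeline parameter are hypothetical and must match what your pipeline actually defines.

```python
import json


def drift_rule_pattern(alarm_names):
    """EventBridge pattern matching the given CloudWatch alarms entering ALARM."""
    return json.dumps({
        "source": ["aws.cloudwatch"],
        "detail-type": ["CloudWatch Alarm State Change"],
        "detail": {
            "alarmName": list(alarm_names),
            "state": {"value": ["ALARM"]},
        },
    })


def retraining_target(pipeline_arn, role_arn, data_uri):
    """Target entry for events.put_targets that starts a SageMaker pipeline."""
    return {
        "Id": "start-retraining-pipeline",
        "Arn": pipeline_arn,
        "RoleArn": role_arn,  # role EventBridge assumes to start the pipeline
        "SageMakerPipelineParameters": {
            "PipelineParameterList": [
                # Hypothetical parameter your pipeline would need to declare.
                {"Name": "InputDataUri", "Value": data_uri},
            ]
        },
    }
```

These map onto `events.put_rule(Name="model-drift-retrain", EventPattern=drift_rule_pattern([...]), State="ENABLED")` followed by `events.put_targets(Rule="model-drift-retrain", Targets=[retraining_target(...)])`.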

A/B testing frameworks for model comparison

Testing new model versions against production models requires careful experimentation. AWS provides multiple approaches for safe model comparison through traffic splitting and canary deployments. A SageMaker endpoint can host multiple production variants, each with a weight that determines its share of traffic, allowing you to route different percentages of requests to different model versions.

Implement A/B testing by deploying your new model alongside the existing one, then gradually shifting traffic while monitoring performance metrics. Start with 5-10% of traffic to the new model, increasing gradually as you gain confidence in its performance. This approach minimizes risk, but a small traffic share takes longer to accumulate enough samples for statistically significant results, so plan the test duration accordingly.
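The traffic split maps directly onto production variant weights. The sketch below builds a `ProductionVariants` list for `create_endpoint_config` with a current and a candidate model, shows that weights are relative (each variant receives its weight divided by the sum of all weights), and produces the `DesiredWeightsAndCapacities` list for `update_endpoint_weights_and_capacities` when you shift more traffic later. Model names, variant names, and the instance type are placeholders.

```python
def production_variants(current_model, candidate_model, candidate_share,
                        instance_type, instance_count=1):
    """ProductionVariants for create_endpoint_config with a weighted split."""
    if not 0.0 < candidate_share < 1.0:
        raise ValueError("candidate_share must be strictly between 0 and 1")
    weights = {"current": 1.0 - candidate_share, "candidate": candidate_share}
    models = {"current": current_model, "candidate": candidate_model}
    return [
        {
            "VariantName": name,
            "ModelName": models[name],
            "InstanceType": instance_type,
            "InitialInstanceCount": instance_count,
            "InitialVariantWeight": weights[name],
        }
        for name in ("current", "candidate")
    ]


def traffic_shares(variants):
    """Fraction of requests each variant receives: weight / sum(weights)."""
    total = sum(v["InitialVariantWeight"] for v in variants)
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


def shift_traffic(candidate_share):
    """DesiredWeightsAndCapacities for update_endpoint_weights_and_capacities."""
    return [
        {"VariantName": "current", "DesiredWeight": 1.0 - candidate_share},
        {"VariantName": "candidate", "DesiredWeight": candidate_share},
    ]
```

Shifting weights on the live endpoint avoids redeploying either model, which keeps each step of the ramp-up cheap to take and cheap to reverse.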

Track business metrics alongside technical performance indicators during A/B tests. Monitor conversion rates, user engagement, and revenue impact to ensure your new model improves actual business outcomes, not just technical metrics. Use Amazon CloudWatch or custom analytics pipelines to collect and analyze these comparative metrics.
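For a binary business metric such as conversion, a two-proportion z-test is one standard way to judge whether the candidate's rate really differs from the current model's. This is a generic statistical sketch rather than an AWS feature, and it assumes independent requests and reasonably large samples in both variants.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for conversion rate B minus rate A.

    conv_* are conversion counts, n_* are the numbers of requests served.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With 200 conversions in 10,000 requests for the current model and 260 in 10,000 for the candidate, z is about 2.8 and the p-value is well under 0.01; the same rates on a tenth of the traffic would not reach significance, which is why small canary shares need longer tests.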

Automated model rollback and versioning strategies

Version control for machine learning models requires more than just code versioning. The SageMaker Model Registry organizes models as versioned model packages within model package groups. Each version references the trained artifacts and inference container and can carry metadata such as evaluation metrics, an approval status, and custom properties describing the training data and hyperparameters.
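Registering a new version amounts to a `create_model_package` call against a model package group. The sketch below builds its arguments. The group name, container image, artifact URL, and metadata keys are placeholders, and each version starts as `PendingManualApproval` so a reviewer or an automated check must approve it before deployment.

```python
def model_package_request(group_name, image_uri, model_data_url, metadata):
    """Keyword arguments for sagemaker create_model_package (one new version)."""
    return {
        "ModelPackageGroupName": group_name,
        "ModelPackageDescription": "Registered by the training pipeline",
        "InferenceSpecification": {
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_data_url}],
            "SupportedContentTypes": ["application/json"],
            "SupportedResponseMIMETypes": ["application/json"],
        },
        "ModelApprovalStatus": "PendingManualApproval",
        # Free-form string key/value pairs, e.g. metrics, data version, hyperparameters.
        "CustomerMetadataProperties": {k: str(v) for k, v in metadata.items()},
    }
```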

Automated rollback mechanisms protect against failed deployments and performance degradation. Configure CloudWatch alarms that trigger Lambda functions to revert to the previous model version when error rates spike or performance drops below acceptable thresholds; SageMaker's deployment guardrails can also roll back an endpoint update automatically when specified alarms fire. This safety net ensures your production systems remain stable even when new deployments encounter unexpected issues.
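The decision logic inside such a rollback function can be small. In this sketch the thresholds mirror the 1% error rate and 500 ms latency mentioned earlier, and the function returns the arguments for `update_endpoint` pointing the endpoint back at its previous configuration. It assumes your deployment pipeline records an ordered history of endpoint configuration names, which is a hypothetical convention rather than something SageMaker tracks for you.

```python
def should_roll_back(error_rate, latency_ms, max_error_rate=0.01, max_latency_ms=500.0):
    """True when either health signal is outside its acceptable range."""
    return error_rate > max_error_rate or latency_ms > max_latency_ms


def rollback_request(endpoint_name, config_history):
    """update_endpoint kwargs that restore the previous endpoint config.

    config_history: hypothetical list of endpoint-config names, oldest first;
    the last entry is the one currently live.
    """
    if len(config_history) < 2:
        raise ValueError("no previous endpoint configuration to roll back to")
    return {"EndpointName": endpoint_name, "EndpointConfigName": config_history[-2]}
```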

Blue-green deployment strategies work particularly well for ML model updates. Maintain two identical production environments, deploying new models to the inactive environment first. After thorough testing, switch traffic to the updated environment. Keep the previous version running as a hot standby, ready for immediate rollback if needed.
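SageMaker can run this pattern for you: passing a `DeploymentConfig` to `update_endpoint` provisions the new (green) fleet, shifts traffic in stages, and rolls back automatically if any listed CloudWatch alarm fires during the baking period. This sketch builds a canary configuration. The 10% canary size and the wait times are illustrative, and the alarm names are placeholders for alarms you have already created.

```python
def blue_green_deployment_config(alarm_names, canary_percent=10, wait_s=600):
    """DeploymentConfig for sagemaker update_endpoint (canary blue/green)."""
    return {
        "BlueGreenUpdatePolicy": {
            "TrafficRoutingConfiguration": {
                "Type": "CANARY",
                # Share of capacity that receives traffic first.
                "CanarySize": {"Type": "CAPACITY_PERCENT", "Value": canary_percent},
                # Baking period before the remaining traffic shifts.
                "WaitIntervalInSeconds": wait_s,
            },
            # Keep the old (blue) fleet around this long after the full shift.
            "TerminationWaitInSeconds": 600,
            "MaximumExecutionTimeoutInSeconds": 1800,
        },
        "AutoRollbackConfiguration": {
            "Alarms": [{"AlarmName": name} for name in alarm_names]
        },
    }
```

The update then becomes `sagemaker.update_endpoint(EndpointName="churn-endpoint", EndpointConfigName="churn-config-v8", DeploymentConfig=blue_green_deployment_config([...]))`, with the endpoint and config names being your own.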

MLOps transforms how companies handle machine learning projects, turning chaotic experiments into smooth, automated processes. By building a solid pipeline with AWS services like SageMaker, Lambda, and CloudWatch, you can deploy models faster and keep them running reliably in production. The key is starting with proper data management, setting up continuous integration for your models, and never skipping the monitoring phase.

Getting started doesn’t have to be overwhelming. Pick one small ML project, build your first pipeline following AWS best practices, and focus on automation from day one. Your future self will thank you when you’re scaling models effortlessly instead of scrambling to fix broken deployments. The investment in MLOps infrastructure pays off quickly once you see how much time it saves and how much more confident you become in your model releases.