Machine learning models sitting idle in notebooks don't drive business value. MLOps on AWS gives you the tooling and practices to move models from development into production reliably.

This guide is for data scientists, ML engineers, and DevOps teams who want to build reliable, automated machine learning workflows on AWS. You’ll learn how to transform manual, error-prone processes into scalable ML operations that deliver consistent results.

We’ll walk through setting up your AWS MLOps environment with the right tools and configurations. You’ll discover how to build robust ML pipelines using AWS SageMaker that handle everything from data processing to model deployment automatically.

We’ll also cover implementing ML CI/CD best practices to automate your entire workflow – from code commits to model updates in production. Finally, you’ll see how to scale these automated machine learning workflows across multiple teams and environments without losing control or visibility.

By the end, you’ll have a complete SageMaker MLOps system that turns your ML experiments into production-ready solutions your business can depend on.

Understanding AWS MLOps Fundamentals and Core Benefits

Define MLOps and its critical role in machine learning operations

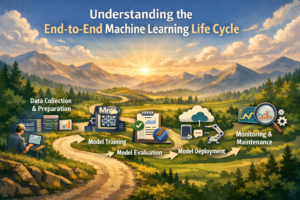

MLOps bridges the gap between machine learning development and production deployment by combining DevOps principles with data science workflows. This practice standardizes the entire ML lifecycle, from data preparation and model training to deployment and monitoring. MLOps ensures reproducible experiments, automated testing, and continuous model improvement. Teams can track model performance, manage data drift, and maintain consistent model versions across environments. The discipline transforms ad-hoc ML projects into reliable, scalable systems that deliver consistent business value while reducing technical debt and operational overhead.

Explore AWS native tools for streamlined ML workflows

AWS provides an MLOps toolset centered on Amazon SageMaker, which covers data labeling (SageMaker Ground Truth), model building, training, and deployment. SageMaker Pipelines orchestrates multi-step ML workflows, and SageMaker Model Registry tracks model versions, metadata, and approval status. CodePipeline adds CI/CD automation, CloudWatch collects model and infrastructure metrics, Lambda functions handle event-driven ML tasks, and S3 stores datasets and model artifacts. Because these services share IAM, CloudTrail, and CloudWatch, they integrate with far less glue code than a mix of third-party tools, and they inherit the security, compliance, and scalability features that enterprise teams require.

Identify key advantages of cloud-based ML pipeline management

Cloud-based ML pipeline management gives you elastic capacity: once auto scaling is configured, compute grows and shrinks with workload demand, so you avoid both idle hardware and infrastructure bottlenecks. Teams get large-scale storage and compute without upfront hardware investments. Shared workspaces and access controls let distributed teams share models, experiments, and datasets securely. S3's durability, combined with the versioning and cross-region replication you configure, protects critical ML assets. Pay-as-you-go pricing charges only for the resources you actually consume. Deploying endpoints in multiple AWS Regions keeps serving latency low for users in different geographies, while spreading instances across Availability Zones within a Region improves availability. Managed services reduce operational overhead, so data scientists can focus on model development rather than infrastructure management.

Recognize common challenges MLOps solves for data science teams

Data science teams often struggle with model deployment bottlenecks, where models work perfectly in notebooks but fail in production environments. Version control becomes chaotic when multiple team members modify models and datasets without proper tracking systems. Manual deployment processes create inconsistencies and human errors that impact model reliability. Monitoring model performance in production remains challenging without proper observability tools. Resource management becomes inefficient when teams can’t predict or control compute costs. MLOps addresses these pain points by providing automated deployment pipelines, comprehensive version control, standardized monitoring frameworks, and cost optimization tools that transform chaotic ML workflows into predictable, reliable operations.

Setting Up Your AWS MLOps Environment for Success

Configure essential AWS services for ML pipeline development

Start by setting up Amazon SageMaker as your primary MLOps platform; it provides end-to-end machine learning capabilities including data preparation, model training, and deployment. Create Amazon S3 buckets for data and model artifacts, with versioning and lifecycle policies enabled. Use Amazon ECR to store custom Docker images for your ML workloads. Host your code in a Git repository (AWS CodeCommit, or GitHub, GitLab, or Bitbucket connected through AWS CodeConnections), then use CodeBuild for automated builds and CodePipeline to orchestrate your ML CI/CD workflows. Enable CloudWatch for monitoring and logging across all services.
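
To make the versioning and lifecycle advice concrete, here is a minimal sketch using the boto3 S3 client's `put_bucket_versioning` and `put_bucket_lifecycle_configuration` calls. The bucket name, the `raw/` prefix, the 30-day transition to Standard-IA, and the 90-day expiry for superseded versions are illustrative assumptions, not recommendations. The client is passed in so the functions can be exercised without AWS credentials.

```python
from typing import Any, Dict


def build_lifecycle_rules(prefix: str, ia_after_days: int = 30,
                          noncurrent_days: int = 90) -> Dict[str, Any]:
    """Return a LifecycleConfiguration dict for put_bucket_lifecycle_configuration.

    The prefix and day counts are illustrative assumptions.
    """
    return {
        "Rules": [
            {
                "ID": f"tier-and-expire-{prefix.strip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Move current objects to Standard-IA after ia_after_days.
                "Transitions": [{"Days": ia_after_days, "StorageClass": "STANDARD_IA"}],
                # Delete superseded object versions after noncurrent_days.
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
            }
        ]
    }


def configure_artifact_bucket(s3, bucket: str, prefix: str = "raw/") -> None:
    """Enable versioning, then apply the lifecycle rules.

    `s3` is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_lifecycle_rules(prefix),
    )
```

In practice you would call `configure_artifact_bucket(boto3.client("s3"), "my-ml-artifacts")` once per bucket (the bucket name is hypothetical), or declare the same settings in your infrastructure-as-code templates.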

Establish proper IAM roles and security permissions

Create dedicated IAM roles for different ML personas: data scientists, ML engineers, and automated services. Give SageMaker execution roles only the permissions they need to reach S3, ECR, and other required services, following the principle of least privilege. If you work across multiple AWS accounts, set up cross-account roles. Use S3 bucket policies (resource-based policies) to control data access. Enable AWS CloudTrail for audit logging, and separate training, inference, and administrative permissions so each role stays within its own security boundary.
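
The sketch below builds the two JSON documents a SageMaker execution role typically needs: a trust policy that lets `sagemaker.amazonaws.com` assume the role, and a permissions policy scoped to one S3 prefix and one ECR repository. The bucket, prefix, and repository ARN are placeholders, and a real training role usually also needs CloudWatch Logs permissions, omitted here for brevity. Serialized with `json.dumps`, the results could be passed to IAM's `create_role` and `put_role_policy`.

```python
from typing import Any, Dict


def sagemaker_trust_policy() -> Dict[str, Any]:
    """Trust policy that lets the SageMaker service assume the execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "sagemaker.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def training_permissions_policy(bucket: str, prefix: str, repo_arn: str) -> Dict[str, Any]:
    """Least-privilege inline policy; bucket, prefix, and repo ARN are placeholders."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read/write objects only under the project prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {   # List the bucket, but only keys under the prefix.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {   # Pull images from a single ECR repository.
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchGetImage",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchCheckLayerAvailability",
                ],
                "Resource": repo_arn,
            },
            {   # GetAuthorizationToken does not support resource-level scoping.
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
        ],
    }
```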

Design scalable infrastructure architecture for ML workloads

Design your AWS MLOps infrastructure with separate environments for development, staging, and production, ideally in separate AWS accounts. Configure Application Auto Scaling on SageMaker inference endpoints, and use Managed Spot Training for cost-effective training jobs. Run training jobs, processing jobs, and endpoints in VPC subnets with security groups that isolate ML workloads. SageMaker real-time endpoints balance traffic across their instances for you, so no separate load balancer is needed; run at least two instances per production endpoint so SageMaker can place them in different Availability Zones. Use AWS Systems Manager Parameter Store or Secrets Manager for configuration management. Plan for disaster recovery with cross-region backups of data and model artifacts, and use blue/green deployments for zero-downtime model updates.
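
SageMaker endpoints scale through Application Auto Scaling rather than EC2 Auto Scaling groups. This minimal sketch registers an endpoint variant as a scalable target and attaches a target-tracking policy on the predefined `SageMakerVariantInvocationsPerInstance` metric. The endpoint name, the `AllTraffic` variant name, the range of 2 to 6 instances, and the target of 100 invocations per instance per minute are assumptions to tune for your own traffic; `aas` would be `boto3.client("application-autoscaling")`.

```python
def configure_endpoint_autoscaling(aas, endpoint: str, variant: str = "AllTraffic",
                                   min_instances: int = 2, max_instances: int = 6,
                                   invocations_per_instance: float = 100.0) -> str:
    """Register an endpoint variant with Application Auto Scaling and add a
    target-tracking policy. All default values are illustrative assumptions."""
    resource_id = f"endpoint/{endpoint}/variant/{variant}"
    dimension = "sagemaker:variant:DesiredInstanceCount"

    # Bound how far the variant may scale in or out.
    aas.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_instances,
        MaxCapacity=max_instances,
    )
    # Keep invocations per instance near the target value.
    aas.put_scaling_policy(
        PolicyName=f"{endpoint}-invocations-target",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,   # scale in conservatively
            "ScaleOutCooldown": 60,   # scale out quickly
        },
    )
    return resource_id
```

A minimum of two instances keeps the endpoint spread across Availability Zones even at low traffic; the asymmetric cooldowns favor fast scale-out over aggressive scale-in.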

Building Robust ML Pipelines with AWS SageMaker

Create Automated Data Preprocessing Workflows

SageMaker Processing jobs transform raw data into ML-ready datasets through scalable preprocessing workflows. Use a custom Docker container or a built-in framework container such as scikit-learn or Spark to handle data cleaning, transformation, and feature extraction. Add these jobs as processing steps in a SageMaker Pipeline, then start the pipeline on a schedule or when new data lands in S3 (for example, through an Amazon EventBridge rule) so your training data stays current.
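
One way to start a pipeline when new data arrives is an Amazon EventBridge rule on S3 "Object Created" events whose target is the SageMaker pipeline itself. This sketch assumes the bucket already has EventBridge notifications turned on (`put_bucket_notification_configuration` with an `EventBridgeConfiguration`), that the role ARN allows `sagemaker:StartPipelineExecution`, and that the pipeline declares a parameter named `InputDataPrefix`; that parameter name is hypothetical. `events` would be `boto3.client("events")`.

```python
import json
from typing import Any, Dict


def s3_object_created_pattern(bucket: str, prefix: str) -> Dict[str, Any]:
    """EventBridge pattern matching new objects under one bucket prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": prefix}]},
        },
    }


def trigger_pipeline_on_upload(events, rule_name: str, bucket: str, prefix: str,
                               pipeline_arn: str, role_arn: str) -> None:
    """Create the rule and point it at a SageMaker pipeline."""
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(s3_object_created_pattern(bucket, prefix)),
        State="ENABLED",
    )
    events.put_targets(
        Rule=rule_name,
        Targets=[{
            "Id": "start-sagemaker-pipeline",
            "Arn": pipeline_arn,
            "RoleArn": role_arn,
            # Hypothetical pipeline parameter; it must exist in the pipeline definition.
            "SageMakerPipelineParameters": {
                "PipelineParameterList": [
                    {"Name": "InputDataPrefix", "Value": f"s3://{bucket}/{prefix}"}
                ]
            },
        }],
    )
```

Every matching upload starts an execution, so if data arrives as many small files, a scheduled rule may be the better fit.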

Implement Model Training and Validation Processes

SageMaker streamlines model training with managed infrastructure: you choose the instance type and count, SageMaker launches the instances for the job and tears them down when it finishes, and distributed training can spread the work across multiple instances. Use SageMaker Automatic Model Tuning for hyperparameter search with either built-in algorithms or your own training code, and implement cross-validation inside your training script where you need it. Then add an automated evaluation step that checks model metrics against predefined thresholds before the model can be registered or deployed.
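
The threshold check can be as simple as the plain-Python gate below, which compares an evaluation report's metrics with lower and upper bounds and returns the reasons for failure. Inside SageMaker Pipelines the same logic is usually expressed with a `ConditionStep` that reads the evaluation step's output through a `PropertyFile`; this standalone version is a sketch, and the metric names (`auc`, `p95_latency_ms`) and thresholds are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Each threshold is (minimum, maximum); None means "no bound on that side".
Bounds = Tuple[Optional[float], Optional[float]]


def evaluate_gate(metrics: Dict[str, float], thresholds: Dict[str, Bounds]) -> List[str]:
    """Return failure messages; an empty list means the model may be registered."""
    failures = []
    for name, (low, high) in thresholds.items():
        if name not in metrics:
            failures.append(f"{name}: missing from evaluation report")
            continue
        value = metrics[name]
        if low is not None and value < low:
            failures.append(f"{name}={value} below minimum {low}")
        if high is not None and value > high:
            failures.append(f"{name}={value} above maximum {high}")
    return failures
```

Treating a missing metric as a failure, rather than skipping it, keeps a broken evaluation step from silently passing the gate.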

Design Efficient Feature Engineering Pipelines

Amazon SageMaker Feature Store centralizes feature creation and management across your organization. Build reusable feature transformation logic that processes streaming and batch data sources consistently. Organize features into feature groups; every record carries an event timestamp, so the offline store keeps a history you can query for point-in-time training data, and teams can discover and reuse existing features. Serve training jobs from the offline store and real-time inference from the low-latency online store, so both read the same feature definitions.
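
Writing to the online store goes through the `sagemaker-featurestore-runtime` client's `put_record` call, which takes every value as a string. This sketch assumes a feature group already exists with a String event-time feature called `event_time` (ISO 8601, UTC) and a record identifier such as the hypothetical `customer_id`; both names are illustrative. If the group also has an offline store enabled, Feature Store replicates the record there for training.

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional


def to_feature_record(features: Dict[str, object], event_time_feature: str = "event_time",
                      now: Optional[datetime] = None) -> List[Dict[str, str]]:
    """Convert feature values into the Record list that PutRecord expects."""
    ts = (now or datetime.now(timezone.utc)).strftime("%Y-%m-%dT%H:%M:%SZ")
    # PutRecord takes every value as a string, whatever the feature type.
    record = [{"FeatureName": name, "ValueAsString": str(value)}
              for name, value in features.items()]
    record.append({"FeatureName": event_time_feature, "ValueAsString": ts})
    return record


def ingest(fs_runtime, feature_group: str, features: Dict[str, object]) -> None:
    """fs_runtime is expected to be boto3.client("sagemaker-featurestore-runtime")."""
    fs_runtime.put_record(FeatureGroupName=feature_group,
                          Record=to_feature_record(features))
```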

Establish Model Versioning and Experiment Tracking Systems

SageMaker Experiments records parameters, metrics, and artifacts for your training runs. Organize runs into experiments and compare model versions with the built-in visualization tools. Register successful models in SageMaker Model Registry, where each version's approval status (for example, pending manual approval or approved) enforces your governance standards before deployment. Attach metadata such as performance benchmarks and business context to each model version to support scalable ML operations across development, staging, and production environments.
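
Registering a candidate and later approving it maps to two boto3 SageMaker calls, `create_model_package` and `update_model_package`. In this minimal sketch the model package group, container image, artifact location, CSV content types, and metadata keys are assumptions. `CustomerMetadataProperties` holds string key-value pairs such as benchmark scores, and the version stays `PendingManualApproval` until a reviewer approves it.

```python
from typing import Dict


def register_model_version(sm, group: str, image_uri: str, model_data_url: str,
                           metadata: Dict[str, str]) -> str:
    """Register a new version in a Model Registry group; `sm` is boto3.client("sagemaker")."""
    response = sm.create_model_package(
        ModelPackageGroupName=group,
        ModelPackageDescription="Candidate from automated training pipeline",
        InferenceSpecification={
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_data_url}],
            # Content types are assumptions for a CSV-in, CSV-out model.
            "SupportedContentTypes": ["text/csv"],
            "SupportedResponseMIMETypes": ["text/csv"],
        },
        ModelApprovalStatus="PendingManualApproval",
        CustomerMetadataProperties=metadata,  # string values only
    )
    return response["ModelPackageArn"]


def approve(sm, model_package_arn: str, reviewer_note: str) -> None:
    """Mark a reviewed version as approved so deployment automation can pick it up."""
    sm.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus="Approved",
        ApprovalDescription=reviewer_note,
    )
```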

Automating ML Workflows with CI/CD Best Practices

Integrate AWS CodePipeline for continuous model deployment

AWS CodePipeline forms the backbone of your MLOps automation strategy, orchestrating the ML CI/CD workflow from source code changes to production deployment. Configure the pipeline to start when model code changes (a source action on your repository) or when new training data arrives (an S3 source action or an EventBridge rule), and have it invoke SageMaker for model building and deployment. Define stages for data validation, model training, testing, and deployment to each environment. Use AWS CodeBuild to run custom scripts for data preprocessing and model evaluation. CodeDeploy does not target SageMaker, so deploy endpoints or batch transform jobs with an AWS CloudFormation deploy action, or with a CodeBuild or Lambda step that calls the SageMaker APIs.
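
A CodeBuild stage can also perform the deployment by calling SageMaker APIs directly. The script sketched below looks up the newest approved package in a Model Registry group and creates a SageMaker model from it. The group name, model name, and role ARN are placeholders, and the remaining steps (creating an endpoint configuration and calling `update_endpoint`) are left out to keep the sketch short.

```python
def latest_approved_package(sm, group: str) -> str:
    """Return the ARN of the most recently created approved model package."""
    resp = sm.list_model_packages(
        ModelPackageGroupName=group,
        ModelApprovalStatus="Approved",
        SortBy="CreationTime",
        SortOrder="Descending",
        MaxResults=1,
    )
    summaries = resp["ModelPackageSummaryList"]
    if not summaries:
        # Fail the build rather than deploying nothing.
        raise RuntimeError(f"No approved model package in group {group!r}")
    return summaries[0]["ModelPackageArn"]


def create_model_from_package(sm, model_name: str, package_arn: str, role_arn: str) -> None:
    """Create a deployable SageMaker model that points at the registered package."""
    sm.create_model(
        ModelName=model_name,
        ExecutionRoleArn=role_arn,
        PrimaryContainer={"ModelPackageName": package_arn},
    )
```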

Implement automated testing strategies for ML models

Automated testing for ML models goes beyond traditional software testing, requiring specialized approaches for data quality, model performance, and inference validation. Create test suites that validate data schema consistency, detect feature drift, and check the statistical properties of input datasets. Run A/B tests (for example, with SageMaker production variants that split endpoint traffic) to compare new model versions against baseline performance, and confirm statistical significance before promotion. Build automated regression tests that score holdout datasets and check for accuracy degradation and bias. Use SageMaker Model Monitor to run scheduled checks on model quality and data distribution in production.

Configure monitoring and alerting for pipeline failures

Robust monitoring and alerting keep ML pipeline failures from affecting business operations and model performance. Set up CloudWatch alarms on key signals such as training job failures, endpoint health, and data processing errors. Route alarm notifications through Amazon SNS so your MLOps team hears immediately when thresholds are breached or pipeline stages fail. Publish custom metrics for model-specific KPIs such as prediction latency, throughput, and accuracy. Use CloudWatch Logs to capture job and pipeline execution output and CloudTrail to record API activity, which makes troubleshooting faster when issues arise.
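
A latency alarm wired to an SNS topic might look like this minimal sketch built on CloudWatch's `put_metric_alarm`. It uses the `ModelLatency` metric that SageMaker endpoints publish in the `AWS/SageMaker` namespace; that metric is reported in microseconds, hence the conversion. The endpoint name, SNS topic ARN, 500 ms threshold, and "3 of 5 one-minute datapoints" rule are assumptions; `cw` would be `boto3.client("cloudwatch")`.

```python
def create_latency_alarm(cw, endpoint: str, sns_topic_arn: str,
                         threshold_ms: float = 500.0, variant: str = "AllTraffic") -> str:
    """Alarm when average model latency stays above threshold_ms; notify via SNS."""
    alarm_name = f"{endpoint}-{variant}-model-latency"
    cw.put_metric_alarm(
        AlarmName=alarm_name,
        Namespace="AWS/SageMaker",
        MetricName="ModelLatency",
        Dimensions=[
            {"Name": "EndpointName", "Value": endpoint},
            {"Name": "VariantName", "Value": variant},
        ],
        Statistic="Average",
        Period=60,
        EvaluationPeriods=5,
        DatapointsToAlarm=3,            # 3 breaching minutes out of the last 5
        Threshold=threshold_ms * 1000,  # ModelLatency is in microseconds
        ComparisonOperator="GreaterThanThreshold",
        TreatMissingData="notBreaching",  # no traffic is not an outage
        AlarmActions=[sns_topic_arn],
    )
    return alarm_name
```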

Establish rollback mechanisms for model performance issues

Quick rollback capabilities protect your production systems when a newly deployed model underperforms or causes unexpected issues. SageMaker deployment guardrails support blue/green endpoint updates: the previous fleet keeps running while traffic shifts to the new one (all at once, canary, or linear), and it is retained through a baking period before termination. Attach CloudWatch alarms to the update, such as an error-rate spike or a custom accuracy metric dropping below its threshold, so SageMaker rolls traffic back automatically if one fires. Keep previous approved versions in Model Registry so you can redeploy them, and write rollback playbooks that document step-by-step procedures for failure scenarios such as data corruption, model degradation, and infrastructure issues. To run two versions side by side, host them as production variants on one endpoint and shift traffic by updating variant weights; multi-model endpoints, by contrast, are designed to serve many models from shared instances, with each request naming its target model.
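
Blue/green updates with automatic rollback are configured through the `DeploymentConfig` argument of `update_endpoint`. The sketch below routes a canary sized at 10% of capacity to the new fleet, waits `bake_seconds` before shifting the rest, keeps the old fleet for another `bake_seconds`, and asks SageMaker to roll back if any listed CloudWatch alarm fires. The endpoint, endpoint configuration, and alarm names, as well as the 10-minute bake time, are assumptions; `sm` would be `boto3.client("sagemaker")`.

```python
from typing import List


def blue_green_update(sm, endpoint: str, new_config: str, rollback_alarms: List[str],
                      canary_percent: int = 10, bake_seconds: int = 600) -> None:
    """Move an endpoint to a new endpoint config with canary traffic shifting."""
    sm.update_endpoint(
        EndpointName=endpoint,
        EndpointConfigName=new_config,
        DeploymentConfig={
            "BlueGreenUpdatePolicy": {
                "TrafficRoutingConfiguration": {
                    "Type": "CANARY",
                    "CanarySize": {"Type": "CAPACITY_PERCENT", "Value": canary_percent},
                    # How long the canary bakes before the remaining traffic shifts.
                    "WaitIntervalInSeconds": bake_seconds,
                },
                # Keep the old (blue) fleet this long after the full shift.
                "TerminationWaitInSeconds": bake_seconds,
                "MaximumExecutionTimeoutInSeconds": 3600,
            },
            # Any of these alarms entering ALARM triggers an automatic rollback.
            "AutoRollbackConfiguration": {
                "Alarms": [{"AlarmName": name} for name in rollback_alarms]
            },
        },
    )
```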

Scaling ML Operations Across Teams and Environments

Deploy Multi-Environment ML Pipelines for Development and Production

Separate environments for development, staging, and production keep your AWS MLOps pipeline consistent across the entire machine learning lifecycle. Use SageMaker Model Registry to version models and promote them between environments, and define each environment's infrastructure as code with AWS CloudFormation or the AWS CDK. Teams can then test model changes safely before production deployment, reducing the risk of failures reaching users.
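
Promotion rules are easier to audit when they live in code. The hypothetical policy below allows only one-step promotions (dev to staging, staging to prod) and requires the Model Registry approval status `Approved` before anything reaches production. The environment names and the rule itself are assumptions you would adapt, for example as a check in the pipeline stage that deploys to each environment.

```python
from typing import List

# Hypothetical environment names, in promotion order.
PROMOTION_ORDER: List[str] = ["dev", "staging", "prod"]


def can_promote(approval_status: str, source: str, target: str) -> bool:
    """Allow only one-step promotions; only approved packages may reach prod."""
    if source not in PROMOTION_ORDER or target not in PROMOTION_ORDER:
        raise ValueError(f"unknown environment: {source!r} -> {target!r}")
    one_step = PROMOTION_ORDER.index(target) == PROMOTION_ORDER.index(source) + 1
    if target == "prod":
        return one_step and approval_status == "Approved"
    return one_step
```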

Implement Resource Optimization Strategies for Cost-Effective Scaling

Scalable ML operations require smart resource management to control costs while maintaining performance. Use endpoint auto scaling, Managed Spot Training for training jobs (with checkpointing so interrupted jobs can resume), and scheduled shutdowns for non-production environments. Use AWS Cost Explorer together with a consistent cost-allocation tagging strategy to monitor spending patterns. For many low-traffic models, consider multi-model endpoints, which serve them from shared instances and can significantly reduce hosting costs.
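
A Managed Spot Training job differs from an on-demand one in three request fields: `EnableManagedSpotTraining`, a `MaxWaitTimeInSeconds` at least as large as `MaxRuntimeInSeconds`, and a `CheckpointConfig` so interrupted jobs can resume. The builder below returns a `create_training_job` request. The image URI, S3 paths, instance type, time limits, and `cost-center` tag are placeholders, and resuming from checkpoints also requires your training code to save and load them.

```python
from typing import Any, Dict


def spot_training_request(job_name: str, image_uri: str, role_arn: str,
                          train_s3: str, output_s3: str, checkpoint_s3: str,
                          max_runtime_s: int = 3600, max_wait_s: int = 7200,
                          instance_type: str = "ml.m5.xlarge") -> Dict[str, Any]:
    """Build kwargs for boto3's create_training_job with Managed Spot Training."""
    if max_wait_s < max_runtime_s:
        raise ValueError("MaxWaitTimeInSeconds must be >= MaxRuntimeInSeconds")
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": 1, "VolumeSizeInGB": 50},
        "EnableManagedSpotTraining": True,
        # MaxWait covers both spot waiting time and actual training time.
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_s,
                              "MaxWaitTimeInSeconds": max_wait_s},
        "CheckpointConfig": {"S3Uri": checkpoint_s3},
        "Tags": [{"Key": "cost-center", "Value": "ml-platform"}],  # hypothetical tag
    }
```

Usage would be `boto3.client("sagemaker").create_training_job(**spot_training_request(...))`; the tag lets Cost Explorer attribute the job's spend once the tag key is activated for cost allocation.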

Establish Governance Frameworks for Model Lifecycle Management

Building robust governance around your MLOps pipeline starts with clear model approval processes and automated compliance checks. Implement model bias detection using SageMaker Clarify, establish data lineage tracking, and create approval workflows for model promotions. Monitor production model quality with SageMaker Model Monitor, which can publish its results as CloudWatch metrics, and define clear criteria for retiring models. Document model decisions and maintain audit trails for regulatory compliance.

Create Collaborative Workflows for Cross-Functional ML Teams

Machine learning automation works best when data scientists, engineers, and business stakeholders collaborate effectively. Use SageMaker Studio as a shared workspace where team members can access notebooks, experiments, and model artifacts. Implement Git-based version control for code and establish clear handoff procedures between data science and engineering teams. Create shared feature stores to promote reusability and maintain consistent data preprocessing across projects.

Monitor Performance Metrics and Optimize Pipeline Efficiency

Effective monitoring goes beyond model accuracy to include pipeline performance, data quality, and resource utilization. Set up CloudWatch dashboards to track SageMaker training job durations, endpoint latency, and data drift results. Automate alerts for model performance degradation and establish feedback loops for continuous improvement. To find bottlenecks, compare step durations across SageMaker Pipelines executions, and use AWS X-Ray to trace the Lambda functions and APIs around your inference path.
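
To see where a pipeline execution spends its time, you can page through `list_pipeline_execution_steps` and rank steps by duration. This sketch assumes `sm` is `boto3.client("sagemaker")` and skips steps that have not finished; comparing the output across executions shows which step to optimize first.

```python
from typing import Any, Dict, List, Tuple


def step_durations(sm, execution_arn: str) -> List[Tuple[str, float]]:
    """Return (step name, seconds) for finished steps, slowest first."""
    durations: List[Tuple[str, float]] = []
    kwargs: Dict[str, Any] = {"PipelineExecutionArn": execution_arn}
    while True:
        page = sm.list_pipeline_execution_steps(**kwargs)
        for step in page["PipelineExecutionSteps"]:
            start, end = step.get("StartTime"), step.get("EndTime")
            if start and end:  # skip steps that are still running or never started
                durations.append((step["StepName"], (end - start).total_seconds()))
        token = page.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token  # fetch the next page
    return sorted(durations, key=lambda item: item[1], reverse=True)
```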

Building successful ML pipelines on AWS doesn’t have to be overwhelming when you break it down into manageable steps. From setting up your environment correctly to implementing robust automation with SageMaker, each component works together to create a system that can handle real-world machine learning challenges. The key is starting with solid fundamentals and gradually adding complexity as your team grows more comfortable with the tools.

The real magic happens when you combine AWS’s powerful infrastructure with smart CI/CD practices and proper scaling strategies. Your ML models become more reliable, your team becomes more productive, and your organization can actually deliver on those ambitious AI promises. Start small, automate what you can, and don’t be afraid to experiment with different approaches until you find what works best for your specific use case.