Failures in a microservices system can cascade within seconds and take down critical business operations. The circuit breaker pattern works like the electrical circuit breaker in your home: it automatically stops the flow when something goes wrong, protecting your distributed system from a complete outage.

This guide is for software architects, backend developers, and DevOps engineers who need to build fault-tolerant systems that handle real-world failures gracefully. You’ll learn practical circuit breaker implementation techniques that prevent one failing service from crashing your entire application.

We’ll walk through the fundamentals of the circuit breaker design pattern and show how circuit breaker state management works in practice. You’ll see popular tools such as Hystrix and its modern alternatives, along with circuit breaker configuration examples you can adapt. Finally, we’ll cover monitoring strategies that keep a resilient microservices architecture running smoothly in production.

Whether you’re dealing with slow database calls, unresponsive APIs, or network timeouts, circuit breakers change how your system handles failures, turning potential disasters into manageable hiccups.

Understanding Circuit Breaker Pattern Fundamentals

Core concept and purpose in distributed systems

The circuit breaker pattern acts as a safety switch for your distributed architecture. When a downstream service starts failing or responding slowly, the circuit breaker steps in to prevent cascading failures that could bring down your entire system. Think of it as a protective barrier that monitors service health and decides, call by call, whether to let a request through or fail fast.

In microservices environments, services constantly communicate with each other. Without proper protection, when one service goes down, it can create a domino effect where upstream services get overwhelmed waiting for responses that never come. The circuit breaker design pattern solves this by tracking failure rates and response times, automatically cutting off traffic to unhealthy services before they drag everything else down.

The pattern originated from electrical engineering but translates perfectly to software systems. Just like an electrical circuit breaker trips when there’s too much current, a software circuit breaker “trips” when there are too many failures or timeouts from a particular service dependency.

Key components and operational states

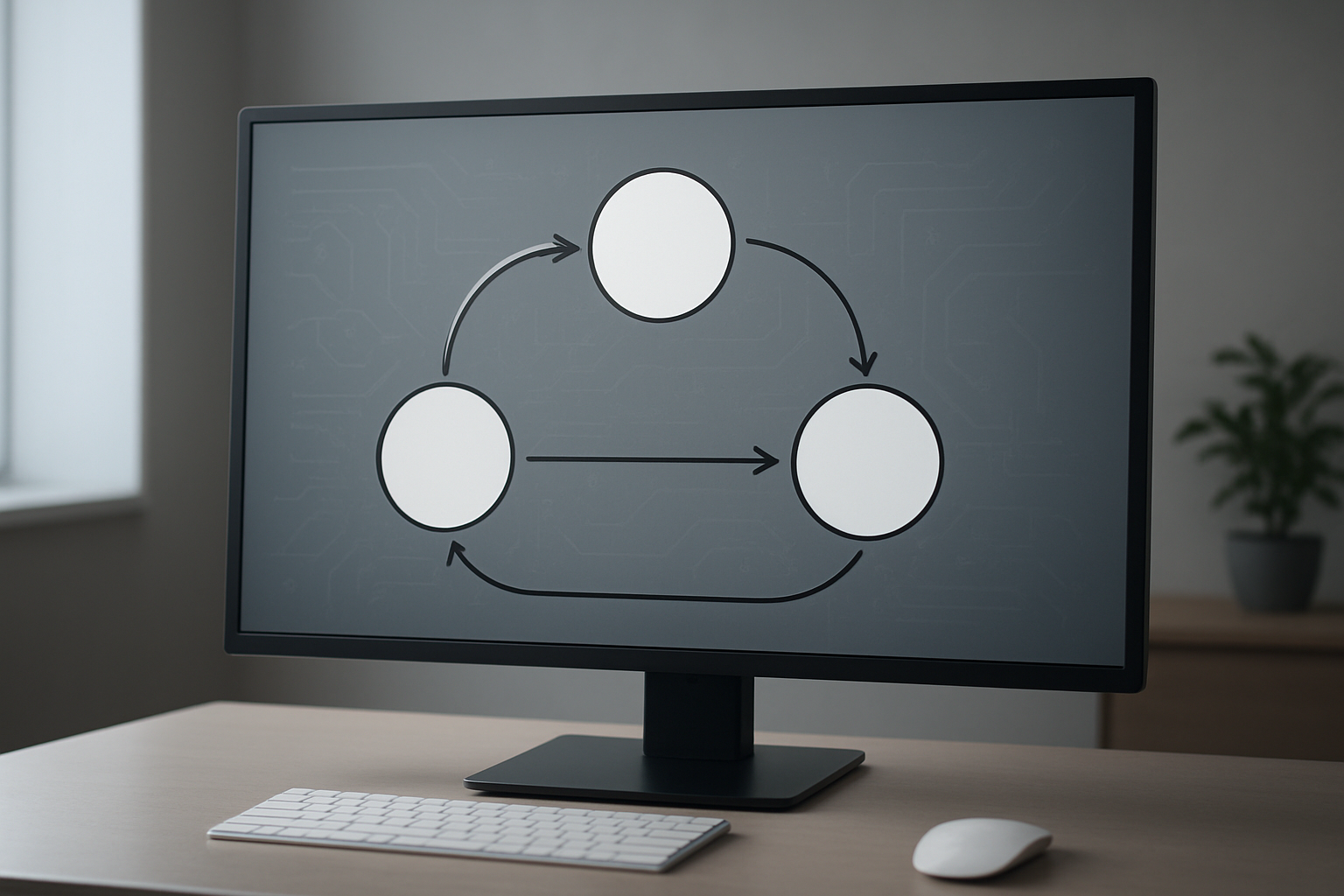

A circuit breaker operates through three distinct states: Closed, Open, and Half-Open. Each state serves a specific purpose in maintaining system stability.

Closed State: This is the normal operating mode where requests flow freely to the downstream service. The circuit breaker monitors every call, tracking success rates, response times, and failure counts. Everything appears healthy, and users experience normal application behavior.

Open State: When failure thresholds are exceeded, the circuit breaker trips to this state. All requests to the failing service are immediately rejected without even attempting the call. This prevents resource exhaustion and allows the downstream service time to recover. Users might see fallback responses or cached data during this period.

Half-Open State: After a predetermined timeout period, the circuit breaker cautiously allows a limited number of test requests through. If these succeed, the circuit gradually returns to the Closed state. If they fail, it immediately goes back to Open state.

The circuit breaker also includes several key components:

- Failure threshold counters that track error rates

- Timeout configurations for determining when calls take too long

- Recovery timers that control when to attempt service restoration

- Fallback mechanisms that provide alternative responses during outages
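The three states and the components listed above can be sketched in a few dozen lines of plain Java. This is a minimal illustration, not how any particular library implements the pattern: the class name `SimpleCircuitBreaker`, the consecutive-failure threshold, and the injected millisecond clock are all assumptions made for the example. It also does not limit how many trial calls pass through while half-open.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal three-state circuit breaker; names and thresholds are illustrative. */
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that trip the breaker
    private final long openMillis;      // recovery timer: how long to stay open
    private final LongSupplier clock;   // injected time source, in milliseconds

    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** Returns the current state, moving OPEN to HALF_OPEN once the wait has elapsed. */
    synchronized State state() {
        if (state == State.OPEN && clock.getAsLong() - openedAt >= openMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    /** Runs the action unless the breaker is open; uses the fallback on rejection or failure. */
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state() == State.OPEN) {
            return fallback.get(); // fail fast without touching the dependency
        }
        try {
            T result = action.get();
            onSuccess();
            return result;
        } catch (RuntimeException e) {
            onFailure();
            return fallback.get();
        }
    }

    private synchronized void onSuccess() {
        consecutiveFailures = 0;
        state = State.CLOSED;
    }

    private synchronized void onFailure() {
        consecutiveFailures++;
        // A failed trial call in HALF_OPEN reopens immediately.
        if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
            state = State.OPEN;
            openedAt = clock.getAsLong();
        }
    }
}
```

Passing the clock in as a `LongSupplier` (for example `System::currentTimeMillis` in production) keeps the recovery timer testable without real waiting.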

Failure detection mechanisms

Circuit breaker state management relies on sophisticated failure detection that goes beyond simple error counting. Modern implementations track multiple metrics to make intelligent decisions about service health.

Response Time Monitoring: Slow responses can be just as damaging as outright failures. Circuit breakers monitor average response times and percentile distributions. When response times consistently exceed configured thresholds, the breaker may trip even if no explicit errors occur.

Error Rate Calculations: The system tracks various types of failures including HTTP 5xx errors, network timeouts, and connection refused errors. Most implementations use sliding window approaches rather than simple counters to ensure recent performance has more weight than historical data.

Success Rate Thresholds: Instead of just counting failures, many circuit breakers focus on success rates. For example, if success rates drop below 50% over a 30-second window, the breaker trips. This approach provides more nuanced failure detection.

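Volume-Based Decisions: Smart circuit breakers consider request volume when making tripping decisions. A few failures during low-traffic periods might not trigger the breaker, because too few calls have been observed to judge the failure rate reliably. Once a minimum number of calls has been recorded in the window, the breaker evaluates the failure rate and opens if it exceeds the threshold.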
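The error-rate and volume checks described above can be combined in a count-based sliding window. The sketch below is an illustration under assumed names (`SlidingWindowFailureRate`, `shouldTrip`) and is not taken from any library; it keeps the last N outcomes and only evaluates the failure rate once a minimum number of calls has been seen.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Count-based sliding window over the most recent call outcomes (illustrative). */
class SlidingWindowFailureRate {
    private final int windowSize;              // how many recent calls to remember
    private final int minimumCalls;            // volume needed before the rate is trusted
    private final double failureRateThreshold; // percent, e.g. 50.0
    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call
    private int failures = 0;

    SlidingWindowFailureRate(int windowSize, int minimumCalls, double failureRateThreshold) {
        this.windowSize = windowSize;
        this.minimumCalls = minimumCalls;
        this.failureRateThreshold = failureRateThreshold;
    }

    /** Records one call outcome and evicts the oldest outcome once the window is full. */
    synchronized void record(boolean failed) {
        outcomes.addLast(failed);
        if (failed) {
            failures++;
        }
        if (outcomes.size() > windowSize && outcomes.removeFirst()) {
            failures--; // the evicted outcome was a failure
        }
    }

    synchronized double failureRatePercent() {
        return outcomes.isEmpty() ? 0.0 : 100.0 * failures / outcomes.size();
    }

    /** Trips only when enough calls were observed AND the failure rate is too high. */
    synchronized boolean shouldTrip() {
        return outcomes.size() >= minimumCalls
                && failureRatePercent() >= failureRateThreshold;
    }
}
```

With a window of 10, a minimum of 5 calls, and a 50% threshold, three failures in a row do not trip the breaker on their own; the decision is deferred until five calls have been recorded.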

Recovery and reset procedures

The recovery process determines how quickly and safely your microservices fault tolerance mechanisms restore normal operations. Getting this wrong can lead to oscillating circuit states or premature recovery attempts that cause additional instability.

Exponential Backoff: Many production systems implement exponential backoff, where the wait time between recovery attempts grows each time a recovery attempt fails. This prevents the circuit breaker from hammering a struggling service with constant retry attempts.
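As a rough sketch of the arithmetic, the wait before the n-th recovery attempt can be computed as base × multiplier^(n−1), capped at a maximum. The class and parameter names below are hypothetical and chosen only for this example.

```java
import java.time.Duration;

/** Computes how long the breaker stays open before the next recovery attempt (illustrative). */
class OpenStateBackoff {
    /**
     * @param consecutiveOpens how many times in a row the breaker has opened (1 = first time)
     * @param base             wait used the first time the breaker opens
     * @param multiplier       growth factor applied for each further failed recovery
     * @param max              upper bound on the wait
     */
    static Duration waitFor(int consecutiveOpens, Duration base, double multiplier, Duration max) {
        if (consecutiveOpens < 1) {
            throw new IllegalArgumentException("consecutiveOpens must be >= 1");
        }
        double millis = base.toMillis() * Math.pow(multiplier, consecutiveOpens - 1);
        // Cap the result so repeated failures cannot push the wait out indefinitely.
        return millis >= max.toMillis() ? max : Duration.ofMillis((long) millis);
    }
}
```

With a 30-second base, a multiplier of 2, and a 5-minute cap, successive waits are 30 s, 60 s, 120 s, 240 s, and then 300 s for every attempt after that.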

Gradual Traffic Restoration: When transitioning from Open to Closed state, sophisticated implementations don’t immediately flood the recovered service with full traffic. Instead, they gradually increase the request volume, monitoring success rates at each step.

Health Check Integration: Advanced circuit breaker monitoring setups integrate with dedicated health check endpoints rather than relying solely on business request success rates. This allows for more precise recovery timing based on actual service readiness.

Manual Override Capabilities: Production systems need manual controls that allow operations teams to force circuit states during maintenance windows or emergency situations. This includes the ability to keep circuits open during planned maintenance or force them closed when you know a service has recovered but the automatic detection hasn’t caught up yet.

The reset procedures also include cooldown periods that prevent rapid state transitions. Without proper cooldowns, circuit breakers can become unstable, rapidly switching between states and creating unpredictable application behavior.

Business Benefits of Circuit Breaker Implementation

Preventing cascading failures across services

Cascading failures represent one of the most dangerous scenarios in distributed microservices architecture. When one service becomes unavailable, dependent services can quickly exhaust their resources by continuously retrying failed requests, creating a domino effect that brings down entire system segments.

The circuit breaker acts as a protective barrier between services. When a downstream service starts failing, the circuit breaker stops sending requests to that service, preventing the failure from spreading upstream. This isolation ensures that healthy services remain operational even when their dependencies encounter issues.

Consider an e-commerce platform where the payment service experiences downtime. Without circuit breakers, the order service would keep attempting payment processing, consuming threads and memory while waiting for timeouts. These blocked resources would eventually cause the order service to fail, potentially affecting inventory management and user notification services. Circuit breakers eliminate this chain reaction by failing fast when payment issues are detected.

The pattern also provides graceful degradation options. Instead of complete service failure, applications can fall back to cached data, default responses, or alternative service implementations while the primary service recovers.

Improved system resilience and availability

Circuit breaker implementation significantly enhances overall system resilience by introducing intelligent failure handling mechanisms. Traditional microservices without circuit breakers often experience prolonged downtimes because failures create resource bottlenecks that prevent services from recovering efficiently.

With circuit breakers in place, services can maintain higher availability percentages even during partial system failures. The pattern enables services to:

- Recover faster from failures by reducing load on struggling dependencies

- Maintain core functionality through fallback mechanisms and cached responses

- Isolate problems to specific service boundaries rather than system-wide outages

- Adapt automatically to changing service health conditions

As an illustrative figure, a properly configured circuit breaker setup might lift availability from 95% to 99.5% or higher, though actual gains depend on the system and its failure modes. The pattern works especially well in cloud environments where services may experience temporary network issues, resource constraints, or deployment-related interruptions.

Circuit breakers also enable better capacity planning by providing clear visibility into service dependencies and failure patterns. This insight helps teams identify bottlenecks and strengthen weak points in their resilient microservices architecture before they cause significant downtime.

Reduced resource consumption during outages

Resource efficiency becomes critical during service outages, and circuit breakers substantially improve how systems handle these scenarios. Without circuit breakers, callers of a failing service burn computational resources on repeated timeout cycles, exhausted thread pools, and memory held by pending requests.

Implementing circuit breakers reduces resource waste by:

- Eliminating unnecessary network calls to known failing services

- Freeing up connection pools that would otherwise be blocked on timeouts

- Reducing CPU usage from processing doomed requests

- Preventing memory bloat caused by queued failed operations

During a database outage, for example, application servers typically accumulate hundreds of pending database connections, each consuming memory and thread resources while waiting for timeouts. Circuit breakers detect this condition early and reject new database requests immediately, allowing servers to maintain performance for other operations.

The resource savings extend beyond immediate failure scenarios. Circuit breakers help services recover more quickly by ensuring adequate resources remain available for handling successful requests and processing recovery operations. This efficiency gain becomes particularly valuable in containerized environments where resource limits are strictly enforced.

Load balancers and auto-scaling systems also benefit from circuit breaker implementations, as they receive more accurate health signals and can make better scaling decisions based on actual service capacity rather than resource exhaustion symptoms.

Circuit Breaker States and State Transitions

Closed State Behavior and Monitoring

The closed state represents normal operation. During this phase, all requests flow through to the downstream service without interruption. The circuit breaker acts like a monitoring proxy, tracking success rates, response times, and failure counts. This continuous monitoring is the basis for every later state decision.

Key metrics tracked include:

- Request volume and throughput

- Response latency percentiles

- Error rates and exception types

- Timeout occurrences

- Resource consumption patterns

The circuit breaker maintains a rolling window of recent requests, typically covering the last 10-20 seconds or last 100-1000 requests. This sliding window approach ensures decisions reflect current system health rather than historical data. When error rates stay below configured thresholds (usually 50-60%), the circuit remains closed and operations continue normally.

Monitoring during the closed state requires careful attention to baseline metrics. Teams should establish clear SLAs and configure alerting when metrics approach threshold values. This proactive monitoring prevents sudden service degradation and enables early intervention.

Open State Activation and Request Blocking

When failures exceed the configured thresholds, the circuit breaker transitions to the open state and immediately blocks all requests to the failing service. This protective mechanism prevents cascading failures across the distributed system. Instead of forwarding requests to a struggling service, the circuit breaker returns predetermined responses or cached data.

The open state activation triggers include:

- Error rate exceeding threshold percentage

- Consecutive failure count surpassing limits

- Response time degradation beyond acceptable levels

- Resource exhaustion indicators

During the open state, requests fail fast with minimal resource consumption. This behavior protects both the failing service and calling applications from resource starvation. The circuit breaker typically returns cached responses, default values, or graceful error messages to maintain user experience.

Open state duration follows configurable timeout periods, usually ranging from 30 seconds to several minutes. This cooling-off period allows the downstream service time to recover from issues like temporary network problems, database connection pool exhaustion, or high load spikes.

Half-Open State Testing and Validation

The half-open state serves as a testing phase where the circuit breaker cautiously probes the downstream service’s health. After the open state timeout expires, the circuit breaker allows a limited number of requests through to test service availability. This controlled approach prevents immediate system overload if the service hasn’t fully recovered.

Testing characteristics in half-open state:

- Limited request volume (typically 1-5 requests)

- Shorter timeout periods for faster decision making

- Strict success criteria for state transitions

- Immediate fallback to open state on failures

The circuit breaker monitors these test requests closely. Success leads to closed state restoration, while failures trigger an immediate return to the open state with an extended timeout period. This validation process ensures that only genuinely recovered services resume normal traffic handling.
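The half-open rules above (a small number of permitted trial calls, strict success criteria, and an immediate return to open on failure) can be sketched as a small gate object. `HalfOpenTrial` and its method names are hypothetical; a real breaker would create one such object each time it enters the half-open state.

```java
/** Tracks the trial calls of one half-open phase (illustrative sketch). */
class HalfOpenTrial {
    enum Outcome { PENDING, CLOSE, REOPEN }

    private final int permittedCalls; // e.g. 1-5 trial requests
    private int started = 0;
    private int succeeded = 0;
    private boolean failed = false;

    HalfOpenTrial(int permittedCalls) {
        this.permittedCalls = permittedCalls;
    }

    /** Returns true if the caller may send a trial request; all other calls are rejected. */
    synchronized boolean tryAcquire() {
        if (failed || started >= permittedCalls) {
            return false;
        }
        started++;
        return true;
    }

    /** Reports the result of a trial request that was admitted by tryAcquire(). */
    synchronized void onResult(boolean success) {
        if (success) {
            succeeded++;
        } else {
            failed = true; // a single failure sends the breaker back to open
        }
    }

    /** CLOSE only when every permitted trial call has succeeded. */
    synchronized Outcome outcome() {
        if (failed) {
            return Outcome.REOPEN;
        }
        return succeeded >= permittedCalls ? Outcome.CLOSE : Outcome.PENDING;
    }
}
```

Requiring every trial call to succeed is the strictest possible criterion; some implementations instead apply a failure-rate threshold to the trial calls.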

Some implementations use exponential backoff during repeated half-open attempts, gradually increasing the wait time between tests. This approach reduces unnecessary load on struggling services while maintaining reasonable recovery detection.

Automatic State Transition Triggers

Circuit breaker state management relies on automated triggers that respond to real-time system conditions. These triggers eliminate manual intervention requirements and ensure rapid response to changing service health. The automation logic considers multiple factors simultaneously to make accurate state transition decisions.

Primary transition triggers include:

- Closed to Open: Error rate thresholds, consecutive failures, timeout accumulation

- Open to Half-Open: Configurable time-based intervals, exponential backoff schedules

- Half-Open to Closed: Successful test request completion, health check validation

- Half-Open to Open: Test request failures, timeout occurrences
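The four transitions listed above form a small state machine, which can be written as a pure function from the current state and an event to the next state. The event names here are assumptions made for the sketch; they stand in for whatever combination of triggers a given implementation uses.

```java
/** Pure transition table for the circuit breaker state machine (illustrative). */
class BreakerTransitions {
    enum State { CLOSED, OPEN, HALF_OPEN }

    enum Event { THRESHOLD_EXCEEDED, WAIT_ELAPSED, TRIAL_SUCCEEDED, TRIAL_FAILED }

    /** Returns the next state; events that do not apply to the current state are ignored. */
    static State next(State current, Event event) {
        switch (current) {
            case CLOSED:
                // Closed to Open: error rate, consecutive failures, or timeouts crossed a limit.
                return event == Event.THRESHOLD_EXCEEDED ? State.OPEN : State.CLOSED;
            case OPEN:
                // Open to Half-Open: the configured wait (possibly with backoff) has passed.
                return event == Event.WAIT_ELAPSED ? State.HALF_OPEN : State.OPEN;
            case HALF_OPEN:
                if (event == Event.TRIAL_SUCCEEDED) {
                    return State.CLOSED;
                }
                if (event == Event.TRIAL_FAILED) {
                    return State.OPEN;
                }
                return State.HALF_OPEN;
            default:
                throw new IllegalStateException("Unknown state: " + current);
        }
    }
}
```

Keeping the table free of side effects makes it easy to test exhaustively and gives one place to publish state-change events from.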

Some advanced setups go further and adapt thresholds based on historical patterns and service behavior, for example with statistical or machine learning models. The goal is to distinguish normal variation from genuine problems, reducing false positives and improving overall system stability.

Configuration flexibility allows teams to tune triggers for specific service characteristics. High-volume services might require different thresholds than low-traffic endpoints. Critical services may need more conservative settings, while non-essential services can tolerate higher failure rates before activation.

The trigger system should integrate with existing monitoring infrastructure, publishing state change events to observability platforms. This integration enables comprehensive circuit breaker monitoring across entire microservices ecosystems, supporting both operational awareness and post-incident analysis.

Popular Circuit Breaker Libraries and Tools

Netflix Hystrix Features and Capabilities

Netflix Hystrix popularized the circuit breaker pattern in microservices by introducing comprehensive fault tolerance mechanisms for distributed systems. This battle-tested library handles service failures through automatic circuit breaker state management, thread isolation, and request caching. Hystrix provides real-time monitoring through metrics streams and offers a dashboard that visualizes circuit breaker states, request volumes, and error rates across your microservices architecture.

Key features include:

- Fallback mechanisms: Automatic graceful degradation when services fail

- Bulkhead isolation: Separate thread pools prevent cascading failures

- Request collapsing: Batches similar requests to reduce network overhead

- Timeout handling: Configurable timeouts prevent hanging requests

- Circuit breaker monitoring: Built-in metrics collection and reporting

While Netflix moved Hystrix to maintenance mode in 2018, it remains widely deployed in production environments. The library excels in Java-based microservices where proven stability matters more than cutting-edge features.

Resilience4j Modern Implementation Advantages

Resilience4j represents the next generation of resilient microservices architecture tools, designed as a lightweight alternative to Hystrix. Built with Java 8+ functional programming principles, it offers better performance and more flexible configuration options. Unlike Hystrix’s dependency on RxJava, Resilience4j works seamlessly with CompletableFuture, reactive streams, and traditional synchronous code.

Modern advantages include:

- Modular design: Use only the components you need (circuit breaker, retry, rate limiter)

- Few external dependencies: Minimal footprint with optional integrations

- Functional API: Clean, composable interfaces for complex resilience patterns

- Better metrics: Integration with Micrometer for modern observability stacks

- Reactive support: Native compatibility with Spring WebFlux and reactive frameworks

The library supports advanced features like sliding window-based failure detection and customizable failure predicates, making it ideal for microservices circuit breaker implementation in cloud-native applications.

Spring Cloud Circuit Breaker Integration Options

Spring Cloud Circuit Breaker provides an abstraction layer that lets you switch between different circuit breaker implementations without changing application code. This approach supports both Hystrix and Resilience4j backends, plus custom implementations through a unified API.

Integration options include:

- Annotation-based configuration: Simple @CircuitBreaker annotations for method-level protection

- Reactive support: Built-in integration with Spring WebFlux for non-blocking applications

- Auto-configuration: Automatic setup with sensible defaults for Spring Boot applications

- Customizable fallbacks: Type-safe fallback methods with full Spring context access

The abstraction layer simplifies circuit breaker design pattern implementation by handling configuration management and providing consistent behavior across different underlying libraries. This flexibility proves valuable when migrating from Hystrix to modern alternatives or when different teams prefer different implementations.

Language-Specific Library Comparisons

Different programming languages offer various circuit breaker libraries, each with unique strengths for distributed systems resilience:

Python Options:

- PyBreaker: Simple, lightweight implementation with basic circuit breaker functionality

- Circuit Breaker: More feature-rich with timeout handling and custom failure detection

Node.js Solutions:

- Opossum: Feature-complete circuit breaker with promise support and metrics

- Circuit-breaker-js: Lightweight option for basic use cases

.NET Implementations:

- Polly: Comprehensive resilience library with circuit breakers, retries, and timeouts

- Microsoft.Extensions.Http.Polly: Integration package for HttpClient scenarios

Go Libraries:

- Hystrix-go: Port of Netflix Hystrix concepts to Go

- Sony/gobreaker: Simple, efficient implementation following Go idioms

Language Considerations:

- Java ecosystem offers the most mature options (Hystrix, Resilience4j)

- .NET Polly provides excellent integration with Microsoft frameworks

- Node.js libraries focus on promise-based APIs for async operations

- Go implementations emphasize simplicity and performance

- Python options range from basic to feature-rich depending on requirements

Choose libraries based on your team’s expertise, existing technology stack, and specific resilience requirements rather than popularity alone.

Implementation Strategies and Best Practices

Timeout Configuration and Threshold Setting

Proper timeouts and thresholds form the backbone of an effective circuit breaker implementation. Your timeout values should reflect realistic response times based on your service’s historical performance data. Start by analyzing your service’s 95th percentile response times during normal operations, then add a buffer of 20-30% to account for minor fluctuations.
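The timeout rule of thumb above is simple arithmetic: take the 95th percentile of observed latencies and add a buffer. The sketch below uses the nearest-rank percentile method, which is one of several common definitions; the class and method names are hypothetical.

```java
import java.util.Arrays;

/** Derives a timeout from observed latencies: p95 plus a safety buffer (illustrative). */
class TimeoutEstimator {
    /** Nearest-rank percentile of the given latency samples, in milliseconds. */
    static long percentile(long[] latenciesMillis, double percentile) {
        if (latenciesMillis.length == 0) {
            throw new IllegalArgumentException("no latency samples");
        }
        long[] sorted = latenciesMillis.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(percentile * sorted.length / 100.0);
        return sorted[Math.max(rank, 1) - 1];
    }

    /** p95 latency multiplied by (1 + bufferFraction), e.g. 0.2 for a 20% buffer. */
    static long suggestedTimeoutMillis(long[] latenciesMillis, double bufferFraction) {
        return Math.round(percentile(latenciesMillis, 95) * (1.0 + bufferFraction));
    }
}
```

For example, if the 95th percentile of your samples is 950 ms, a 20% buffer gives a timeout of 1140 ms.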

For failure thresholds, begin with conservative settings like 50% failure rate over a 10-request window. This prevents the circuit breaker from tripping due to occasional network hiccups while still protecting against genuine service degradation. The sliding window approach works better than fixed time windows because it provides more consistent behavior across varying traffic patterns.

Consider implementing different threshold configurations for different types of operations:

- Critical operations: Lower thresholds (3-5 failures in 10 requests)

- Non-critical operations: Higher thresholds (7-8 failures in 10 requests)

- Background processes: More lenient thresholds to avoid unnecessary interruptions

Rolling window sizes should typically range from 10-20 requests for high-traffic services and 5-10 for lower-volume services. Smaller windows react faster to problems but may cause false positives during brief traffic spikes.

Fallback Mechanism Design Patterns

Robust fallback mechanisms keep your service available even when downstream dependencies fail and the circuit opens. The key is choosing the right fallback strategy for your specific use case and business requirements.

Cache-Based Fallbacks work exceptionally well for read operations. Store frequently accessed data in Redis or an in-memory cache, allowing your service to serve stale but valid data when the primary data source becomes unavailable. Set appropriate TTL values that balance data freshness with availability requirements.

Default Response Patterns provide predetermined responses when services fail. For user profiles, return basic information with placeholder values. For recommendation engines, serve popular or trending items instead of personalized suggestions. This approach maintains functionality while clearly indicating reduced service levels.

Service Degradation Strategies involve progressively reducing feature complexity. A shopping cart service might disable real-time inventory checks but continue processing orders, then validate inventory asynchronously. This keeps core business functions operational while non-essential features gracefully degrade.

Alternative Service Routing redirects traffic to backup services or different implementations. Your primary payment processor might route to a secondary provider when the primary service circuit opens. This requires careful coordination and consistent API contracts across alternatives.
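To make the first two strategies concrete, here is a minimal sketch that combines a cache-based fallback with a default response. It remembers the last successful value per key, serves that stale value when the primary call fails, and falls back to a default when nothing is cached. The class `CachedFallback` is hypothetical, and TTL handling is deliberately omitted; in practice a cache such as Redis with expiry would take the place of the in-memory map.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Serves the last known good value, then a default, when the primary call fails (illustrative). */
class CachedFallback<K, V> {
    private final Map<K, V> lastKnownGood = new ConcurrentHashMap<>();
    private final Function<K, V> primary;      // the protected call, e.g. a remote lookup
    private final Function<K, V> defaultValue; // placeholder response when nothing is cached

    CachedFallback(Function<K, V> primary, Function<K, V> defaultValue) {
        this.primary = primary;
        this.defaultValue = defaultValue;
    }

    V get(K key) {
        try {
            V fresh = primary.apply(key);
            if (fresh != null) {
                lastKnownGood.put(key, fresh); // refresh the cache on every success
            }
            return fresh;
        } catch (RuntimeException e) {
            // Primary failed: prefer stale data over a placeholder.
            V stale = lastKnownGood.get(key);
            return stale != null ? stale : defaultValue.apply(key);
        }
    }
}
```

The same shape works as the fallback method of a circuit breaker: the breaker decides when to stop calling the primary, and this class decides what to return instead.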

Health Check Endpoint Integration

Integrating health check endpoints with your circuit breaker creates a more intelligent and responsive resilient microservices architecture. These endpoints should provide detailed information about service dependencies and internal state, not just basic “up/down” status indicators.

Design health checks that examine multiple layers of your service stack:

- Database connectivity: Test actual query execution, not just connection availability

- External API dependencies: Verify both connectivity and response quality

- Resource utilization: Monitor memory, CPU, and disk usage patterns

- Cache availability: Ensure distributed caches respond within acceptable timeframes

Implement graduated health responses rather than binary healthy/unhealthy states. Use HTTP status codes strategically – 200 for healthy, 503 for degraded but functional, and 500 for completely unavailable. This granular approach helps circuit breakers make better decisions about when to open or close.

Your circuit breaker should consume these health signals through:

- Periodic polling: Check health endpoints every 30-60 seconds during normal operation

- Event-driven updates: Subscribe to health state changes through message queues

- Synthetic monitoring: Run automated tests that simulate real user scenarios

Monitoring and Alerting Setup

Comprehensive circuit breaker monitoring provides visibility into your system’s resilience patterns and helps identify potential issues before they cascade. Your monitoring strategy should capture both technical metrics and business impact indicators.

Track these essential circuit breaker state management metrics:

- State transition frequency: How often circuits open, close, or enter half-open states

- Failure rate trends: Track both instantaneous and rolling failure percentages

- Response time distributions: Monitor latency changes as circuit states shift

- Throughput impact: Measure request volume changes during different circuit states

Set up alerting rules that account for your business context. A circuit opening during low-traffic periods might be informational, while the same event during peak hours could indicate a serious problem requiring immediate attention. Create alert severity levels based on:

- Service criticality: Payment services warrant immediate alerts, while analytics services might tolerate delays

- Time of day: Peak business hours require faster response times

- Cascading failure potential: Services with many downstream dependencies need more sensitive monitoring

Dashboard design should emphasize trend analysis over point-in-time snapshots. Circuit breaker behavior often reveals patterns that emerge over hours or days rather than minutes. Include business metrics alongside technical ones – showing both “circuit opened” and “revenue impact” helps teams prioritize response efforts effectively.

Real-World Configuration Examples

Database Connection Circuit Breakers

Database connections represent one of the most critical failure points in microservices architecture. When your database becomes unresponsive or experiences high latency, a well-configured circuit breaker can save your entire system from cascading failures.

Here’s a practical Spring Boot configuration using Resilience4j for database protection:

    resilience4j:
      circuitbreaker:
        instances:
          database-service:
            failure-rate-threshold: 60
            wait-duration-in-open-state: 30s
            sliding-window-size: 10
            minimum-number-of-calls: 5
            permitted-number-of-calls-in-half-open-state: 3
            automatic-transition-from-open-to-half-open-enabled: true

This configuration opens the circuit when at least 60% of the last 10 calls fail, once a minimum of 5 calls has been recorded. The circuit stays open for 30 seconds, then moves to half-open and allows 3 test calls. You can apply it to your database repository methods:

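    // Assumes userRepository.findById returns a User directly; with Spring Data's
    // Optional<User> return type, unwrap the Optional before returning.
    @CircuitBreaker(name = "database-service", fallbackMethod = "fallbackGetUser")
    public User getUserById(Long id) {
        return userRepository.findById(id);
    }

    // Fallback: same parameters as the protected method, plus the exception.
    public User fallbackGetUser(Long id, Exception ex) {
        return User.builder()
                .id(id)
                .name("Unknown User")
                .status("Service Unavailable")
                .build();
    }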

For connection pool scenarios, consider implementing circuit breakers at both the connection acquisition and query execution levels. This dual-layer protection catches both connection exhaustion and slow query problems before they impact your users.

External API Call Protection

Third-party API integrations are notorious for unpredictable behavior. Your circuit breaker needs to handle various failure scenarios, including timeouts, rate limiting, and service degradation.

Netflix Hystrix configuration for API protection:

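    // getPaymentDataFallback must be defined in the same class with a compatible signature.
    @HystrixCommand(
        commandProperties = {
            // Minimum requests in the rolling window before the breaker can trip
            @HystrixProperty(name = "circuitBreaker.requestVolumeThreshold", value = "20"),
            // Error percentage at which the circuit opens
            @HystrixProperty(name = "circuitBreaker.errorThresholdPercentage", value = "50"),
            // Time the circuit stays open before a trial request is allowed
            @HystrixProperty(name = "circuitBreaker.sleepWindowInMilliseconds", value = "60000"),
            // Per-call timeout
            @HystrixProperty(name = "execution.isolation.thread.timeoutInMilliseconds", value = "5000")
        },
        fallbackMethod = "getPaymentDataFallback"
    )
    public PaymentResponse processPayment(PaymentRequest request) {
        return externalPaymentApi.process(request);
    }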

Your resilient microservices architecture should include different circuit breaker configurations for various API types. Payment APIs might need stricter thresholds (30% failure rate) while analytics APIs could tolerate higher failure rates (70%) since they’re not business-critical.

Consider implementing circuit breaker state management with different strategies:

- Fast-fail for critical APIs: Open circuit immediately on authentication failures

- Gradual degradation for optional services: Allow higher failure rates but reduce request frequency

- Intelligent retry for rate-limited APIs: Respect rate limits and adjust circuit timing accordingly

Message Queue Integration Scenarios

Message queues add complexity to circuit breaker configuration because failures can occur at multiple levels: connection failures, broker unavailability, queue full conditions, and consumer processing errors.

RabbitMQ integration with circuit breaker protection:

    @Component
    public class OrderEventPublisher {

        @CircuitBreaker(name = "rabbitmq-publisher")
        @Retryable(maxAttempts = 3, backoff = @Backoff(delay = 1000))
        public void publishOrderEvent(OrderEvent event) {
            try {
                rabbitTemplate.convertAndSend("order.exchange", "order.created", event);
            } catch (AmqpException e) {
                // Circuit breaker will track these failures
                throw new MessagePublishException("Failed to publish order event", e);
            }
        }

        @Recover
        public void recover(MessagePublishException ex, OrderEvent event) {
            // Store event locally for later retry
            eventRepository.save(event.withStatus("PENDING_RETRY"));
            alertingService.sendAlert("Message queue circuit breaker activated");
        }
    }

For Apache Kafka scenarios, your distributed systems resilience strategy should account for partition-specific failures:

    resilience4j:
      circuitbreaker:
        instances:
          kafka-producer:
            failure-rate-threshold: 40
            slow-call-rate-threshold: 80
            slow-call-duration-threshold: 3s
            wait-duration-in-open-state: 60s

Consumer-side circuit breakers need different considerations. When message processing fails consistently, you want to pause consumption rather than fill dead letter queues:

// kafkaConsumer, topicPartition, and scheduleConsumerResume(...) are assumed
// to be defined elsewhere in this listener class.
@KafkaListener(topics = "user-events")
@CircuitBreaker(name = "kafka-consumer", fallbackMethod = "pauseConsumption")
public void processUserEvent(UserEvent event) {
    userService.processEvent(event);
}

// Fallback: invoked when processing fails or the circuit is open
public void pauseConsumption(UserEvent event, Exception ex) {
    // Temporarily pause this consumer
    kafkaConsumer.pause(Collections.singletonList(topicPartition));
    scheduleConsumerResume(30); // Resume after 30 seconds
}

Queue-specific circuit breaker monitoring should track message throughput, processing latency, and dead letter queue growth. This gives you early warning when your message processing pipeline starts degrading, allowing proactive intervention before complete system failure.

Testing and Validation Approaches

Unit Testing Circuit Breaker Logic

Comprehensive unit tests for your circuit breaker implementation require isolating the core state management logic from external dependencies. Start by testing each circuit breaker state individually – closed, open, and half-open – to verify correct behavior under different scenarios.

Mock the underlying service calls to simulate various response times, timeouts, and exceptions. Your test suite should validate that failure thresholds trigger state transitions appropriately. For instance, test that five consecutive failures within a 60-second window move the circuit from the closed to the open state.

Test edge cases like rapid state changes, concurrent requests during state transitions, and recovery scenarios. Verify that success rate calculations work correctly and that the circuit breaker resets its failure count when transitioning back to closed state. Mock time-based operations to test timeout behaviors without waiting for actual timeouts during test execution.

Key areas to focus on include:

- State transition accuracy based on configured thresholds

- Thread safety during concurrent access

- Proper handling of different exception types

- Metrics collection and reporting accuracy

- Configuration parameter validation
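
To make the idea of mocking time concrete, here is a minimal sketch of a breaker with an injected clock, plus a few plain-Java checks that walk it through closed, open, and half-open without sleeping. `SimpleCircuitBreaker` is a hypothetical teaching class, not a library API. It opens after five consecutive failures and, as a simplification, does not enforce the 60-second counting window; the 60 seconds is used here as the open-state wait instead. Plain checks stand in for a test framework such as JUnit to keep the example dependency-free.

```java
import java.util.function.LongSupplier;

/** Minimal, illustrative breaker: opens after N consecutive failures, probes again after a wait. */
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final long openWaitMillis;
    private final LongSupplier clock; // injected so tests can control time
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAtMillis = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openWaitMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openWaitMillis = openWaitMillis;
        this.clock = clock;
    }

    /** Current state; lazily moves OPEN to HALF_OPEN once the wait has elapsed. */
    synchronized State state() {
        if (state == State.OPEN && clock.getAsLong() - openedAtMillis >= openWaitMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    synchronized boolean allowRequest() {
        return state() != State.OPEN;
    }

    /** Call after an allowed request succeeds: resets the count and closes the circuit. */
    synchronized void recordSuccess() {
        consecutiveFailures = 0;
        state = State.CLOSED;
    }

    /** Call after an allowed request fails: a failed probe or reaching the threshold opens the circuit. */
    synchronized void recordFailure() {
        consecutiveFailures++;
        if (state() == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
            state = State.OPEN;
            openedAtMillis = clock.getAsLong();
        }
    }
}

final class SimpleCircuitBreakerChecks {
    static void check(boolean condition, String message) {
        if (!condition) throw new AssertionError(message);
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock, advanced manually
        SimpleCircuitBreaker breaker = new SimpleCircuitBreaker(5, 60_000L, () -> now[0]);

        for (int i = 0; i < 4; i++) breaker.recordFailure();
        check(breaker.state() == SimpleCircuitBreaker.State.CLOSED, "stays closed below threshold");

        breaker.recordFailure(); // fifth consecutive failure
        check(!breaker.allowRequest(), "opens at threshold and rejects calls");

        now[0] = 60_000L; // the wait elapses without sleeping
        check(breaker.state() == SimpleCircuitBreaker.State.HALF_OPEN, "half-open after wait");

        breaker.recordSuccess(); // successful probe
        check(breaker.state() == SimpleCircuitBreaker.State.CLOSED, "closes after successful probe");
        System.out.println("All state transition checks passed");
    }
}
```

Injecting the clock as a `LongSupplier` is the design choice that matters: the test advances time by changing one number, so timeout behavior is verified instantly and deterministically.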

Integration Testing with Failure Simulation

Integration tests for a microservices circuit breaker implementation need to simulate real-world failure scenarios across service boundaries. Set up test environments that mirror your production architecture, including network latencies and service dependencies.

Use tools like WireMock or Testcontainers to create controllable service stubs that can simulate various failure patterns. Program these stubs to return specific HTTP status codes, introduce delays, or completely drop connections based on your test scenarios.

Create test cases that validate end-to-end behavior when downstream services become unavailable. Verify that your circuit breaker correctly falls back to cached responses or alternative service paths when the primary service fails. Test scenarios where services recover at different rates to ensure your half-open state logic works correctly.

Design tests that span multiple service hops to validate how circuit breaker states propagate through your service mesh. Check that bulkhead patterns work alongside circuit breakers to prevent cascade failures.

Essential integration test scenarios include:

- Gradual service degradation patterns

- Complete service outages and recovery

- Intermittent connectivity issues

- Dependency chain failures

- Cross-service timeout configurations
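
The fallback-to-cache scenario can be sketched without any network at all. The example below is an in-process stand-in for the kind of programmable stub you would normally build with a tool like WireMock: `ScriptedStub` plays back a scripted sequence of successes and failures, and `CachingClient` is a hypothetical client that serves its last good response when the downstream call fails. All names and the `stock=...` payloads are illustrative assumptions.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Supplier;

/** In-process test double: plays back a scripted sequence of successes and failures. */
final class ScriptedStub implements Supplier<String> {
    private final Deque<Supplier<String>> script = new ArrayDeque<>();
    private int calls = 0;

    ScriptedStub respond(String body) {
        script.add(() -> body);
        return this;
    }

    ScriptedStub fail() {
        script.add(() -> { throw new IllegalStateException("simulated downstream failure"); });
        return this;
    }

    int calls() {
        return calls;
    }

    @Override
    public String get() {
        calls++;
        Supplier<String> step = script.poll();
        if (step == null) {
            throw new IllegalStateException("script exhausted");
        }
        return step.get();
    }
}

/** Hypothetical client under test: serves the last good response when the downstream call fails. */
final class CachingClient {
    private final Supplier<String> downstream;
    private String lastGood; // null until the first success

    CachingClient(Supplier<String> downstream) {
        this.downstream = downstream;
    }

    String fetch() {
        try {
            lastGood = downstream.get();
            return lastGood;
        } catch (RuntimeException e) {
            if (lastGood == null) {
                throw e; // nothing cached to fall back to
            }
            return lastGood;
        }
    }

    public static void main(String[] args) {
        // Scenario: one success, an outage of two calls, then recovery.
        ScriptedStub stub = new ScriptedStub().respond("stock=7").fail().fail().respond("stock=3");
        CachingClient client = new CachingClient(stub);
        for (int i = 0; i < 4; i++) {
            System.out.println("call " + (i + 1) + " -> " + client.fetch());
        }
    }
}
```

The same scripted-outcome idea carries over to real HTTP stubs: each test declares the failure pattern up front, then asserts on what the caller observed.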

Load Testing Under Various Failure Conditions

Load testing your circuit breakers requires injecting failures during high-traffic scenarios. Use tools like JMeter, k6, or Gatling to generate realistic traffic patterns while simultaneously introducing controlled failures.

Design load tests that gradually increase failure rates in downstream services while maintaining consistent request volumes. This approach reveals how your circuit breaker configuration performs under stress and helps identify optimal threshold settings for your specific traffic patterns.

Implement chaos engineering principles by randomly failing services, introducing network partitions, or simulating resource exhaustion during load tests. Monitor how quickly circuit breakers detect and respond to these failures while tracking overall system throughput and response times.

Test different failure scenarios simultaneously – some services experiencing high latency while others return errors. This multi-dimensional testing validates that your monitoring and resilience strategies hold up under complex failure conditions.

Critical load testing scenarios to validate:

- Peak traffic with 10-50% service failure rates

- Burst traffic patterns during service degradation

- Recovery behavior under sustained load

- Resource exhaustion and memory leak detection

- Performance impact of circuit breaker overhead

Monitor key metrics during load testing including request success rates, average response times, circuit breaker state transitions per second, and system resource utilization to ensure your implementation scales effectively.
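
The "ramp the failure rate at constant volume" idea can be prototyped offline before you script it in a load testing tool. The sketch below is an assumption-laden model, not a load test: `SlidingWindow` is a hypothetical count-based window over recent call outcomes, `FailureRamp.shouldFail` is a deterministic injector that spreads failures evenly so runs are repeatable, and the 100-call window and 40% threshold are example values.

```java
/** Count-based sliding window over the most recent call outcomes. */
final class SlidingWindow {
    private final boolean[] failed;
    private int next = 0;
    private int size = 0;
    private int failures = 0;

    SlidingWindow(int capacity) {
        failed = new boolean[capacity];
    }

    void record(boolean callFailed) {
        if (size == failed.length) {
            if (failed[next]) failures--; // evict the oldest outcome
        } else {
            size++;
        }
        failed[next] = callFailed;
        if (callFailed) failures++;
        next = (next + 1) % failed.length;
    }

    boolean isFull() {
        return size == failed.length;
    }

    double failureRatePercent() {
        return size == 0 ? 0.0 : 100.0 * failures / size;
    }
}

final class FailureRamp {
    /** Deterministic injector: spreads exactly ratePercent failures over every 100 calls. */
    static boolean shouldFail(int callIndex, int ratePercent) {
        return (callIndex * ratePercent) % 100 < ratePercent;
    }

    /** Returns the injected failure rate at which the window first reaches the threshold, or -1. */
    static int firstTrippingRate(int[] ratesPercent, int callsPerStep,
                                 int windowSize, double thresholdPercent) {
        SlidingWindow window = new SlidingWindow(windowSize);
        for (int rate : ratesPercent) {
            for (int i = 0; i < callsPerStep; i++) {
                window.record(shouldFail(i, rate));
                if (window.isFull() && window.failureRatePercent() >= thresholdPercent) {
                    return rate;
                }
            }
        }
        return -1; // threshold never reached during the ramp
    }

    public static void main(String[] args) {
        int[] ramp = {10, 20, 30, 40, 50}; // injected failure rates, in percent
        int tripped = firstTrippingRate(ramp, 100, 100, 40.0);
        System.out.println("Threshold reached at injected failure rate: " + tripped + "%");
    }
}
```

In a real load test the injector would live in the downstream stub or a fault-injection proxy, and the window would be whatever your circuit breaker library maintains internally.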

Monitoring and Observability Setup

Key metrics collection and tracking

Effective circuit breaker monitoring starts with collecting the right metrics that provide visibility into your system’s health and behavior. The most critical metrics include failure rates, response times, circuit breaker state changes, and throughput measurements across your microservices ecosystem.

Track the percentage of successful and failed requests over time windows to identify patterns and potential cascading failures. Monitor request latency at different percentiles (50th, 95th, 99th) to understand performance degradation before circuits trip. Count state transitions between closed, open, and half-open states to measure how frequently your circuit breakers activate and recover.

Volume metrics help determine if your thresholds are appropriately configured. Low request volumes might lead to premature circuit opening because a handful of failures can dominate the failure rate, while high volumes could mask individual service degradation. Capture concurrent request counts to understand load distribution and identify bottlenecks in your microservices circuit breaker implementation.

Time-based metrics like mean time to recovery (MTTR) and mean time between failures (MTBF) provide insights into system stability. These measurements help teams optimize timeout configurations and failure detection strategies within their resilient microservices architecture.

Custom business metrics specific to your application domain add context to technical measurements. For example, tracking order completion rates alongside circuit breaker states reveals the real business impact of service failures.
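
Two of the metrics above, latency percentiles and state transition counts, are simple enough to sketch directly. The `BreakerMetrics` class below is a hypothetical in-memory illustration using the nearest-rank percentile definition; a production system would normally rely on a metrics library and its histogram support rather than sorting raw samples.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory metrics holder for a single circuit breaker. */
final class BreakerMetrics {
    private final Map<String, Integer> transitions = new HashMap<>();

    /** Counts a state change such as CLOSED -> OPEN. */
    void recordTransition(String from, String to) {
        transitions.merge(from + "->" + to, 1, Integer::sum);
    }

    int transitionCount(String from, String to) {
        return transitions.getOrDefault(from + "->" + to, 0);
    }

    /** Nearest-rank percentile: the smallest sample with at least p percent of samples at or below it. */
    static long percentile(long[] latenciesMillis, int p) {
        if (latenciesMillis.length == 0 || p < 1 || p > 100) {
            throw new IllegalArgumentException("need at least one sample and 1 <= p <= 100");
        }
        long[] sorted = latenciesMillis.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (p * sorted.length + 99) / 100; // integer form of ceil(p * n / 100)
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        long[] latencies = {12, 15, 11, 240, 14, 13, 18, 16, 900, 17};
        System.out.println("p50 = " + percentile(latencies, 50) + " ms");
        System.out.println("p95 = " + percentile(latencies, 95) + " ms");

        BreakerMetrics metrics = new BreakerMetrics();
        metrics.recordTransition("CLOSED", "OPEN");
        metrics.recordTransition("OPEN", "HALF_OPEN");
        metrics.recordTransition("HALF_OPEN", "CLOSED");
        System.out.println("CLOSED->OPEN count = " + metrics.transitionCount("CLOSED", "OPEN"));
    }
}
```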

Dashboard creation for operational visibility

Building effective dashboards requires organizing metrics into logical groupings that support different operational roles and use cases. Create service-level dashboards that show individual circuit breaker states, error rates, and performance trends for each microservice in your system.

System-wide overview dashboards provide executives and architects with high-level health indicators across all services. These views should highlight critical paths, dependency relationships, and overall system resilience metrics. Color-coding and visual indicators help teams quickly identify services experiencing issues or approaching failure thresholds.

Real-time charts showing request volumes, error rates, and circuit breaker state changes over the last hour help operations teams respond quickly to incidents. Historical views spanning days or weeks reveal patterns and help with capacity planning and threshold tuning.

Heat maps and dependency graphs visualize service interactions and failure propagation paths. When one service’s circuit breaker opens, teams can quickly identify downstream impacts and prioritize recovery efforts. These visualizations become especially valuable during major incidents when multiple services might be affected.

Interactive filtering capabilities allow users to drill down from system-wide views to specific service instances or time periods. This flexibility supports both high-level monitoring and detailed troubleshooting workflows.

Alert configuration for proactive response

Smart alerting strategies prevent alert fatigue while ensuring critical issues receive immediate attention. Configure multi-level alerts that escalate based on severity and duration of circuit breaker activations.

Set up immediate notifications when circuit breakers transition to the open state, especially for critical services that impact user-facing features. Include contextual information like error rates, recent deployments, and affected user segments to help responders understand the scope and potential causes.

Threshold-based alerts trigger when failure rates exceed acceptable levels but before circuit breakers open. These early warning signals give teams opportunities to investigate and resolve issues before service degradation affects end users. Configure different thresholds for different services based on their criticality and normal operating patterns.

Anomaly detection alerts identify unusual patterns that might not trigger threshold-based rules. Machine learning algorithms can detect subtle changes in traffic patterns, response times, or error distributions that indicate emerging problems in your distributed system.

Recovery alerts notify teams when circuit breakers successfully transition back to closed states after periods of instability. These positive signals help teams understand resolution timelines and validate that fixes are working properly.

Group related alerts to prevent notification storms during major incidents. When multiple services fail simultaneously, intelligent alert grouping helps teams focus on root causes rather than getting overwhelmed by symptoms.
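
The alert levels described in this section can be summarized as a small decision function. The sketch below is one possible mapping, under the assumption that states are reported as the strings `CLOSED`, `OPEN`, and `HALF_OPEN`; the `BreakerAlerts` class, its `Level` names, and the warning threshold parameter are hypothetical. It alerts on transitions rather than on every evaluation, which is one simple way to limit repeated notifications.

```java
/** Hypothetical mapping from circuit breaker observations to alert levels. */
final class BreakerAlerts {
    enum Level { NONE, WARNING, CRITICAL, RECOVERED }

    static Level evaluate(String previousState, String currentState,
                          double failureRatePercent, double warnThresholdPercent) {
        boolean wasOpen = "OPEN".equals(previousState);
        boolean wasClosed = "CLOSED".equals(previousState);

        if ("OPEN".equals(currentState)) {
            // Notify once on the transition into OPEN, not on every check while it stays open.
            return wasOpen ? Level.NONE : Level.CRITICAL;
        }
        if ("CLOSED".equals(currentState)) {
            if (!wasClosed) {
                return Level.RECOVERED; // back to closed after a period of instability
            }
            if (failureRatePercent >= warnThresholdPercent) {
                return Level.WARNING; // early warning before the breaker opens
            }
        }
        return Level.NONE;
    }

    public static void main(String[] args) {
        double warnAt = 25.0; // example early-warning threshold, below the breaker's own threshold
        System.out.println(evaluate("CLOSED", "CLOSED", 30.0, warnAt));
        System.out.println(evaluate("CLOSED", "OPEN", 55.0, warnAt));
        System.out.println(evaluate("HALF_OPEN", "CLOSED", 0.0, warnAt));
    }
}
```

Setting the warning threshold below the breaker’s own failure-rate threshold is what turns it into an early signal; per-service values would follow each service’s criticality.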

Circuit breakers serve as your microservices’ safety net, preventing cascading failures and keeping your system stable when things go wrong. By implementing this pattern correctly, you’ll see faster response times, better user experiences, and way less stress during those inevitable service outages. The three states – closed, open, and half-open – work together to automatically detect problems and give failing services time to recover without bringing down your entire application.

Getting started doesn’t have to be complicated. Pick a library that fits your tech stack, set reasonable thresholds based on your actual traffic patterns, and don’t forget to monitor everything. Your circuit breakers are only as good as your ability to see what they’re doing and adjust when needed. Start small with one critical service, learn from the experience, and gradually roll it out across your system. Your future self will thank you when that next service hiccup happens and your users barely notice.