Building an ETL pipeline on AWS that handles growing data volumes without runaway costs or constant firefighting requires the right combination of services and sound architectural decisions. This guide is for data engineers, cloud architects, and developers who need robust, scalable data pipelines on AWS that can process everything from daily transaction logs to streaming sensor data.

Amazon S3 for data lake storage, AWS Glue for ETL jobs, and Amazon Athena for analytics form the backbone of many serverless ETL architectures on AWS. Together they ingest, transform, and analyze data without the overhead of managing servers.

We’ll walk through the ETL architecture patterns that keep data processing cost-effective and reliable. You’ll learn how to automate data transformation with AWS Glue crawlers and jobs and how to design systems that scale as your data grows. We’ll also cover monitoring practices that help you catch issues before they affect the business and keep your production systems running smoothly.

Understanding ETL Pipeline Architecture on AWS

Core Components of Modern ETL Systems

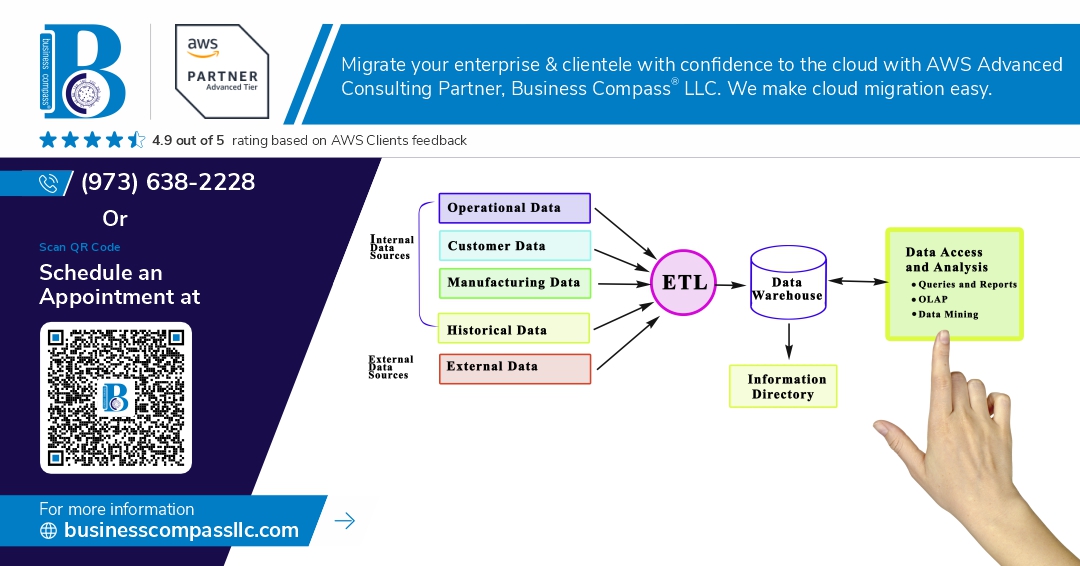

A modern ETL pipeline on AWS consists of three layers. The ingestion layer captures raw data from sources such as databases, APIs, and streaming services. The processing layer transforms this data with serverless services, applying business rules and data quality checks. The storage layer organizes processed data in formats optimized for analytics. Together, these layers form a foundation that can absorb growing data volumes without compromising performance.

Benefits of Cloud-Based Data Processing

Cloud-based ETL offers more flexibility and, for variable workloads, better cost efficiency than traditional on-premises solutions. You pay for the compute used while jobs run rather than for idle infrastructure. Auto scaling adjusts resources to workload demand, which helps keep performance consistent during peak processing times. Managed services reduce operational overhead, letting your team focus on data insights rather than infrastructure maintenance. Built-in security features and compliance certifications help protect sensitive data, although configuring them correctly remains your responsibility.

AWS Service Integration Advantages

Amazon S3, AWS Glue, and Amazon Athena integrate natively, which simplifies complex data workflows. In most cases you need no custom connectors and no data movement between platforms. A Glue crawler discovers and catalogs data schemas, making datasets queryable through Athena once the crawl completes. This lets you deploy analytics quickly while applying consistent security policies across all components. AWS billing and CloudWatch monitoring give you visibility into pipeline costs and performance metrics.

Setting Up Your Data Foundation with Amazon S3

Optimizing S3 Bucket Structure for ETL Workloads

Design your S3 data lake with a clear hierarchy that separates raw, processed, and curated data. Create dedicated prefixes for processing stages, such as raw/, staging/, and gold/, and for each data source beneath them. This structure supports parallel processing and reduces unnecessary data movement. Use consistent naming conventions with timestamps and data source identifiers to make the pipeline easier to maintain.

Implementing Data Partitioning Strategies

Partition your data based on query patterns and processing requirements to maximize performance in your ETL pipeline architecture. Date-based partitioning (year/month/day) works well for time-series data, while source-based partitioning helps when processing multiple data streams. Store data in columnar formats like Parquet or ORC to reduce storage costs and improve query performance. Proper partitioning reduces the amount of data scanned during transformations and analytics queries.
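As a minimal sketch of the layout described above, the helper below builds an object key from a layer, a source, and a date. The function name and the example source are hypothetical. The `year=`/`month=`/`day=` naming (Hive-style partitions) is an assumption added here; it is a common choice because Glue crawlers and Athena can map such prefixes to partition columns.

```python
from datetime import date

# Layers taken from the prefix scheme discussed above.
LAYERS = {"raw", "staging", "gold"}


def partitioned_key(layer: str, source: str, day: date, filename: str) -> str:
    """Build an S3 object key with Hive-style date partitions."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer}")
    return (
        f"{layer}/{source}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        f"{filename}"
    )


print(partitioned_key("raw", "orders", date(2024, 3, 7), "part-0000.parquet"))
# raw/orders/year=2024/month=03/day=07/part-0000.parquet
```

Zero-padding the month and day keeps keys in chronological order when listed lexically.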

Configuring Security and Access Controls

Set up IAM roles and policies that follow the principle of least privilege for your Glue jobs and analytics users. Enable S3 bucket versioning and MFA delete for critical data assets. Use bucket policies to restrict access to specific IP ranges or VPC endpoints. Use server-side encryption with KMS keys to protect sensitive data at rest. Configure CloudTrail to record API activity across the pipeline; object-level S3 access is recorded only if you enable data events for the relevant buckets.
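To make least privilege concrete, here is a sketch of an IAM policy document that lets a job read one prefix and write another. The function name, bucket, and prefixes are hypothetical, and a real Glue job role usually needs more than this (for example Glue, CloudWatch Logs, and KMS permissions, and possibly `s3:DeleteObject` for temporary files), so treat it as a starting point only.

```python
import json


def glue_job_s3_policy(bucket: str, read_prefix: str, write_prefix: str) -> dict:
    """Return an IAM policy document scoped to one read and one write prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadInput",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{read_prefix}*",
            },
            {
                "Sid": "WriteOutput",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{write_prefix}*",
            },
            {
                # Listing is a bucket-level action, so it is narrowed
                # with a condition on the requested prefix.
                "Sid": "ListScopedPrefixes",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {
                    "StringLike": {
                        "s3:prefix": [f"{read_prefix}*", f"{write_prefix}*"]
                    }
                },
            },
        ],
    }


policy = glue_job_s3_policy("example-data-lake", "raw/orders/", "staging/orders/")
print(json.dumps(policy, indent=2))
```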

Cost-Effective Storage Class Selection

Choose S3 storage classes based on data access patterns and retention requirements. Use S3 Standard for frequently accessed raw data and current processing datasets. Move historical data to S3 Standard-IA after 30 days and archive older datasets to S3 Glacier or S3 Glacier Deep Archive. Set up lifecycle policies to transition data between storage classes automatically. Monitor access patterns with S3 Storage Class Analysis and adjust your storage strategy to reduce costs while keeping active data fast to read.
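The tiering described above can be expressed as a lifecycle configuration. The sketch below builds the dictionary shape that boto3's `put_bucket_lifecycle_configuration` accepts as `LifecycleConfiguration`. The 30-day Standard-IA transition comes from the text; the 90-day and 365-day thresholds and the function name are assumptions chosen for illustration.

```python
def lifecycle_rules(prefix: str, ia_days: int = 30,
                    glacier_days: int = 90,
                    deep_archive_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration for one prefix."""
    if not (0 < ia_days < glacier_days < deep_archive_days):
        raise ValueError("transition days must be positive and increasing")
    rule_id = "tier-" + prefix.strip("/").replace("/", "-")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


config = lifecycle_rules("raw/orders/")
print(config["Rules"][0]["ID"])  # tier-raw-orders
```

Archive tiers have retrieval delays and minimum storage durations, so it is worth checking how often older partitions are actually queried before applying a rule like this to data Athena still reads.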

Building Automated Data Transformation with AWS Glue

Creating and Managing Glue Data Catalogs

Populating the Glue Data Catalog starts with configuring crawlers for your data sources and defining crawl schedules. Crawlers detect schema changes and keep catalog metadata consistent with the underlying data. Configure separate crawlers for different data sources, set exclusion patterns for temporary or irrelevant files, and use the catalog's table versions to track evolving datasets. Regular catalog maintenance includes removing obsolete tables and reviewing partition structures for better query performance.
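A crawler definition along these lines might look like the sketch below, which builds the keyword arguments for boto3's Glue `create_crawler` call. The crawler name, role ARN, database, path, exclusion pattern, and nightly schedule are all hypothetical placeholders.

```python
def crawler_request(name: str, role_arn: str, database: str, s3_path: str,
                    exclusions: tuple = (),
                    schedule: str = "cron(0 2 * * ? *)") -> dict:
    """Build keyword arguments for glue.create_crawler(**request)."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {
            "S3Targets": [{"Path": s3_path, "Exclusions": list(exclusions)}]
        },
        # Assumed schedule: every day at 02:00 UTC.
        "Schedule": schedule,
        "SchemaChangePolicy": {
            # Apply detected schema changes to the catalog table...
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            # ...but only log when source objects disappear.
            "DeleteBehavior": "LOG",
        },
    }


request = crawler_request(
    name="orders-raw-crawler",
    role_arn="arn:aws:iam::123456789012:role/example-glue-crawler",
    database="raw_db",
    s3_path="s3://example-data-lake/raw/orders/",
    exclusions=("**/_temporary/**",),
)
print(request["Targets"])
```

Logging deletions instead of applying them is a conservative choice: a temporarily missing prefix then does not remove a table that downstream queries depend on.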

Developing Scalable ETL Jobs with Glue Studio

Glue Studio provides a visual interface for building Glue ETL jobs without extensive coding. Start with built-in transforms like joins, filters, and aggregations, then customize with Python or Scala scripts when needed. Design jobs with explicit error handling, checkpoint intermediate results for large datasets so failed runs do not start from scratch, and enable auto scaling to handle varying workloads. Use job bookmarks to process only new or changed data, reducing processing time and cost.
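Bookmarks and scaling are configured on the job definition rather than in the visual editor alone. The sketch below assembles keyword arguments for boto3's Glue `create_job` call; the job name, role, script location, Glue version, worker count, retry count, and timeout are assumptions to adapt.

```python
def glue_job_request(name: str, role_arn: str, script_s3_uri: str,
                     max_workers: int = 10) -> dict:
    """Build keyword arguments for glue.create_job(**request)."""
    if max_workers < 2:
        raise ValueError("a Spark job needs at least 2 workers")
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",  # Spark ETL job
            "ScriptLocation": script_s3_uri,
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",
        "WorkerType": "G.1X",
        # With auto scaling enabled this acts as the upper bound.
        "NumberOfWorkers": max_workers,
        "MaxRetries": 1,
        "Timeout": 120,  # minutes
        "DefaultArguments": {
            "--job-bookmark-option": "job-bookmark-enable",
            "--enable-auto-scaling": "true",
            "--enable-metrics": "true",
        },
    }


job = glue_job_request(
    "orders-raw-to-staging",
    "arn:aws:iam::123456789012:role/example-glue-job",
    "s3://example-data-lake/scripts/orders_raw_to_staging.py",
)
print(job["DefaultArguments"]["--job-bookmark-option"])  # job-bookmark-enable
```

Enabling the bookmark argument is only half of the setup: the job script also has to pass a `transformation_ctx` to its sources and call `job.commit()` at the end so that Glue records what was processed.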

Implementing Data Quality Checks and Validation

AWS Glue Data Quality rules evaluate completeness, accuracy, and consistency before downstream processing. Define rules with the Evaluate Data Quality transform in Glue Studio to check for null values, format compliance, and statistical outliers. Use conditional branching to route failed records to a separate error-handling path. Set up automated alerts when quality thresholds are breached so that problems surface before bad data reaches consumers.
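The routing idea is independent of any particular tool. The sketch below uses plain Python, not the Glue Data Quality API, to show records being split into a clean path and an error path with the failed rule names attached. The rule names and the `order_id` and `amount` fields are hypothetical.

```python
from typing import Callable, Iterable

Record = dict
Rule = tuple[str, Callable[[Record], bool]]

# Hypothetical rules: each pairs a label with a predicate on one record.
RULES: list[Rule] = [
    ("order_id present", lambda r: r.get("order_id") not in (None, "")),
    (
        "amount is a non-negative number",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]


def split_by_quality(records: Iterable[Record],
                     rules: list[Rule] = RULES) -> tuple[list, list]:
    """Return (passed, rejected); rejected rows carry the failed rule names."""
    passed, rejected = [], []
    for record in records:
        failures = [name for name, check in rules if not check(record)]
        if failures:
            rejected.append({**record, "_failed_rules": failures})
        else:
            passed.append(record)
    return passed, rejected


rows = [
    {"order_id": "A1", "amount": 10.0},
    {"order_id": "", "amount": 5},
    {"order_id": "A3", "amount": -2},
]
good, bad = split_by_quality(rows)
print(len(good), len(bad))  # 1 2
```

Keeping the failed rule names on each rejected row makes the error path useful for diagnosis rather than just a discard pile.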

Monitoring Job Performance and Optimization

Track ETL job metrics through CloudWatch dashboards, monitoring execution time, memory usage, and DPU consumption. Analyze job logs to identify bottlenecks and adjust resource allocation accordingly. Enable auto scaling for variable workloads. Glue does not let you choose Spot Instances, but the Flex execution class offers lower-cost capacity for jobs that can tolerate delayed starts. Set up CloudWatch alarms for job failures and performance degradation. Regular performance tuning includes adjusting worker types, partition sizes, and connection settings for external data sources.

Handling Schema Evolution and Data Drift

Schema evolution requires proactive monitoring of incoming data structures and a plan for adapting to change. Configure crawlers to detect schema changes and update table definitions automatically. Check backward compatibility when modifying existing transformations. Use a schema registry, such as the AWS Glue Schema Registry for streaming sources, to version data structures and migrate consumers gradually. Set up alerts for unexpected schema changes that might break downstream Athena queries or reporting systems.

Enabling Fast Analytics with Amazon Athena

Configuring Athena for Optimal Query Performance

Amazon Athena runs serverless SQL queries directly against the data your pipeline writes to S3. Configure query result locations in dedicated S3 buckets to avoid data access issues. Set up workgroups to control query execution, manage costs, and enforce data-scan limits across teams. Enable query result reuse to speed up repeated queries and avoid rescanning data. Register tables through the AWS Glue Data Catalog so that Athena can discover them. Use partition projection for time-series data so that Athena computes partition locations from table properties instead of looking them up in the catalog, which can noticeably shorten query planning on heavily partitioned tables.
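Partition projection is configured through table properties. As an illustration, the helper below renders a `CREATE EXTERNAL TABLE` statement for a table partitioned by a single `dt` date column. The database, table, columns, location, and start date are hypothetical; the `projection.*` and `storage.location.template` property names are the ones Athena documents for this feature.

```python
def projected_table_ddl(database: str, table: str, location: str,
                        start_date: str = "2023-01-01") -> str:
    """Render Athena DDL for a table that uses date partition projection."""
    location = location.rstrip("/")
    return f"""CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (
  event_id string,
  payload string
)
PARTITIONED BY (dt string)
STORED AS PARQUET
LOCATION '{location}/'
TBLPROPERTIES (
  'projection.enabled' = 'true',
  'projection.dt.type' = 'date',
  'projection.dt.format' = 'yyyy-MM-dd',
  'projection.dt.range' = '{start_date},NOW',
  'storage.location.template' = '{location}/dt=${{dt}}/'
)"""


print(projected_table_ddl("analytics", "events",
                          "s3://example-data-lake/gold/events/"))
```

With projection enabled, new daily prefixes become queryable without running a crawler or `MSCK REPAIR TABLE`, provided the S3 layout matches the template exactly.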

Implementing Efficient Data Formats and Compression

Choose columnar formats like Parquet or ORC for your transformation outputs to get the most out of Athena. Because queries read only the columns they reference, these formats can substantially reduce the data scanned compared with row-based formats like CSV; the exact saving depends on the schema and the query. Apply GZIP or Snappy compression to balance compression ratio against query speed. Partition your data by frequently filtered columns such as date or region to enable partition pruning. Size your files between 128 MB and 1 GB for efficient parallel processing. Use Glue ETL jobs to convert nested JSON into flattened Parquet tables, which improves query performance and reduces cost.

Creating Views and Managing Query Results

Build views in Athena to simplify complex joins and calculations for business users. Athena views are logical and are re-evaluated on every query, so for frequently accessed aggregations, pre-compute the results into a separate table with CREATE TABLE AS SELECT (CTAS) and refresh it on a schedule. Set up retention policies, for example S3 lifecycle rules on the results bucket, to manage storage costs from query outputs. Use named queries to standardize common analytical patterns across your organization. Encrypt query results and control access through IAM policies and bucket permissions. Use CloudWatch metrics to monitor query performance, data scanned, and execution times, and tune your queries accordingly.
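One way to pre-compute an expensive aggregation in Athena is a CTAS statement that writes partitioned Parquet. The sketch below renders such a statement; the table names, the `region`, `amount`, and `dt` columns, and the output location are hypothetical.

```python
def daily_rollup_ctas(database: str, source_table: str,
                      target_table: str, output_location: str) -> str:
    """Render a CTAS statement that stores a daily rollup as Parquet."""
    return f"""CREATE TABLE {database}.{target_table}
WITH (
  format = 'PARQUET',
  write_compression = 'SNAPPY',
  external_location = '{output_location}',
  partitioned_by = ARRAY['dt']
) AS
SELECT region,
       count(*) AS order_count,
       sum(amount) AS total_amount,
       dt
FROM {database}.{source_table}
GROUP BY region, dt"""


print(daily_rollup_ctas("analytics", "orders", "orders_daily_rollup",
                        "s3://example-data-lake/gold/orders_daily_rollup/"))
```

The partition column is listed last in the SELECT because CTAS expects partition columns at the end of the column list. The target location must be empty when the statement runs, so a scheduled refresh typically writes to a fresh prefix or drops the old table and its data first.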

Designing for Scale and Performance

Implementing Parallel Processing Strategies

AWS Glue Spark jobs process data in parallel by distributing partitions across multiple workers. Choose a worker type such as G.1X or G.2X and enable auto scaling to handle varying workloads. Use job bookmarks to track processed data and prevent reprocessing. Set job concurrency limits to avoid resource conflicts while keeping throughput high across the pipeline.

Managing Resource Allocation and Auto-Scaling

Glue auto scaling adds and removes workers based on each job's workload, so instead of writing scaling policies you set the maximum number of workers. Choose that ceiling based on your data volumes and SLA requirements. Monitor CloudWatch metrics to fine-tune it. Glue does not expose Spot Instances; for non-critical batch processing, use the Flex execution class to reduce costs in exchange for less predictable start times.

Optimizing Data Pipeline Throughput

Partition your data in Amazon S3 using year/month/day hierarchies to enable parallel reads. Store files in compressed columnar formats like Parquet or ORC for better I/O performance. Size your files between 128 MB and 1 GB. Set Glue job parameters such as --enable-metrics to collect job profiling metrics, and tune connection settings for external data sources to keep transformations efficient.
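The file-size guidance translates into a simple calculation: divide the expected output size by a target file size to get the number of output files. The 256 MiB default below is an assumption within the recommended range, and the function name is hypothetical.

```python
import math

MIB = 1024 * 1024


def target_file_count(total_bytes: int,
                      target_file_bytes: int = 256 * MIB) -> int:
    """Number of output files needed to land near the target file size."""
    if total_bytes < 0 or target_file_bytes <= 0:
        raise ValueError("sizes must be non-negative and target must be positive")
    return max(1, math.ceil(total_bytes / target_file_bytes))


# 50 GiB of output at 256 MiB per file
print(target_file_count(50 * 1024 * MIB))  # 200
```

In a Spark-based Glue job, a number like this could be passed to `DataFrame.repartition(n)` before writing. Compressed output size is hard to predict from input size, so measuring a sample run first gives a better estimate.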

Handling Large Volume Data Processing

Design incremental processing patterns to handle massive datasets efficiently. Align data lake partitioning with your query patterns. Use Glue job bookmarks to pick up only new data on each run; note that bookmarks track what a job has already read and are not a full change data capture mechanism for updates and deletes. Configure appropriate timeout values and retry policies for long-running jobs. Athena stores no data itself, so large-scale analytics depends on columnar formats, partition pruning, and query result reuse to limit the data each query scans.
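A watermark is one simple way to implement incremental processing outside of job bookmarks. The sketch below lists the daily partitions that still need processing, with an optional lookback window to re-read recent days for late-arriving data. The function and parameter names are hypothetical, and where the watermark is stored (for example a small metadata table) is left open.

```python
from datetime import date, timedelta


def pending_partitions(last_processed: date, through: date,
                       lookback_days: int = 0) -> list[str]:
    """List dt partition values after the watermark, up to `through` inclusive.

    lookback_days re-includes that many already-processed days.
    """
    if lookback_days < 0:
        raise ValueError("lookback_days must be non-negative")
    start = last_processed + timedelta(days=1 - lookback_days)
    day_count = (through - start).days + 1
    return [
        (start + timedelta(days=offset)).isoformat()
        for offset in range(max(0, day_count))
    ]


print(pending_partitions(date(2024, 3, 5), date(2024, 3, 7)))
# ['2024-03-06', '2024-03-07']
```

Reprocessing with a lookback only stays correct if the job overwrites each partition rather than appending to it.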

Monitoring and Maintaining Production Pipelines

Setting Up Comprehensive Pipeline Monitoring

CloudWatch metrics serve as your first line of defense for monitoring pipeline health. Track key performance indicators like Glue job duration, S3 bucket size changes, and Athena query execution times. Set up custom dashboards that visualize data freshness, error rates, and throughput. Configure SNS notifications for critical failures and performance degradation. AWS X-Ray can trace the Lambda functions and Step Functions workflows that orchestrate the pipeline; for the Spark transformations inside a Glue job, rely on job metrics, logs, and the Spark UI to find bottlenecks.
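Job failures can also be caught through EventBridge, which receives an event whenever a Glue job run changes state. The sketch below builds an event pattern for unsuccessful runs. The helper name and job names are hypothetical; the `source`, `detail-type`, and `state` values follow the Glue job state change event format.

```python
import json
from typing import Optional, Sequence

FAILURE_STATES = ["FAILED", "TIMEOUT", "STOPPED"]


def glue_failure_event_pattern(job_names: Optional[Sequence[str]] = None) -> dict:
    """Build an EventBridge pattern matching unsuccessful Glue job runs."""
    detail: dict = {"state": list(FAILURE_STATES)}
    if job_names:
        # Restrict the rule to specific jobs; omit to match all jobs.
        detail["jobName"] = list(job_names)
    return {
        "source": ["aws.glue"],
        "detail-type": ["Glue Job State Change"],
        "detail": detail,
    }


pattern = glue_failure_event_pattern(["orders-raw-to-staging"])
print(json.dumps(pattern, indent=2))
```

The serialized pattern would be passed as `EventPattern` to boto3's EventBridge `put_rule`, with an SNS topic attached as the rule's target through `put_targets`.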

Implementing Automated Error Handling and Recovery

Build resilience into your pipeline through retries with backoff and circuit breakers. Configure a retry count on Glue jobs for transient failures, and send events that keep failing to a dead-letter queue in the services that support one, such as Lambda and SQS. Use Step Functions to orchestrate complex ETL workflows with built-in error handling and conditional branching. Set up Lambda functions that automatically recover from common issues like temporary S3 access problems or network timeouts. Create rollback procedures that can restore previous data states when transformation errors corrupt your data lake.
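As an illustration of orchestration with error handling, the sketch below builds a small Step Functions definition in Amazon States Language: run a Glue job, retry on task failure with backoff, and publish to SNS if it still fails. The state names, retry settings, job name, and topic ARN are assumptions.

```python
import json


def etl_state_machine(job_name: str, failure_topic_arn: str) -> dict:
    """Build a state machine definition that runs one Glue job with retries."""
    return {
        "Comment": "Run a Glue job; notify on failure (illustrative sketch)",
        "StartAt": "RunGlueJob",
        "States": {
            "RunGlueJob": {
                "Type": "Task",
                # .sync makes the state wait until the job run finishes.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": job_name},
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 60,
                        "MaxAttempts": 2,
                        "BackoffRate": 2.0,
                    }
                ],
                "Catch": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "ResultPath": "$.error",
                        "Next": "NotifyFailure",
                    }
                ],
                "End": True,
            },
            "NotifyFailure": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sns:publish",
                "Parameters": {
                    "TopicArn": failure_topic_arn,
                    "Message.$": "States.JsonToString($.error)",
                },
                "Next": "MarkFailed",
            },
            "MarkFailed": {"Type": "Fail", "Error": "EtlJobFailed"},
        },
    }


definition = etl_state_machine(
    "orders-raw-to-staging",
    "arn:aws:sns:us-east-1:123456789012:example-etl-alerts",
)
print(json.dumps(definition, indent=2))
```

Ending in a Fail state after the notification keeps the execution marked as failed, so dashboards and alarms on execution status still reflect the problem.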

Performance Tuning and Bottleneck Resolution

Optimize AWS Glue ETL jobs by right-sizing worker instances and adjusting the number of Data Processing Units based on your data volume. Partition your S3 data strategically to improve Athena query performance and reduce costs. Monitor memory usage patterns and CPU utilization to identify resource constraints. Use Glue job bookmarks to process only new or changed data, avoiding unnecessary reprocessing. Implement data compression and columnar storage formats like Parquet to speed up both transformation and analytics workflows while reducing storage costs.

Building robust ETL pipelines on AWS doesn’t have to be overwhelming when you break it down into manageable pieces. By combining S3 for storage, Glue for transformations, and Athena for analytics, you create a powerful foundation that can handle massive amounts of data while keeping costs under control. The key is starting with a solid architecture that thinks about scalability from day one, then automating your data transformations to reduce manual work and potential errors.

Don’t forget that your pipeline is only as good as your monitoring and maintenance strategy. Set up proper alerts, track your job performance, and regularly review your costs to make sure everything runs smoothly. Start small with a pilot project, test your approach thoroughly, and then scale up as your confidence and data volumes grow. Your future self will thank you for taking the time to build something that can evolve with your business needs.