Looking to unlock insights from your data without complex infrastructure? AWS offers a complete toolkit for data analysts, business intelligence professionals, and data engineers who need simplified yet powerful analysis solutions.

In this guide, we’ll explore how AWS services work together to create a seamless data storytelling pipeline. You’ll learn how S3 serves as your scalable data foundation, how AWS Glue transforms raw data into usable formats, and how Athena’s serverless SQL queries help you extract meaningful insights quickly.

We’ll also cover QuickSight’s visualization capabilities and how QuickSight Q allows anyone on your team to ask data questions in plain English—no coding required.

Understanding the AWS Data Analysis Ecosystem

How these tools work together to simplify data workflows

Think of AWS’s data tools as a well-oiled machine where each part has a specific job but works in harmony with the others. S3 serves as your central storage hub – a massive digital filing cabinet where all your raw data lives. AWS Glue then steps in as your data janitor, cleaning up and organizing this information into usable formats.

Once your data is clean, Athena becomes your question-asker. Need to know last quarter’s sales figures? Just ask Athena using familiar SQL, and it’ll dig through your data lake without moving a single file. The real magic happens when QuickSight enters the picture. It transforms those dry numbers into colorful charts and interactive dashboards that anyone can understand. And when your marketing team has questions? QuickSight Q lets them ask in plain English – no code required.

Key benefits of using AWS services for data analysis

The AWS stack comes with some serious perks that make life easier for data teams:

- Pay for what you use: No need for expensive hardware that sits idle half the time

- Scale instantly: Handling a sudden 10x data increase? No problem

- Zero maintenance headaches: AWS handles all the server upkeep

- Built-in security: Enterprise-grade protection without extra effort

- Seamless integration: These tools were designed to work together from day one

Why this stack is ideal for both technical and non-technical users

Data scientists and analysts get to work with powerful tools that handle massive datasets without writing complex infrastructure code. They can focus on insights rather than server management.

For business users who break out in hives at the mention of code, QuickSight’s visual interface and natural language queries are game-changers. Marketing managers can create their own reports without IT tickets. Executives can ask questions directly and get answers in seconds.

The real win? Everyone works from the same data sources, eliminating the “my numbers don’t match your numbers” debates that plague cross-department meetings.

Amazon S3: The Foundation of Your Data Lake

Setting up and organizing your data storage efficiently

Amazon S3 isn’t just cloud storage—it’s the backbone of your entire data lake strategy. Getting your S3 setup right from day one saves massive headaches down the road.

Start with a clear bucket naming convention. Something like:

{company}-{environment}-{purpose}-{region}
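As a rough sketch, the convention above can be enforced with a small helper. The function name and the example values are illustrative assumptions; the regular expression reflects the general S3 naming rules (3 to 63 characters, lowercase letters, digits, and hyphens, starting and ending with a letter or digit) and deliberately disallows dots.

```python
import re

# General S3 bucket-name shape: 3-63 chars, lowercase letters, digits, hyphens,
# beginning and ending with a letter or digit. Dots are valid in S3 but are
# excluded here on purpose to keep names simple.
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9-]{1,61}[a-z0-9]$")


def bucket_name(company: str, environment: str, purpose: str, region: str) -> str:
    """Build a name following {company}-{environment}-{purpose}-{region}."""
    parts = (company, environment, purpose, region)
    name = "-".join(part.strip().lower() for part in parts)
    if not _BUCKET_NAME.match(name):
        raise ValueError(f"not a valid S3 bucket name: {name!r}")
    return name


# Hypothetical example values.
print(bucket_name("acme", "prod", "datalake", "us-east-1"))
```

Validating names in code keeps typos and uppercase characters from reaching bucket creation, where they would fail anyway.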

Inside your buckets, folder structure matters. Consider this organization:

    /raw/        # Original unprocessed data
    /staged/     # Cleaned, validated data
    /processed/  # Transformed, analysis-ready data
    /curated/    # Business-domain specific datasets

Don’t dump everything into a single bucket. Split your data by frequency of access, retention requirements, and processing stage.

File formats make a huge difference too. Parquet and ORC will dramatically outperform CSV and JSON for analytical workloads. They’re columnar, compressed, and include schema information—perfect for Athena queries.

Best practices for data lake architecture

The secret to a successful data lake? Think layers, not just storage.

Your data lake architecture should follow these zones:

- Landing zone: Raw, untouched data exactly as received

- Raw zone: Validated, cataloged data in original format

- Trusted zone: Cleansed, standardized, transformed data

- Refined zone: Business-specific datasets optimized for consumption

Partitioning is your best friend. Slice your data by date, region, or category:

s3://mybucket/data/year=2023/month=10/day=15/

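This simple structure can cut query costs by 90% or more for queries that filter on the partition columns, because Athena then scans only the matching partitions rather than the whole dataset.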
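A minimal sketch of how a writer job might build that Hive-style prefix from a date. The function name is an assumption; the bucket and dataset names reuse the example path above.

```python
from datetime import date


def partition_prefix(bucket: str, dataset: str, day: date) -> str:
    """Return the year=/month=/day= prefix for one day's worth of data."""
    return (
        f"s3://{bucket}/{dataset}/"
        f"year={day.year}/month={day.month:02d}/day={day.day:02d}/"
    )


print(partition_prefix("mybucket", "data", date(2023, 10, 15)))
# s3://mybucket/data/year=2023/month=10/day=15/
```

Zero-padding the month and day keeps prefixes sorted correctly when they are listed as strings.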

Implement a data catalog from day one. AWS Glue Crawler can automatically discover and register your schemas, making the data immediately queryable.

Cost-effective strategies for managing large datasets

S3 seems cheap until your bill arrives. Smart management makes all the difference.

First, understand S3 storage classes:

| Storage Class | Use Case | Retrieval Time | Cost (relative) |
|---|---|---|---|
| Standard | Active data | Immediate | $$$ |
| Intelligent-Tiering | Variable access | Immediate | $$ |
| Glacier Deep Archive | Long-term backup | Hours | $ |

Set lifecycle policies to automatically transition older data to cheaper storage tiers. Data older than 90 days? Move it to Infrequent Access. Older than a year? Glacier.

Compress everything. Parquet files with Snappy compression can reduce storage by 75% compared with raw CSV (the exact ratio depends on your data) while improving query performance.

Delete what you don’t need. Set up expiration policies for temporary processing data and logs.
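The lifecycle and expiration rules described above could look roughly like the following. This is a sketch that only builds the configuration; the prefixes, rule IDs, and the 7-day expiry are assumptions. With boto3, the resulting dict would be passed as `LifecycleConfiguration` to the S3 client's `put_bucket_lifecycle_configuration` call.

```python
def lifecycle_configuration(data_prefix: str = "processed/",
                            temp_prefix: str = "tmp/",
                            temp_days: int = 7) -> dict:
    """Build an S3 lifecycle configuration: tier down aging data, expire temp files."""
    return {
        "Rules": [
            {
                "ID": "tier-down-aging-data",       # hypothetical rule name
                "Filter": {"Prefix": data_prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 90, "StorageClass": "STANDARD_IA"},  # Infrequent Access
                    {"Days": 365, "StorageClass": "GLACIER"},
                ],
            },
            {
                "ID": "expire-temporary-files",     # hypothetical rule name
                "Filter": {"Prefix": temp_prefix},
                "Status": "Enabled",
                "Expiration": {"Days": temp_days},
            },
        ]
    }


config = lifecycle_configuration()
print([rule["ID"] for rule in config["Rules"]])
```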

Finally, use S3 Storage Lens to identify cost-saving opportunities across your entire organization.

Security considerations for your data assets

Data lakes are gold mines—protect them accordingly.

Start with the principle of least privilege. Use IAM roles with specific permissions rather than long-term access keys.

Encrypt everything, both in transit (HTTPS) and at rest (SSE-S3 or SSE-KMS). Let me be clear: there’s zero reason not to encrypt your data lake.

Block public access at the account level. One misconfigured bucket can expose your entire business.
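Two of these controls are easy to express as data. The sketch below builds the four public-access-block flags and a bucket policy that denies any request not made over HTTPS. The function name and bucket name are assumptions; with boto3, the flags would go to the S3 Control client's `put_public_access_block` for the account, and the policy to the S3 client's `put_bucket_policy`.

```python
import json

# All four flags on: the strictest public access block setting.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def tls_only_policy(bucket: str) -> dict:
    """Bucket policy that rejects requests made without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# Hypothetical bucket name.
print(json.dumps(tls_only_policy("acme-prod-datalake-us-east-1"), indent=2))
```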

Implement S3 Object Lock for compliance data that should never be modified or deleted.

Use VPC endpoints to keep data transfer within the AWS network. This prevents your data from traversing the public internet.

Enable access logging and CloudTrail to track who’s accessing what, when.

Finally, consider implementing S3 Access Points to simplify permissions for different teams without compromising security.

AWS Glue: Transforming Raw Data into Valuable Insights

Creating automated ETL jobs without complex coding

Gone are the days when you needed a team of developers to build ETL pipelines. AWS Glue changes the game completely. With its visual interface, you can drag and drop components to create complete data workflows without writing a single line of code.

Want to convert CSV files to Parquet? Just a few clicks. Need to join datasets from different sources? Drag, connect, done.

But here’s what makes Glue truly special – it generates the code for you. Behind those visual elements, Glue is writing optimized PySpark scripts that you can customize if needed. It’s like having a data engineer in your pocket.

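    # Glue generates code along these lines
    from awsglue.context import GlueContext
    from pyspark.context import SparkContext

    glueContext = GlueContext(SparkContext.getOrCreate())
    datasource0 = glueContext.create_dynamic_frame.from_catalog(
        database="my_database",
        table_name="raw_data",
    )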

Leveraging Glue Crawlers to discover and catalog data

Crawlers are your secret weapon for data discovery. Point them at your S3 buckets, and they’ll scan everything – identifying file formats, schemas, and even sample data.

The best part? They automatically organize everything in the Glue Data Catalog, creating tables you can query with Athena or use in ETL jobs.

Pro tip: Schedule your crawlers to run regularly. Data changes, and your catalog should too.
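A scheduled crawler can be described with a handful of fields. The sketch below only assembles the request; the crawler name, role ARN, database, and path are hypothetical. With boto3, the dict would be unpacked into the Glue client's `create_crawler` call, where `Schedule` takes a cron expression.

```python
def crawler_request(name: str, role_arn: str, database: str, s3_path: str,
                    cron: str = "cron(0 2 * * ? *)") -> dict:
    """Build create_crawler arguments for a crawler that runs daily at 02:00 UTC."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        "Schedule": cron,
    }


# Hypothetical names and ARN.
request = crawler_request(
    name="raw-sales-crawler",
    role_arn="arn:aws:iam::123456789012:role/GlueCrawlerRole",
    database="my_database",
    s3_path="s3://mybucket/data/",
)
print(request["Schedule"])
```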

Implementing data quality checks in your pipeline

Garbage in, garbage out. That’s why data quality is non-negotiable.

Glue Data Quality gives you built-in tools to validate your data at every step:

- Check for null values in critical columns

- Validate data types and formats

- Ensure values fall within expected ranges

- Flag anomalies and outliers

You can even set up automatic alerts when quality issues are detected, stopping bad data before it poisons your analytics.
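To make the kinds of checks listed above concrete, here is a plain-Python sketch of null and range validation. It is not Glue Data Quality's own rule syntax, which is expressed in Glue's rule language; the column names and limits are assumptions for illustration.

```python
from typing import Any


def check_rows(rows: list[dict[str, Any]],
               required: list[str],
               ranges: dict[str, tuple[float, float]]) -> list[tuple[int, str]]:
    """Return (row_index, problem) pairs for null and out-of-range values."""
    problems = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) is None:
                problems.append((i, f"{col} is null"))
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{col}={value} outside [{low}, {high}]"))
    return problems


# Hypothetical sample data.
rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": None, "amount": -5.0},
]
for index, problem in check_rows(rows, ["order_id"], {"amount": (0, 10000)}):
    print(f"row {index}: {problem}")
```

A pipeline could refuse to publish a batch whenever the returned list is non-empty, which is the "stop bad data early" behavior described above.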

Tips for optimizing Glue performance and reducing costs

Glue jobs can get expensive if you’re not careful. Here’s how to keep costs down while maximizing performance:

- Use job bookmarks to process only new data

- Set appropriate DPU limits for your workloads

- Schedule jobs instead of running on-demand

- Take advantage of AWS Glue version 3.0+ for faster startup times

- Partition your data intelligently

For large datasets, try this sneaky trick: split your job into multiple smaller jobs that run in parallel. You’ll cut processing time dramatically.
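One way to sketch that split: divide the partitions into chunks and prepare one job run per chunk. The job name and the `--partitions` argument are hypothetical (the job script would have to read that argument itself), and the job must be configured to allow concurrent runs. With boto3, each dict would be unpacked into the Glue client's `start_job_run`.

```python
def chunked(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive chunks of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def job_run_requests(job_name: str, partitions: list[str],
                     per_job: int, workers: int = 10) -> list[dict]:
    """Build one start_job_run request per chunk of partitions."""
    return [
        {
            "JobName": job_name,
            "WorkerType": "G.1X",
            "NumberOfWorkers": workers,
            # Hypothetical custom argument consumed by the job script.
            "Arguments": {"--partitions": ",".join(chunk)},
        }
        for chunk in chunked(partitions, per_job)
    ]


days = [f"2023-10-{d:02d}" for d in range(1, 8)]
for request in job_run_requests("clickstream-etl", days, per_job=3):
    print(request["Arguments"]["--partitions"])
```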

Real-world ETL scenarios and solutions

Data transformation isn’t just academic – it solves real business problems.

Take an e-commerce company that needed to combine website clickstream data with inventory and sales. Their solution? A Glue ETL pipeline that:

- Ingested raw logs from S3

- Cleaned and normalized user behavior data

- Joined it with transaction records

- Aggregated results for analysis

The outcome? They identified product browsing patterns that never converted to sales, discovered inventory problems, and increased revenue by 15%.

Another example: a healthcare provider used Glue to transform patient records from multiple systems into FHIR-compliant formats. This standardization unlocked interoperability across their entire network.

Amazon Athena: SQL Power Without the Infrastructure

Writing effective queries for large datasets

Querying terabytes of data doesn’t have to be a headache. With Athena, you’re just a few SQL statements away from insights without worrying about servers or clusters.

Start simple. Focus on selecting only the columns you actually need:

    SELECT customer_id, purchase_amount, purchase_date
    FROM sales
    WHERE purchase_date > '2023-01-01'

Not:

    SELECT *
    FROM sales
    WHERE purchase_date > '2023-01-01'

The second query pulls unnecessary data and costs you money. Athena charges by the amount of data scanned, so being selective pays off.

Partition your data. It’s a game-changer. When your queries include partition fields in the WHERE clause, Athena skips irrelevant partitions entirely:

    SELECT sum(revenue)
    FROM orders
    WHERE date_partition = '2023-04-15'

This query might scan megabytes instead of gigabytes. Smart.
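Because billing follows bytes scanned, the saving is easy to estimate. The sketch below assumes a price of 5 USD per terabyte and a 10 MB minimum charge per query; both are assumptions to check against current Athena pricing for your region, and binary units are used for simplicity.

```python
TB = 1024 ** 4
MIN_BILLABLE_BYTES = 10 * 1024 ** 2  # assumed 10 MB minimum per query


def estimated_cost(bytes_scanned: int, usd_per_tb: float = 5.0) -> float:
    """Rough per-query cost from the amount of data scanned."""
    billable = max(bytes_scanned, MIN_BILLABLE_BYTES)
    return billable / TB * usd_per_tb


full_scan = estimated_cost(200 * 1024 ** 3)       # hypothetical 200 GB table
one_partition = estimated_cost(50 * 1024 ** 2)    # hypothetical 50 MB partition
print(f"full scan: ${full_scan:.4f}, one partition: ${one_partition:.6f}")
```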

Performance optimization techniques

Want faster queries without breaking the bank? Try these tricks:

- Compress your files – Gzip or Snappy compression reduces the data Athena needs to scan.

- Convert to columnar formats – Parquet and ORC are your friends here. They store data by column rather than row, so Athena can skip columns not in your query.

- Right-size your files – Aim for files between 128MB and 1GB. Too many small files create overhead; too few large files limit parallelization.

- Use approximate functions – Need a rough count of unique values? approx_distinct() is way faster than COUNT(DISTINCT).

- Create views for common queries – This simplifies complex joins and subqueries, making your code cleaner.
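For the file-sizing tip, a quick calculation shows how many output files to aim for. This is a sketch; the 256 MB target is an assumed midpoint inside the range suggested above, not a recommendation from AWS.

```python
import math

MIB = 1024 ** 2


def plan_file_count(total_bytes: int, target_bytes: int = 256 * MIB) -> int:
    """Number of output files needed to keep each file near the target size."""
    return max(1, math.ceil(total_bytes / target_bytes))


# A hypothetical 10 GB dataset written as roughly 256 MB files.
print(plan_file_count(10 * 1024 ** 3))  # 40
```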

Integrating Athena with other AWS services

Athena doesn’t live in isolation – it’s part of a powerful ecosystem.

Connect Athena to QuickSight and suddenly your SQL queries power beautiful visualizations and dashboards. No need to export data or build ETL pipelines.

Use AWS Lambda with Athena for scheduled queries. Maybe you want daily sales reports emailed to your team? Lambda can trigger the query, format the results, and send them automatically.
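The query-triggering part of such a Lambda might look like this sketch. The Athena client is passed in so the logic stays testable; in a real function it would come from `boto3.client("athena")`. The database name and results location are assumptions, and the `orders` table and `date_partition` column reuse the earlier example query.

```python
from datetime import date, timedelta


def daily_sales_query(day: date) -> str:
    """SQL for one day's revenue, filtered on the partition column."""
    return (
        "SELECT sum(revenue) AS revenue FROM orders "
        f"WHERE date_partition = '{day.isoformat()}'"
    )


def start_daily_report(athena, today: date, output_location: str,
                       database: str = "sales") -> str:
    """Start yesterday's report query and return its execution ID."""
    yesterday = today - timedelta(days=1)
    response = athena.start_query_execution(
        QueryString=daily_sales_query(yesterday),
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]


print(daily_sales_query(date(2023, 4, 15)))
```

Athena runs queries asynchronously, so a follow-up step would poll for completion before formatting and sending the results.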

Glue and Athena form a dream team. Glue catalogs your data, and Athena queries it – no manual schema management required.

For security teams, CloudTrail logs in S3 become instantly queryable through Athena. Investigating unusual account activity? Write a SQL query instead of sifting through log files.

The real magic happens when you combine these services. Imagine customer data flowing from S3 through Glue transformations, becoming queryable in Athena, and finally displayed in QuickSight dashboards – all without managing a single server.

Amazon QuickSight: Visualizing Data for Impactful Storytelling

Creating your first interactive dashboard

Getting started with QuickSight is surprisingly straightforward. Once you’ve connected your data source, you’re just a few clicks away from your first dashboard.

- Hit the “New analysis” button

- Select your dataset

- Choose “Create analysis”

That’s it! QuickSight automatically suggests visualizations based on your data types. Drag fields into the visual editor, and watch your dashboard come to life.

Want to spice things up? Try the “Suggested” button when adding a new visual. QuickSight analyzes your data and recommends the most impactful visualizations. It’s like having a data viz expert right at your fingertips.

Designing visualizations that communicate clearly

Great visualizations answer questions before they’re asked. Here’s how to nail it:

- Start with the question, not the chart type

- Embrace white space – crowded visuals confuse viewers

- Use color purposefully – highlight what matters, mute what doesn’t

- Keep it simple – if you need a manual to explain your chart, it’s too complicated

The best dashboards tell a story at a glance. Instead of cramming everything into one visual, break insights into digestible chunks. Your colleagues will thank you.

Pro tip: Add text boxes with key takeaways. Numbers are great, but explicit insights are better.

Sharing insights across your organization

What good is brilliant analysis if nobody sees it?

QuickSight makes sharing ridiculously easy. You can:

- Email direct dashboard links

- Schedule automated report delivery

- Embed dashboards in internal portals

- Set up role-based access controls

The “Dashboard sharing” feature lets you customize exactly what each team sees. Your finance folks might need detailed cost breakdowns, while executives just want high-level metrics. With QuickSight, everyone gets exactly what they need.

Advanced features for data-driven professionals

Ready to level up? QuickSight packs some serious analytical firepower:

- ML-powered anomaly detection spots unusual trends automatically

- Forecasting capabilities predict future values based on historical patterns

- What-if analysis lets you model different scenarios

- Natural language Q&A with QuickSight Q – just type questions like “show me monthly sales trends by region” and get instant visualizations

The narrative insights feature is a game-changer. It automatically generates text explanations of your data trends, saving hours of analysis time.

Custom calculations? Drill-downs? Interactive filtering? All there, waiting for you to explore.

QuickSight Q: Democratizing Data Analysis with Natural Language

How Q transforms business questions into visual answers

Ever asked a question about your data and wished it would just answer you directly? That’s exactly what QuickSight Q does. No more complex query building or dashboard hunting.

Q works its magic by interpreting what you’re actually asking for. Type something like “What were our sales in California last month?” and Q immediately understands you want:

- A visualization of sales data

- Filtered to California location

- Limited to last month’s timeframe

Behind the scenes, Q is breaking down your question, mapping terms to your data fields, figuring out relationships, and selecting the best visualization type. Ask about trends? You’ll get a line chart. Comparing categories? Hello, bar chart.

The brilliance is that Q doesn’t just give you rigid answers. Didn’t quite get what you wanted? Refine your question on the fly. Need to pivot? Ask a follow-up. The entire experience feels like chatting with a data analyst who has instant access to all your information.

Training Q to understand your specific business context

Q isn’t just smart out of the box—it gets smarter about your business.

Your industry has its own language. “Conversion” means something different to an e-commerce company than to a religious organization. Q learns these distinctions through topic training.

Here’s how to make Q speak your language:

- Create topics that map to your data sources

- Review and verify field names and synonyms

- Add business-specific terminology and jargon

- Teach Q how metrics should be calculated

The real power comes when you actively improve Q’s knowledge. When Q misunderstands a question, correct it. These corrections become part of its learning. Over time, Q develops an understanding of your business that rivals your most experienced analysts.

Empowering non-technical users to find insights independently

The data gap in most organizations is real: technical teams drown in dashboard requests while business users wait for answers they need now.

Q completely flips this dynamic.

Marketing managers can ask “How are our social campaigns performing?” without learning SQL. Sales reps can check “Who are my highest value prospects?” without bothering the analytics team. Executives can inquire “Where are we losing market share?” right before a critical meeting.

This independence creates a virtuous cycle. When people can answer their own questions:

- They ask more questions

- They discover unexpected insights

- They make data-driven decisions faster

- They develop deeper data intuition

The impact goes beyond convenience. When everyone can access insights directly, your entire organization becomes more responsive and data-driven. Decisions improve, opportunities are spotted earlier, and problems are addressed before they grow.

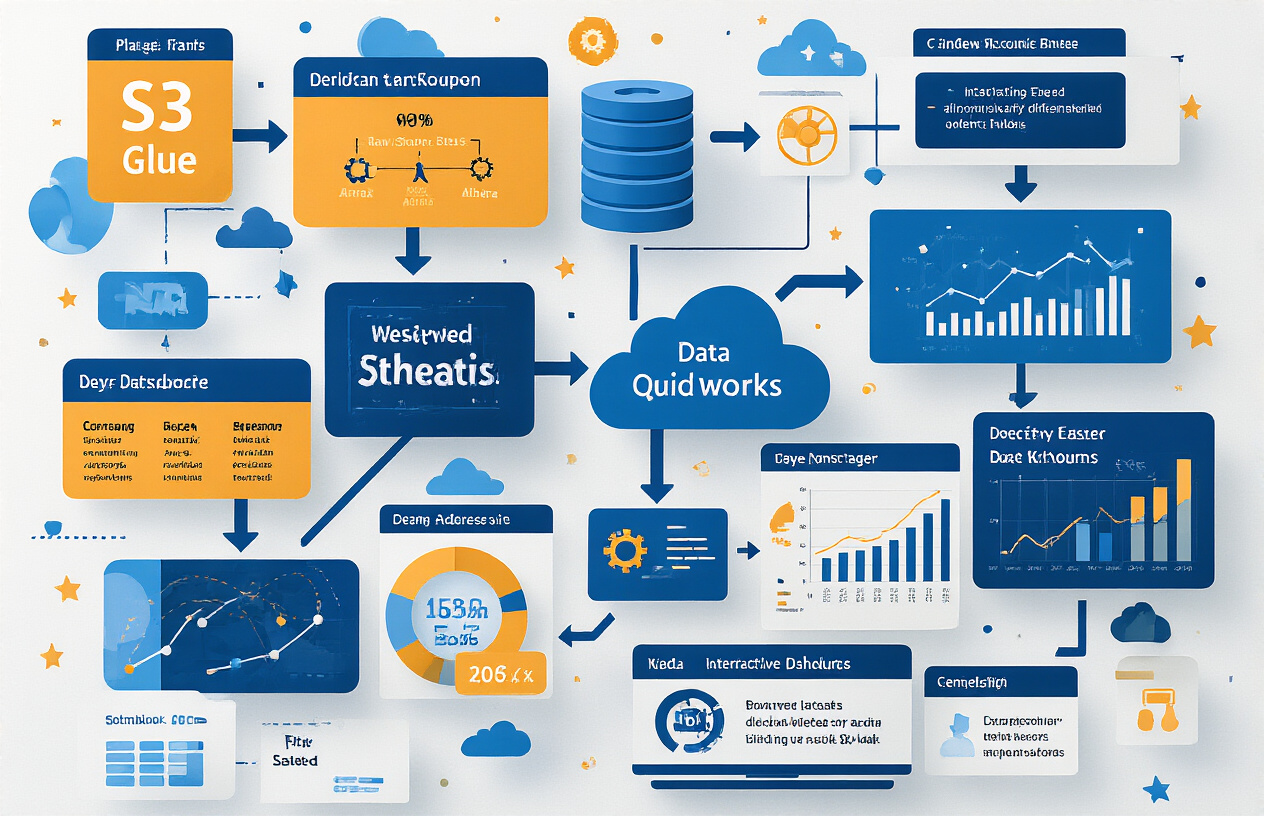

Building End-to-End Data Storytelling Workflows

A. Connecting the dots between S3, Glue, Athena and QuickSight

Data storytelling isn’t about isolated tools—it’s about how they work together. The magic happens when you connect S3, Glue, Athena, and QuickSight into a seamless pipeline.

Think of S3 as your data’s home base. It stores everything from CSV files to JSON logs at pennies per gigabyte. But raw data isn’t very useful on its own.

That’s where AWS Glue steps in. It crawls through your S3 buckets, figures out what’s what, and builds a catalog of your data. Need to transform messy data? Glue jobs handle the dirty work—converting formats, cleaning values, and structuring your information without you writing complex code.

Once your data’s organized, Athena lets you ask questions using familiar SQL. No servers to manage, no databases to tune. Just type your query and get answers from terabytes of data in seconds.

QuickSight completes the circle by turning those query results into visuals anyone can understand. Drag-and-drop charts, AI-powered insights, and interactive dashboards make your data tell its story.

The best part? These services talk to each other automatically. Glue tells Athena what data you have, Athena feeds results to QuickSight, and everything stays in sync as your S3 data grows.

B. Automating insight generation and distribution

Manually creating reports is so 2010. Today’s data heroes automate everything.

Start with scheduled Glue jobs that transform fresh data as it arrives. Set them to run hourly, daily, or whenever new files land in S3. Your data gets processed while you sleep.

Athena queries can be embedded into Lambda functions that run on schedules or triggers. Imagine automatically analyzing yesterday’s sales data every morning at 6 AM, with anomalies flagged before you’ve had your coffee.

QuickSight takes automation to another level. Schedule email reports to hit stakeholders’ inboxes with the latest numbers. Create dashboard alerts that ping your team when metrics cross thresholds. Set up QuickSight Q to generate weekly summaries in plain English.

Want to embed analytics in your internal apps? QuickSight’s embedding API lets you put live dashboards anywhere your team works. No more “check the dashboard” reminders—the insights come to them.
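For a registered QuickSight user, requesting an embed URL comes down to a small request like the sketch below. The account ID, user ARN, and dashboard ID are hypothetical; with boto3, the dict would be unpacked into the QuickSight client's `generate_embed_url_for_registered_user`, and the response carries the URL to place in your app. The 15 to 600 minute session range is stated from memory of the API limits and is worth verifying.

```python
def embed_url_request(account_id: str, user_arn: str, dashboard_id: str,
                      minutes: int = 60) -> dict:
    """Build the request for a dashboard embed URL for a registered user."""
    if not 15 <= minutes <= 600:
        raise ValueError("session lifetime must be between 15 and 600 minutes")
    return {
        "AwsAccountId": account_id,
        "UserArn": user_arn,
        "SessionLifetimeInMinutes": minutes,
        "ExperienceConfiguration": {
            "Dashboard": {"InitialDashboardId": dashboard_id},
        },
    }


# Hypothetical identifiers.
request = embed_url_request(
    account_id="123456789012",
    user_arn="arn:aws:quicksight:us-east-1:123456789012:user/default/analyst",
    dashboard_id="sales-overview",
)
print(request["ExperienceConfiguration"])
```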

The real power move? Creating a notification workflow where unusual data patterns trigger targeted alerts. Sales spike in a region? The regional manager gets an automatic analysis. Customer satisfaction dropping? Product teams receive instant feedback dashboards.

C. Establishing governance and security throughout the pipeline

Data storytelling falls flat if nobody trusts the data. Security and governance aren’t afterthoughts—they’re foundational.

S3 bucket policies let you lock down who sees what raw data. Use AWS KMS for encryption at rest, ensuring sensitive information stays protected even in storage.

Glue’s integration with AWS Lake Formation provides fine-grained access controls at the table and column level. Marketing can see customer preferences while being blocked from seeing personal identifiers. Finance sees purchase amounts but not browsing history.
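A column-level grant of this kind can be sketched as a Lake Formation permissions request that allows SELECT on every column except the sensitive ones. The role ARN, database, table, and column names are hypothetical; with boto3, the dict would be unpacked into the Lake Formation client's `grant_permissions`.

```python
def column_grant(principal_arn: str, database: str, table: str,
                 excluded_columns: list[str]) -> dict:
    """Grant SELECT on all columns of a table except the excluded ones."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnWildcard": {"ExcludedColumnNames": list(excluded_columns)},
            }
        },
        "Permissions": ["SELECT"],
    }


# Hypothetical: marketing reads customer preferences but not identifiers.
grant = column_grant(
    principal_arn="arn:aws:iam::123456789012:role/MarketingAnalysts",
    database="my_database",
    table="customers",
    excluded_columns=["email", "phone_number"],
)
print(grant["Resource"]["TableWithColumns"]["ColumnWildcard"])
```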

For Athena, query results can be encrypted, and access patterns monitored through CloudTrail. Set per-query data scan limits on your workgroups to prevent accidental (or malicious) resource drainage.
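Athena workgroups can carry both safeguards: encrypted query results and a cap on the bytes a single query may scan. The sketch below builds such a workgroup definition; the workgroup name, results location, and KMS key ARN are hypothetical, and the 10,000,000-byte floor is the API minimum as I recall it. With boto3, the dict would be unpacked into the Athena client's `create_work_group`.

```python
MIN_CUTOFF_BYTES = 10_000_000  # assumed API minimum for the per-query cutoff


def workgroup_request(name: str, results_location: str, kms_key_arn: str,
                      max_scan_bytes: int) -> dict:
    """Workgroup with KMS-encrypted results and a per-query scan limit."""
    if max_scan_bytes < MIN_CUTOFF_BYTES:
        raise ValueError("per-query cutoff is below the assumed minimum")
    return {
        "Name": name,
        "Configuration": {
            "ResultConfiguration": {
                "OutputLocation": results_location,
                "EncryptionConfiguration": {
                    "EncryptionOption": "SSE_KMS",
                    "KmsKey": kms_key_arn,
                },
            },
            # Stop client-side settings from overriding the workgroup's.
            "EnforceWorkGroupConfiguration": True,
            "BytesScannedCutoffPerQuery": max_scan_bytes,
        },
    }


# Hypothetical values: cap each query at roughly 100 GB scanned.
request = workgroup_request(
    name="analysts",
    results_location="s3://acme-prod-athena-results-us-east-1/",
    kms_key_arn="arn:aws:kms:us-east-1:123456789012:key/example-key-id",
    max_scan_bytes=100 * 1024 ** 3,
)
print(request["Configuration"]["BytesScannedCutoffPerQuery"])
```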

QuickSight’s row-level security is a game-changer. Regional managers see only their territory’s data, while executives view everything. All from the same dashboard, with permissions handled automatically.
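Row-level security in QuickSight is driven by a small rules dataset that maps users to the field values they may see. A sketch of generating that file follows, assuming a `region` column in the dashboard's dataset and hypothetical user names; an empty value is meant to grant access to all values, which is how the executive row is modeled here.

```python
import csv
import io


def rls_rules_csv(assignments: dict[str, list[str]], field: str = "region") -> str:
    """Build a rules file: one row per user, comma-joined allowed values."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["UserName", field])
    for user, values in assignments.items():
        # An empty cell leaves the field unrestricted for that user.
        writer.writerow([user, ",".join(values)])
    return buffer.getvalue()


# Hypothetical users and territories.
print(rls_rules_csv({
    "midwest-manager": ["Midwest"],
    "coastal-manager": ["West", "East"],
    "executive": [],
}))
```

The generated file would be uploaded as its own dataset and attached to the main dataset as its row-level security rules.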

Don’t forget data lineage tracking. AWS Glue now offers data lineage features showing how information flows through your pipeline. When someone questions a metric, you can trace it back to the source.

D. Scaling your solution as data volumes grow

The beauty of this AWS stack? It grows with you without breaking a sweat.

S3 scales infinitely, handling petabytes as easily as gigabytes. Your storage costs increase linearly, but there’s no performance cliff as volumes expand.

Glue jobs automatically distribute workloads across multiple workers. As your data doubles, just dial up the DPU settings—no rewriting code or rearchitecting solutions.

Athena’s secret weapon is partitioning. By organizing S3 data into year/month/day folders, queries touch only relevant data. A query that once scanned terabytes now scans gigabytes, cutting costs and boosting speed.

For massive datasets, compress files and convert to columnar formats like Parquet. Your queries run faster, and your S3 bill shrinks. Win-win.

QuickSight handles millions of rows with SPICE, its in-memory calculation engine. Data imported into SPICE is served from memory, so visuals stay fast as users filter and drill down, without re-querying the source each time.

Need to go bigger? Direct Query mode lets QuickSight pass queries straight to Athena for truly massive datasets, trading some speed for unlimited scale.

Practical Use Cases and Implementation Strategies

A. Marketing analytics and customer insights

Picture this: You’ve got thousands of customer interactions happening daily across your digital platforms. That’s gold sitting in your AWS S3 buckets! With this stack, marketing teams can finally connect the dots without begging IT for help.

Start by dumping your CRM exports, website analytics, and campaign data into S3. AWS Glue crawlers automatically catalog everything, identifying customer segments and behavioral patterns. Then create an Athena query like this:

    SELECT campaign_source, COUNT(*) as conversions, AVG(purchase_value) as avg_value
    FROM marketing_data
    WHERE conversion_date BETWEEN '2023-01-01' AND '2023-03-31'
    GROUP BY campaign_source
    ORDER BY conversions DESC

One marketing director I worked with slashed their analysis time from days to minutes. They built QuickSight dashboards showing customer journey funnels and campaign ROI side-by-side. When the CMO asked “which segments responded best to our spring promotion?” they just typed that exact question into QuickSight Q and got instant visualizations.

The game-changer? Combining historical purchase data with real-time website behavior using the same infrastructure.

B. Financial reporting and forecasting

CFOs hate surprises. This AWS stack eliminates them.

Financial data from disparate systems lands in your S3 data lake – ERP exports, transaction logs, budget spreadsheets. AWS Glue transforms and standardizes everything, handling currency conversions and fiscal period alignments automatically.

Want to spot trends? Create an Athena view combining historical performance with current data:

    CREATE OR REPLACE VIEW quarterly_performance AS
    SELECT fiscal_quarter,
           SUM(revenue) as total_revenue,
           SUM(costs) as total_costs,
           SUM(revenue - costs) as profit
    FROM financial_transactions
    GROUP BY fiscal_quarter

Then visualize cash flow projections in QuickSight with confidence intervals showing best/worst case scenarios. One fintech company I advised uses this exact setup to reconcile transactions across 12 payment processors daily – what used to take a team of analysts now runs automatically.

The best part? When board members ask tough questions during quarterly reviews, finance leaders can drill down into the details instantly, right in the meeting.

C. Operational monitoring and optimization

Operations teams drowning in data? This solution turns that flood into actionable insights.

Warehouse management systems, delivery tracking, supply chain data – pour it all into S3 buckets. AWS Glue can normalize timestamps across time zones and join datasets that never talked to each other before.

Here’s a game-changing Athena query one logistics company uses:

    SELECT
        facility_id,
        AVG(processing_time) as avg_processing,
        STDDEV(processing_time) as processing_variability,
        COUNT(*) as volume
    FROM shipping_operations
    WHERE date_processed > CURRENT_DATE - INTERVAL '30' DAY
    GROUP BY facility_id
    HAVING COUNT(*) > 1000
    ORDER BY processing_variability DESC

This immediately highlights facilities with inconsistent performance. Their ops team created QuickSight dashboards showing real-time bottlenecks and resource utilization across 200+ locations.

When unexpected delays hit their Midwest distribution center, managers didn’t waste time hunting for the cause – QuickSight highlighted the staffing shortfall compared to incoming volume in seconds.

D. Product usage analysis and feature adoption

Product teams often build features nobody uses. This AWS setup prevents that costly mistake.

Stream user interaction logs directly to S3. AWS Glue can parse complex JSON events and transform them into analysis-ready tables. Then Athena lets product managers run cohort analyses without learning Python:

    SELECT
        DATE_TRUNC('week', first_seen) as cohort_week,
        feature_name,
        COUNT(DISTINCT user_id) as users,
        COUNT(DISTINCT CASE WHEN days_active > 7 THEN user_id END) as retained_users
    FROM user_feature_adoption
    GROUP BY DATE_TRUNC('week', first_seen), feature_name

One SaaS company discovered their most expensive feature to build was used by less than 2% of customers – information that reshaped their entire roadmap.

Their product managers now keep QuickSight dashboards showing feature adoption curves alongside customer satisfaction scores. When someone asks “how’s the new collaboration tool performing?” they simply type that into QuickSight Q and get instant visual confirmation.

E. IoT and real-time data processing

Connected devices generate mountains of data. Most companies collect it but never use it. Big mistake.

This AWS architecture handles it brilliantly. IoT device data streams into S3, while AWS Glue manages schema evolution as device firmware updates change data formats.

Athena’s ability to query S3 directly means you can analyze petabytes of sensor data without moving it:

    SELECT
        device_id,
        sensor_type,
        AVG(reading) as avg_reading,
        MIN(reading) as min_reading,
        MAX(reading) as max_reading,
        STDDEV(reading) as reading_variability
    FROM iot_sensor_data
    WHERE reading_timestamp > CURRENT_TIMESTAMP - INTERVAL '24' HOUR
      AND reading IS NOT NULL
    GROUP BY device_id, sensor_type
    HAVING COUNT(*) > 100

A manufacturing client uses this exact setup to monitor 12,000+ factory sensors. They’ve built QuickSight dashboards that automatically trigger alerts when equipment shows early failure patterns.

The ROI? Maintenance costs down 34% and unplanned downtime reduced by over half. Their team now spends time preventing problems instead of reacting to them.

Mastering AWS’s data analysis stack empowers organizations to transform raw data into compelling visual narratives without complex infrastructure management. By combining S3’s scalable storage, Glue’s ETL capabilities, Athena’s serverless SQL querying, and QuickSight’s powerful visualization tools, teams can build end-to-end data storytelling workflows that drive better decision-making across the organization.

Whether you’re analyzing customer behavior, optimizing operations, or identifying market trends, this integrated ecosystem makes sophisticated data analysis accessible to users of all technical levels. Start small with a focused use case, leverage these serverless technologies to minimize overhead, and watch as your organization’s data culture flourishes with insights that were previously locked away in raw datasets.