CPU vs GPU vs TPU vs Quantum Computing: What They Really Mean and When to Use Each

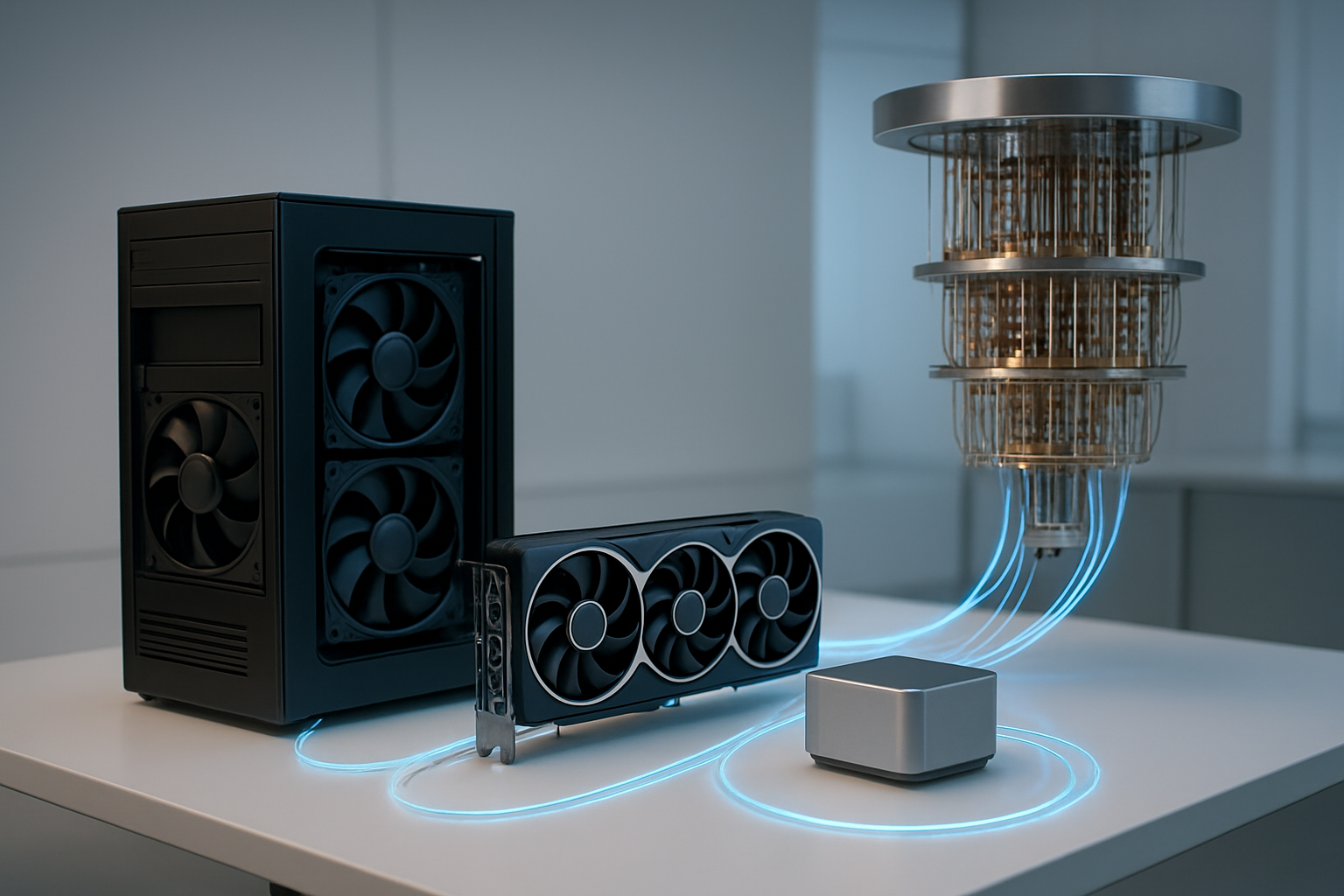

Choosing the right processor for your project can make or break its performance and budget. CPU vs GPU debates dominate tech discussions, and newer players like TPUs and quantum computers are entering the scene. Developers, data scientists, and tech decision-makers need a clear roadmap for choosing between them.

This guide breaks down the differences between CPUs, GPUs, and TPUs in plain English, helping you understand when each technology shines. We’ll explore how parallel versus sequential processing affects real-world performance. We’ll explain quantum computing in practical terms and compare AI processors on the points that matter for your specific needs.

You’ll discover the core strengths of traditional CPU architecture, learn why GPU parallel computing revolutionized machine learning, and understand how TPUs are optimized for AI workloads. We’ll also examine what quantum computers can and cannot do compared with classical machines. Finally, we walk through performance scenarios that help you pick the right tool for your computing challenges.

Understanding CPU Architecture and Core Functionality

How CPUs Process Instructions and Handle Multiple Tasks

CPUs operate through a carefully orchestrated sequence called the instruction cycle: fetching an instruction, decoding it, executing it, and writing back the result. Each CPU core contains specialized units such as the control unit, the arithmetic logic unit (ALU), and cache memory that work together to process individual instructions. Modern CPUs add branch prediction, which guesses the direction of upcoming branches so the core can keep fetching instructions. They also use out-of-order execution, which runs instructions as soon as their inputs are ready rather than strictly in program order.

The magic happens through something called pipelining, where multiple instructions move through different processing stages simultaneously. Think of it like an assembly line where one instruction gets decoded while another executes and a third gets fetched from memory. This approach maximizes the utilization of each processing unit within the CPU core.
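The arithmetic behind the assembly-line analogy can be sketched in Python. This assumes an idealized, classic five-stage pipeline with no stalls or hazards. The stage names are the textbook RISC ones, not any specific CPU’s. Real cores have deeper pipelines and lose cycles to data dependencies and mispredicted branches.

```python
# Idealized 5-stage pipeline: no hazards, stalls, or branch mispredictions.
STAGES = ["fetch", "decode", "execute", "memory", "write-back"]


def cycles_unpipelined(n_instructions: int, n_stages: int = len(STAGES)) -> int:
    # Each instruction passes through every stage before the next one starts.
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int = len(STAGES)) -> int:
    # The first instruction needs n_stages cycles to fill the pipeline;
    # after that, one instruction finishes every cycle.
    return 0 if n_instructions == 0 else n_stages + n_instructions - 1


def stage_occupancy(n_instructions: int) -> list[dict[str, str]]:
    # In cycle c, instruction i sits in stage c - i (when that stage exists).
    timeline = []
    for cycle in range(cycles_pipelined(n_instructions)):
        timeline.append({STAGES[cycle - i]: f"I{i}"
                         for i in range(n_instructions)
                         if 0 <= cycle - i < len(STAGES)})
    return timeline


for cycle, busy in enumerate(stage_occupancy(3)):
    print(cycle, busy)
print(cycles_unpipelined(1000), cycles_pipelined(1000))  # 5000 1004
```

In cycle 2, instruction I0 is executing while I1 is decoded and I2 is fetched, which is exactly the overlap the analogy describes.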

CPU cores also excel at context switching, rapidly jumping between different programs and tasks. The operating system scheduler allocates CPU time slices to various applications, creating the illusion that multiple programs run simultaneously on single-core systems. Multi-core processors take this further by literally running separate programs on different cores simultaneously.
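The time-slicing idea can be illustrated with a textbook round-robin scheduler. This is a minimal sketch under simplifying assumptions: one core, tasks measured in abstract time units, and context switches that cost nothing. The task names are hypothetical. Real operating system schedulers add priorities, fairness heuristics, and per-core run queues.

```python
from collections import deque


def round_robin(tasks, time_slice):
    """Time-share one core among tasks.

    tasks: dict mapping a task name to the CPU time it still needs.
    Returns (name, start, end) for every slice a task receives.
    """
    queue = deque(tasks.items())
    clock = 0
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)
        schedule.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            # Context switch: the unfinished task goes to the back of the queue.
            queue.append((name, remaining - run))
    return schedule


for slot in round_robin({"browser": 3, "editor": 2, "music": 1}, time_slice=1):
    print(slot)
```

Each task gets a turn before any task gets a second one, which is what produces the illusion of simultaneity on a single core.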

Cache hierarchies play a crucial role in CPU performance. Level 1 cache sits closest to each core, providing ultra-fast access to frequently used data and instructions. Level 2 and Level 3 caches offer progressively larger storage with higher latencies, creating a memory hierarchy that balances speed with capacity.
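A standard way to quantify what a cache hierarchy buys is average memory access time (AMAT). At each level, every access pays that level’s hit time, and misses also pay the cost of going to the next level. The latencies and miss rates below are hypothetical round numbers chosen for illustration, not measurements of any particular CPU.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: (hit_time, local_miss_rate) pairs ordered from L1 outward.
    memory_latency: cycles to reach DRAM after missing the last cache level.
    """
    cost = memory_latency
    for hit_time, miss_rate in reversed(levels):
        # Every access pays this level's hit time; misses also pay the next level's cost.
        cost = hit_time + miss_rate * cost
    return cost


# Hypothetical numbers: L1 4 cycles / 10% misses, L2 12 / 40%, L3 40 / 50%, DRAM 200.
levels = [(4, 0.10), (12, 0.40), (40, 0.50)]
print(f"with caches: {amat(levels, 200):.1f} cycles")  # 10.8
print(f"DRAM only:   {200} cycles")
```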

Why CPUs Excel at Sequential Processing and Complex Logic

CPUs dominate sequential processing scenarios because their architecture prioritizes single-threaded performance and complex decision-making capabilities. Unlike GPUs that sacrifice individual core complexity for massive parallelism, CPU cores pack sophisticated logic units, large caches, and advanced prediction mechanisms that excel at handling branching code and conditional statements.

Sequential processing benefits enormously from CPU features like branch prediction, which uses historical execution patterns to guess which code paths will be taken. When programs contain lots of if-then statements, loops with variable iterations, or recursive functions, CPUs can adapt their processing strategy dynamically. This flexibility makes them ideal for operating systems, databases, web servers, and most business applications.
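One classic way to use execution history is a 2-bit saturating counter per branch, sketched below. It is a simplified textbook predictor, assumed here for illustration; modern CPUs combine much longer branch histories with multiple prediction tables. The branch address `0x400` is a hypothetical placeholder.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter per branch: 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, initial=1):          # start "weakly not taken" (an assumption)
        self.initial = initial
        self.counters = {}

    def predict(self, pc):
        return self.counters.get(pc, self.initial) >= 2

    def update(self, pc, taken):
        c = self.counters.get(pc, self.initial)
        self.counters[pc] = min(c + 1, 3) if taken else max(c - 1, 0)


def accuracy(outcomes, pc=0x400):
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)
    return correct / len(outcomes)


# A loop branch taken 9 times and then not taken once, with the loop run 10 times.
loop_branch = ([True] * 9 + [False]) * 10
print(f"{accuracy(loop_branch):.0%}")   # 89%
```

A single loop exit only nudges the counter from 3 to 2, so the predictor still predicts "taken" when the loop starts again. After the first warm-up run, it misses once per loop run rather than twice.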

CPU cores also cope well with irregular memory access. Their multi-level caches, hardware prefetchers, and memory controllers exploit data locality, keeping frequently accessed information close to the processing units. This architecture particularly benefits applications with unpredictable memory access patterns or those requiring frequent communication between different parts of the program.

The CPU vs GPU comparison becomes clear when examining instruction-level parallelism versus data parallelism. CPUs extract parallelism from individual instruction streams through techniques like superscalar execution and speculative processing. They can execute multiple instructions per clock cycle from a single program thread, making them perfect for applications where tasks must complete in specific orders or depend on previous calculations.

Power Consumption and Heat Generation in Modern CPUs

Modern CPUs walk a delicate balance between performance and thermal efficiency, with power consumption varying dramatically based on workload characteristics and clock speeds. Dynamic voltage and frequency scaling (DVFS) allows CPUs to adjust their operating parameters in real-time, reducing power when full performance isn’t needed and ramping up for demanding tasks.

Thermal design power (TDP) ratings provide guidelines for cooling system requirements, typically ranging from 15 watts for mobile processors to over 200 watts for high-end desktop and server chips. These ratings represent average power consumption under sustained workloads, though actual power draw can spike significantly during peak processing periods.

Heat generation stems primarily from transistor switching activity and leakage current. As clock speeds rise, power consumption grows much faster than linearly. Dynamic power scales with the square of the supply voltage times the frequency, and higher frequencies usually require higher voltages. This relationship explains why CPU manufacturers focus on architectural improvements and process node advancements rather than simply increasing clock speeds.
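The superlinear relationship follows from the standard CMOS switching-power approximation P = αCV²f. Here α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The values below are hypothetical, and leakage power is ignored. The point is the ratio: a 50% frequency increase that needs a 30% voltage increase costs roughly 2.5 times the dynamic power.

```python
def dynamic_power(activity, capacitance_f, voltage_v, frequency_hz):
    """CMOS switching power P = alpha * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical core: 50 nF switched capacitance, 20% activity factor.
base = dynamic_power(0.2, 5e-8, 1.0, 3.0e9)    # 3.0 GHz at 1.0 V
boost = dynamic_power(0.2, 5e-8, 1.3, 4.5e9)   # 4.5 GHz, assumed to need 1.3 V
print(f"{base:.1f} W -> {boost:.1f} W ({boost / base:.2f}x for 1.5x the clock)")
```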

Modern CPUs employ various power management techniques including:

- Clock gating: Disabling clock signals to unused circuit sections

- Power gating: Completely shutting off power to idle cores or functional units

- Turbo boost: Temporarily increasing clock speeds when thermal and power budgets allow

- Idle states: Deep sleep modes that minimize power consumption during inactivity

Cooling solutions must account for both sustained thermal loads and transient heat spikes. Inadequate cooling leads to thermal throttling, where CPUs automatically reduce performance to prevent overheating. This thermal management becomes increasingly challenging as transistor densities increase and chip designers pack more functionality into smaller spaces.

GPU Technology Breakdown and Parallel Processing Power

Massive Parallel Architecture for Simultaneous Operations

Graphics Processing Units contain thousands of simple cores designed to perform many calculations at once, unlike CPUs, which devote their resources to fast sequential processing. A modern GPU can pack anywhere from a few thousand to more than 16,000 such cores on a single chip. NVIDIA’s RTX 4090, for example, has 16,384 CUDA cores, each executing simple arithmetic operations in parallel. This massive parallelism makes GPUs extremely powerful for tasks that can be broken down into many small, independent calculations.

The architecture centers around streaming multiprocessors (SMs, NVIDIA’s term; AMD calls them compute units). Each SM groups cores together and shares resources such as registers, shared memory, and instruction schedulers among them. An SM can keep hundreds to a couple of thousand threads resident at once. These threads are organized into warps (32 threads on NVIDIA) or wavefronts (AMD) that execute the same instruction across multiple data points. This SIMT (Single Instruction, Multiple Threads) model, a close relative of SIMD (Single Instruction, Multiple Data), excels when you need to perform identical operations on large datasets.
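Branching is where the SIMT model shows its limits. When threads in one warp take different paths, the hardware runs each path in turn with some lanes masked off. The Python sketch below imitates that behavior on a 32-lane warp for a hypothetical kernel that doubles even values and increments odd ones. It models the idea only, not real GPU scheduling.

```python
WARP_SIZE = 32  # threads per warp on NVIDIA GPUs


def run_warp(data):
    """Run `x * 2 if x is even else x + 1` across one warp, SIMT-style.

    All lanes follow one instruction stream; an active mask decides which
    lanes keep each result. Returns (results, number_of_branch_passes).
    """
    assert len(data) == WARP_SIZE
    is_even = [x % 2 == 0 for x in data]
    out = list(data)
    passes = 0
    if any(is_even):                      # "then" path, odd lanes masked off
        passes += 1
        out = [x * 2 if active else old for x, active, old in zip(data, is_even, out)]
    if not all(is_even):                  # "else" path, even lanes masked off
        passes += 1
        out = [x + 1 if not even else old for x, even, old in zip(data, is_even, out)]
    return out, passes


_, uniform_passes = run_warp(list(range(0, 64, 2)))   # every lane even: no divergence
mixed, mixed_passes = run_warp(list(range(32)))        # alternating lanes: both paths run
print(uniform_passes, mixed_passes)                    # 1 2
print(mixed[:4])                                       # [0, 2, 4, 4]
```

A divergent warp pays for both branches, which is why GPU code runs best when neighboring threads follow the same path.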

Memory hierarchy plays a crucial role in this parallel design. GPUs feature multiple levels of memory, from fast but limited registers and shared memory within each SM to larger but slower global memory accessible by all cores. The memory system is built to keep thousands of cores supplied with data, although poorly structured access patterns can still create bottlenecks.

Graphics Rendering vs General Purpose Computing Applications

Originally designed for rendering pixels and textures in video games and 3D applications, GPUs have evolved far beyond their graphics roots. Traditional graphics workloads involve transforming millions of vertices, calculating lighting effects, and applying textures – all highly parallel tasks that map perfectly to GPU architecture.

Graphics Applications:

- Real-time 3D rendering and animation

- Video encoding and decoding

- Image processing and filters

- Ray tracing for realistic lighting

General Purpose Computing (GPGPU):

- Machine learning model training

- Scientific simulations and modeling

- Cryptocurrency mining

- Data analysis and processing

The transition from graphics to general-purpose computing happened because many computational problems share similar characteristics with graphics rendering. Both involve applying the same operation to massive amounts of data, making them ideal candidates for parallel rather than sequential processing.

Modern frameworks like CUDA, OpenCL, and ROCm have made it easier for developers to harness GPU parallel computing power for non-graphics tasks. This shift has transformed GPUs into essential components for AI and scientific computing workloads.

Memory Bandwidth Advantages for Data-Intensive Tasks

GPU memory systems deliver significantly higher bandwidth than typical CPU memory configurations. A desktop CPU with dual-channel DDR4 or DDR5 memory offers roughly 50-100 GB/s; server CPUs with many memory channels reach several hundred GB/s. High-end GPUs, by contrast, can achieve 500-1,000 GB/s or more. This bandwidth advantage proves critical for data-intensive applications that need to move large amounts of information quickly.

| Component | Memory Bandwidth | Memory Type |
|---|---|---|
| Desktop CPU (dual-channel) | 50-100 GB/s | DDR4/DDR5 |
| Gaming GPU | ~400-1,000 GB/s | GDDR6/GDDR6X |
| Data Center GPU | 900-3,350 GB/s | HBM2/HBM2e/HBM3 |

The wide memory bus in GPUs (often 256-bit, 384-bit, or even 4096-bit for HBM) allows multiple memory transactions to occur simultaneously. This design philosophy prioritizes throughput over latency, which works perfectly for applications that process large datasets in batches rather than requiring immediate responses to individual requests.
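Peak memory bandwidth follows from simple arithmetic: bus width in bytes times the transfer rate. The configurations below are illustrative assumptions. The GDDR6X line approximates a high-end gaming card such as the RTX 4090. Real applications achieve only part of these theoretical peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfer_rate_gt_s):
    """Theoretical peak bandwidth: bytes per transfer x billions of transfers per second."""
    return bus_width_bits / 8 * transfer_rate_gt_s


# Illustrative configurations (assumed, rounded); sustained bandwidth is lower in practice.
configs = {
    "dual-channel DDR5-6000 (2 x 64-bit)": (128, 6.0),
    "384-bit GDDR6X at 21 Gbps per pin": (384, 21.0),
    "4096-bit HBM2 at 1.75 Gbps per pin": (4096, 1.75),
}
for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):,.0f} GB/s")
```

The HBM line shows the trade-off behind the wide bus: a much lower per-pin rate, compensated by thousands of pins.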

High memory bandwidth becomes especially important for AI workloads where models need to access millions or billions of parameters repeatedly during training and inference. The ability to feed data to thousands of cores continuously prevents the processing units from sitting idle while waiting for information.

Energy Efficiency in High-Performance Computing Scenarios

GPUs deliver superior performance per watt compared to CPUs for many parallel computing tasks. This energy efficiency stems from their specialized architecture that dedicates most chip area to computation rather than complex control logic and cache systems. While individual GPU cores are simpler than CPU cores, the massive parallelism allows GPUs to complete suitable workloads using less total energy.

Modern data center GPUs incorporate advanced power management features including dynamic voltage and frequency scaling, allowing different parts of the chip to operate at optimal power levels based on workload demands. Some GPUs can selectively power down unused cores or reduce clock speeds in less demanding sections while maintaining peak performance where needed.

The energy efficiency advantage becomes particularly pronounced in AI training scenarios where the same mathematical operations repeat millions of times. Rather than using a few powerful CPU cores running at high frequencies, GPUs distribute the work across thousands of efficient cores running at moderate speeds. This reduces the energy spent per operation while maintaining high throughput.

Liquid cooling systems and advanced thermal management allow modern GPUs to sustain high performance levels without thermal throttling, ensuring consistent energy efficiency across extended computing sessions. This thermal stability proves essential for data centers running continuous AI training or scientific simulation workloads.

TPU Specifications and AI-Optimized Design

Tensor Operations and Machine Learning Acceleration

TPUs excel at handling the massive matrix calculations that power modern AI systems. Unlike general-purpose CPUs, or GPUs whose parallel architecture grew out of graphics, TPUs are custom-built for the specific mathematical operations that neural networks demand. Think of tensor operations as the mathematical building blocks of AI. They are the multiplications and additions over multi-dimensional arrays that repeat millions of times when training a machine learning model.

The magic happens in how TPUs process these operations. A CPU core completes only a handful of multiply-accumulate operations per clock cycle (more with SIMD vector units). The first-generation TPU’s 256×256 matrix unit, by contrast, performs 65,536 multiply-accumulates every cycle. This specialized architecture means that training a deep learning model that might take weeks on traditional hardware can sometimes be completed in days or even hours.

Google’s first-generation TPU, built for inference, focused on 8-bit integer arithmetic. From the second generation onward, TPUs added bfloat16, a 16-bit floating-point format suited to training. These reduced-precision formats provide the accuracy most machine learning workloads need. They also cut power consumption and increase processing speed compared with the 32-bit and 64-bit floating-point operations that CPUs traditionally use. Modern data center GPUs support similar reduced-precision formats.
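bfloat16 keeps float32’s 8-bit exponent, so its range matches float32. It keeps only 7 explicit mantissa bits, so precision drops to roughly 2-3 decimal digits. The sketch below converts a Python float to the nearest bfloat16 value using round-half-to-even on the float32 bit pattern. It is a generic illustration of the format, not TPU code, and it skips special handling of NaN.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (round half to even).

    bfloat16 = float32's sign bit + 8-bit exponent + top 7 mantissa bits.
    NaN handling is omitted for brevity.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]   # float32 bit pattern
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)           # ties round to even
    bf16_bits = ((bits + rounding_bias) >> 16) & 0xFFFF   # keep the upper 16 bits
    return struct.unpack("<f", struct.pack("<I", bf16_bits << 16))[0]


print(to_bfloat16(3.14159))   # 3.140625: only about 3 significant digits survive
print(to_bfloat16(70000.0))   # 70144.0: coarse, but still representable
```

The 70,000 example also shows why range matters. The value exceeds float16’s maximum of 65,504 but fits comfortably in bfloat16.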

Custom Silicon Architecture for Neural Network Training

TPUs represent a fundamental shift from general-purpose computing to application-specific integrated circuits (ASICs) designed exclusively for AI workloads. The architecture centers around a systolic array – a grid of processing elements that work together like a perfectly synchronized orchestra. Each processing element handles simple operations, but when thousands work together, they create enormous computational power.
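The systolic idea can be simulated in a few lines. This sketch uses an output-stationary dataflow, chosen because it is easy to follow. Each processing element (PE) keeps one output value. Rows of A enter from the left and columns of B from the top, each delayed by one cycle per row or column, and values hop one PE per cycle. Google’s TPU matrix unit is described as weight-stationary instead. Treat this as an illustration of systolic arrays in general rather than of the TPU’s exact design.

```python
def systolic_matmul(A, B):
    """Multiply A (n x k) by B (k x m) on a simulated n x m output-stationary systolic array."""
    n, k, m = len(A), len(A[0]), len(B[0])
    acc = [[0] * m for _ in range(n)]      # each PE's running sum (its output value)
    a_reg = [[0] * m for _ in range(n)]    # A value each PE holds, passed right next cycle
    b_reg = [[0] * m for _ in range(n)]    # B value each PE holds, passed down next cycle

    for t in range(n + m + k - 2):         # cycles until the last PE sees its last pair
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:                 # left edge: feed row i of A, delayed i cycles
                    s = t - i
                    new_a[i][j] = A[i][s] if 0 <= s < k else 0
                else:                      # otherwise take the left neighbour's value
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:                 # top edge: feed column j of B, delayed j cycles
                    s = t - j
                    new_b[i][j] = B[s][j] if 0 <= s < k else 0
                else:                      # otherwise take the upper neighbour's value
                    new_b[i][j] = b_reg[i - 1][j]
                acc[i][j] += new_a[i][j] * new_b[i][j]   # one multiply-accumulate per PE
        a_reg, b_reg = new_a, new_b
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Every PE performs one multiply-accumulate per cycle using only values handed over by its neighbours. This is why such arrays need little control logic or memory traffic per operation.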

The memory architecture in TPUs is equally important. Traditional processors often struggle to supply the memory bandwidth large neural networks need. TPUs address this with large on-chip buffers and, from the second generation onward, high-bandwidth memory (HBM) in the same package as the processor. This reduces the bottleneck that occurs when data has to travel to and from external memory.

Google reports that its fourth-generation TPU (TPU v4) delivers about 2.1 times the performance of TPU v3 and about 2.7 times the performance per watt. A TPU v4 pod connects 4,096 chips through a high-speed interconnect. This lets researchers train models with hundreds of billions of parameters, something that would be impractical with conventional hardware setups.

Cost Benefits for Large-Scale AI Deployments

The economics of TPU deployment become compelling at enterprise scale. While the upfront costs might seem substantial, the total cost of ownership tells a different story. TPUs can consume less energy per operation than GPU clusters performing similar tasks, translating to lower electricity bills and reduced cooling requirements.

Some comparisons put the cost of training large language models or computer vision systems on TPUs at 60-80% below equivalent GPU setups once both hardware and operational expenses are included. Actual savings depend heavily on the model, software stack, and pricing. Faster training also means faster time-to-market for AI products, which can outweigh the initial investment.

Cloud-based TPU access through Google Cloud Platform makes this technology accessible without massive capital expenditure. Companies can rent TPU time for specific projects, paying only for what they use rather than maintaining expensive hardware that might sit idle between training runs.

For organizations running continuous AI workloads, dedicated TPU capacity can pay for itself within roughly 6-12 months, provided utilization stays high. The savings come from reduced training times and lower operational costs compared with CPU or GPU deployments.
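The payback claim rests on simple arithmetic: up-front cost divided by monthly savings. All figures below are hypothetical placeholders, not real TPU or GPU prices; substitute your own quotes and measured utilization.

```python
import math


def break_even_months(upfront_cost, current_monthly_cost, new_monthly_cost):
    """Months until a one-time cost is repaid by lower monthly running costs."""
    monthly_savings = current_monthly_cost - new_monthly_cost
    if monthly_savings <= 0:
        return math.inf                      # the switch never pays for itself
    return upfront_cost / monthly_savings


# Hypothetical figures only: one-time migration/commitment cost, then monthly bills before and after.
print(break_even_months(120_000, 50_000, 35_000))   # 8.0
print(break_even_months(120_000, 50_000, 55_000))   # inf
```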

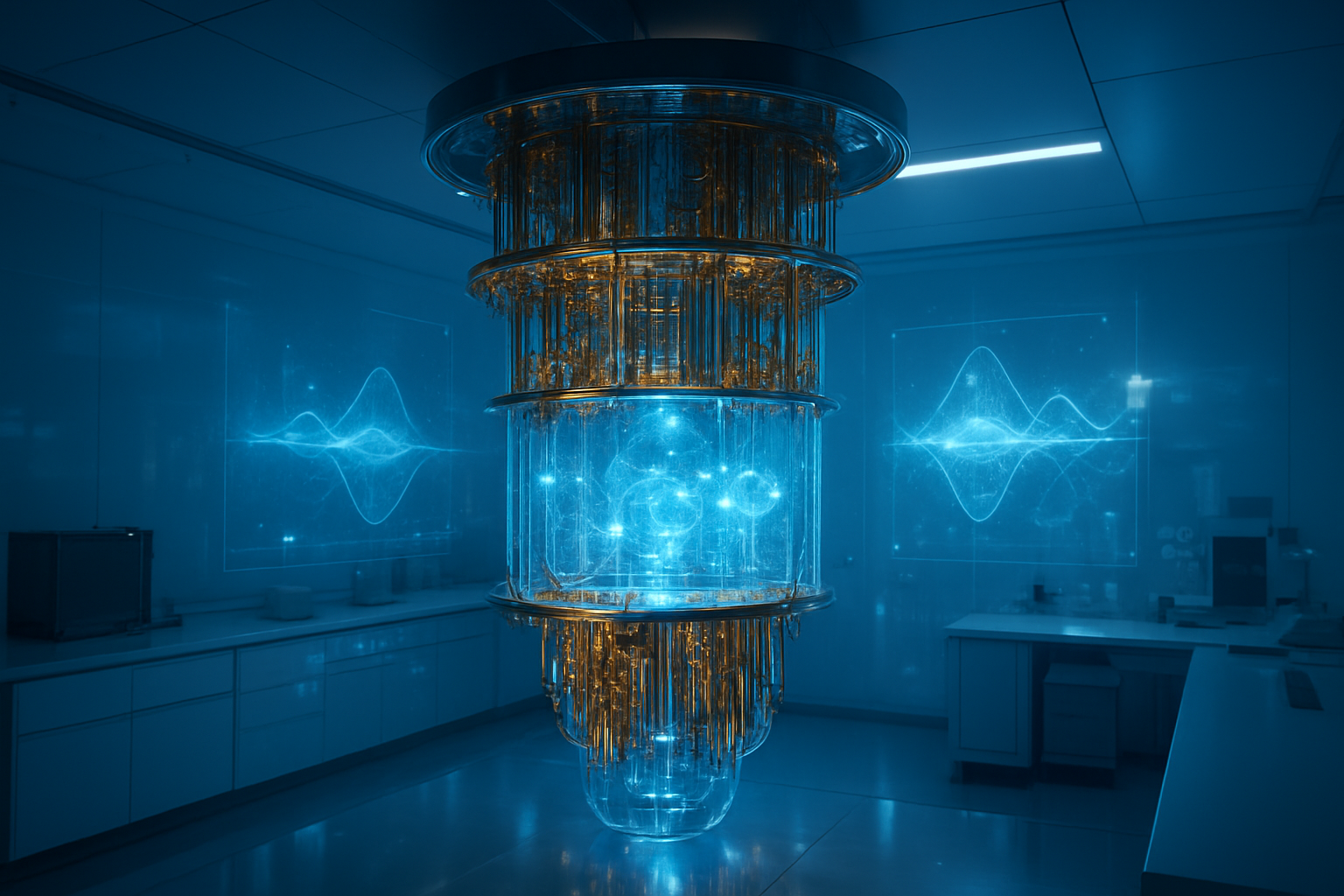

Quantum Computing Principles and Revolutionary Capabilities

Quantum Bits and Superposition for Exponential Processing

Quantum computing works completely differently from classical computing. Instead of bits that are always either 0 or 1, quantum computers use quantum bits (qubits) that can exist in a superposition of 0 and 1. A qubit’s state is described by two amplitudes. Measuring the qubit yields 0 or 1 with probabilities given by the squared magnitudes of those amplitudes. The familiar analogy is a spinning coin that is neither heads nor tails until it lands. However, amplitudes can also interfere with one another, which the coin picture misses.

Superposition scales in a striking way. A classical register of 8 bits holds exactly one of its 256 possible values at any moment. The state of 8 qubits, by contrast, is described by 256 amplitudes at once, and every added qubit doubles that number. This does not make quantum computers exponentially faster at everything. A measurement returns only one of the 256 outcomes, so quantum algorithms must use interference to make the correct answer likely.
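A classical simulation makes the scaling concrete. The sketch below stores one amplitude per basis state, so memory doubles with each added qubit. That doubling is exactly why simulating large quantum systems classically is hard. The sketch also shows the catch: measuring returns a single bit string, chosen with probability equal to its squared amplitude. The helper names are hypothetical.

```python
import math
import random


def uniform_superposition(n_qubits):
    """State after a Hadamard on each qubit of |00...0>: 2**n equal amplitudes."""
    dim = 2 ** n_qubits
    return [1 / math.sqrt(dim)] * dim


def measure(state, rng):
    """Return one basis state as a bit string, sampled with probability |amplitude|^2."""
    n_qubits = len(state).bit_length() - 1
    probabilities = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probabilities)[0]
    return format(outcome, f"0{n_qubits}b")


for n in (8, 9, 30):
    print(n, "qubits ->", 2 ** n, "amplitudes")   # doubles with every qubit

state = uniform_superposition(8)
print(measure(state, random.Random(42)))          # one 8-bit outcome, not all 256
```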

The real power appears when quantum algorithms exploit this structure. Shor’s algorithm factors large numbers superpolynomially faster than the best known classical algorithms. Grover’s algorithm searches an unsorted collection of N items in about √N steps instead of N, a quadratic speedup. These examples show how quantum computers could outperform any CPU, GPU, or TPU on certain problems.
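Grover’s algorithm can be simulated classically for a tiny search space. The sketch below assumes a single marked item and uses real amplitudes. The oracle flips the marked item’s sign, and the diffusion step reflects every amplitude about the mean. After about (π/4)√N iterations, the marked item dominates.

```python
import math


def grover_success_probability(n_qubits, marked):
    """Simulate Grover search over N = 2**n items; return (iterations, P(marked))."""
    n_items = 2 ** n_qubits
    amps = [1 / math.sqrt(n_items)] * n_items            # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amps[marked] = -amps[marked]                     # oracle: flip the marked sign
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]              # diffusion: invert about the mean
    return iterations, amps[marked] ** 2


its, p = grover_success_probability(3, marked=5)
print(its, round(p, 3))   # 2 0.945
```

For N = 8 that is 2 iterations with a success probability of 121/128 ≈ 0.945. At N = 2^20 (about a million items), Grover needs roughly 804 iterations, while classical search needs about 524,288 lookups on average. That is a quadratic gain rather than an exponential one.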

Quantum Entanglement for Complex Problem Solving

Quantum entanglement links qubits in a way Einstein famously called “spooky action at a distance.” When qubits are entangled, their measurement outcomes are correlated more strongly than any classical system allows, regardless of the physical distance between them. This correlation cannot be used to send information faster than light. Entanglement is an essential resource for quantum speedups, and researchers hope to harness it for complex optimization problems that would take classical computers impractically long to solve.
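A two-qubit state-vector sketch shows what entanglement does and does not do. Applying a Hadamard gate to the first qubit and then a CNOT produces the Bell state (|00⟩ + |11⟩)/√2. Each qubit on its own looks like a fair coin, yet the two results always agree. Because no one can choose which outcome appears, the correlation cannot carry a message. This is a classical simulation for illustration, using a fixed random seed.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]   # basis states |q0 q1>, index = 2*q0 + q1


def hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0 of a 2-qubit state vector."""
    s = 1 / math.sqrt(2)
    new = [0.0] * 4
    for q1 in (0, 1):
        a0, a1 = state[q1], state[2 + q1]      # amplitudes with q0 = 0 and q0 = 1
        new[q1] = s * (a0 + a1)
        new[2 + q1] = s * (a0 - a1)
    return new


def cnot_q0_q1(state):
    """CNOT with qubit 0 as control: swap the |10> and |11> amplitudes."""
    return [state[0], state[1], state[3], state[2]]


def sample(state, shots, seed=0):
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in state]     # Born rule: probability = |amplitude|^2
    return rng.choices(LABELS, weights=weights, k=shots)


bell = cnot_q0_q1(hadamard_q0([1.0, 0.0, 0.0, 0.0]))   # start in |00>
counts = {}
for outcome in sample(bell, 1000):
    counts[outcome] = counts.get(outcome, 0) + 1
print(counts)   # only '00' and '11' appear, in roughly equal numbers
```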

Entanglement lets quantum processors work with correlated possibilities across an entire problem space rather than one candidate at a time. In drug discovery, for instance, quantum computers could model molecular interactions by encoding a molecule’s electronic structure in entangled qubits. This could make it possible to simulate chemical reactions that overwhelm classical processors.

This capability makes quantum computing potentially valuable for:

- Cryptography and security applications

- Financial portfolio optimization

- Supply chain logistics

- Machine learning pattern recognition

- Climate modeling simulations

Current Limitations and Experimental Stage Challenges

Quantum computing remains largely experimental despite its revolutionary potential. Current quantum processors operate under extremely controlled conditions. Superconducting machines, for example, are cooled to within a small fraction of a degree of absolute zero (-273.15°C) to maintain quantum states. With their refrigeration and control systems, these machines are room-sized installations that cost millions of dollars and require specialized facilities.

The technology faces several practical barriers:

- Limited qubit stability: Quantum states are incredibly fragile and collapse easily

- High error rates: Two-qubit gate error rates on current hardware are typically around 0.1-1%, many orders of magnitude higher than in classical logic

- Restricted problem domains: Only specific types of problems benefit from quantum acceleration

- Programming complexity: Quantum algorithms require completely different approaches than classical programming

Superconducting qubits typically stay coherent for only tens to hundreds of microseconds before decoherence sets in. IBM has built processors with well over 100 qubits; its 127-qubit Eagle chip appeared in 2021. Google’s 53-qubit Sycamore processor was used for a 2019 quantum supremacy demonstration. Practical applications, however, still require thousands or millions of stable qubits.

Error Correction and Decoherence Management

Decoherence represents quantum computing’s biggest technical challenge. Environmental interference from electromagnetic radiation, vibrations, or temperature fluctuations causes qubits to lose their quantum properties and behave like classical bits. This happens incredibly quickly – often within microseconds.

Quantum error correction addresses this problem by using multiple physical qubits to represent one logical qubit. Current surface-code estimates call for roughly 1,000 or more physical qubits per error-corrected logical qubit, depending on the hardware’s error rate and the reliability required. This overhead means most of a future fault-tolerant machine’s qubits would be spent fighting errors rather than solving problems.
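The overhead can be estimated with widely used rules of thumb for the surface code. A distance-d patch uses about 2d² - 1 physical qubits. Its logical error rate falls roughly as 0.1 × (p/p_th)^((d+1)/2), where p is the physical error rate and p_th ≈ 1% is the threshold. These constants are approximations assumed for illustration, not any vendor’s figures.

```python
def surface_code_estimate(p_physical, target_logical, p_threshold=0.01, prefactor=0.1):
    """Smallest odd code distance d meeting a target logical error rate, plus
    the physical qubits per logical qubit (d*d data + d*d - 1 measurement qubits).

    Rule of thumb assumed: p_logical ~ prefactor * (p_physical / p_threshold) ** ((d + 1) / 2)
    """
    if p_physical >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * (p_physical / p_threshold) ** ((d + 1) // 2) > target_logical:
        d += 2
    return d, 2 * d * d - 1


print(surface_code_estimate(2e-3, 1e-9))   # (23, 1057)
```

Take a physical error rate of 0.2% and a target of one logical error per billion operations. The sketch lands at d = 23 and 1,057 physical qubits per logical qubit, in line with the ~1,000 figures in the table below.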

Leading quantum computing companies employ different error correction strategies:

| Company | Error Correction Approach | Physical Qubits per Logical Qubit |
|---|---|---|
| IBM | Surface code topology | ~1,000 |
|  | Surface code with optimized connectivity | ~1,000 |
| IonQ | Trapped ion natural error correction | ~100 |
| Microsoft | Topological qubits (theoretical) | ~10 |

Advanced error correction techniques include real-time quantum error correction. Classical computers repeatedly measure auxiliary (ancilla) qubits to detect errors and correct them, without directly measuring, and thereby collapsing, the encoded data. Companies like Microsoft are developing topological qubits that would be inherently more stable, potentially reducing error correction overhead significantly.

The race to achieve “fault-tolerant” quantum computing – where error correction works faster than errors accumulate – represents the next major milestone in quantum technology development.

Performance Comparison Across Different Computing Scenarios

Gaming and Graphics Workloads Performance Metrics

GPUs dominate the gaming landscape, delivering frame rates that CPUs simply can’t match. A card like the RTX 4090 can run many games at 4K above 120 FPS. Even high-end CPUs would struggle to maintain 60 FPS at 1080p if forced to render without a GPU. The parallel architecture of GPUs excels at shading millions of pixels simultaneously, making them ideal for real-time graphics.

CPU vs GPU performance in gaming scenarios:

| Workload Type | CPU Performance | GPU Performance | Winner |
|---|---|---|---|
| 3D Rendering | 15-30 FPS | 60-240 FPS | GPU |
| Ray Tracing | 5-10 FPS | 30-120 FPS | GPU |
| Physics Simulation | Moderate | Excellent | GPU |
| Game Logic | Excellent | Poor | CPU |

CPUs handle game logic, AI behavior, and system management while GPUs crunch through massive pixel calculations. This division of labor creates the sweet spot for gaming performance. TPUs and quantum computers don’t play in this space at all – they’re built for completely different purposes.

Scientific Computing and Simulation Requirements

Scientific workloads tell a different story. Complex simulations like weather modeling or molecular dynamics need different strengths from each processor type. CPUs excel at sequential calculations where each step depends on the previous result. Climate models running on supercomputers often use thousands of CPU cores working together.

GPUs shine in scientific computing when problems can be broken into parallel chunks. Computational fluid dynamics, protein folding simulations, and astronomical data processing see massive speedups on GPU clusters. NVIDIA’s data center GPUs (formerly sold under the Tesla brand) and AMD’s Instinct series can deliver 10-100x performance improvements over CPU-only solutions for well-suited workloads.

Performance comparison for scientific applications:

- Finite Element Analysis: GPUs provide 20-50x speedup

- Monte Carlo Simulations: GPUs deliver 15-30x faster results

- Molecular Dynamics: GPU acceleration shows 25-100x improvement

- Quantum Mechanics Calculations: CPUs often preferred for precision

Quantum computers may represent the future for certain scientific problems. For specific challenges, like factoring large numbers or simulating quantum systems, quantum algorithms promise exponential speedups over the best known classical methods. However, current quantum systems remain experimental and limited to very specific use cases.

Artificial Intelligence Training and Inference Speed

AI workloads create the clearest distinction between processor types. TPUs were built specifically for machine learning, optimizing every circuit for tensor operations. Google’s TPU v4 can deliver 275 teraflops of bfloat16 performance, crushing traditional processors in AI training scenarios.

AI performance breakdown:

| Task | CPU | GPU | TPU | Best Choice |
|---|---|---|---|---|
| Training Large Models | Slow | Fast | Fastest | TPU/GPU |
| Real-time Inference | Good | Better | Best | TPU |
| Model Development | Excellent | Good | Limited | CPU/GPU |
| Edge AI | Good | Power-hungry | Efficient | TPU |

GPUs remain the workhorse for most AI development. CUDA ecosystem maturity and broad framework support make them accessible for researchers and companies. Training a GPT-style model on CPUs would take months instead of days on GPU clusters.

TPUs excel at production AI inference where speed and efficiency matter most. Google’s search, recommendation, and language model services run largely on TPU infrastructure. In Google’s 2017 evaluation of its first-generation TPU, the chip ran production neural network inference 15-30x faster than a contemporary GPU or CPU.

Quantum computers might revolutionize certain AI algorithms in the future. Quantum machine learning algorithms could potentially solve optimization problems that classical computers find intractable, but practical applications remain years away.

Database Operations and Web Server Performance

Traditional database operations favor CPUs due to their sequential processing strengths and complex instruction handling. Most database queries involve intricate logic, conditional statements, and memory management that CPUs handle efficiently. High-frequency trading systems and OLTP databases rely on CPU single-thread performance and large cache memories.

Database performance characteristics:

- OLTP Systems: CPUs with high clock speeds and large caches perform best

- Data Warehousing: GPU acceleration can speed up analytical queries 10-50x

- In-memory Databases: CPU memory bandwidth becomes the limiting factor

- Graph Databases: GPUs excel at traversing large connected datasets

Web servers processing thousands of simultaneous connections benefit from CPU multi-threading capabilities. Modern web applications serving dynamic content need the flexibility that CPU architectures provide. However, specific workloads like image processing, video transcoding, or machine learning inference within web applications can leverage GPU acceleration.

GPUs increasingly play a role in big data analytics and business intelligence. Apache Spark with GPU acceleration, the RAPIDS libraries, and GPU-native SQL engines such as BlazingSQL (no longer actively developed) can deliver dramatic performance improvements for analytical workloads. Complex aggregations across billions of records that take hours on CPU clusters can complete in minutes on GPU systems.

TPUs don’t typically serve database workloads directly, but they power the AI features embedded in modern applications – recommendation engines, search relevance, and predictive analytics that enhance user experiences.

Optimal Use Cases for Each Processing Technology

When CPU Single-Threaded Performance Matters Most

CPUs excel when tasks require complex decision-making, sequential processing, and high single-core performance. Software that can’t be broken down into parallel chunks benefits most from CPU architecture’s sophisticated branch prediction and out-of-order execution capabilities.

Gaming is a prime example where the CPU still carries essential work. While graphics cards handle rendering, CPUs manage game logic, physics calculations, and AI behaviors that must happen in sequence. Modern games with complex AI systems, real-time strategy elements, or detailed physics simulations depend heavily on strong single-threaded CPU performance.

Database operations and web servers also leverage CPU strengths. Many requests can be served in parallel across CPU cores, but each individual query or request involves branching logic, irregular memory access, and decision trees. That kind of work does not map well onto GPU-style data parallelism, and CPUs handle it efficiently through their large cache hierarchies and advanced instruction sets.

Legacy software applications built before parallel processing became mainstream run exclusively on CPU cores. Many business-critical applications, financial modeling software, and scientific simulations still rely on single-threaded execution paths where CPU performance directly impacts productivity.

Python, JavaScript, and other interpreted languages show significant performance gains on faster CPU cores. In CPython, for instance, the Global Interpreter Lock prevents multiple threads from executing Python bytecode at the same time. As a result, CPU-bound multithreaded Python code is limited by single-core speed unless it uses multiple processes or native extensions.

GPU Applications for Cryptocurrency Mining and Video Editing

Graphics processing units shine in scenarios requiring massive parallel processing power. Their architecture, featuring thousands of smaller cores, makes them ideal for tasks that can be broken into many simultaneous operations.

Cryptocurrency mining clearly demonstrates GPU parallel computing advantages. Proof-of-work algorithms such as Ethash, which Ethereum used before switching to proof-of-stake in 2022, require enormous numbers of independent hash calculations. Each GPU core processes different hash attempts independently, dramatically outperforming CPUs in mining efficiency. GPU mining rigs often combine multiple high-end graphics cards to maximize hash rates and profitability.
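The independence of hash attempts is what makes mining parallel. The toy sketch below uses a single SHA-256 over a made-up block header and a low difficulty (leading hex zeros). It does not follow Ethash or any real coin’s rules. The nonce space is split into chunks the way a GPU spreads attempts across cores, though here the chunks run one after another.

```python
import hashlib


def search_nonces(header, start, stop, difficulty):
    """Try nonces in [start, stop); return (nonce, digest) for the first SHA-256
    digest that begins with `difficulty` hex zeros, or None if none does."""
    target = "0" * difficulty
    for nonce in range(start, stop):
        digest = hashlib.sha256(header + nonce.to_bytes(8, "little")).hexdigest()
        if digest.startswith(target):
            return nonce, digest
    return None


HEADER = b"toy block header"          # made-up data, not a real block format
# Independent nonce ranges: a GPU would hand these to different cores at the same
# time; this sketch simply checks them one after another.
chunks = [(i * 20_000, (i + 1) * 20_000) for i in range(8)]
found = None
for start, stop in chunks:
    found = search_nonces(HEADER, start, stop, difficulty=3)
    if found:
        break
print(found)
```

No chunk needs any result from another, so adding more cores scales the hash rate almost linearly.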

Video editing and rendering showcase another GPU strength area. When applying effects, color correction, or encoding video files, graphics cards process multiple pixels simultaneously. Adobe Premiere Pro, DaVinci Resolve, and other professional editing software leverage CUDA and OpenCL frameworks to accelerate rendering times from hours to minutes.

3D modeling and animation benefit enormously from GPU acceleration. Blender, Maya, and Cinema 4D use graphics cards for viewport rendering, simulation calculations, and final frame output. Complex particle systems, fluid dynamics, and lighting calculations that would take days on CPUs complete in hours with proper GPU utilization.

Machine learning training represents a growing GPU application area. Neural networks require millions of mathematical operations that can run in parallel. Training deep learning models on image recognition, natural language processing, or computer vision tasks shows 10-100x speedups compared to CPU-only approaches.

TPU Advantages in Machine Learning Production Environments

Tensor Processing Units deliver specialized advantages for AI workloads, particularly in production environments where inference speed and energy efficiency matter most. Google originally designed these chips around TensorFlow. Today they are programmed through the XLA compiler from frameworks including TensorFlow, JAX, and PyTorch, which generates optimized code paths for machine learning computations.

TPUs excel at the matrix multiplications fundamental to neural networks. Their systolic array architecture processes data in waves, moving information through the chip in synchronized patterns that maximize throughput for tensor operations. This design philosophy differs significantly from both CPU and GPU approaches.

Production machine learning deployments benefit from TPUs in several ways. For well-suited models, inference latency can drop significantly compared to GPU solutions, enabling real-time applications like voice recognition, image classification, and recommendation systems. Google uses TPUs to power search results, translation services, and content recommendations at massive scale.

Energy efficiency becomes crucial when running AI models continuously. TPUs consume less power per inference operation compared to GPUs, reducing operational costs in data centers. This efficiency advantage compounds when serving millions of requests daily across distributed systems.

These optimizations carry over to specific model types. Transformer architectures used in language models, convolutional neural networks for image processing, and recurrent networks for sequence data all show substantial performance improvements on TPU hardware compared to traditional processors.

Edge deployment scenarios also favor TPUs for certain applications. Edge TPUs bring AI processing closer to data sources, reducing latency and bandwidth requirements for applications like autonomous vehicles, smart cameras, and IoT devices requiring real-time decision-making capabilities.

Quantum Computing Potential for Cryptography and Optimization

Quantum computing represents a fundamental departure from classical computing. Quantum computers use quantum mechanical phenomena like superposition and entanglement to process information in ways impossible for traditional processors.

Cryptography is where quantum computing's potential impact is most widely discussed. Shor's algorithm could break RSA encryption, whose security rests on the difficulty of factoring large numbers: a sufficiently powerful quantum computer could factor them in polynomial time, superpolynomially faster than the best known classical algorithms. That would threaten current internet security protocols, which is why new quantum-resistant (post-quantum) encryption standards are being adopted.
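The quantum part of Shor's algorithm is order finding: given a number a that shares no factor with N, find the smallest r with a^r ≡ 1 (mod N). Turning r into factors is ordinary classical number theory. The sketch below substitutes a brute-force classical loop for the quantum step, which is precisely the part that takes exponential time on a classical machine, so it only works for toy values like N = 15. Function names are hypothetical.

```python
import math


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This loop is the step Shor's algorithm replaces with quantum period
    finding; classically it takes time exponential in the bit length of n.
    """
    if n < 2 or math.gcd(a, n) != 1:
        raise ValueError("need n >= 2 and a coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Classical post-processing from Shor's algorithm: turn the order of a into factors."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None              # odd order: retry with a different a
    x = pow(a, r // 2, n)        # a square root of 1 modulo n
    if x == n - 1:
        return None              # trivial root (x = -1 mod n): retry with a different a
    return math.gcd(x - 1, n), math.gcd(x + 1, n)


print(find_order(7, 15))         # 4
print(factor_from_order(7, 15))  # (3, 5)
```

For 7 mod 15 the powers cycle 7, 4, 13, 1, so r = 4, x = 7² mod 15 = 4, and gcd(3, 15) and gcd(5, 15) recover the factors 3 and 5.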

Optimization problems are another promising quantum computing application area. Traveling salesman problems, portfolio optimization, and supply chain logistics involve searching through massive solution spaces. Quantum algorithms like QAOA (Quantum Approximate Optimization Algorithm) show potential for finding good solutions faster than classical optimization methods, though whether they can beat the best classical solvers in practice remains an open research question.
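To see why these solution spaces get so large, consider a brute-force solver for a small symmetric traveling salesman problem. With n cities there are (n-1)!/2 distinct round trips, so exhaustive search becomes hopeless long before n reaches the sizes real logistics problems involve. This is a purely classical sketch with a made-up distance matrix; it does not implement QAOA, which would instead encode the cost function in a parameterized quantum circuit whose parameters are tuned by a classical optimizer.

```python
import itertools
import math


def tour_length(tour, dist):
    """Total distance of a closed tour that returns to its starting city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))


def brute_force_tsp(dist):
    """Check every tour starting at city 0; returns (best_length, best_tour)."""
    n = len(dist)
    best = None
    for perm in itertools.permutations(range(1, n)):  # fixing city 0 skips rotations
        tour = (0,) + perm
        length = tour_length(tour, dist)
        if best is None or length < best[0]:
            best = (length, tour)
    return best


def distinct_tours(n):
    """Number of distinct round trips through n >= 3 cities with symmetric distances."""
    return math.factorial(n - 1) // 2


# Hypothetical example: four cities at the corners of a unit square (Manhattan distances).
dist = [
    [0, 1, 2, 1],
    [1, 0, 1, 2],
    [2, 1, 0, 1],
    [1, 2, 1, 0],
]
print(brute_force_tsp(dist))  # (4, (0, 1, 2, 3))
for n in (5, 10, 15, 20):
    print(n, "cities:", f"{distinct_tours(n):,}", "distinct tours")
```

Ten cities give 181,440 distinct tours; twenty give about 6 × 10^16, which is why practical solvers rely on heuristics rather than enumeration.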

Drug discovery and molecular simulation could benefit from quantum computing’s ability to model quantum mechanical systems naturally. Protein folding, chemical reaction pathways, and material science applications involve quantum effects that classical computers can only approximate. Quantum processors might simulate these systems directly, accelerating pharmaceutical research and materials development.
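One way to see why classical computers can only approximate these systems: representing an n-qubit quantum state exactly takes 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (a double-precision complex number) and computes the memory an exact state-vector simulation would need; the function name is hypothetical.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory for an exact n-qubit state: 2**n amplitudes, 16 bytes each as complex128."""
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.3f} GiB")
```

Thirty qubits already need 16 GiB, and every additional qubit doubles the requirement, so an exact 50-qubit state would need 16 PiB. Classical chemistry codes therefore rely on approximations, while a quantum processor holds such states natively.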

Financial modeling and risk analysis present additional quantum opportunities. Monte Carlo simulations, derivative pricing, and portfolio optimization require extensive computational resources. Quantum amplitude estimation could provide a quadratic speedup for the Monte Carlo portions of these calculations, enabling more sophisticated risk models and trading strategies.
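The quadratic speedup describes how error shrinks with effort. A classical Monte Carlo estimate has a standard error proportional to σ/√N, so reaching an error ε takes on the order of 1/ε² samples; amplitude estimation needs on the order of 1/ε queries in theory, ignoring constant factors and the overhead of error-corrected hardware. The sketch below prices a European call option by classical Monte Carlo under a geometric Brownian motion assumption and checks it against the Black-Scholes closed form. The option parameters are arbitrary illustrative values and the function names are hypothetical.

```python
import math
import random


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(s0, strike, rate, sigma, t):
    """Closed-form European call price, used here as the exact reference."""
    d1 = (math.log(s0 / strike) + (rate + 0.5 * sigma ** 2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s0 * norm_cdf(d1) - strike * math.exp(-rate * t) * norm_cdf(d2)


def monte_carlo_call(s0, strike, rate, sigma, t, n_paths, seed=0):
    """Estimate the same price by simulating terminal prices; returns (estimate, standard error)."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * sigma ** 2) * t
    vol = sigma * math.sqrt(t)
    discount = math.exp(-rate * t)
    payoffs = [
        discount * max(s0 * math.exp(drift + vol * rng.gauss(0.0, 1.0)) - strike, 0.0)
        for _ in range(n_paths)
    ]
    mean = sum(payoffs) / n_paths
    variance = sum((p - mean) ** 2 for p in payoffs) / (n_paths - 1)
    return mean, math.sqrt(variance / n_paths)  # standard error shrinks like 1/sqrt(N)


params = dict(s0=100.0, strike=100.0, rate=0.05, sigma=0.2, t=1.0)
print(f"exact: {black_scholes_call(**params):.4f}")
for n in (1_000, 4_000, 16_000, 64_000):
    estimate, stderr = monte_carlo_call(**params, n_paths=n)
    print(f"{n:>6} paths: {estimate:.4f} +/- {stderr:.4f}")
```

Each fourfold increase in paths only halves the standard error; that 1/√N scaling is what amplitude estimation aims to improve to roughly 1/N.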

Machine learning intersects with quantum computing through quantum machine learning algorithms. Quantum neural networks, quantum support vector machines, and quantum clustering algorithms might process certain data patterns more efficiently than classical AI approaches, though this field remains largely experimental with limited practical applications currently available.

Cost Analysis and Investment Considerations

Initial Hardware Investment and Setup Costs

The upfront costs for different processing technologies vary dramatically, making budget planning crucial for any organization. CPUs typically offer the most accessible entry point, with consumer-grade processors starting around $100-$300 and enterprise solutions ranging from $1,000-$10,000. A standard workstation equipped with high-end CPUs can cost between $2,000-$15,000 depending on specifications.

GPUs present a wider investment spectrum. Consumer graphics cards suitable for AI workloads start at $500-$1,500, while professional-grade GPUs like NVIDIA’s A100 or H100 can cost $10,000-$40,000 each. Building a comprehensive GPU cluster for machine learning requires substantial capital, often reaching $100,000-$500,000 for enterprise-level implementations.

Data center TPUs are not sold as standalone hardware; access follows Google's cloud-based pricing model, which eliminates upfront hardware costs. However, organizations that reserve dedicated TPU pods for long periods can face commitments exceeding $1 million, and TPU v4 pods can cost several million dollars, making them viable only for large-scale AI operations.

Quantum computing represents the highest investment tier. IBM’s quantum systems start at several million dollars, while access through cloud services begins at $1.60 per second of quantum processor time. The supporting infrastructure, including specialized cooling systems and isolation chambers, adds significant overhead costs.

| Technology | Entry-Level Cost | Enterprise-Level Cost |
|---|---|---|
| CPU | $100-$300 | $2,000-$15,000 |
| GPU | $500-$1,500 | $100,000-$500,000 |
| TPU | Cloud-based | $1M+ for pods |
| Quantum | Cloud access | $5M+ for systems |

Ongoing Operational Expenses and Maintenance

Power consumption is often the most significant ongoing expense across all computing technologies. CPUs generally consume 15-250 watts, translating to modest electricity bills for most applications. Modern CPUs also benefit from mature power management features that reduce consumption during idle periods.

GPUs demand substantially more power, with high-end models consuming 300-700 watts each. Large GPU farms can require megawatts of power, creating monthly electricity bills reaching tens of thousands of dollars. Cooling costs add another 30-50% to power expenses, as GPUs generate significant heat requiring robust ventilation systems.
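To see how wattage turns into a bill, here is a minimal sketch that converts a device's average power draw into an annual electricity cost, with cooling modeled as a percentage overhead on top of the power bill (matching the 30-50% range above). The $0.12/kWh rate and the 100% utilization default are hypothetical placeholders; substitute your own tariff and duty cycle.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(watts, price_per_kwh, utilization=1.0, cooling_overhead=0.0):
    """Yearly electricity cost for one device.

    utilization: fraction of the year the device draws `watts` on average.
    cooling_overhead: extra spend on cooling as a fraction of the power bill
    (for example 0.4 for a 40% overhead).
    """
    kwh = watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh * price_per_kwh * (1 + cooling_overhead)


PRICE = 0.12  # hypothetical electricity rate in $/kWh
print(f"250 W CPU: ${annual_energy_cost(250, PRICE):,.2f}")
print(f"700 W GPU: ${annual_energy_cost(700, PRICE):,.2f}")
print(f"700 W GPU + 40% cooling: ${annual_energy_cost(700, PRICE, cooling_overhead=0.4):,.2f}")
```

At these assumed settings a single 700 W GPU costs about $1,030 a year including cooling, so a 1,000-GPU farm (0.7 MW of GPU load) would spend roughly $1 million a year on power and cooling.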

TPU operational costs depend entirely on cloud usage patterns. Google charges approximately $1.35-$8.00 per TPU hour, making costs predictable but potentially expensive for continuous workloads. Organizations running 24/7 AI applications might face monthly bills exceeding $50,000 for substantial TPU usage.
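The monthly figure follows from simple arithmetic: hourly rate × number of units × hours in a month. The sketch below assumes an average month of 730 hours of 24/7 use and treats the quoted per-hour rates as applying to each rented unit; actual TPU billing units and rates vary by generation and region, and the function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours per year / 12


def monthly_cloud_cost(rate_per_hour, units, hours=HOURS_PER_MONTH):
    """Bill for `units` rented devices running `hours` hours at a flat hourly rate."""
    return rate_per_hour * units * hours


def units_to_exceed(budget, rate_per_hour, hours=HOURS_PER_MONTH):
    """Smallest whole number of always-on units whose monthly bill exceeds `budget`."""
    per_unit = rate_per_hour * hours
    return int(budget // per_unit) + 1


for rate in (1.35, 8.00):
    n = units_to_exceed(50_000, rate)
    print(f"${rate:.2f}/hour: {n} units -> ${monthly_cloud_cost(rate, n):,.2f}/month")
```

At the top of the quoted range, nine units running around the clock pass $50,000 a month; at the bottom of the range it takes 51.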

Quantum computing maintenance involves specialized expertise and materials. Dilution refrigerators required for quantum processors cost $200,000-$500,000 and consume substantial power continuously. Helium costs alone can reach $10,000-$30,000 annually per system.

Maintenance complexity varies significantly. CPUs require minimal specialized maintenance beyond standard IT support. GPUs need regular cleaning and thermal management but remain manageable with existing IT infrastructure. Quantum systems demand PhD-level expertise and specialized service contracts, often costing $100,000+ annually.

Scalability Options for Growing Computing Needs

CPU scaling follows traditional server expansion models. Organizations can add processors, increase core counts, or deploy additional servers relatively easily. Cloud providers offer flexible CPU scaling with pay-as-you-grow pricing models, making expansion straightforward for most applications.

GPU scalability presents unique challenges and opportunities. Adding GPUs to existing systems requires adequate power supplies, cooling capacity, and PCIe slots. Cloud GPU services provide more flexible scaling options, though costs escalate quickly. Multi-GPU configurations require careful software optimization to approach linear performance scaling.
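Why does linear scaling take careful optimization? Amdahl's law gives the best-case speedup when only a fraction p of a fixed-size job parallelizes across N devices: speedup = 1 / ((1 - p) + p / N). The sketch below implements only that formula; it ignores communication overhead between GPUs, which makes real-world scaling worse, and the 95% parallel fraction is a hypothetical example.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Best-case speedup when `parallel_fraction` of a fixed-size job splits evenly across workers."""
    if not 0.0 <= parallel_fraction <= 1.0 or workers < 1:
        raise ValueError("need 0 <= parallel_fraction <= 1 and workers >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


for gpus in (1, 2, 4, 8, 16, 64):
    print(f"{gpus:>2} GPUs: {amdahl_speedup(0.95, gpus):.2f}x")
```

With 95% of the work parallelized, eight GPUs yield a speedup of only about 5.9, and no number of GPUs can push it past 20, because the serial 5% never shrinks.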

TPU scaling happens exclusively through Google Cloud Platform, which offers horizontal scaling across TPU slices and pods. TPU pods provide massive parallel processing capabilities, but organizations become locked into Google's ecosystem. Scaling TPU usage can happen quickly, subject to quota and regional capacity, though budget implications require careful monitoring.

Quantum computing scaling remains limited by current technology constraints. Most quantum systems offer fixed qubit counts, with scaling achieved through hybrid classical-quantum algorithms rather than adding more quantum hardware. Future quantum networking may enable distributed quantum computing, but current scalability options remain restricted.

The right processor choice depends heavily on projected growth patterns. Organizations expecting gradual expansion might start with CPU-based solutions and migrate selectively. AI-focused companies often benefit from immediate GPU investment with cloud-based scaling options. TPU adoption makes sense for Google Cloud-committed organizations with substantial machine learning workloads.

Cost-effective scaling strategies often combine multiple technologies. Many organizations use CPUs for general computing, GPUs for training AI models, and TPUs for inference workloads, optimizing costs across different use cases while maintaining flexibility for future growth.

Understanding how different processors work isn't just tech trivia; it's about picking the right tool for the right job. CPUs excel at general tasks and complex decision-making, while GPUs shine when you need to crunch massive amounts of data simultaneously. TPUs take AI and machine learning workloads to the next level with their specialized design, and quantum computers promise to solve certain problems that would take traditional computers centuries.

Your choice really comes down to what you're trying to accomplish and how much you're willing to spend. For most everyday business needs, a solid CPU will do the trick. If you're diving into graphics, gaming, or data science, GPUs are your best bet. Companies serious about AI should consider TPUs for their machine learning projects. Quantum computing is still experimental for most applications, but keeping an eye on developments could give you a competitive edge down the road. The key is matching your specific needs with the processor that handles those tasks most efficiently and fits your budget.