Ever watched your ETL pipeline break at 2 AM, sending cascading failures through your data ecosystem while you frantically debug in your pajamas? You’re not alone.

Data engineers everywhere are discovering that traditional ETL approaches simply can’t handle today’s complex data demands. Building robust ETL pipelines with Snowflake has become essential for organizations drowning in data but starving for insights.

In this guide, we’ll walk through exactly how modern teams are constructing resilient pipelines that don’t collapse when your data volume spikes or your source systems hiccup. You’ll learn practical approaches that work in real environments—not just theoretical best practices.

But first, let’s address the elephant in the room: why do so many carefully designed ETL processes still fail spectacularly in production?

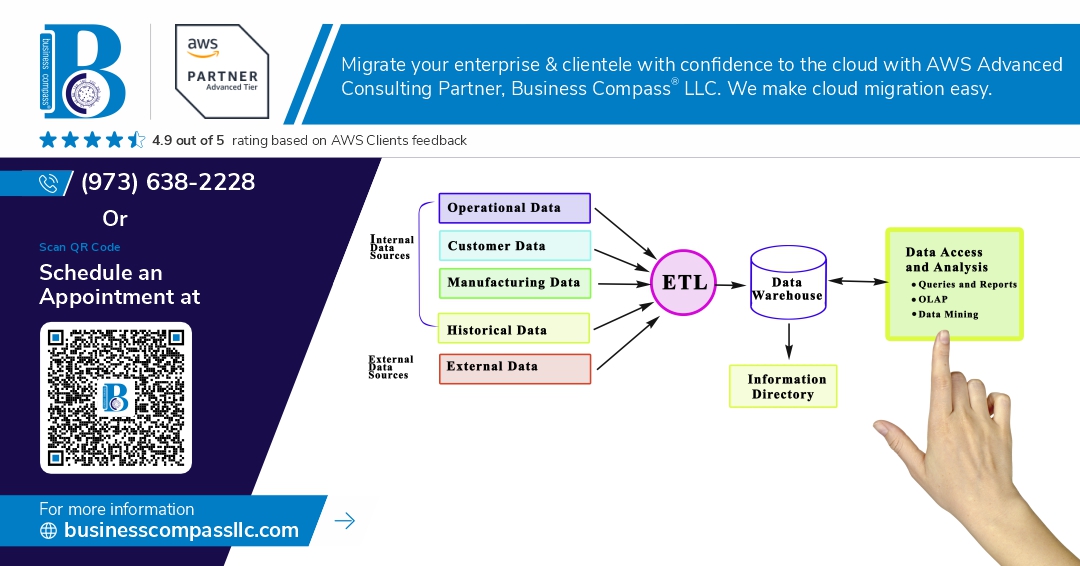

Understanding ETL Fundamentals in Modern Data Environments

A. Key components of effective ETL processes

Ever tried building a house without a foundation? That’s ETL without solid components. Effective ETL processes require robust data extraction methods, transformation logic that handles exceptions gracefully, and loading procedures that maintain data integrity. The real magic happens when these components work together seamlessly.
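
To make those three components concrete, here is a minimal sketch in plain Python. The column names (`order_id`, `amount`) and the in-memory `warehouse_table` dictionary are assumptions made up for illustration; a real pipeline would load into an actual table. The point is the shape: bad rows get routed to a reject list instead of crashing the run, and the load step is keyed so reruns don’t duplicate data.

```python
import csv
import io


def extract(raw_csv: str) -> list:
    """Extract: parse CSV text into one dictionary per row."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Transform: cast types, routing rows that fail to a reject list."""
    good, rejected = [], []
    for row in rows:
        try:
            good.append({
                "order_id": int(row["order_id"]),
                "amount": float(row["amount"]),
            })
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the bad row and the reason so it can be inspected later.
            rejected.append({"row": row, "error": str(exc)})
    return good, rejected


def load(rows, target: dict) -> int:
    """Load: upsert keyed on order_id, so a rerun overwrites instead of duplicating."""
    for row in rows:
        target[row["order_id"]] = row
    return len(rows)


# Hypothetical sample input: the second row has an unparseable amount.
RAW = "order_id,amount\n1,19.99\n2,not-a-number\n3,5.00\n"

warehouse_table = {}  # stand-in for a real target table
good, rejected = transform(extract(RAW))
loaded = load(good, warehouse_table)
print(f"loaded={loaded} rejected={len(rejected)}")
```

Running the load a second time with the same rows leaves `warehouse_table` unchanged, which is the integrity property you want when a job gets retried at 2 AM.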

Snowflake’s Core Capabilities for ETL Processing

A. Leveraging Snowflake’s separation of storage and compute

Snowflake’s architecture is a game-changer for ETL workflows. Because storage and compute are completely separate, you can scale up processing power during heavy transformation jobs, then scale down or suspend the warehouse when idle. You pay for compute only while a warehouse is running, instead of maintaining expensive always-on infrastructure that sits unused most nights and weekends.
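
One way to picture this: wrap a heavy job in statements that resize the warehouse before and after. The sketch below only builds the SQL strings; it assumes you would send them through whatever Snowflake client you already use. The warehouse name `ETL_WH` and the stored procedure `run_nightly_transform` are hypothetical, and the size list is a subset picked for the example.

```python
import re

# A subset of warehouse size keywords, chosen for this sketch.
SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE")


def _identifier(name: str) -> str:
    """Accept only simple unquoted identifiers so a name cannot inject SQL."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_$]*", name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def resize_sql(warehouse: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement that changes the warehouse size."""
    if size not in SIZES:
        raise ValueError(f"unknown size: {size!r}")
    return f"ALTER WAREHOUSE {_identifier(warehouse)} SET WAREHOUSE_SIZE = '{size}'"


def auto_suspend_sql(warehouse: str, seconds: int) -> str:
    """Build a statement that suspends the warehouse after `seconds` of inactivity."""
    return f"ALTER WAREHOUSE {_identifier(warehouse)} SET AUTO_SUSPEND = {int(seconds)}"


def plan_heavy_job(warehouse, job_sql, job_size="LARGE", idle_size="XSMALL"):
    """Scale up, run the job, scale back down, and make sure auto-suspend is on."""
    return [
        resize_sql(warehouse, job_size),
        job_sql,
        resize_sql(warehouse, idle_size),
        auto_suspend_sql(warehouse, 60),
    ]


# Hypothetical warehouse and procedure names, for illustration only.
plan = plan_heavy_job("ETL_WH", "CALL run_nightly_transform()")
for statement in plan:
    print(statement)
```

Keeping the statements as data makes the plan easy to log and review before anything touches a real account.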

Designing Robust Data Extraction Strategies

A. Connecting to diverse data sources

Ever tried juggling data from ten different places at once? Modern ETL pipelines need to connect with everything from legacy databases to cloud APIs. Snowflake helps here: external stages read files directly from Amazon S3, Google Cloud Storage, and Azure Blob Storage, while native connectors and partner integration tools cover many SQL databases and SaaS platforms. That removes much of the integration work that used to take weeks.

Building Efficient Transformation Pipelines

A. SQL-based transformations in Snowflake

Snowflake’s SQL capabilities make transformations a breeze compared to traditional tools. You can process millions of rows using simple SQL commands right where your data lives. No more shuffling data between systems just to transform it. That’s the beauty of modern ELT: load the raw data first, then transform it inside the warehouse.
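
Here is a small sketch of that "transform where the data lives" idea. It uses Python’s built-in `sqlite3` purely as a stand-in so the example runs anywhere; it is not Snowflake, and the table and column names are invented for illustration. The `CREATE TABLE ... AS SELECT` pattern itself carries over to Snowflake SQL.

```python
import sqlite3

# In-memory SQLite database standing in for the warehouse (an assumption
# made so the sketch is runnable without a Snowflake account).
conn = sqlite3.connect(":memory:")

# Step 1 (load): raw data lands in the database untransformed.
conn.execute(
    "CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount REAL, status TEXT)"
)
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [
        (1, "acme", 120.0, "complete"),
        (2, "acme", 80.0, "complete"),
        (3, "globex", 50.0, "cancelled"),
        (4, "globex", 200.0, "complete"),
    ],
)

# Step 2 (transform): the aggregation runs inside the database, so no rows
# are pulled out to an external tool and pushed back in.
conn.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT customer, COUNT(*) AS orders, SUM(amount) AS revenue
    FROM raw_orders
    WHERE status = 'complete'
    GROUP BY customer
    """
)

result = conn.execute(
    "SELECT customer, orders, revenue FROM customer_revenue ORDER BY customer"
).fetchall()
print(result)
```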

Modern Data Loading Best Practices

A. Optimizing bulk data loading operations

Ever tried loading millions of rows into Snowflake only to watch the clock tick away? Been there. The secret sauce is partitioning your staged files by date or region, compressing them (gzip works well), and sizing them so COPY INTO can load many files at once. Snowflake’s guidance is roughly 100 to 250 MB per compressed file. Parallelism is your friend here, so leverage it.
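
A rough sketch of the file-prep side, using only the standard library. The `event_date` column, the stage name `etl_stage`, the table name `events`, and the `event_date=.../` path layout are all assumptions for the example; uploading the files to the stage is left out. The COPY statement is built as a string, not executed.

```python
import csv
import gzip
import io
from collections import defaultdict


def partition_by_date(rows):
    """Group rows by event_date so each date can live under its own path prefix."""
    parts = defaultdict(list)
    for row in rows:
        parts[row["event_date"]].append(row)
    return dict(parts)


def to_gzip_csv(rows, fieldnames) -> bytes:
    """Serialize rows to CSV with a header line, then gzip-compress the bytes."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return gzip.compress(buf.getvalue().encode("utf-8"))


def copy_sql(table: str, stage: str, date: str) -> str:
    """Build a COPY INTO statement that loads only one date partition."""
    return (
        f"COPY INTO {table} FROM @{stage}/event_date={date}/ "
        "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1 COMPRESSION = GZIP)"
    )


# Hypothetical sample rows.
rows = [
    {"event_date": "2024-01-01", "user_id": "u1", "clicks": 3},
    {"event_date": "2024-01-01", "user_id": "u2", "clicks": 7},
    {"event_date": "2024-01-02", "user_id": "u1", "clicks": 1},
]

partitions = partition_by_date(rows)
files = {
    date: to_gzip_csv(part, ["event_date", "user_id", "clicks"])
    for date, part in partitions.items()
}
statements = [copy_sql("events", "etl_stage", date) for date in sorted(files)]
for statement in statements:
    print(statement)
```

Because each partition gets its own prefix, a failed load for one date can be retried without touching the others.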

Integrating with Modern Data Tools

A. Orchestration with Airflow, Dagster, or Prefect

Building ETL pipelines isn’t just about Snowflake. You need tools that schedule and monitor workflows too. Airflow gives you code-based DAGs. Dagster brings data-awareness to your pipelines. Prefect offers dynamic workflows with less boilerplate. Pick one that matches your team’s skills and pipeline complexity.

B. Enhancing ETL with dbt for transformations

dbt transforms your Snowflake data without moving it. Write SQL models, test them, and document everything in one place. The real magic? It works out dependencies automatically from the ref() calls between models, so everything builds in the right order. Your analysts can finally contribute to transformations with plain SELECT statements, without being software engineers. Plus, those lovely lineage graphs make troubleshooting way easier.
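
To see why automatic dependency handling matters, here is a toy illustration of the idea, not dbt’s actual implementation. It scans model SQL for `{{ ref('...') }}` calls and sorts the models so that each one runs after the models it depends on. The model names and SQL are made up for the example.

```python
import re
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Matches {{ ref('model_name') }} with single or double quotes.
REF = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")

# Hypothetical models: name -> SQL text.
MODELS = {
    "daily_revenue": (
        "SELECT order_date, SUM(amount) AS revenue "
        "FROM {{ ref('orders_enriched') }} GROUP BY order_date"
    ),
    "orders_enriched": (
        "SELECT o.*, c.region FROM {{ ref('stg_orders') }} o "
        "JOIN {{ ref('stg_customers') }} c ON o.customer_id = c.id"
    ),
    "stg_orders": "SELECT * FROM raw.orders",
    "stg_customers": "SELECT * FROM raw.customers",
}


def dependencies(models):
    """Map each model to the set of models it references."""
    return {name: set(REF.findall(sql)) for name, sql in models.items()}


def build_order(models):
    """Return model names ordered so every dependency comes first."""
    return list(TopologicalSorter(dependencies(models)).static_order())


order = build_order(MODELS)
print(order)
```

The two staging models have no dependency on each other, so their relative order can vary; what is guaranteed is that `orders_enriched` comes after both and `daily_revenue` comes last.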

C. Monitoring pipelines with observability tools

Your pipeline’s running great today, but what about tomorrow? Tools like Monte Carlo, Datadog, and Bigeye spot problems before your stakeholders do. They track freshness, volume anomalies, and schema changes. Set alerts for critical metrics and sleep better knowing you’ll catch issues before that 6 AM “data’s wrong” email hits your inbox.
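
The checks these tools run can be pictured with a short sketch. This is a simplified stand-in, not how any of the products above work internally: freshness is a plain age threshold, and the volume check is a basic z-score against recent row counts. The thresholds and sample numbers are assumptions.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def is_stale(last_loaded_at: datetime, now: datetime, max_age: timedelta) -> bool:
    """Freshness check: True when the latest load is older than max_age."""
    return now - last_loaded_at > max_age


def volume_is_anomalous(history, today: int, threshold: float = 3.0) -> bool:
    """Volume check: True when today's row count sits more than `threshold`
    sample standard deviations away from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > threshold


# Hypothetical numbers for a daily table.
row_counts = [1000, 1020, 980, 1010, 990]
now = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc)

alerts = []
if is_stale(last_load, now, max_age=timedelta(hours=24)):
    alerts.append("freshness: table has not loaded in over 24 hours")
if volume_is_anomalous(row_counts, today=400):
    alerts.append("volume: today's row count is far outside the recent range")
print(alerts)
```

Real observability tools learn thresholds from history and account for seasonality, but the alerting logic starts from the same two questions: is the data late, and is there suspiciously much or little of it?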

D. Version control and CI/CD for ETL code

Gone are the days of “it works on my machine.” Git-based workflows mean changes are tracked, tested, and reproducible. Set up CI/CD pipelines to validate transforms before they hit production. Automated testing catches issues early, while infrastructure-as-code ensures your Snowflake resources stay consistent. No more manual deployments at midnight.

Performance Optimization Techniques

A. Warehouse sizing and auto-scaling strategies

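Nailing your warehouse setup is crucial for ETL performance. Snowflake gives you two separate levers. Resizing a warehouse (scaling up) makes individual heavy queries faster, but it is an explicit ALTER WAREHOUSE step. Multi-cluster warehouses (scaling out, available in Enterprise Edition and above) add clusters automatically when many queries queue up at once, so concurrency spikes are handled without manual intervention. Start small, monitor actual usage patterns, and adjust. Most ETL workloads benefit from a larger warehouse rather than more concurrent small ones, because a handful of heavy transformations usually dominates the runtime.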
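
Some quick arithmetic helps when sizing. Standard warehouses double their credit rate with each size step (X-Small is 1 credit per hour, Small is 2, and so on), and billing is per second with a 60-second minimum each time a warehouse starts. The sketch below compares the cost of one job at different sizes. The runtimes are hypothetical, and the assumption that a job speeds up linearly with size is optimistic; measure your own workloads.

```python
# Credits per hour for standard warehouses; each size doubles the previous one.
CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
}

MINIMUM_BILLED_SECONDS = 60  # per-second billing, with a one-minute minimum


def credits_used(size: str, runtime_seconds: float) -> float:
    """Credits consumed by one run of a warehouse of the given size."""
    billable = max(runtime_seconds, MINIMUM_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billable / 3600


# Hypothetical job: one hour on SMALL.
small_run = credits_used("SMALL", 3600)

# If it scaled perfectly, LARGE (4x the compute) would finish in 15 minutes.
large_ideal = credits_used("LARGE", 900)

# A less rosy assumption: LARGE finishes in 20 minutes instead.
large_realistic = credits_used("LARGE", 1200)

print(f"SMALL, 60 min: {small_run:.2f} credits")
print(f"LARGE, 15 min: {large_ideal:.2f} credits")
print(f"LARGE, 20 min: {large_realistic:.2f} credits")
```

When the speedup is close to linear, the bigger warehouse costs about the same and finishes much sooner. When it is not, you are paying extra for the shorter wall-clock time, which may or may not be worth it.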

Real-world ETL Pipeline Architectures

A. Batch processing implementation patterns

Ever tried building a batch ETL pipeline in Snowflake? Here’s what works: time-based scheduling with Snowflake tasks, metadata-driven frameworks that adapt to schema changes, and incremental loading patterns that only process new data. Most enterprises combine these approaches based on data volume and freshness requirements.
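
The incremental loading pattern usually comes down to a high-water mark: remember the latest timestamp you processed, and next time only pick up rows newer than that. A minimal sketch, assuming each source row carries an `updated_at` column (a hypothetical name) and that the watermark is persisted somewhere between runs:

```python
from datetime import datetime


def incremental_batch(source_rows, watermark: datetime):
    """Return the rows newer than the watermark, plus the advanced watermark."""
    new_rows = [row for row in source_rows if row["updated_at"] > watermark]
    # If nothing is new, the watermark stays where it was.
    new_watermark = max((row["updated_at"] for row in new_rows), default=watermark)
    return new_rows, new_watermark


# Hypothetical source table.
source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, 8, 0)},
    {"id": 2, "updated_at": datetime(2024, 1, 1, 9, 30)},
]

# First run: nothing processed yet, so everything is new.
watermark = datetime.min
first_batch, watermark = incremental_batch(source, watermark)
print(len(first_batch), watermark)

# A new row arrives; the second run picks up only that row.
source.append({"id": 3, "updated_at": datetime(2024, 1, 2, 7, 15)})
second_batch, watermark = incremental_batch(source, watermark)
print([row["id"] for row in second_batch])
```

One caveat with the strict `>` comparison: a row that arrives late with a timestamp equal to the current watermark gets skipped. Teams often reprocess a small overlap window and deduplicate on load to cover that case.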

The journey to building robust ETL pipelines requires mastering both fundamental concepts and advanced techniques. Snowflake’s powerful capabilities combined with modern data tools offer unprecedented flexibility for data extraction, transformation, and loading processes. By implementing strategic extraction methods, efficient transformation pipelines, and best practices for data loading, organizations can create scalable data infrastructure that meets today’s demanding business requirements.

As data volumes continue to grow exponentially, the optimization techniques and architectural patterns discussed provide a foundation for building reliable ETL solutions. Whether you’re enhancing existing pipelines or designing new ones, focus on creating flexible, maintainable systems that can adapt to changing business needs. Start applying these principles today to transform your data operations and unlock the full potential of your organization’s data assets.