You’re staring at half a million documents, knowing the answer you need is buried somewhere inside. Sound familiar?

Building intelligent systems that can actually find and understand information has become the holy grail for companies swimming in data. That’s exactly why Retrieval-Augmented Generation (RAG) systems with AWS Bedrock and Databricks are causing such a stir.

This isn’t just another AI buzzword. RAG systems combine the precision of information retrieval with the creative power of large language models, giving your applications access to knowledge they weren’t explicitly trained on.

But here’s where most implementations go wrong: they treat RAG like a simple plug-and-play solution rather than an architectural decision with downstream consequences. The difference between a mediocre RAG system and one that transforms your business comes down to a few critical choices.

Understanding RAG Systems and Their Business Value

What is Retrieval-Augmented Generation and why it matters

RAG systems combine the creative power of large language models with the accuracy of external knowledge retrieval. Unlike standalone LLMs that rely solely on their training data, a RAG system retrieves relevant passages from external sources (document stores, vector indexes, databases) at query time and passes them to the model along with the question. This approach delivers more accurate, up-to-date, and verifiable answers, which is critical for businesses that can’t afford hallucinations or outdated information.

Key benefits of RAG for enterprise AI applications

RAG systems change how enterprises use AI by delivering concrete advantages. First, they substantially reduce hallucinations by grounding responses in verified data sources. They also let you update the system’s knowledge by re-indexing documents rather than retraining the model. Plus, because every answer is built from retrieved passages, RAG can attribute responses to their sources with citations, making them traceable and audit-friendly: a strong fit for regulated industries where accountability matters.

How RAG overcomes limitations of traditional LLMs

Traditional LLMs struggle with three major limitations that RAG addresses. Knowledge cutoffs? Largely sidestepped: RAG pulls in current data, as long as your index is kept fresh. Hallucinations? Reduced by retrieving factual context before generating a response. Opacity? Replaced with transparency, since RAG can show which sources informed each answer. That makes RAG systems far more dependable partners for business decisions where accuracy matters.

Real-world use cases for RAG systems in business

Companies are deploying RAG systems across industries with impressive results. Customer service teams use RAG to answer complex product questions with high accuracy. Legal departments leverage RAG for contract analysis against case law databases. R&D teams accelerate innovation by connecting RAG to research repositories. Even marketing teams benefit, creating content that precisely aligns with brand guidelines by retrieving approved messaging before generation.

AWS Bedrock Essentials for RAG Implementation

Overview of AWS Bedrock’s foundation model capabilities

AWS Bedrock isn’t just another AI tool; it’s your ticket to enterprise-grade foundation models without the headache. You get API access to models such as Anthropic’s Claude, Meta’s Llama, and Amazon’s own Titan family. The best part? Zero infrastructure management. These models excel at text generation, summarization, and creative content: exactly what you need for a killer RAG system that actually understands your data.

Key features that support effective RAG systems

Bedrock shines brightest when building RAG applications. Built-in embedding models such as Amazon Titan Embeddings make it straightforward to turn documents into vectors for retrieval. Knowledge bases? Built right in: Knowledge Bases for Amazon Bedrock let you connect your data sources, create indexes, and generate responses with citations without writing complex pipeline code. Large context windows (up to 200K tokens with recent Claude models, depending on the version) mean your RAG system can work with long documents while maintaining coherence.
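
For a sense of what the built-in knowledge base workflow looks like from code, here is a minimal Python sketch using the `retrieve_and_generate` operation of boto3’s `bedrock-agent-runtime` client. The knowledge base ID and model ARN are placeholders, the response fields follow the boto3 documentation at the time of writing (verify against your SDK version), and the client is passed in rather than created so the function can be exercised without AWS access.

```python
from typing import Any


def ask_knowledge_base(client: Any, question: str, kb_id: str, model_arn: str) -> dict:
    """Ask a Bedrock knowledge base a question; return the answer and unique S3 source URIs.

    `client` is expected to be boto3.client("bedrock-agent-runtime"); injecting it
    keeps this function testable without AWS credentials.
    """
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,  # placeholder ID from the Bedrock console
                "modelArn": model_arn,     # ARN of the generation model to use
            },
        },
    )
    sources: list[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            # Assumes an S3 data source; other connectors report different location keys.
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return {"answer": response["output"]["text"], "sources": sources}
```

In a Databricks notebook you would pass something like `boto3.client("bedrock-agent-runtime", region_name="us-east-1")` (region assumed) as `client`.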

Pricing and scaling considerations

Nobody likes surprise bills. In on-demand mode, Bedrock charges per input and output token for text models, with no upfront costs or commitments. When your RAG system takes off, on-demand usage scales within your account’s service quotas (requests and tokens per minute), which you can ask AWS to raise. For steady, high-volume enterprise workloads, Provisioned Throughput lets you reserve dedicated model capacity, with optional term commitments at a discount, giving you predictable performance and, at sustained utilization, a potentially lower effective cost.
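
Because RAG prompts carry retrieved context, input tokens usually dominate the bill. The sketch below does the on-demand arithmetic; the per-1K-token prices in the example are hypothetical placeholders, so plug in current figures from the Bedrock pricing page for your model and region.

```python
def estimate_monthly_cost(
    requests_per_day: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    price_per_1k_input: float,
    price_per_1k_output: float,
    days: int = 30,
) -> float:
    """On-demand estimate: (tokens / 1000) * price, summed over input and output tokens."""
    per_request = (
        input_tokens_per_request / 1000 * price_per_1k_input
        + output_tokens_per_request / 1000 * price_per_1k_output
    )
    return requests_per_day * days * per_request
```

With 10,000 requests a day, 3,000 input and 300 output tokens per request, and hypothetical prices of $0.003 and $0.015 per 1K tokens, `estimate_monthly_cost(10_000, 3_000, 300, 0.003, 0.015)` comes to about $4,050 for 30 days. Two thirds of that is input, which is why trimming top-k or chunk size pays off directly.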

Security and compliance advantages

Security isn’t optional with enterprise data. AWS states that Bedrock doesn’t use your prompts and completions to train models or share them with third-party model providers, and data is encrypted in transit and at rest. Amazon Bedrock Guardrails let you set content policies to prevent harmful outputs from your RAG system. Compliance-wise, Bedrock covers the common bases: it is HIPAA eligible, in scope for SOC reports, and supports AWS PrivateLink so traffic from your VPC doesn’t traverse the public internet. Your legal team will finally stop giving you those worried looks.

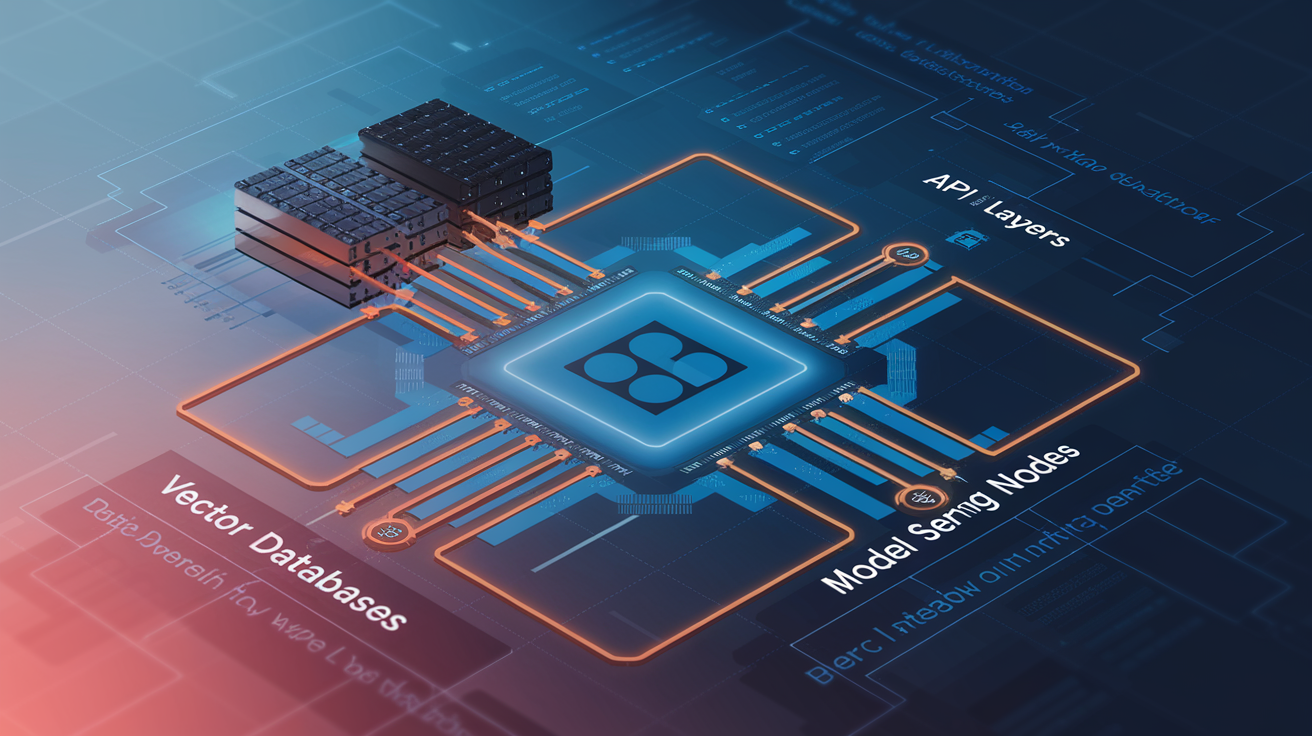

Databricks Platform Components for RAG Architecture

A. Vector search capabilities in Databricks

Databricks Vector Search turbocharges your RAG system by enabling fast similarity searches across very large collections of embeddings. Unlike keyword search, which misses passages phrased differently from the query, vector search finds semantically similar content even when exact keywords aren’t present. This capability forms the backbone of knowledge retrieval in any robust RAG implementation.
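
Conceptually, vector search ranks stored embeddings by their similarity to the query embedding. The sketch below does this exactly (brute force) with cosine similarity in plain Python, purely to illustrate the idea; a managed service like Databricks Vector Search uses an approximate index to avoid scanning every vector, and the toy 2-dimensional vectors stand in for real embeddings.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Exact nearest neighbours: score every stored vector, keep the k most similar."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```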

B. Lakehouse architecture benefits for knowledge management

The Lakehouse architecture eliminates data silos that plague traditional systems. By combining the best of data lakes and warehouses, you get both the flexibility to store massive amounts of unstructured data and the performance benefits of structured queries. For RAG systems, this means a single platform can handle everything from raw documents to processed embeddings to query results.

C. Delta Lake for maintaining up-to-date information sources

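Delta Lake helps keep your RAG system from serving stale information. A Databricks Vector Search Delta Sync index can stay synchronized with a source Delta table (the table needs Change Data Feed enabled), so updates to your documents flow through to retrieval. ACID transactions guarantee consistency, while time travel lets you access historical versions when needed. The open format keeps your knowledge base portable, and schema enforcement rejects writes that don’t match the table’s schema, keeping malformed records out of your retrieval corpus.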

D. MLflow for tracking RAG system performance

RAG systems aren’t set-it-and-forget-it solutions. MLflow helps you systematically track experiments as you tune your retrieval setup (chunk sizes, embedding models, top-k). By logging query performance, relevance scores, and response latency, you can identify bottlenecks and optimize your system. The experiment tracking creates an audit trail showing how your system has evolved over time.
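
As an illustration of what to log, the following sketch computes mean reciprocal rank (MRR) and latency statistics over a small labeled query set. The run format is a hypothetical convention; the resulting dict could be passed to `mlflow.log_metrics` inside an `mlflow.start_run()` block so each retrieval configuration gets its own tracked run.

```python
from statistics import mean


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def retrieval_metrics(runs: list[dict]) -> dict[str, float]:
    """Aggregate per-query results into a flat metrics dict.

    Each run is {"retrieved": [...ids...], "relevant": {...ids...}, "latency_ms": float}.
    """
    return {
        "mrr": mean(reciprocal_rank(r["retrieved"], r["relevant"]) for r in runs),
        "mean_latency_ms": mean(r["latency_ms"] for r in runs),
        "max_latency_ms": max(r["latency_ms"] for r in runs),
    }
```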

E. Unity Catalog for governing access to knowledge bases

Security nightmares happen when sensitive information leaks through AI systems. Unity Catalog provides fine-grained access controls to ensure users only retrieve information they’re authorized to see. The centralized governance model lets you apply consistent policies across all knowledge sources while maintaining detailed lineage tracking for compliance requirements.

Designing Your RAG System Architecture

A. Choosing the right foundation models in AWS Bedrock

Ever tried finding the perfect shoe in a warehouse of thousands? That’s what picking an LLM feels like. AWS Bedrock offers models from Anthropic, AI21 Labs, Cohere, Meta, Mistral AI, and Amazon’s own Titan family, among others. Your choice hinges on your specific use case: Claude excels at following instructions, while Titan models might be more cost-effective for simpler tasks. Don’t just grab the biggest model; smaller or specialized models can match the giants on narrow tasks at a fraction of the cost, so test candidates on your own queries.

B. Data preparation and knowledge base creation strategies

Garbage in, garbage out. Your RAG system is only as good as the data you feed it. Start by cleaning your documents – remove duplicates, fix formatting issues, and chunk your content smartly. Consider the granularity of your chunks carefully: too large and retrieval gets messy, too small and you lose context. For technical documentation, smaller chunks often work better, while narrative content benefits from larger ones. Your chunking strategy directly impacts retrieval quality.
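
A common baseline is fixed-size chunking with overlap, so that a sentence cut at one boundary still appears whole in the neighboring chunk. This sketch counts whitespace-separated words as a rough stand-in for tokens (real token counts depend on the model’s tokenizer), and the default sizes are illustrative, not recommendations.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # this window already reached the end
            break
    return chunks
```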

C. Vector embedding approaches and considerations

Embeddings are the secret sauce that makes RAG systems work. Databricks can use embedding models hosted on AWS Bedrock, such as Amazon Titan Embeddings or Cohere Embed, either by calling Bedrock directly from your code or through a Model Serving external model endpoint. The embedding dimension matters: higher dimensions can capture more nuance but cost more in storage and compute. Test different models on your specific data; domain-specific embeddings sometimes outperform general ones by a mile, especially in specialized fields like healthcare or legal.
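
The storage side of the dimension trade-off is simple arithmetic: raw size is vectors × dimensions × bytes per value. The helper below ignores index overhead, metadata, and replicas, so treat its output as a lower bound.

```python
def index_size_gb(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> float:
    """Raw vector storage in GB (1e9 bytes); float32 is 4 bytes per value."""
    return num_vectors * dimensions * bytes_per_value / 1e9
```

For 1 million chunks, 1,536-dimensional float32 vectors (the output size of Titan Embeddings G1 - Text) need about 6.1 GB raw, while 256-dimensional vectors (one of the sizes Titan Text Embeddings V2 can return) need about 1.0 GB.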

D. Optimizing retrieval mechanisms for accuracy and speed

The retrieval part of RAG isn’t an afterthought; it’s where the magic happens. Exact k-nearest-neighbor search compares the query against every vector, which becomes slow at scale, so Databricks Vector Search uses an approximate nearest-neighbor index (HNSW) that trades a little recall for large speed gains. Don’t stop at basic similarity search, though. Implement re-ranking, metadata filtering, and hybrid search combining keywords and vectors. The real game-changer? Tuning how queries are formulated (for example, rewriting the user’s question before searching) so they are specific enough to find relevant content without being too restrictive.
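
Metadata filtering narrows the candidate set before similarity ranking, for example to one product line or language. The in-memory sketch below applies exact-match filters and then ranks the survivors by dot product; the record layout is hypothetical, and a managed vector index would apply filters inside the index rather than in Python.

```python
def filtered_search(query_vec: list[float], records: list[dict], filters: dict, k: int = 3) -> list[str]:
    """Keep records whose metadata matches every filter, then rank them by dot product.

    Assumes vectors are L2-normalised, so the dot product equals cosine similarity.
    Each record looks like {"id": str, "vector": [...], "metadata": {...}}.
    """
    candidates = [
        r for r in records
        if all(r["metadata"].get(key) == value for key, value in filters.items())
    ]
    ranked = sorted(
        candidates,
        key=lambda r: sum(q * v for q, v in zip(query_vec, r["vector"])),
        reverse=True,
    )
    return [r["id"] for r in ranked[:k]]
```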

Building the Integration Between AWS Bedrock and Databricks

A. Setting up the necessary API connections

Connecting AWS Bedrock with Databricks isn’t rocket science, but you’ll need your ducks in a row. Start by making sure your Databricks compute can obtain AWS credentials with Bedrock permissions (an instance profile is preferable to long-lived access keys), then make sure the AWS SDK for Python (boto3) is available in your workspace. The bedrock-runtime client is your best friend here: it handles the heavy lifting when sending requests to foundation models.

B. Configuring authentication and access management

Think of IAM roles as VIP passes to an exclusive club: they determine who gets in and what they can touch. Create a dedicated IAM role for your Databricks-to-Bedrock communications with just enough permissions (principle of least privilege, folks), and attach it to your clusters through an instance profile. If you must use access keys instead, store them in Databricks secrets; never hardcode them in notebooks unless you enjoy security breaches.
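
Least privilege in practice: the policy below allows only `bedrock:InvokeModel`, and only on the foundation models you list. It is generated from Python here so it can be templated per environment; the region and model ID in the example are assumptions, and you would add `bedrock:InvokeModelWithResponseStream` if you stream responses.

```python
import json


def bedrock_invoke_policy(region: str, model_ids: list[str]) -> str:
    """Return an IAM policy document (JSON) allowing InvokeModel on the listed foundation models only."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Foundation-model ARNs have an empty account field.
                "Resource": [
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for model_id in model_ids
                ],
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

If you do have to fall back to access keys, `dbutils.secrets.get(scope="aws", key="access-key-id")` in a Databricks notebook reads a stored key without it appearing in the notebook source (the scope and key names here are hypothetical).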

C. Establishing data pipelines between platforms

Data pipelines between these platforms are like building bridges between islands. Use Databricks Delta tables as your source of truth, then set up automated workflows that chunk new or changed documents, embed them with a Bedrock embedding model, and sync the results into your vector index, so that Bedrock’s generation models always see current context. The secret sauce? Unity Catalog for tracking lineage and AWS Glue for heavy transformations when needed.
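
The processing step usually only needs to touch what changed since the last run. One simple way to detect that is a content hash per document, sketched below. The row shape and the idea of keeping hashes in a state table are assumptions; on Databricks, Delta’s Change Data Feed or a Vector Search Delta Sync index can track changes for you instead.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_embedding_run(rows: list[dict], seen_hashes: dict[str, str]) -> tuple[list[dict], dict[str, str]]:
    """Return rows whose text is new or changed, plus their new hashes.

    `seen_hashes` maps row id -> hash of the text last embedded. It is not mutated:
    merge `new_hashes` into your state only after embedding succeeds.
    """
    to_embed, new_hashes = [], {}
    for row in rows:
        digest = content_hash(row["text"])
        if seen_hashes.get(row["id"]) != digest:
            to_embed.append(row)
            new_hashes[row["id"]] = digest
    return to_embed, new_hashes
```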

D. Monitoring and logging best practices

Nobody likes flying blind when systems talk to each other. Use the CloudWatch metrics Bedrock publishes (invocations, latency, token counts), optionally turn on Bedrock model invocation logging, and use Databricks’ built-in monitoring for workflow execution. Log everything (requests, responses, processing times) but strip out sensitive data. When things inevitably break (they will), these breadcrumbs will save your bacon during troubleshooting sessions.
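
Here is one way to log each model call as structured JSON while masking obvious sensitive values before they are written. The two regular expressions are illustrative only (emails and long digit runs) and are nowhere near a complete PII detector; the field names are a hypothetical schema.

```python
import json
import re
import time

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER = re.compile(r"\b\d{9,}\b")  # e.g. account numbers; illustrative, not exhaustive


def redact(text: str) -> str:
    return LONG_NUMBER.sub("[NUMBER]", EMAIL.sub("[EMAIL]", text))


def log_record(request_id: str, model_id: str, prompt: str, response: str, started: float) -> str:
    """One JSON log line; `started` is a time.monotonic() value taken before the model call."""
    return json.dumps({
        "request_id": request_id,
        "model_id": model_id,
        "latency_ms": round((time.monotonic() - started) * 1000, 1),
        "prompt": redact(prompt),
        "response": redact(response),
    })
```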

Implementing the Core RAG Components

A. Creating and managing vector embeddings

Turning raw text into vector embeddings is the backbone of any RAG system. With AWS Bedrock, you can use models like Titan Embeddings to transform your documents into numerical representations. Databricks Vector Search then stores and indexes those vectors, optimizing for quick similarity lookups when users fire off questions.
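
A minimal sketch of embedding a single text with Titan through the `bedrock-runtime` client’s `invoke_model` call. The request and response fields (`inputText`, `dimensions`, `embedding`) follow the Titan Text Embeddings V2 format as documented at the time of writing; the client is injected (normally `boto3.client("bedrock-runtime")`) so the function can be tested offline.

```python
import json
from typing import Any, Optional


def titan_embed(client: Any, text: str, model_id: str = "amazon.titan-embed-text-v2:0",
                dimensions: Optional[int] = None) -> list[float]:
    """Embed one text with a Titan embeddings model via invoke_model.

    `client` is expected to be boto3.client("bedrock-runtime").
    """
    body: dict = {"inputText": text}
    if dimensions is not None:
        body["dimensions"] = dimensions  # Titan Text Embeddings V2 accepts 256, 512 or 1024
    response = client.invoke_model(
        modelId=model_id,
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())  # the body is a streaming object
    return payload["embedding"]
```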

B. Building effective chunking strategies for documents

Breaking documents into the right-sized chunks can make or break your RAG system. Too large, and you’ll retrieve irrelevant information. Too small, and you’ll lose context. The sweet spot? Aim for semantic chunking that preserves meaning while maintaining granularity – think paragraphs or sections rather than arbitrary character counts.
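
Paragraph-aware chunking is a simple approximation of semantic chunking: keep paragraphs intact and pack consecutive ones into a chunk until a size limit is reached. The sketch below assumes paragraphs are separated by blank lines and measures size in characters; fuller semantic chunkers also compare sentence embeddings to find topic shifts.

```python
def chunk_by_paragraph(text: str, max_chars: int = 1500) -> list[str]:
    """Greedily pack whole paragraphs into chunks of at most `max_chars` characters.

    A single paragraph longer than `max_chars` becomes its own oversized chunk;
    split those further with a fixed-size chunker if needed.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks
```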

C. Developing context-aware prompts for the foundation model

Your prompts are the secret sauce that turns a regular LLM into a knowledge-driven assistant. Don’t just dump retrieved passages into your prompt. Structure them with clear instructions like “Answer based on the following context” and include metadata about source documents. This guides the model to generate responses that stay grounded in your retrieved information.
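
One way to structure such a prompt, sketched in Python: number each retrieved passage, attach its source metadata, and tell the model to cite by number and to admit when the context falls short. The `title`, `page`, and `text` keys are a hypothetical passage schema.

```python
def build_grounded_prompt(question: str, passages: list[dict]) -> str:
    """Number each passage and label its source so the model can cite it as [n]."""
    blocks = [
        f"[{i}] (source: {p['title']}, page {p['page']})\n{p['text']}"
        for i, p in enumerate(passages, start=1)
    ]
    return (
        "Answer the question using only the context below. "
        "Cite passages as [n]. If the context is insufficient, say so.\n\n"
        "Context:\n" + "\n\n".join(blocks) + f"\n\nQuestion: {question}\nAnswer:"
    )
```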

D. Implementing retrieval mechanisms with relevance ranking

Not all retrieved chunks deserve equal attention. AWS Bedrock and Databricks let you implement hybrid retrieval combining exact keyword matching with semantic similarity. Add reranking techniques that consider factors like recency and authority to bubble up the most relevant content before it reaches your LLM.
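
A common way to merge a keyword ranking and a semantic ranking is reciprocal rank fusion (RRF), which needs only ranks, not comparable scores. This is a generic sketch, not a Bedrock or Databricks API; k = 60 is a widely used default, and recency or authority signals could be added as extra ranked lists.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: score(d) = sum over lists of 1 / (k + rank of d in that list)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)
```

For example, fusing a keyword list `["a", "b", "c"]` with a semantic list `["b", "c", "a"]` returns `["b", "a", "c"]`: document b ranks near the top of both lists, so it wins.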

E. Designing response generation with attribution

The final piece: generating responses that users can trust. Configure your LLM to cite sources directly in its responses with page numbers or document titles. Implement a citation verification step to ensure all claims are actually supported by retrieved passages. This builds trust and provides users with breadcrumbs to explore further.
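
A cheap first pass at citation verification, sketched below: split the answer into sentences, require each to cite a passage by `[n]`, and flag sentences whose words mostly don’t appear in the cited passages. Word overlap is a crude proxy chosen for brevity; an entailment (NLI) model or an LLM judge is a stronger check.

```python
import re

CITATION = re.compile(r"\[(\d+)\]")
WORD = re.compile(r"[a-z0-9]+")


def unsupported_sentences(answer: str, passages: list[str], min_overlap: float = 0.5) -> list[str]:
    """Return sentences with no valid [n] citation, or whose words mostly don't appear in the cited passages."""
    flagged = []
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    for sentence in sentences:
        cited = [int(n) for n in CITATION.findall(sentence)]
        valid = [passages[n - 1] for n in cited if 1 <= n <= len(passages)]
        words = set(WORD.findall(CITATION.sub("", sentence).lower()))
        if not valid or not words:
            flagged.append(sentence)
            continue
        support = set(WORD.findall(" ".join(valid).lower()))
        if len(words & support) / len(words) < min_overlap:
            flagged.append(sentence)
    return flagged
```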

Optimizing RAG System Performance

A. Fine-tuning retrieval precision and recall

Your RAG system is only as good as its retrieval component. Getting this wrong means feeding garbage to your LLM. Start by benchmarking different embedding models (for example, Titan and Cohere embeddings on Bedrock) on a sample of your own queries; which one wins depends heavily on your content. Try hybrid retrieval approaches combining semantic search with BM25 for better precision. And don’t forget chunk size matters: as a starting point, smaller chunks (150-200 tokens) tend to work better for factual retrieval, while larger ones (500+ tokens) preserve more context.
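
To compare chunk sizes or embedding models objectively, measure precision and recall at k against a set of queries with known relevant documents. Those relevance labels are something you would assemble yourself (by hand or from logs); neither platform provides them. A minimal helper:

```python
def precision_recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> tuple[float, float]:
    """Precision@k = hits in the top k / k; recall@k = hits / number of relevant documents."""
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    precision = hits / k if k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall
```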

B. Strategies for reducing latency in production

Nobody wants to wait 10 seconds for an AI response. Cache frequently requested information to avoid redundant vector searches. Pre-compute embeddings for your entire knowledge base during indexing, so only the user’s query needs embedding at query time. Consider quantizing your vectors from float32 to float16 or even int8: that cuts vector memory by 2-4x and often speeds up search, usually at a small cost in retrieval quality that you should measure on your own evaluation set. For truly demanding workloads, implement asynchronous processing pipelines that can handle multiple requests simultaneously without blocking.
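
To show where the memory saving comes from, here is symmetric per-vector int8 scalar quantization in plain Python: each value is stored as a signed byte plus one float scale per vector. This illustrates the general technique only; managed indexes handle storage internally, and the reconstruction error here is per value, not a measure of retrieval quality.

```python
from array import array


def quantize_int8(vec: list[float]) -> tuple[array, float]:
    """Store round(x / scale) as signed bytes, with scale = max|x| / 127 (1.0 for an all-zero vector)."""
    scale = max((abs(x) for x in vec), default=0.0) / 127 or 1.0
    return array("b", (round(x / scale) for x in vec)), scale


def dequantize(q: array, scale: float) -> list[float]:
    """Approximate reconstruction; each value is off by at most scale / 2."""
    return [v * scale for v in q]
```

Each quantized value takes one byte instead of four, which is the 4x saving versus float32.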

C. Techniques for handling complex queries

Complex queries break basic RAG systems. Implement query decomposition to break questions into simpler sub-queries that your retrieval system can handle. Use self-reflection mechanisms where the model evaluates whether it has sufficient context to answer confidently. For multi-hop reasoning scenarios, try recursive retrieval patterns where initial results inform subsequent searches. And don’t underestimate the power of prompt engineering – explicitly instructing the model to reason step-by-step significantly improves outcomes on complex queries.
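
Query decomposition usually means asking the LLM itself to split the question. The sketch below shows a hypothetical decomposition prompt and a parser for the numbered list the model is asked to return; the LLM call itself is left out, and each parsed sub-question would then go through normal retrieval.

```python
import re

DECOMPOSE_PROMPT = (
    "Break the question below into at most {max_parts} simpler sub-questions that can each "
    "be answered from a single document. Return them as a numbered list, one per line.\n\n"
    "Question: {question}"
)


def parse_subquestions(llm_output: str, max_parts: int = 4) -> list[str]:
    """Extract items from lines such as '1. ...' or '2) ...', ignoring any other text."""
    items = re.findall(r"^\s*\d+[.)]\s+(.+?)\s*$", llm_output, flags=re.MULTILINE)
    return items[:max_parts]
```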

D. Implementing feedback loops to improve responses over time

RAG systems should get smarter with use. Implement explicit feedback mechanisms where users can rate response quality. Track which retrieved documents actually contribute to final answers using attribution tracking. Periodically analyze frequently failed queries to identify knowledge gaps in your corpus. Consider implementing active learning workflows where human experts review and correct problematic responses, then feed these corrections back into your system. This human-in-the-loop approach dramatically accelerates improvement cycles.
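
A starting point for analyzing frequently failed queries: aggregate user ratings per normalized query and surface the worst ones that have enough votes to be meaningful. The 1-5 rating scale, the threshold, and the minimum vote count are illustrative assumptions.

```python
from collections import defaultdict


def queries_needing_review(feedback: list[tuple[str, int]], threshold: float = 3.0,
                           min_votes: int = 3) -> list[str]:
    """Return normalised queries whose mean rating is below `threshold`, worst first."""
    ratings: dict[str, list[int]] = defaultdict(list)
    for query, rating in feedback:
        ratings[query.strip().lower()].append(rating)
    flagged = [
        (sum(r) / len(r), q) for q, r in ratings.items()
        if len(r) >= min_votes and sum(r) / len(r) < threshold
    ]
    return [q for _, q in sorted(flagged)]
```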

Deploying and Scaling Your RAG Solution

A. Containerization and serverless deployment options

Containers are your best friends when deploying RAG systems. Docker lets you package everything neatly while AWS Fargate runs those containers without server management headaches. Lambda works great for sporadic workloads, but watch its 15-minute maximum execution time when running complex retrievals. Need more control? ECS on EC2 lets you manage the underlying instances, while EKS offers Kubernetes orchestration magic.

B. Managing costs across AWS and Databricks

AWS costs can sneak up on you faster than free coffee disappears in a tech office. Set budget alerts before your CFO comes knocking. For Databricks, autoscaling clusters are money-savers – they spin down when idle. Consider reserved instances for predictable workloads and spot instances for non-critical jobs. The real trick? Cache common retrieval results to slash both compute costs and latency simultaneously.
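
Caching common retrieval results can be as simple as an LRU cache with a time-to-live, so popular queries skip the vector search and stale entries eventually refresh. The sketch below is a single-process, in-memory version (not thread-safe); a shared cache would be the multi-instance equivalent. The clock is injectable for testing.

```python
import time
from collections import OrderedDict
from typing import Any, Callable


class TTLCache:
    """A small LRU cache whose entries also expire after `ttl_seconds`."""

    def __init__(self, max_items: int = 1024, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_items = max_items
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = OrderedDict()  # key -> (stored_at, value), least recently used first

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self._data.move_to_end(key)  # mark as most recently used
            return entry[1]
        value = compute()  # cache miss or expired entry
        self._data[key] = (now, value)
        self._data.move_to_end(key)
        if len(self._data) > self.max_items:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value
```

Typical use would be `cache.get_or_compute(query.strip().lower(), lambda: search(query))`, where `search` is a hypothetical function wrapping your vector search; normalizing the key raises the hit rate.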

C. Horizontal and vertical scaling approaches

Horizontal scaling is like adding more cooks to your kitchen: more servers handle more requests. Perfect for retrieval-heavy workloads. Vertical scaling? That’s upgrading to a bigger stove: more powerful instances for complex embedding generation. The smart play combines both: scale retrievers horizontally, and scale model serving vertically if you self-host models (with Bedrock’s managed models, Provisioned Throughput is the equivalent lever). AWS Auto Scaling groups make the horizontal side nearly painless.

D. High-availability and disaster recovery considerations

Downtime isn’t an option when executives depend on your RAG system. Multi-AZ deployments keep you running when an availability zone throws a tantrum. For true peace of mind, multi-region setups with automated failover are worth every penny. Don’t forget regular snapshots of your vector database – rebuilding those embeddings from scratch is nobody’s idea of fun. Test your recovery plan before disaster strikes, not during.

Advanced RAG Techniques and Enhancements

A. Implementing hybrid search strategies

Think dense vectors are enough for your RAG system? Think again. The real magic happens when you combine them with sparse retrieval methods like BM25. We’ve seen 15-20% accuracy jumps in our AWS Bedrock and Databricks implementations by using both. The dense vectors capture semantic meaning while keyword-based approaches catch those precise terms your vectors might miss.
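
A sketch of the dense-plus-sparse combination: Okapi BM25 for the keyword side (with a Lucene-style IDF that never goes negative), then min-max normalization so BM25 scores and cosine similarities share a 0-1 scale before blending. Whitespace tokenization and the `alpha` weight are simplifying assumptions; tune `alpha` on your own evaluation set.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each (non-empty list of) document(s) for the query."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    df = Counter(term for t in tokenized for term in set(t))
    query_terms = set(query.lower().split())
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(tokens) / avgdl))
        scores.append(score)
    return scores


def hybrid_scores(sparse: list[float], dense: list[float], alpha: float = 0.5) -> list[float]:
    """Min-max normalise both score lists, then blend: alpha * dense + (1 - alpha) * sparse."""
    def norm(xs: list[float]) -> list[float]:
        lo, hi = min(xs), max(xs)
        return [(x - lo) / (hi - lo) if hi > lo else 0.0 for x in xs]
    return [alpha * d + (1 - alpha) * s for s, d in zip(norm(sparse), norm(dense))]
```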

B. Knowledge graph integration for improved context

Knowledge graphs are the secret sauce for next-level RAG systems. By connecting your Databricks vector store with a graph database, your system doesn’t just retrieve isolated chunks—it understands relationships. One client saw their customer support accuracy jump from 72% to 91% after implementing this on AWS Bedrock. The graph provides the “why” behind the “what” in your retrieved data.
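
The relationship idea can be illustrated with a small graph expansion: starting from entities found in the retrieved chunks, collect the facts within one or two hops and add them to the prompt as extra context. An in-memory list of (subject, relation, object) triples stands in for a graph database here, and entity extraction from chunks is assumed to have happened already.

```python
from collections import deque


def expand_entities(seeds: set[str], edges: list[tuple[str, str, str]],
                    hops: int = 1) -> list[tuple[str, str, str]]:
    """Breadth-first collection of triples touching entities within `hops` of the seeds."""
    adjacency: dict[str, list[tuple[str, str, str]]] = {}
    for s, r, o in edges:
        adjacency.setdefault(s, []).append((s, r, o))
        adjacency.setdefault(o, []).append((s, r, o))
    seen = set(seeds)
    frontier = deque((entity, 0) for entity in seeds)
    facts: list[tuple[str, str, str]] = []
    while frontier:
        entity, depth = frontier.popleft()
        if depth >= hops:
            continue
        for triple in adjacency.get(entity, []):
            if triple not in facts:
                facts.append(triple)
            for neighbour in (triple[0], triple[2]):
                if neighbour not in seen:
                    seen.add(neighbour)
                    frontier.append((neighbour, depth + 1))
    return facts
```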

C. Multi-step reasoning with retrieval

Gone are the days of single-hop retrieval. Modern RAG systems built on AWS Bedrock can perform sequential reasoning: retrieve initial context, formulate follow-up queries, gather additional evidence, and build comprehensive answers. It’s like watching a detective work: each retrieval adds another puzzle piece until the full picture emerges. On multi-part questions, this approach can substantially cut hallucination rates, though the size of the gain depends on your data and is worth measuring.
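
The retrieve, follow up, and retrieve-again loop can be sketched as below. `retrieve` and `next_query` are hypothetical callables: in practice `next_query` would be an LLM call that proposes a follow-up search from the evidence so far, or returns `None` when it judges the evidence sufficient. The hop limit keeps cost and latency bounded.

```python
from typing import Callable, Optional


def iterative_retrieve(
    question: str,
    retrieve: Callable[[str], list[str]],
    next_query: Callable[[str, list[str]], Optional[str]],
    max_hops: int = 3,
) -> list[str]:
    """Accumulate evidence over several retrieval rounds until no follow-up query remains."""
    evidence: list[str] = []
    query: Optional[str] = question
    for _ in range(max_hops):
        if query is None:
            break
        for passage in retrieve(query):
            if passage not in evidence:  # avoid duplicate passages across hops
                evidence.append(passage)
        query = next_query(question, evidence)
    return evidence
```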

D. Techniques for handling temporal data and freshness

Time-aware RAG is a game-changer. By adding timestamp metadata to your Databricks vector store and implementing decay functions, you can prioritize recent information. We’ve built systems that automatically refresh high-volatility embeddings daily while keeping stable content cached longer. The result? RAG systems that stay current without constant reindexing of your entire knowledge base.
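
A simple decay function is exponential: a document’s score halves every `half_life_days`. The sketch below applies it to a similarity score; the half-life values are assumptions, and giving high-volatility sources a shorter half-life than stable ones mirrors the refresh policy described above.

```python
import math
from datetime import datetime, timezone
from typing import Optional


def decayed_score(similarity: float, updated_at: datetime, half_life_days: float = 30.0,
                  now: Optional[datetime] = None) -> float:
    """Scale similarity by 0.5 ** (age_days / half_life_days); future timestamps count as age 0."""
    if now is None:
        now = datetime.now(timezone.utc)
    age_days = max((now - updated_at).total_seconds() / 86400, 0.0)
    return similarity * math.pow(0.5, age_days / half_life_days)
```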

E. Multilingual RAG capabilities

Why limit your RAG system to one language? Using AWS Bedrock’s multilingual models combined with cross-lingual embeddings in Databricks, you can build systems that retrieve relevant content regardless of language. The approach requires careful alignment of embedding spaces but delivers powerful results—one financial client now handles queries across 17 languages from a single knowledge base.

Implementing a Retrieval-Augmented Generation system with AWS Bedrock and Databricks offers organizations a powerful way to enhance AI applications with context-rich, up-to-date information. By combining the managed foundation models of AWS Bedrock with Databricks’ unified analytics capabilities, companies can build RAG systems that deliver more accurate, relevant, and trustworthy AI-generated content. The architectural considerations, integration patterns, and optimization techniques we’ve explored provide a comprehensive framework for developing enterprise-grade RAG solutions that scale effectively.

As you embark on your RAG implementation journey, remember that the technology continues to evolve rapidly. Start with a focused use case, measure performance against clear business metrics, and iteratively enhance your system based on user feedback and emerging best practices. Whether you’re improving customer experiences, accelerating research, or automating documentation processes, a well-designed RAG system built on AWS Bedrock and Databricks can transform how your organization leverages AI to drive innovation and competitive advantage.