Large language models are transforming how we work with information, but building a notebook LLM that truly understands and remembers context requires more than just deploying a pre-trained model. This comprehensive guide is designed for developers, AI engineers, and technical professionals who want to create intelligent notebook systems that can retrieve relevant information, maintain conversation history, and provide contextually aware responses.

We’ll walk through the essential components that make notebook LLMs effective, starting with retrieval-augmented generation (RAG), which lets your system pull in relevant external knowledge when needed. You’ll also learn practical memory system design techniques that allow your notebook AI to remember previous interactions and build on past conversations. Finally, we’ll cover context engineering strategies that optimize how your LLM processes and responds to complex queries within notebook environments.

By the end of this tutorial, you’ll have the knowledge to build a robust notebook LLM architecture that combines smart retrieval, persistent memory, and intelligent context handling to create a truly useful AI assistant for research, note-taking, and knowledge management tasks.

Understanding the Foundation of Notebook LLMs

Define notebook LLMs and their unique capabilities

Notebook LLMs combine the interactive nature of computational notebooks with the reasoning power of large language models. Think of them as intelligent coding assistants that can remember what you’ve been working on, understand your project context, and help you build on previous work without starting from scratch each time.

Unlike traditional chatbots that treat each conversation as isolated interactions, notebook LLMs maintain persistent memory across sessions. They track your code execution history, remember variable states, understand your project goals, and can reference previous experiments or analyses you’ve conducted. This creates a collaborative environment where the AI becomes genuinely helpful for complex, multi-step projects.

The magic happens through their ability to blend code execution with natural language understanding. You can ask a notebook LLM to analyze data, generate visualizations, debug code, or explain complex concepts, and it will provide contextually relevant responses based on your current notebook state. This makes them particularly powerful for data science, research, and educational applications where iterative exploration is key.

Explore the differences between static and dynamic language models

Static language models operate like reference books – they contain vast knowledge but can’t update their understanding based on new information during a conversation. Once trained, their knowledge remains frozen in time, limited to their training data cutoff date.

Dynamic language model systems, which power notebook LLMs, adapt during interactions. The underlying model weights usually stay fixed; the adaptation comes from a working memory and retrieval layer that is updated with each exchange, allowing the system to build understanding incrementally. Here’s how they differ:

| Aspect | Static Models | Dynamic Models |
|---|---|---|
| Memory | No persistent memory between sessions | Maintains context across interactions |
| Learning | Fixed knowledge from training | Adapts to user patterns and preferences |
| Context | Limited to current conversation | Accumulates knowledge over time |
| Personalization | Generic responses | Tailored to individual workflows |

Dynamic models can learn your coding style, remember your project structure, and understand your domain-specific terminology. They create a personalized AI companion that grows more helpful as it learns your working patterns.

Identify key components that make notebook LLMs effective

Several core components work together to create effective notebook LLM systems:

Memory Architecture forms the backbone, consisting of short-term memory for immediate context and long-term memory for persistent knowledge. This dual-layer approach ensures the model can handle both quick queries and complex, ongoing projects.

Context Management handles the challenge of maintaining relevant information while discarding outdated details. Smart context engineering ensures the model focuses on what matters most for your current task without getting overwhelmed by irrelevant historical data.

Code Execution Environment provides a sandbox where the LLM can run code, test hypotheses, and see real results. This bridges the gap between theoretical knowledge and practical implementation.

RAG implementation allows the model to pull relevant information from external sources like documentation, previous notebooks, or domain-specific databases. This dramatically expands the model’s knowledge beyond its training data.

State Tracking monitors variables, imports, and execution history to maintain awareness of the current computational environment. This prevents errors and enables more intelligent suggestions.

Examine real-world applications and use cases

Notebook LLMs shine in scenarios requiring iterative problem-solving and continuous learning. Data scientists use them to explore datasets, test hypotheses, and document findings without losing context between analysis sessions. The AI remembers which visualizations worked best and can suggest similar approaches for new datasets.

Educational environments benefit tremendously from notebook LLMs. Students can ask questions about their code, get explanations for complex concepts, and receive personalized feedback based on their learning progress. The system adapts to each student’s pace and understanding level.

Research applications include literature review assistance, experiment planning, and result interpretation. The LLM can maintain awareness of research objectives across multiple sessions, helping researchers stay focused on their goals while exploring various approaches.

Business analysts leverage notebook LLMs for dashboard creation, report generation, and trend analysis. The persistent memory ensures consistency in metrics and methodologies across different reporting periods.

Software development teams use them for code review, documentation generation, and debugging assistance. The model learns team coding standards and can provide consistent feedback aligned with project requirements.

Implementing Retrieval-Augmented Generation (RAG)

Break down RAG architecture and core principles

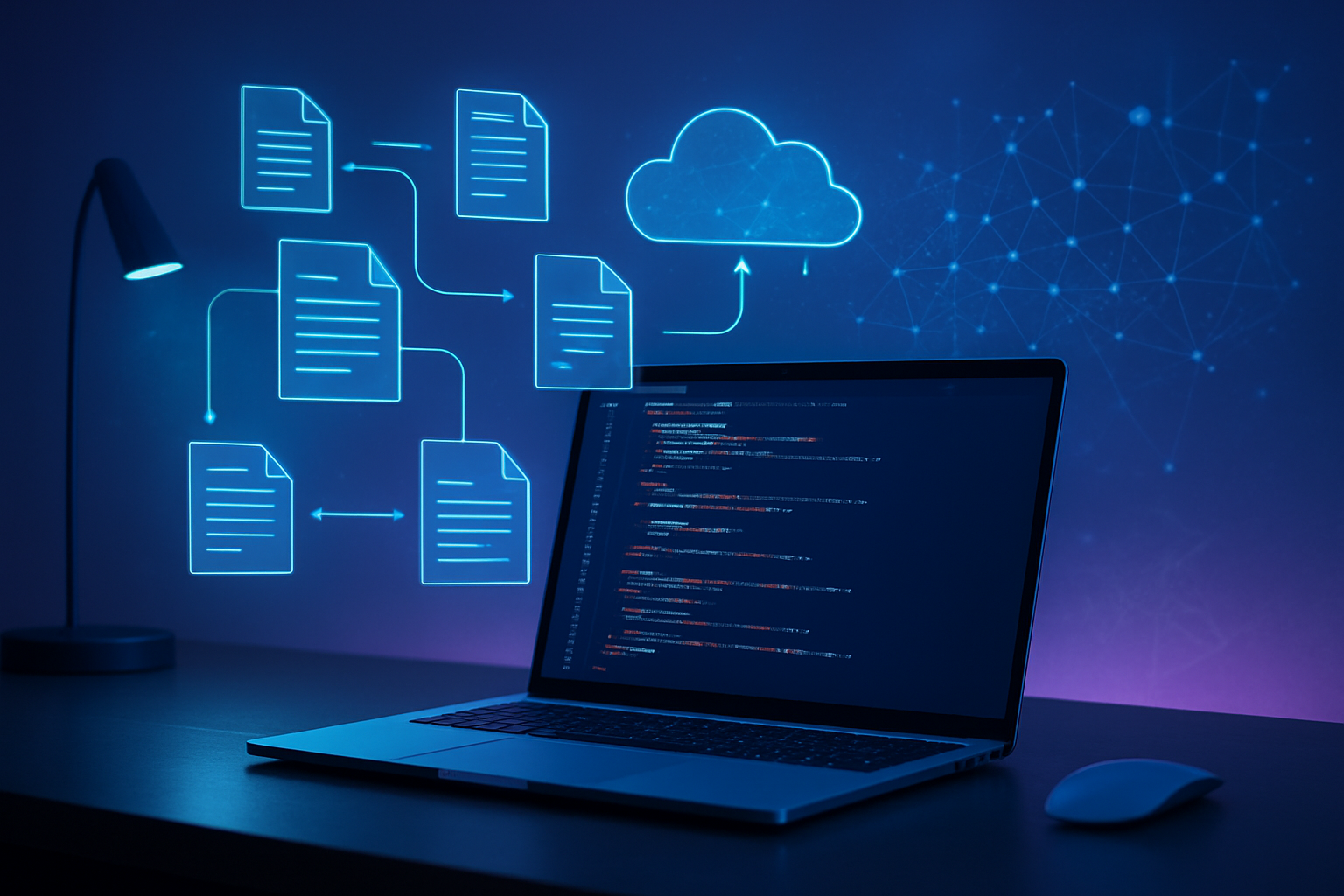

RAG implementation transforms your notebook LLM from a static knowledge system into a dynamic, information-rich assistant. The architecture operates on a simple yet powerful principle: when your model encounters a query, it first searches through external documents to find relevant context before generating a response.

The core RAG pipeline consists of three components. First, the retriever searches through your knowledge base using semantic similarity matching. Second, the reader processes both the retrieved documents and the original query to understand context. Finally, the generator combines this information to produce accurate, contextually aware responses.

Your RAG system needs two parallel workflows: indexing and querying. During indexing, documents get chunked into manageable pieces, converted to embeddings, and stored in a vector database. The querying workflow takes user input, converts it to embeddings, retrieves similar chunks, and feeds everything to your language model for response generation.

The magic happens in the embedding space where semantic relationships between concepts get captured as mathematical vectors. When someone asks “How do I optimize database performance?”, your system finds relevant chunks about indexing strategies, query optimization, and caching techniques, even if the exact words don’t match.
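The indexing and querying workflows can be sketched end to end in a few lines. This is a minimal illustration rather than a production design: `embed` is a toy bag-of-words stand-in for a real embedding model, `TinyIndex` is a hypothetical in-memory substitute for a vector database, and the sample chunks are invented. Unlike real embeddings, this toy version only matches chunks that share words with the query.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: term counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        # Indexing workflow: embed each chunk once and store it.
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Querying workflow: embed the query, rank stored chunks by similarity.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the question into one prompt for the LLM."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Context:\n{context}\n\nQuestion: {query}"


index = TinyIndex()
for chunk in [
    "Add an index on columns used in WHERE clauses to speed up database queries.",
    "Matplotlib can render bar charts and scatter plots.",
    "Cache frequent database query results to improve performance.",
]:
    index.add(chunk)

question = "How do I optimize database performance?"
top = index.search(question, k=2)
prompt = build_prompt(question, top)
```

The resulting `prompt` is what you would send to the language model; swapping `embed` for a real embedding model is what enables matches without shared keywords.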

Build effective document retrieval systems

Document retrieval systems form the backbone of your notebook LLM’s knowledge access. Start by implementing a robust chunking strategy that preserves semantic coherence while keeping chunks a manageable size. Chunks of 200 to 500 tokens typically work well, though the best size varies with your content type and use case.

Your chunking approach should respect document structure. For technical documentation, break at section headers and code blocks. For research papers, chunk by paragraphs while keeping related concepts together. Overlapping chunks by 50-100 tokens helps maintain context continuity across boundaries.
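A sliding window is one simple way to produce overlapping chunks. The sketch below assumes the text has already been tokenized into a list; the function name `chunk_tokens` and the default sizes are illustrative choices, and it does not attempt the structure-aware splitting (headers, code blocks) described above.

```python
def chunk_tokens(tokens: list[str], size: int = 300, overlap: int = 50) -> list[list[str]]:
    """Split tokens into windows of `size`, each sharing `overlap` tokens with the previous one."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the document
    return chunks


# Small demo with tiny numbers so the overlap is easy to see.
words = [f"w{i}" for i in range(10)]
chunks = chunk_tokens(words, size=4, overlap=1)
# chunks[0] ends with "w3" and chunks[1] starts with "w3"
```

In practice you would count tokens with the tokenizer of your embedding model rather than splitting on whitespace.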

Vector databases like Pinecone, Weaviate, or Chroma serve as your retrieval engine’s foundation. These systems excel at similarity search but require careful configuration:

| Database Type | Best For | Key Features |
|---|---|---|
| Pinecone | Production scale | Managed service, high performance |
| Weaviate | Complex schemas | GraphQL API, built-in ML models |
| Chroma | Local development | Easy setup, Python-native |

Implement hybrid retrieval combining dense and sparse methods. Dense retrieval using embeddings captures semantic similarity, while sparse methods like BM25 excel at exact keyword matching. A weighted combination often outperforms either method alone.
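One way to realize the weighted combination is to normalize each score set to a common range and then blend them. The sketch below assumes you already have per-document dense (embedding similarity) and sparse (for example BM25) scores; the function names, the weight `alpha`, and the sample scores are illustrative, and min-max normalization is just one of several reasonable fusion choices.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so dense and sparse scores become comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(dense: dict[str, float], sparse: dict[str, float],
                alpha: float = 0.6) -> list[tuple[str, float]]:
    """Blend normalized scores: alpha weights dense, (1 - alpha) weights sparse."""
    d, s = min_max(dense), min_max(sparse)
    fused = {
        doc: alpha * d.get(doc, 0.0) + (1 - alpha) * s.get(doc, 0.0)
        for doc in d.keys() | s.keys()
    }
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


# Made-up scores: doc_b does well in both lists, so it should rank first.
dense_scores = {"doc_a": 0.82, "doc_b": 0.79, "doc_c": 0.40}
bm25_scores = {"doc_b": 7.1, "doc_c": 6.5, "doc_d": 1.2}
ranking = hybrid_rank(dense_scores, bm25_scores, alpha=0.6)
```

A document missing from one list simply contributes zero from that side, which rewards documents that both retrievers agree on.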

Consider implementing semantic routing to direct queries to specialized knowledge bases. Medical questions go to health documents, while coding queries access technical documentation. This targeted approach reduces noise and improves retrieval precision.

Optimize embedding strategies for better context matching

Embedding optimization directly impacts your notebook LLM’s ability to find relevant context. Choose embedding models that align with your domain and use case. General-purpose models like OpenAI’s text-embedding-ada-002 work well for broad applications, while domain-specific models excel in specialized areas.

Fine-tuning embeddings on your specific dataset dramatically improves retrieval quality. Create training pairs from your documents where semantically related chunks should have similar embeddings. Use techniques like contrastive learning to push relevant documents closer together in embedding space while separating unrelated content.

Implement multi-level embedding strategies for complex documents. Create embeddings for different granularities: document-level for broad topic matching, section-level for moderate specificity, and chunk-level for precise context retrieval. This hierarchical approach enables more nuanced retrieval decisions.

Pre-processing significantly affects embedding quality. Clean your text by removing excessive whitespace, normalizing formatting, and handling special characters consistently. For code documentation, preserve code fences and language markers, since they carry semantic meaning about what the enclosed text is.

Consider embedding enrichment techniques:

- Add metadata like document type, creation date, and author information

- Include surrounding context from parent sections

- Append relevant keywords or tags to chunks

- Use prompt engineering to generate embedding-friendly summaries

Query expansion improves retrieval recall by generating variations of user queries. Use your language model to create synonyms, related concepts, and alternative phrasings before embedding the query. This technique helps catch relevant documents that might use different terminology.

Integrate external knowledge sources seamlessly

Knowledge source integration requires careful orchestration to maintain response quality and system performance. Start by categorizing your sources based on update frequency, authority level, and content type. Real-time sources like APIs need different handling than static documents or databases.

Build a unified ingestion pipeline that can handle diverse data formats. Your system should process PDFs, web pages, databases, APIs, and structured data formats like JSON or CSV. Use format-specific processors that preserve important structural information during conversion to text chunks.

Implement source attribution and citation tracking throughout your RAG pipeline. When your notebook LLM provides answers, users need to know where information originated. Track document IDs, chunk locations, and confidence scores to enable transparent sourcing in responses.

Create a knowledge freshness management system. Set up automated pipelines to detect when external sources update and trigger re-indexing. For rapidly changing content like news or stock prices, consider implementing real-time retrieval that bypasses the vector database entirely.

Design fallback strategies for when external sources become unavailable. Your notebook LLM should gracefully handle API failures, network timeouts, or corrupted data by falling back to cached information or alternative sources. Implement circuit breakers to prevent cascading failures across your knowledge infrastructure.

Source prioritization algorithms help manage conflicting information across multiple knowledge bases. Implement confidence scoring based on source authority, recency, and retrieval similarity scores. When multiple sources provide different answers, your system should present the most reliable information while noting potential conflicts.

Consider implementing incremental updates rather than full re-indexing for large knowledge bases. Track document changes at the chunk level and only update modified portions. This approach significantly reduces computational overhead while keeping your knowledge base current.
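Chunk-level change tracking can be done by comparing content hashes. The sketch below assumes each chunk has a stable ID and that you keep the hash from the last indexing run; `plan_update` and the chunk IDs are hypothetical names used for illustration.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Content hash used to detect whether a chunk changed since the last run."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_update(stored: dict[str, str], current_chunks: dict[str, str]) -> dict[str, list[str]]:
    """Compare stored hashes (chunk_id -> hash) with current text (chunk_id -> text)."""
    current = {cid: fingerprint(text) for cid, text in current_chunks.items()}
    return {
        "add": sorted(cid for cid in current if cid not in stored),
        "update": sorted(cid for cid in current if cid in stored and stored[cid] != current[cid]),
        "delete": sorted(cid for cid in stored if cid not in current),
    }


# Hashes recorded during the previous indexing run (illustrative data).
stored_hashes = {
    "intro#0": fingerprint("Welcome."),
    "setup#0": fingerprint("Run the installer."),
    "old#0": fingerprint("Deprecated."),
}
plan = plan_update(stored_hashes, {
    "intro#0": "Welcome.",                # unchanged, so no work needed
    "setup#0": "Run the new installer.",  # modified, so re-embed
    "faq#0": "New section.",              # new, so embed and insert
})
```

Only the chunks listed under `add` and `update` need new embeddings, and those under `delete` can be removed from the vector store.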

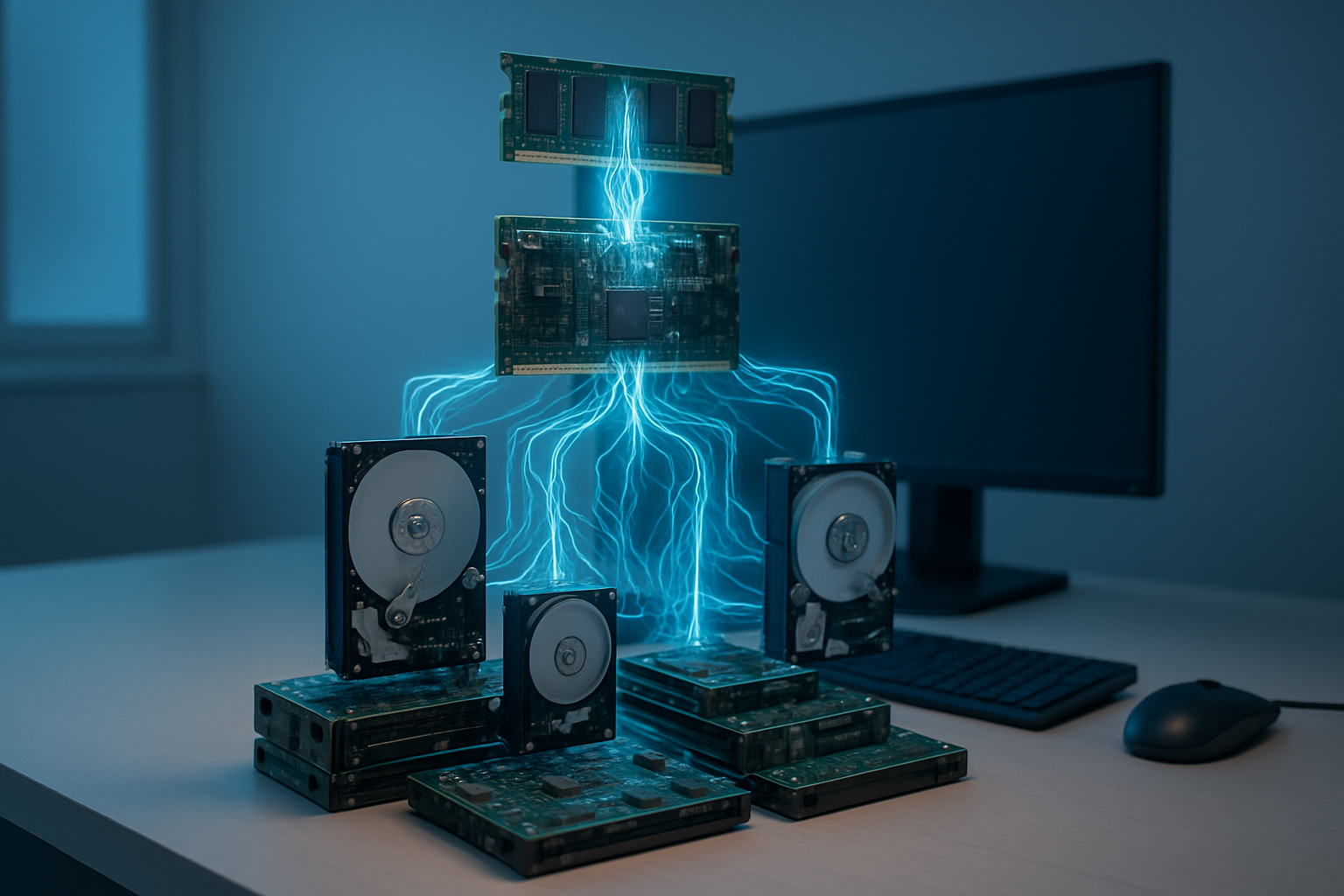

Designing Effective Memory Systems

Create Persistent Memory Storage Mechanisms

Effective memory systems design forms the backbone of any successful notebook LLM implementation. The key lies in establishing robust persistent memory storage mechanisms that can maintain context across sessions while ensuring data integrity and quick retrieval.

The foundation starts with choosing the right storage approach. Vector databases like Pinecone, Weaviate, or Chroma excel at storing and retrieving contextual information based on semantic similarity. These solutions automatically handle the complex mathematics behind embedding comparisons, making them ideal for notebook LLM applications where context relevance matters more than exact keyword matches.

For structured data storage, implement a hybrid approach combining relational databases for metadata and vector stores for embeddings. This dual-layer architecture ensures both fast retrieval and comprehensive context preservation:

| Storage Type | Use Case | Performance | Complexity |
|---|---|---|---|
| Vector DB | Semantic search, context retrieval | High | Medium |
| SQL Database | Metadata, relationships | Medium | Low |
| Document Store | Raw content, full conversations | Medium | Low |
| Memory Cache | Frequent access patterns | Very High | Medium |

Consider implementing memory compression techniques to optimize storage efficiency. Instead of storing every token, focus on extracting and preserving key concepts, entities, and relationship patterns that define the conversation’s essence.

Implement Conversation History Tracking

Building comprehensive conversation history tracking requires more than simply logging every exchange. Smart notebook LLMs maintain contextual threads that connect related discussions across different sessions and topics.

Start by implementing a conversation graph structure where each interaction becomes a node connected to previous relevant exchanges. This graph-based approach lets your retrieval layer trace how topics evolve and branch throughout extended conversations.

Session management becomes critical for long-running notebook environments. Design a system that can:

- Segment conversations by topic clusters using embedding similarity

- Maintain user preference patterns across different notebook sessions

- Track concept evolution as ideas develop through multiple interactions

- Preserve decision trees showing how conclusions were reached

Memory indexing strategies should balance granularity with performance. Store conversation snippets at multiple levels – individual exchanges, topic blocks, and session summaries. This multi-resolution approach enables both precise context retrieval and broader thematic understanding.

The tracking system should also capture metadata beyond just text content. User interaction patterns, response quality ratings, and topic drift indicators provide valuable signals for memory prioritization algorithms.

Develop Memory Prioritization and Cleanup Strategies

Memory systems design isn’t complete without intelligent prioritization and cleanup mechanisms. Notebook LLMs accumulate vast amounts of conversational data, making selective retention essential for maintaining performance and relevance.

Implement a multi-factor scoring system for memory importance:

Recency weighting: Recent interactions carry higher relevance scores, but apply exponential decay rather than linear reduction. This ensures important older context doesn’t disappear too quickly while keeping recent exchanges prominent.

Frequency patterns: Conversations that reference similar concepts repeatedly indicate ongoing importance. Track concept co-occurrence across sessions to identify persistent themes worthy of long-term retention.

User engagement signals: High-quality exchanges marked by user satisfaction, follow-up questions, or explicit bookmarking deserve priority preservation. These signals often indicate breakthrough moments or particularly valuable insights.

Context interconnectedness: Memories that connect to multiple conversation threads serve as valuable bridges in your knowledge graph. Preserve these hub memories even if they’re older, as they enable better context engineering across diverse topics.
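The four factors above can be combined into a single importance score. The following is a sketch under stated assumptions: the `Memory` fields, the 14-day half-life, the saturation constants, and the 0.4/0.2/0.2/0.2 weights are all illustrative values to tune, not recommendations from a specific system.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    age_days: float       # time since the memory was created or last used
    reference_count: int  # how often later conversations referred back to it
    bookmarked: bool      # explicit user engagement signal
    linked_threads: int   # number of conversation threads it connects


def importance(m: Memory, half_life_days: float = 14.0) -> float:
    """Weighted score in [0, 1]; higher means keep longer."""
    recency = 0.5 ** (m.age_days / half_life_days)   # exponential decay
    frequency = 1 - math.exp(-m.reference_count / 3)  # saturates with repeated use
    engagement = 1.0 if m.bookmarked else 0.0
    connectivity = min(m.linked_threads, 5) / 5       # capped hub bonus
    return 0.4 * recency + 0.2 * frequency + 0.2 * engagement + 0.2 * connectivity


fresh_chat = Memory("quick question about imports", 0, 0, False, 0)
old_hub = Memory("decision on the evaluation metric", 28, 6, True, 5)
old_noise = Memory("thanks!", 28, 0, False, 0)

ranked = sorted([fresh_chat, old_hub, old_noise], key=importance, reverse=True)
```

With these weights the month-old hub memory outranks a brand-new but unreferenced exchange, which matches the goal of preserving older context that still connects many threads.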

Cleanup strategies should operate on different timescales. Implement daily cleanup for obvious noise and low-value exchanges, weekly analysis for medium-term pattern recognition, and monthly deep cleaning for comprehensive memory optimization. This tiered approach prevents performance degradation while preserving valuable long-term context that makes notebook LLM interactions genuinely intelligent and personalized.

Advanced cleanup algorithms can also identify redundant information and consolidate similar memories into more compact representations without losing essential context. This compression maintains the knowledge while reducing storage requirements and retrieval latency.

Mastering Context Engineering Techniques

Craft optimal prompt structures for enhanced performance

Building effective prompts for your notebook LLM requires understanding how these models process information. The key lies in creating structured templates that guide the model toward consistent, high-quality responses while maintaining flexibility for different use cases.

Start with a clear role definition that establishes the LLM’s identity and expertise level. Follow this with explicit instructions that break down the task into manageable components. Include examples whenever possible – these serve as powerful anchors that help the model understand the expected output format and quality.

Role: Expert notebook assistant specializing in [domain]

Task: [Specific objective]

Context: [Relevant background information]

Format: [Expected output structure]

Examples: [1-2 concrete examples]
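The template above can be filled programmatically so every request follows the same structure. This is a small sketch using plain string formatting; `render_prompt` and the sample values are illustrative, and a real system might use a templating library instead.

```python
PROMPT_TEMPLATE = """\
Role: Expert notebook assistant specializing in {domain}
Task: {task}
Context: {context}
Format: {output_format}
Examples:
{examples}"""


def render_prompt(domain: str, task: str, context: str,
                  output_format: str, examples: list[str]) -> str:
    """Fill the template; examples are numbered so the model can tell them apart."""
    numbered = "\n".join(f"{i}. {ex}" for i, ex in enumerate(examples, start=1))
    return PROMPT_TEMPLATE.format(
        domain=domain, task=task, context=context,
        output_format=output_format, examples=numbered,
    )


prompt = render_prompt(
    domain="pandas data analysis",
    task="Explain why the merge produced duplicate rows",
    context="df_orders has 1,200 rows; df_customers has repeated customer_id values",
    output_format="Short explanation followed by a corrected code cell",
    examples=["Q: Why is my groupby slow? A: Check for object-typed key columns."],
)
```

Keeping the template in one constant makes it easy to version and test prompt changes separately from application code.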

Chain-of-thought prompting works particularly well for notebook LLMs, as it mirrors the natural thinking process users expect. Encourage the model to show its reasoning steps, which not only improves accuracy but also helps users understand how conclusions were reached.

Manage context windows and token limitations effectively

Context window management becomes critical when building a notebook LLM that needs to maintain awareness across multiple interactions and documents. Context limits vary by model, commonly from about 4K to 200K tokens or more, and exceeding the limit means the request is rejected or earlier content has to be truncated.

Implement a token counting system that tracks usage throughout conversations. When approaching limits, prioritize the most recent interactions and the most relevant retrieved information. Create a hierarchy system where certain types of content (like user queries and direct responses) receive higher priority than background information.

| Priority Level | Content Type | Token Allocation |
|---|---|---|
| Critical | Current query & response | 20-30% |
| High | Recent conversation history | 25-35% |
| Medium | Retrieved relevant documents | 30-40% |
| Low | Background context | 10-15% |

Use compression techniques like summarization for older conversation segments. When context windows fill up, summarize earlier portions of the conversation instead of simply truncating them. This preserves the narrative flow while freeing up space for new information.
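A priority-based allocation like the one in the table can be enforced with a small budgeting step before each request. The sketch below makes several assumptions: `count_tokens` is a crude whitespace stand-in for your model's tokenizer, the percentage shares are one choice within the table's ranges, and items that do not fit are dropped here, where a fuller system would summarize them instead.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in: word count. Swap in your model's tokenizer."""
    return len(text.split())


def take_within(items: list[str], budget: int) -> list[str]:
    """Take items from the front until the next one would exceed the budget."""
    kept, used = [], 0
    for item in items:
        cost = count_tokens(item)
        if used + cost > budget:
            break
        kept.append(item)
        used += cost
    return kept


# Percent of the window per category; the remaining 25% is reserved
# for the current query and the model's response.
SHARES = {"history": 30, "documents": 35, "background": 10}


def assemble_context(history: list[str], documents: list[str],
                     background: list[str], window: int) -> dict[str, list[str]]:
    budget = {name: window * pct // 100 for name, pct in SHARES.items()}
    # History is oldest-first, so walk it backwards to keep the newest turns.
    recent = take_within(list(reversed(history)), budget["history"])
    return {
        "history": list(reversed(recent)),
        # Documents are assumed to be sorted most-relevant-first.
        "documents": take_within(documents, budget["documents"]),
        "background": take_within(background, budget["background"]),
    }


context = assemble_context(
    history=["one two three four", "five six seven", "eight nine ten"],
    documents=["alpha beta gamma delta epsilon", "zeta eta theta"],
    background=["long background note here"],
    window=20,
)
```

With a 20-token window the oldest history turn, the second document, and the background note are all dropped, which is the point at which summarization would take over.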

Design adaptive context switching mechanisms

Smart context switching allows your notebook LLM to seamlessly transition between different topics, projects, or modes of operation without losing relevant information. This requires building a context state manager that tracks what information remains relevant as conversations evolve.

Create context profiles for different types of interactions – research mode, creative writing, problem-solving, or data analysis. Each profile should define what information stays persistent and what can be deprioritized when switching contexts.

Implement trigger mechanisms that detect context shifts through keyword analysis, explicit user commands, or conversation flow patterns. When a shift occurs, the system should gracefully transition by:

- Saving the current context state

- Loading the appropriate new context profile

- Maintaining bridges between related topics

- Preserving user preferences and settings

Implement dynamic context expansion strategies

Dynamic context expansion allows your notebook LLM to pull in additional relevant information as conversations deepen or complexity increases. This goes beyond simple RAG retrieval by intelligently expanding the context based on the current discussion trajectory.

Build expansion triggers based on uncertainty detection, user questions that require deeper knowledge, or when the model identifies gaps in the available information. Use confidence scoring to determine when additional context would be beneficial.

Create expansion strategies that work at multiple levels:

- Document-level expansion: Pull in related sections from the same source

- Topic-level expansion: Retrieve information from related but different sources

- Temporal expansion: Include historical context or previous related discussions

- Cross-domain expansion: Connect insights from different knowledge areas

Monitor expansion effectiveness by tracking whether additional context leads to more accurate or helpful responses. Avoid over-expansion, which can dilute focus and waste computational resources.

Balance context relevance with computational efficiency

Achieving the right balance between comprehensive context and computational efficiency requires careful optimization of your retrieval and ranking systems. Not all context carries equal value, and including irrelevant information can actually hurt performance while wasting resources.

Implement relevance scoring that considers multiple factors: semantic similarity to the current query, recency of information, user interaction history, and document authority. Use these scores to create a dynamic threshold system that adapts based on query complexity and available computational resources.

Build a context quality feedback loop by monitoring user satisfaction with responses and correlating this with the context selection decisions. When users ask follow-up questions or express confusion, analyze whether better context selection might have prevented the issue.

Consider implementing a multi-tier context system where high-relevance information loads immediately while lower-priority context loads on-demand. This approach provides responsive initial responses while still making comprehensive information available when needed.

Use caching strategies to store frequently accessed context combinations, reducing the computational overhead of repeated retrievals. Monitor cache hit rates and adjust retention policies based on actual usage patterns rather than theoretical assumptions.

Building Your Notebook LLM Architecture

Select the right base models and frameworks

Choosing your base model sets the foundation for your entire notebook LLM architecture. Popular options include Llama 2, Mistral, and Code Llama for open-source solutions, while GPT-4 and Claude offer robust API-based alternatives. Consider your specific use case – Code Llama excels at programming tasks, while Mistral provides excellent general reasoning capabilities.

Framework selection depends on your deployment requirements and technical expertise. LangChain offers comprehensive RAG implementation tools with extensive community support, making it ideal for rapid prototyping. LlamaIndex specializes in document indexing and retrieval, perfect for knowledge-heavy notebook applications. For production environments, consider Haystack or custom implementations using Transformers and FAISS.

| Framework | Best For | Learning Curve | Production Ready |
|---|---|---|---|
| LangChain | Rapid development | Moderate | Yes |
| LlamaIndex | Document-heavy RAG | Low | Yes |
| Haystack | Enterprise deployment | High | Yes |
| Custom Build | Maximum control | Very High | Depends |

Design modular components for scalability

Your notebook LLM architecture should embrace modularity from day one. Break down functionality into distinct components: retrieval engines, memory managers, context processors, and response generators. This approach allows independent scaling and easier maintenance as your system grows.

Create clear interfaces between components using dependency injection patterns. Your retrieval component should expose standardized methods regardless of whether you’re using vector databases like Pinecone, Weaviate, or local FAISS indices. This flexibility lets you swap implementations without rewriting your entire codebase.
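In Python, a `typing.Protocol` is one lightweight way to define such an interface and inject the implementation. The classes below are hypothetical examples: `KeywordRetriever` is a toy word-overlap search and `StaticRetriever` is a test double; a Pinecone, Weaviate, or FAISS backend would be wrapped in a class exposing the same `retrieve` method.

```python
from typing import Protocol


class Retriever(Protocol):
    """Interface every retrieval backend must satisfy."""

    def retrieve(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """Toy backend: ranks documents by number of words shared with the query."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        scored = [(len(terms & set(d.lower().split())), d) for d in self.docs]
        hits = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
        return [d for _, d in hits[:k]]


class StaticRetriever:
    """Test double that returns canned results."""

    def __init__(self, results: list[str]) -> None:
        self.results = results

    def retrieve(self, query: str, k: int) -> list[str]:
        return self.results[:k]


class Assistant:
    """Depends only on the Retriever interface, not on a concrete backend."""

    def __init__(self, retriever: Retriever) -> None:
        self.retriever = retriever

    def context_for(self, query: str, k: int = 3) -> str:
        return "\n".join(self.retriever.retrieve(query, k))


assistant = Assistant(KeywordRetriever(["pandas groupby tips", "numpy broadcasting"]))
context = assistant.context_for("groupby in pandas")
```

Because `Assistant` receives its retriever through the constructor, switching backends or substituting a test double requires no change to the assistant itself.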

Design your memory system as a separate service that can handle both short-term conversation context and long-term user preferences. Use a message broker such as RabbitMQ, or Redis through its pub/sub or Streams features, to decouple components and enable horizontal scaling when traffic increases.

Consider implementing a plugin architecture where new capabilities can be added without touching core functionality. This modular approach proves invaluable when integrating specialized tools like code execution environments or external APIs.

Implement data pipelines for continuous learning

Building effective data pipelines keeps your notebook LLM architecture current and responsive to new information. Design ingestion workflows that can handle multiple data sources – documents, code repositories, web content, and user interactions. Apache Airflow or Prefect can orchestrate these complex pipelines with proper scheduling and monitoring.

Your pipeline should include data validation, preprocessing, and embedding generation stages. Implement incremental updates rather than full rebuilds to maintain system responsiveness. Use change detection mechanisms to identify new or modified content, then update your vector store accordingly.

Create feedback loops that capture user interactions and system performance metrics. This data becomes crucial for fine-tuning and improving retrieval accuracy over time. Store interaction logs in structured formats that enable easy analysis and model improvement.

Set up automated quality checks throughout your pipeline. Monitor embedding quality, detect data drift, and flag potential issues before they impact user experience. Regular pipeline audits help maintain data freshness and system reliability.

Create robust error handling and fallback systems

Production notebook LLM systems need comprehensive error handling strategies. Network timeouts, API rate limits, and model failures happen regularly, so plan for graceful degradation rather than complete system failure.

Implement circuit breaker patterns for external API calls. When your primary model becomes unavailable, automatically switch to backup models or cached responses. This keeps your notebook LLM functional even during service disruptions.
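A circuit breaker can be sketched in a few dozen lines. This minimal version assumes a single-threaded caller and treats any exception as a failure; the class, its parameters, and the `unreliable_model` function are illustrative, and production code would typically add locking and distinguish error types.

```python
import time


class CircuitBreaker:
    """After max_failures consecutive errors, skip the primary for reset_after seconds."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock=time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, primary, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()   # open: do not touch the failing service
            self.opened_at = None   # half-open: allow one trial call
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0           # success closes the circuit fully
        return result


def unreliable_model() -> str:
    raise TimeoutError("primary model unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=30.0)
answers = [breaker.call(unreliable_model, lambda: "cached answer") for _ in range(3)]
```

After the second failure the breaker opens, so the third call goes straight to the fallback without waiting on the unavailable model.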

Design your retrieval system with multiple fallback layers. If vector search fails, fall back to keyword search. If external knowledge bases are unreachable, use locally cached information. Always provide some response rather than leaving users with error messages.
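The layered fallback can be expressed as an ordered list of strategies tried in turn. In this sketch the three search functions are hypothetical placeholders that simulate a failing vector store, an empty keyword search, and a working cache; `first_successful` is an illustrative helper name.

```python
def first_successful(query: str, strategies):
    """Try each (name, function) pair in order; return the first non-empty result."""
    errors = []
    for name, strategy in strategies:
        try:
            results = strategy(query)
        except Exception as exc:
            errors.append(f"{name}: {exc}")  # record the failure and move on
            continue
        if results:
            return name, results, errors
    return "none", [], errors


def vector_search(query: str) -> list[str]:
    raise ConnectionError("vector store unreachable")  # simulated outage


def keyword_search(query: str) -> list[str]:
    return []  # simulated: no keyword hits


def cached_lookup(query: str) -> list[str]:
    return ["cached note about " + query]


source, results, errors = first_successful(
    "pandas merge",
    [("vector", vector_search), ("keyword", keyword_search), ("cache", cached_lookup)],
)
```

Returning the name of the layer that answered lets you tell users when a response came from cached or degraded sources, and the collected errors feed your monitoring.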

Create comprehensive logging and monitoring systems that track system health, response times, and error rates. Use tools like Prometheus and Grafana to visualize system performance and identify issues before they impact users.

Build retry logic with exponential backoff for transient failures. Rate limiting protection prevents cascading failures when external services experience high load. These defensive programming practices ensure your notebook LLM architecture remains stable under various stress conditions.
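Retry with exponential backoff might look like the sketch below. The function name, default delays, and the set of retryable exceptions are assumptions to adapt; the `sleep` parameter is injectable so the demo and tests do not actually wait.

```python
import random
import time


def retry(func, attempts: int = 4, base_delay: float = 0.5, max_delay: float = 8.0,
          sleep=time.sleep, retry_on=(TimeoutError, ConnectionError)):
    """Call func, retrying transient errors with doubling delays plus small jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter of up to 10% keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


attempts_seen = []


def flaky_call() -> str:
    """Simulated service that fails twice, then succeeds."""
    attempts_seen.append(1)
    if len(attempts_seen) < 3:
        raise TimeoutError("temporary")
    return "ok"


delays = []
result = retry(flaky_call, sleep=delays.append)  # record delays instead of sleeping
```

Errors not listed in `retry_on` propagate immediately, which avoids retrying failures that will never succeed, such as invalid requests.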

Optimizing Performance and Reliability

Fine-tune retrieval accuracy and response quality

Getting your notebook LLM to consistently deliver accurate and relevant responses requires careful tuning of your retrieval mechanisms. Start by analyzing your retrieval metrics: precision, recall, and semantic similarity scores. As a rough starting point, aim for retrieval precision above 85% and semantic similarity scores above 0.7, then calibrate both targets against your own evaluation set, since similarity scales differ between embedding models.
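Precision and recall are straightforward to compute once you have a small labeled set of queries with known relevant documents. The sketch below uses invented document IDs, and `precision_recall_at_k` is an illustrative helper rather than a library function.

```python
def precision_recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> tuple[float, float]:
    """Precision: share of the top-k results that are relevant.
    Recall: share of all relevant documents that appear in the top k."""
    top_k = retrieved[:k]
    hits = sum(1 for doc in top_k if doc in relevant)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# One labeled query: the retriever's ranked output and the known relevant set.
retrieved_ids = ["d1", "d4", "d2", "d9", "d3"]
relevant_ids = {"d1", "d2", "d3", "d7"}

precision, recall = precision_recall_at_k(retrieved_ids, relevant_ids, k=4)
# Two of the top four results are relevant, and two of four relevant docs were found.
```

Averaging these values over a set of representative queries gives a baseline you can compare against after each change to chunking, embeddings, or ranking.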

Experiment with different embedding models to find the sweet spot for your domain. While general-purpose models like OpenAI’s text-embedding-ada-002 work well for broad content, specialized models often outperform on technical or domain-specific notebooks. Consider fine-tuning embeddings on your specific corpus using techniques like contrastive learning or triplet loss.

Query expansion dramatically improves retrieval quality. Implement multiple query formulations – synonyms, related terms, and paraphrases – then combine results using weighted ranking. This approach catches relevant content that might not match exact query terms.

Response quality improves when you implement relevance filtering. Set dynamic thresholds based on query complexity and confidence scores. Simple factual queries can use lower thresholds, while complex analytical questions need higher relevance bars to avoid hallucinations.

Regular A/B testing helps identify which retrieval strategies work best for your users. Track metrics like response accuracy, user satisfaction scores, and task completion rates to guide your optimization efforts.

Implement caching strategies for faster responses

Smart caching transforms sluggish notebook LLM performance into snappy, responsive interactions. Multi-level caching strategies work best – implement semantic caching for similar queries, result caching for identical requests, and embedding caching for frequently accessed documents.

Semantic caching often offers the biggest performance gains. Instead of requiring exact query matches, cache responses based on semantic similarity. When a new query comes in, check whether you’ve already answered something similar (a similarity threshold of 0.9 or higher is a reasonable starting point). In workloads with many repeated or near-duplicate questions, this approach can reduce LLM API calls by as much as 60-80%; measure the actual savings on your own traffic.
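A semantic cache reduces to a nearest-neighbor lookup with a similarity cutoff. The sketch below assumes queries have already been embedded; the three-dimensional vectors are hand-made for illustration, and the linear scan would be replaced by a vector index once the cache grows.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a cached response when a previous query was similar enough."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.entries: list[tuple[list[float], str]] = []

    def get(self, embedding: list[float]):
        best_score, best_response = 0.0, None
        for cached_embedding, response in self.entries:
            score = cosine(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, embedding: list[float], response: str) -> None:
        self.entries.append((embedding, response))


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0, 0.2], "Use df.merge(..., validate='one_to_one') to catch duplicates.")

near_duplicate = cache.get([0.9, 0.1, 0.2])  # very similar direction: cache hit
unrelated = cache.get([0.0, 1.0, 0.0])       # orthogonal vector: cache miss
```

On a miss you would call the LLM and then `put` the new embedding and response so the next similar query is served from the cache.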

Redis or Memcached serve as excellent caching backends for production systems. Store embeddings, retrieved chunks, and generated responses with appropriate TTL values. Document embeddings rarely change, so cache them for hours or days. Generated responses should expire more frequently to ensure freshness.

Implement cache warming for common queries and popular notebook sections. Precompute responses for frequently asked questions, standard code explanations, and common debugging scenarios. This proactive approach eliminates cold start latencies during peak usage.

Monitor cache hit rates and adjust strategies accordingly. Target 70%+ hit rates for optimal performance. Low hit rates suggest your semantic similarity thresholds might be too strict, while very high rates could indicate stale or overly broad caching.

Monitor system performance and identify bottlenecks

Effective monitoring reveals where your notebook LLM struggles before users notice problems. Track key performance indicators across your entire pipeline – from query processing to response generation.

Break response latency into components so you can see where the time goes: query processing (target under 100 ms), retrieval operations (under 500 ms), LLM inference (under 2 to 3 seconds), and post-processing (under 200 ms). Any component that regularly exceeds its budget needs attention.
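A per-stage timer is one lightweight way to collect this breakdown. The sketch below uses the budgets from the paragraph above (taking the upper 3-second bound for inference); the `StageTimer` class and the stage names are hypothetical.

```python
import time
from contextlib import contextmanager

# Latency budgets in seconds, taken from the targets in the text.
BUDGETS = {
    "query_processing": 0.1,
    "retrieval": 0.5,
    "llm_inference": 3.0,
    "post_processing": 0.2,
}


class StageTimer:
    """Record wall-clock time per pipeline stage for a single request."""

    def __init__(self):
        self.timings: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the stage raises.
            self.timings[name] = time.perf_counter() - start

    def over_budget(self) -> list[str]:
        """Names of stages that exceeded their budget; unknown stages pass."""
        return [
            name
            for name, seconds in self.timings.items()
            if seconds > BUDGETS.get(name, float("inf"))
        ]


timer = StageTimer()
with timer.stage("query_processing"):
    tokens = "how do I merge two dataframes".split()  # stand-in for real work
```

In a real pipeline each stage would wrap the corresponding call, and `timer.timings` would be logged with the request so slow components show up in your dashboards.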

Set up comprehensive logging for every system component. Log query patterns, retrieval success rates, LLM token usage, and error frequencies. Tools like Elasticsearch (typically paired with Kibana for dashboards) or Datadog help visualize patterns and spot anomalies quickly.

Memory usage patterns reveal scaling challenges early. Monitor RAM consumption during peak loads, especially for embedding storage and model inference. Sudden spikes often indicate memory leaks or inefficient batch processing.

| Metric | Threshold | Action Required |
|---|---|---|
| Response Time | >5 seconds | Optimize retrieval or add caching |
| Error Rate | >2% | Review query handling and fallbacks |
| Memory Usage | >80% | Scale horizontally or optimize storage |
| Cache Hit Rate | <60% | Adjust semantic similarity thresholds |

Implement automated alerts for critical thresholds. Errors severe enough to page an on-call engineer, sustained high latency, or memory exhaustion require immediate attention. Less critical metrics can trigger daily or weekly reports.
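The thresholds in the table above translate directly into a small rule check. This is a sketch only: the metric names, the `Rule` dataclass, and the `check` function are hypothetical, and a real deployment would more likely express these rules in its monitoring tool rather than in application code.

```python
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    metric: str
    breached: Callable[[float, float], bool]  # compares value to threshold
    threshold: float
    action: str


# Thresholds and actions mirror the table; rates are expressed as fractions.
RULES = [
    Rule("response_time_s", operator.gt, 5.0, "Optimize retrieval or add caching"),
    Rule("error_rate", operator.gt, 0.02, "Review query handling and fallbacks"),
    Rule("memory_usage", operator.gt, 0.80, "Scale horizontally or optimize storage"),
    Rule("cache_hit_rate", operator.lt, 0.60, "Adjust semantic similarity thresholds"),
]


def check(metrics: dict[str, float]) -> list[str]:
    """Return one alert message per breached rule; missing metrics are skipped."""
    alerts = []
    for rule in RULES:
        value = metrics.get(rule.metric)
        if value is not None and rule.breached(value, rule.threshold):
            alerts.append(f"{rule.metric}={value}: {rule.action}")
    return alerts


alerts = check(
    {
        "response_time_s": 6.2,
        "error_rate": 0.01,
        "memory_usage": 0.55,
        "cache_hit_rate": 0.4,
    }
)
```

Here only the response time and cache hit rate rules fire, so `alerts` contains two messages that could be routed to a pager or a daily report depending on severity.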

Scale your notebook LLM for production environments

Production scaling requires architectural changes beyond simply adding more servers. Design your notebook LLM architecture with horizontal scaling in mind from day one.

Separate stateless and stateful components. Query processing, embedding generation, and response formatting should be stateless services that scale easily. Memory systems and user context require careful state management across multiple instances.

Load balancing strategies differ for LLM workloads compared to traditional web applications. Implement intelligent routing that considers current system load, model availability, and query complexity. Simple round-robin routing often creates performance hotspots with mixed query types.
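To show how load-aware routing differs from round-robin, the sketch below sends each query to the healthy backend with the least estimated work in flight. The `Backend` class, the word-count cost estimate, and the backend names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    pending_cost: float = 0.0  # estimated cost of queries currently in flight
    healthy: bool = True


def estimate_cost(query: str) -> float:
    """Crude assumed proxy for query complexity: longer prompts cost more."""
    return max(1.0, len(query.split()) / 10)


def route(query: str, backends: list[Backend]) -> Backend:
    """Pick the healthy backend with the least pending work."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backends available")
    target = min(candidates, key=lambda b: b.pending_cost)
    target.pending_cost += estimate_cost(query)
    return target


backends = [Backend("gpu-a"), Backend("gpu-b"), Backend("gpu-c", healthy=False)]
long_query = " ".join(["token"] * 50)  # expensive request
order = [
    route(long_query, backends).name,
    route("what is a dataframe", backends).name,
    route("explain this error", backends).name,
]
```

Round-robin over the two healthy backends would send the third query back to `gpu-a`, which is still busy with the long request. The load-aware router keeps both short queries on `gpu-b`. A real router would also decrement `pending_cost` when a request completes.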

Database scaling becomes critical as your notebook content grows. Partition vector databases by topic, date ranges, or user groups. This approach improves query performance and enables targeted scaling of high-demand content areas.

Consider hybrid deployment architectures combining cloud and edge computing. Deploy lightweight models at the edge for simple queries while routing complex requests to powerful cloud instances. This approach reduces latency and controls costs effectively.

Auto-scaling policies need fine-tuning for LLM workloads. Traditional CPU-based scaling doesn’t work well since GPU utilization and memory pressure are better indicators of resource needs. Implement custom metrics that trigger scaling based on queue depth, average response time, and model-specific resource usage.
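A scaling decision driven by these custom metrics might look like the following sketch. It applies a proportional rule (current replicas times the ratio of observed to target value, taking the largest ratio across metrics), which is the same general shape as the algorithm documented for the Kubernetes Horizontal Pod Autoscaler. The `Snapshot` fields, the target values, and the replica limits are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Snapshot:
    queue_depth: int        # requests waiting across the service
    avg_response_s: float   # average end-to-end response time in seconds
    gpu_utilization: float  # fraction between 0.0 and 1.0


def desired_replicas(
    current: int,
    snap: Snapshot,
    target_queue_per_replica: int = 4,   # assumed target
    target_response_s: float = 3.0,      # assumed target
    target_gpu: float = 0.7,             # assumed target
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Scale proportionally to whichever metric is furthest above its target."""
    current = max(current, 1)  # guard against division by zero
    ratios = [
        snap.queue_depth / (current * target_queue_per_replica),
        snap.avg_response_s / target_response_s,
        snap.gpu_utilization / target_gpu,
    ]
    wanted = math.ceil(current * max(ratios))
    return max(min_replicas, min(max_replicas, wanted))


scale_up = desired_replicas(2, Snapshot(queue_depth=24, avg_response_s=2.0, gpu_utilization=0.5))
scale_down = desired_replicas(4, Snapshot(queue_depth=2, avg_response_s=1.0, gpu_utilization=0.2))
```

With these assumed targets, a deep queue drives the first case from 2 to 6 replicas even though GPU utilization looks moderate, while the idle second case shrinks from 4 to 2.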

Container orchestration platforms like Kubernetes excel at managing LLM workloads. Use resource quotas, quality-of-service classes, and pod disruption budgets to ensure stable performance during scaling events.

Creating your own notebook LLM brings together several powerful technologies that work in harmony. RAG helps your system pull in relevant information when needed, while smart memory design keeps track of important conversations and data over time. Context engineering makes sure your AI understands what’s happening in each interaction, and a solid architecture ties everything together for reliable performance.

The real magic happens when you combine these pieces thoughtfully. Start with a simple RAG setup, add memory features gradually, and focus on getting your context handling right before scaling up. Your notebook LLM will become more useful as you refine each component, creating an AI assistant that truly understands your needs and grows smarter with every conversation.