Machine learning teams lose a lot of time managing complex workflows by hand. AWS Step Functions addresses this by orchestrating your ML pipeline end to end, from data ingestion to model deployment.

This practical guide is designed for data engineers, ML engineers, and DevOps professionals who want to build reliable, scalable machine learning workflows using AWS serverless orchestration. You’ll learn how to replace fragile scripts and manual processes with robust, visual workflows that handle failures gracefully and scale automatically.

We’ll start by walking through setting up your first ML pipeline with Step Functions, showing you how to connect AWS services like Lambda, SageMaker, and S3 into a seamless workflow. You’ll see exactly how to automate data processing and feature engineering tasks that typically require constant babysitting.

Next, we’ll dive into model training orchestration and ML deployment pipeline creation. You’ll discover how to coordinate distributed training jobs, handle model versioning, and automate deployment across different environments without writing complex orchestration code.

Finally, we’ll explore advanced patterns for real-time ML inference and batch processing workflows. You’ll master Step Functions best practices for production environments, including proper error handling, cost optimization, and ML pipeline monitoring strategies that keep your workflows running smoothly at scale.

Understanding AWS Step Functions for Machine Learning Workflows

Core concepts and visual workflow orchestration benefits

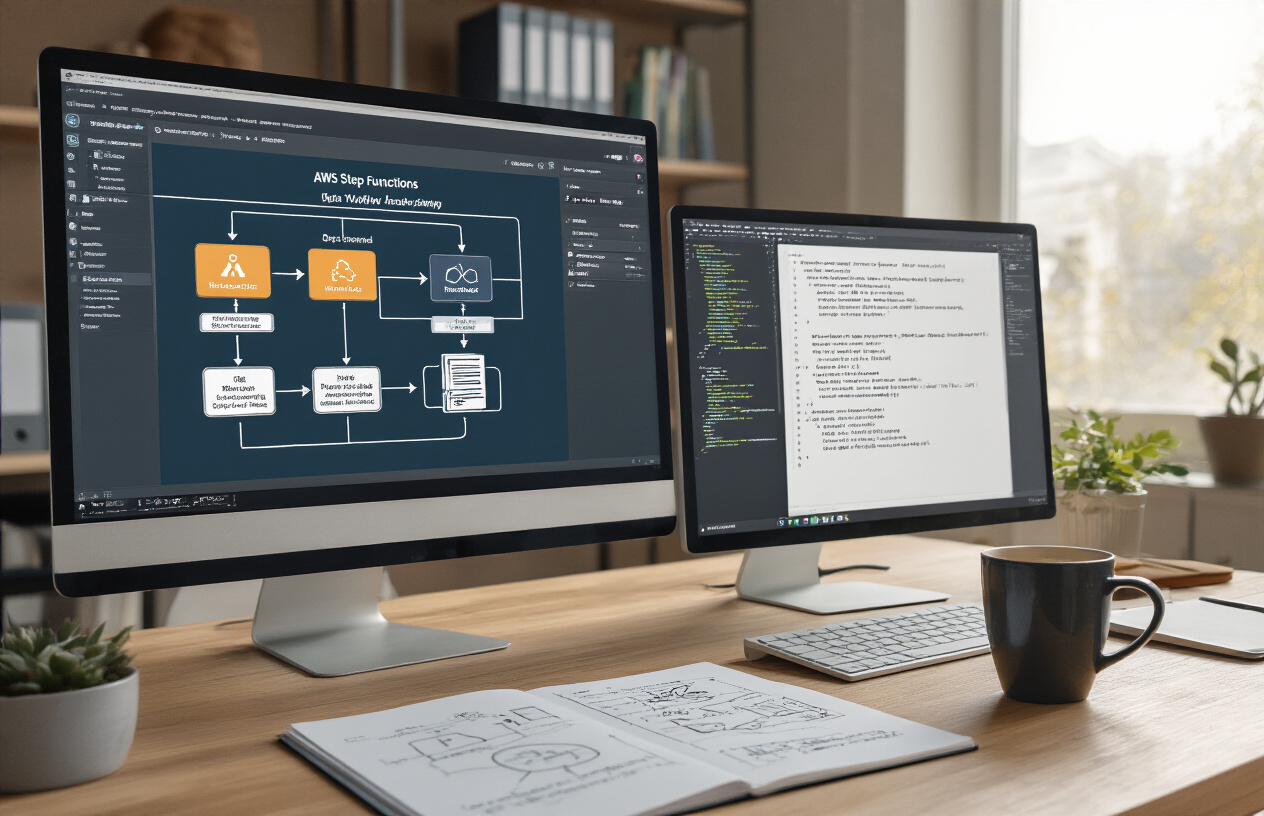

AWS Step Functions transforms how engineers approach machine learning workflows by providing a visual, state machine-based orchestration platform. Think of it as a conductor for your ML orchestra, coordinating every component from data ingestion to model deployment with precision.

The service uses Amazon States Language (ASL) to define workflows as JSON documents, creating clear, maintainable pipelines that anyone on your team can understand at a glance. Each state in your workflow represents a specific task – whether that’s calling a Lambda function for data preprocessing, triggering a SageMaker training job, or sending notifications when processes complete.

The visual workflow designer makes debugging and optimization straightforward. You can see exactly where bottlenecks occur, which steps consume the most time, and how data flows between different services. This visibility proves invaluable when troubleshooting production ML pipelines or explaining system architecture to stakeholders.

Step Functions supports error management through built-in retry logic, catch blocks, and failure handling patterns. Once you configure these on a state, your ML workflows can recover automatically from transient failures or route to alternative processing paths when needed.

Serverless architecture advantages for ML pipelines

The serverless nature of AWS Step Functions eliminates infrastructure management overhead completely. You don’t provision servers, manage scaling, or worry about capacity planning for your ML workflow orchestration layer.

Your pipelines scale automatically based on demand. Whether processing a single data sample or orchestrating hundreds of parallel training jobs, Step Functions adjusts seamlessly without manual intervention. This elasticity proves especially valuable for ML workloads with unpredictable or bursty patterns.

The pay-per-execution model aligns costs directly with usage. Standard Workflows are billed per state transition and Express Workflows are billed by request count and duration, so you pay nothing for idle time or reserved capacity. For ML teams running intermittent batch processing jobs or experimental workflows, this creates significant cost savings compared to maintaining dedicated orchestration infrastructure.

Serverless architecture also reduces operational complexity. Your team focuses on workflow logic rather than system administration, patching, or monitoring underlying infrastructure health. Updates and improvements happen transparently without requiring maintenance windows or service disruptions.

Integration capabilities with AWS ML services

Step Functions integrates natively with the entire AWS machine learning ecosystem, creating seamless data pipelines without complex API management or custom integration code.

Direct Service Integration:

- SageMaker: Launch training jobs, create endpoints, run batch transform operations

- Lambda: Execute custom preprocessing, feature engineering, or validation logic

- Glue: Trigger ETL jobs for large-scale data preparation

- Batch: Handle compute-intensive processing tasks with optimal resource allocation

- EMR: Orchestrate big data analytics and distributed ML workloads

Data Movement and Storage:

- S3: Manage data lifecycle, trigger workflows on file uploads, organize model artifacts

- DynamoDB: Store metadata, experiment tracking, and pipeline state information

- RDS/Aurora: Access structured training data or store model performance metrics

Monitoring and Notifications:

- CloudWatch: Track pipeline metrics and set up alerting rules

- SNS: Send notifications when workflows complete or encounter failures

- EventBridge: React to AWS service events and trigger dependent workflows

This tight integration means your ML workflows use native AWS service APIs rather than fragile custom connectors or third-party tools that might break during updates.

Cost optimization through pay-per-use execution model

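Step Functions’ pricing structure aligns well with ML workflow patterns, where processing happens in discrete bursts rather than continuous operation. With Standard Workflows you pay per state transition, roughly $0.025 per 1,000 state transitions (the exact rate varies by region). Express Workflows are priced differently, by number of requests and by duration.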

For typical ML pipelines, this translates to remarkably low orchestration costs. A comprehensive workflow with data validation, preprocessing, training, evaluation, and deployment might involve 50-100 state transitions, costing less than $0.01 per execution.
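The arithmetic behind that estimate can be checked with a few lines of Python. This is a minimal sketch that assumes the Standard Workflows rate quoted above and ignores any free tier; the constant and function name are illustrative, not part of any AWS SDK.

```python
# Assumed Standard Workflows list price from the text above;
# check the current pricing page for your region.
PRICE_PER_1000_TRANSITIONS_USD = 0.025


def orchestration_cost(transitions_per_execution, executions=1):
    """Estimated Standard Workflow charge in USD, ignoring any free tier."""
    total_transitions = transitions_per_execution * executions
    return total_transitions * PRICE_PER_1000_TRANSITIONS_USD / 1000


if __name__ == "__main__":
    # One 100-transition pipeline run, then a month of daily runs.
    print(f"{orchestration_cost(100):.4f}")
    print(f"{orchestration_cost(100, executions=30):.4f}")
```

Note that this covers orchestration only. The Lambda, SageMaker, or Batch work that each state triggers is billed separately by those services.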

Cost Optimization Strategies:

- Parallel Processing: Execute independent tasks simultaneously to reduce total workflow time

- Conditional Logic: Skip unnecessary processing steps based on data characteristics or business rules

- Batch Operations: Group multiple items together to reduce the number of state transitions

- Smart Checkpointing: Resume workflows from failure points rather than restarting completely, for example by redriving a failed Standard Workflow execution

The model becomes even more cost-effective when compared to maintaining dedicated orchestration infrastructure. Traditional workflow engines require persistent compute resources, storage, and operational overhead regardless of actual usage patterns.

Long-running Standard Workflows don’t incur additional state transition charges while waiting for external processes like SageMaker training jobs to complete. You only pay for the orchestration logic, not the idle time between steps.

Setting Up Your First ML Pipeline with Step Functions

Creating state machines for data preprocessing workflows

Building your first AWS Step Functions machine learning workflow starts with creating a state machine that handles data preprocessing tasks. The state machine acts as your workflow orchestrator, coordinating different AWS services to transform raw data into ML-ready formats.

When designing your preprocessing workflow, think about breaking down complex data transformations into discrete, manageable steps. A typical data preprocessing state machine might include states for data validation, cleaning, feature extraction, and data splitting. Each state represents a specific processing task that can be executed independently.

Start by identifying your data sources and processing requirements. You might pull data from S3 buckets, RDS databases, or streaming sources like Kinesis. Your state machine can trigger Lambda functions for lightweight transformations, invoke AWS Batch jobs for compute-intensive processing, or call SageMaker Processing jobs for specialized ML preprocessing tasks.

Consider implementing error handling and retry logic early in your design. Data preprocessing workflows often encounter issues like missing files, malformed data, or temporary service unavailability. Step Functions provides built-in retry mechanisms and catch blocks that can gracefully handle these scenarios without breaking your entire pipeline.

The visual workflow editor in the AWS console makes it easy to design and visualize your preprocessing pipeline. You can drag and drop different state types, configure transitions, and test your workflow before deploying it to production.

Configuring IAM roles and permissions for ML services

Setting up proper IAM roles and permissions forms the security backbone of your ML pipeline automation. Step Functions needs specific permissions to orchestrate various AWS services, and each service in your workflow requires appropriate access rights to function correctly.

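Create a dedicated execution role for your Step Functions state machine. Its trust policy must allow states.amazonaws.com to assume it, and its permissions must cover the services your workflow invokes, such as Lambda, SageMaker, Batch, or EMR. Note that the AWSStepFunctionsFullAccess managed policy controls who can manage Step Functions itself and does not let a state machine call other services, so attach service-specific permissions (for example lambda:InvokeFunction on your functions) and narrow them to the resources your use case needs.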
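As a rough illustration, the two policy documents for such an execution role can be assembled as plain Python dictionaries. This is a sketch under stated assumptions: the function names are hypothetical, the action list covers only a Lambda call plus a SageMaker training job, and integrations that wait for job completion typically need further permissions that are not shown here.

```python
import json


def trust_policy():
    """Trust policy that lets the Step Functions service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "states.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def execution_policy(lambda_arns, sagemaker_role_arn):
    """Narrow permissions for a workflow that calls Lambda and SageMaker."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Invoke only the functions this workflow uses.
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": list(lambda_arns),
            },
            {   # Start and track training jobs (scope Resource down if you can).
                "Effect": "Allow",
                "Action": [
                    "sagemaker:CreateTrainingJob",
                    "sagemaker:DescribeTrainingJob",
                    "sagemaker:StopTrainingJob",
                ],
                "Resource": "*",
            },
            {   # Allow handing the SageMaker execution role to SageMaker only.
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": sagemaker_role_arn,
                "Condition": {
                    "StringEquals": {"iam:PassedToService": "sagemaker.amazonaws.com"}
                },
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(trust_policy(), indent=2))
```

Both dictionaries serialize directly with json.dumps, so they can be passed to whichever tool you use to create the role.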

Your Lambda functions require their own execution roles with permissions to access data sources and write to destinations. If you’re processing data in S3, your Lambda role needs s3:GetObject and s3:PutObject permissions for the relevant buckets. For database connections, include the necessary RDS or DynamoDB permissions.

SageMaker services have specific permission requirements. SageMaker Processing jobs need access to input and output S3 locations, while training jobs require additional permissions for model artifacts and container images in ECR. The SageMaker execution role should include the AmazonSageMakerFullAccess managed policy as a starting point.

Set up resource-based policies when needed. S3 bucket policies can restrict access to specific roles, adding an extra layer of security. Use IAM policy conditions to limit access based on request context, such as source IP addresses or time of day.

Document your permission structure clearly. Complex ML workflows often involve multiple roles and services, making it easy to lose track of who can access what. Regular permission audits help maintain security and compliance.

Defining JSON-based state definitions for your pipeline

Step Functions uses Amazon States Language (ASL) to define workflow logic through JSON documents. Writing effective state definitions requires understanding the different state types and how they connect to build robust ML pipeline automation.

Start with a simple Pass state to test your basic workflow structure. Pass states don’t perform any work but help you understand the flow and data transformation patterns. Your initial definition might look like a sequence of Pass states representing each major step in your preprocessing pipeline.

Task states form the core of your ML workflow, invoking AWS services to perform actual work. Configure Task states to call Lambda functions, start SageMaker jobs, or trigger Batch processing. Each Task state includes a Resource field specifying the service ARN and optional parameters for service-specific configuration.

    {
      "DataValidation": {
        "Type": "Task",
        "Resource": "arn:aws:lambda:region:account:function:validate-data",
        "Parameters": {
          "InputPath.$": "$.input_data_location",
          "ValidationRules.$": "$.validation_config"
        },
        "Next": "DataCleaning"
      }
    }

Use Choice states to implement conditional logic in your pipeline. You might check data quality scores, file sizes, or processing results to determine the next steps. Choice states evaluate input data against defined rules and route execution accordingly.

Parallel states allow simultaneous execution of independent processing tasks. Feature engineering often involves multiple transformations that can run concurrently, significantly reducing your overall pipeline execution time.

Map states process arrays of data items, perfect for batch processing scenarios where you need to apply the same transformation to multiple datasets. The Map state runs iterations concurrently up to the MaxConcurrency you set, and you can attach Retry and Catch to the Map state itself or to the steps inside it.

Include proper error handling with Retry and Catch blocks. Retry blocks automatically retry failed states with configurable backoff strategies, while Catch blocks redirect execution to error handling states when retries are exhausted.

Test your JSON definitions before deploying. Workflow Studio in the Step Functions console validates your ASL as you edit, and testing individual states with sample input helps identify potential issues in your workflow logic.
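A lightweight local check can also catch the most common wiring mistakes before a definition ever reaches the console. The sketch below is not an ASL validator: check_definition is a hypothetical helper that only inspects top-level states (it does not descend into Parallel branches or Map processors), and the two-state pipeline of Pass states is illustrative.

```python
def check_definition(definition):
    """Return a list of structural problems; an empty list means none found."""
    problems = []
    states = definition.get("States", {})
    if definition.get("StartAt") not in states:
        problems.append("StartAt does not name a defined state")
    for name, state in states.items():
        # Collect every transition target this state can reach.
        targets = [state.get("Next"), state.get("Default")]
        targets += [choice.get("Next") for choice in state.get("Choices", [])]
        targets += [catcher.get("Next") for catcher in state.get("Catch", [])]
        for target in targets:
            if target is not None and target not in states:
                problems.append(f"{name}: transition to undefined state {target!r}")
        terminal = state.get("End") or state.get("Type") in ("Succeed", "Fail")
        if not terminal and "Next" not in state and state.get("Type") != "Choice":
            problems.append(f"{name}: needs Next or End")
    return problems


# Skeleton pipeline built from Pass states, as suggested above.
pipeline = {
    "StartAt": "DataValidation",
    "States": {
        "DataValidation": {"Type": "Pass", "Next": "DataCleaning"},
        "DataCleaning": {"Type": "Pass", "End": True},
    },
}

if __name__ == "__main__":
    print(check_definition(pipeline))
```

Running a check like this in CI gives fast feedback on renamed or deleted states, which are easy to miss in a long JSON document.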

Data Processing and Feature Engineering Automation

Orchestrating ETL processes with parallel execution

Step Functions excels at breaking down complex ETL workflows into smaller, manageable tasks that run simultaneously. Instead of processing data sequentially through each transformation step, you can design your state machine to execute multiple data processing tasks in parallel, dramatically reducing overall processing time.

The Parallel state becomes your best friend for data processing automation. You can split incoming data streams across different Lambda functions or Glue jobs, each handling specific transformation tasks. For example, while one branch cleans customer data, another can simultaneously process transaction records, and a third handles product catalog updates.

    {
      "DataProcessing": {
        "Type": "Parallel",
        "Branches": [
          {
            "StartAt": "ProcessCustomerData",
            "States": { ... }
          },
          {
            "StartAt": "ProcessTransactionData",
            "States": { ... }
          }
        ]
      }
    }

This parallel execution pattern works particularly well with feature engineering automation, where you need to calculate different feature sets simultaneously. You can process numerical features, categorical encodings, and text embeddings in parallel branches, then merge results before feeding them into your ML model.
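A Parallel state returns an array with one entry per branch, in branch order, so the merge step usually starts by combining that array. The following is a minimal sketch of a merge Lambda, assuming each branch returns a flat dictionary of feature values; the function names are hypothetical.

```python
def merge_branch_outputs(branch_outputs):
    """Combine the per-branch feature dicts produced by a Parallel state."""
    merged = {}
    for branch in branch_outputs:
        # Refuse to silently overwrite a feature computed by another branch.
        overlap = merged.keys() & branch.keys()
        if overlap:
            raise ValueError(f"duplicate feature names: {sorted(overlap)}")
        merged.update(branch)
    return merged


def lambda_handler(event, context):
    # The Parallel state's output array arrives as this state's input.
    return merge_branch_outputs(event)


if __name__ == "__main__":
    print(lambda_handler([{"age_scaled": 0.4}, {"country_onehot": [0, 1]}], None))
```

Failing loudly on duplicate names is a design choice: it surfaces branches that accidentally compute the same feature instead of letting the last one win.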

Implementing error handling for data quality checks

Data quality issues can derail entire machine learning workflows if not caught early. Step Functions provides robust error handling mechanisms that let you build resilient pipelines with comprehensive data validation checkpoints.

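The Retry and Catch fields on a Task state help you handle failures gracefully. When a data validation Lambda function hits a transient failure such as throttling or a service error, Retry can re-run it automatically with exponential backoff. When the function reports bad data by raising a DataQualityError, retrying will not help, so Catch routes the workflow to a data cleaning branch or an alerting step. Because States.TaskFailed matches almost every error, including custom ones, the retrier here lists specific Lambda errors instead.

    {
      "ValidateData": {
        "Type": "Task",
        "Resource": "arn:aws:lambda:...:validateDataQuality",
        "Retry": [
          {
            "ErrorEquals": [
              "Lambda.ServiceException",
              "Lambda.AWSLambdaException",
              "Lambda.SdkClientException",
              "Lambda.TooManyRequestsException"
            ],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0
          }
        ],
        "Catch": [
          {
            "ErrorEquals": ["DataQualityError"],
            "Next": "HandleBadData"
          }
        ]
      }
    }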

You can implement multi-level validation checks where each stage validates different aspects of your data. Schema validation runs first, followed by business rule checks, then statistical outlier detection. This layered approach catches different types of data issues at appropriate stages.
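The layered checks can live in a single Lambda function that raises a custom exception. For Python functions, Step Functions reports the exception class name as the error name, which is what a Catch on DataQualityError matches. This is a sketch only: the required fields, the business rule, and the outlier ceiling are hypothetical placeholders.

```python
class DataQualityError(Exception):
    """Raised for bad data; the class name becomes the Step Functions error name."""


REQUIRED_FIELDS = {"customer_id", "amount"}   # hypothetical schema
OUTLIER_CEILING = 1_000_000                   # hypothetical threshold


def validate_record(record):
    # Stage 1: schema validation.
    missing = REQUIRED_FIELDS - record.keys()
    if missing:
        raise DataQualityError(f"missing fields: {sorted(missing)}")
    # Stage 2: business rule check.
    if record["amount"] < 0:
        raise DataQualityError("amount must be non-negative")
    # Stage 3: crude outlier detection against a fixed ceiling.
    if record["amount"] > OUTLIER_CEILING:
        raise DataQualityError("amount exceeds the outlier threshold")


def lambda_handler(event, context):
    for record in event["records"]:
        validate_record(record)
    return {"status": "valid", "record_count": len(event["records"])}


if __name__ == "__main__":
    print(lambda_handler({"records": [{"customer_id": 1, "amount": 25.0}]}, None))
```

Ordering the stages from cheapest to most expensive means malformed records are rejected before any statistical work is done.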

Managing large dataset transformations efficiently

Processing massive datasets requires careful orchestration to avoid timeouts and memory issues. Step Functions helps you break large datasets into smaller chunks and process them systematically through your pipeline.

The Map state is perfect for processing large datasets in batches. You can split a huge dataset stored in S3 into smaller partitions, then process each partition independently. Pass S3 locations between states rather than the data itself, because state input and output are limited to 256 KB. This approach keeps individual Lambda functions within memory limits while processing terabytes of data efficiently.
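One way to picture the partitioning is a helper that groups S3 keys into batches, paired with a Map state that runs one task per batch. This is a sketch under assumptions: the $.batches input field, the ProcessBatch state name, and both function names are illustrative.

```python
def chunk(items, size):
    """Split a list into consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def map_state(processor_arn, max_concurrency=10):
    """Inline Map state that runs one Lambda task per batch in $.batches."""
    return {
        "Type": "Map",
        "ItemsPath": "$.batches",
        "MaxConcurrency": max_concurrency,
        "ItemProcessor": {
            "ProcessorConfig": {"Mode": "INLINE"},
            "StartAt": "ProcessBatch",
            "States": {
                "ProcessBatch": {"Type": "Task", "Resource": processor_arn, "End": True}
            },
        },
        "End": True,
    }


if __name__ == "__main__":
    keys = [f"raw/part-{i:04d}.parquet" for i in range(5)]
    print(chunk(keys, 2))
```

Each batch holds only object keys, so the state payload stays small no matter how large the underlying files are.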

For datasets that exceed Lambda’s processing capabilities, Step Functions can orchestrate AWS Batch jobs or EMR clusters. You can spin up compute resources on-demand, process your data, then tear down resources to control costs.

| Processing Option | Best For | Key Benefits |
|---|---|---|
| Lambda Functions | Tasks under 15 minutes, moderate memory | Fast startup, serverless scaling |
| AWS Batch | Long-running jobs, high memory | Custom containers, flexible compute |
| EMR Clusters | Big data frameworks | Spark/Hadoop ecosystem |

Step Functions lets you chain these different compute services based on your data processing needs. Start with Lambda for quick transformations, escalate to Batch for heavy lifting, then return to Lambda for final formatting steps.

Connecting to S3, Redshift, and other data sources

Step Functions integrates seamlessly with AWS data services, creating a unified orchestration layer for your data pipeline. The service includes native integrations that eliminate the need for wrapper Lambda functions in many cases.

For S3 operations, Step Functions can directly trigger data processing jobs when new files arrive. You can set up EventBridge rules that start your workflow whenever data lands in specific S3 buckets. The workflow can then read file metadata, determine processing requirements, and route data through appropriate transformation steps.

Redshift integration works through the Data API, allowing Step Functions to execute SQL queries directly against your data warehouse. You can run complex analytical queries, export results to S3, then trigger downstream ML training jobs, all orchestrated through a single Step Functions workflow. Keep in mind that the Data API is asynchronous: executeStatement returns a statement ID right away, so the workflow needs to poll describeStatement before it uses the results.

    {
      "QueryRedshift": {
        "Type": "Task",
        "Resource": "arn:aws:states:::aws-sdk:redshiftdata:executeStatement",
        "Parameters": {
          "ClusterIdentifier": "my-cluster",
          "Database": "analytics",
          "Sql": "SELECT * FROM features WHERE created_date > current_date - 1"
        }
      }
    }
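Because the Redshift Data API runs statements asynchronously, a query step is usually followed by a wait-and-check loop. The sketch below builds such a loop as a Python dictionary of states. The state names, the 10-second wait, and the RedshiftQueryFailed error name are assumptions made for illustration.

```python
import json


def redshift_query_states(sql, cluster="my-cluster", database="analytics"):
    """States that submit a query, then poll until it finishes or fails."""
    return {
        "SubmitQuery": {
            "Type": "Task",
            "Resource": "arn:aws:states:::aws-sdk:redshiftdata:executeStatement",
            "Parameters": {"ClusterIdentifier": cluster, "Database": database, "Sql": sql},
            "Next": "WaitForQuery",
        },
        "WaitForQuery": {"Type": "Wait", "Seconds": 10, "Next": "CheckQuery"},
        "CheckQuery": {
            "Type": "Task",
            "Resource": "arn:aws:states:::aws-sdk:redshiftdata:describeStatement",
            # Both executeStatement and describeStatement return the statement Id.
            "Parameters": {"Id.$": "$.Id"},
            "Next": "QueryFinished?",
        },
        "QueryFinished?": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.Status", "StringEquals": "FINISHED", "Next": "QuerySucceeded"},
                {"Variable": "$.Status", "StringEquals": "FAILED", "Next": "QueryFailed"},
                {"Variable": "$.Status", "StringEquals": "ABORTED", "Next": "QueryFailed"},
            ],
            "Default": "WaitForQuery",   # still running: wait and check again
        },
        "QuerySucceeded": {"Type": "Succeed"},
        "QueryFailed": {"Type": "Fail", "Error": "RedshiftQueryFailed"},
    }


if __name__ == "__main__":
    definition = {"StartAt": "SubmitQuery", "States": redshift_query_states("SELECT 1")}
    print(json.dumps(definition, indent=2))
```

In a real pipeline the Succeed state would be replaced by the next processing step, such as an export to S3 or a training job.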

Other data sources like RDS, DynamoDB, and external APIs can be accessed through Lambda functions or direct service integrations. The key is designing your workflow to handle different data source characteristics – batch exports from databases, streaming data from Kinesis, or API calls with rate limiting requirements.

Cross-service error handling becomes critical when dealing with multiple data sources. Network timeouts, credential issues, or service limits can interrupt your workflow. Implement circuit breaker patterns where persistent failures with one data source don’t block processing from other sources.

Model Training and Deployment Orchestration

Automating SageMaker Training Jobs with Step Functions

Step Functions provides a serverless way to orchestrate SageMaker training jobs. By creating state machines, you can trigger training jobs automatically based on data availability, schedule training runs, or chain multiple experiments together.

The integration works through the SageMaker service integration, where you define training job parameters directly in your state machine definition. Your Step Functions workflow can automatically handle job submission, monitor training progress, and react to completion or failure states. This eliminates manual intervention and makes training runs reproducible.

Here’s how to configure a basic training job step. The .sync suffix on the resource ARN tells Step Functions to wait for the job to finish before moving to the next state:

    {
      "Type": "Task",
      "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
      "Parameters": {
        "TrainingJobName.$": "$.training_job_name",
        "AlgorithmSpecification": {
          "TrainingImage": "382416733822.dkr.ecr.us-east-1.amazonaws.com/xgboost:latest",
          "TrainingInputMode": "File"
        },
        "InputDataConfig": [
          {
            "ChannelName": "training",
            "DataSource": {
              "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri.$": "$.training_data_path"
              }
            }
          }
        ],
        "OutputDataConfig": {
          "S3OutputPath.$": "$.output_path"
        },
        "ResourceConfig": {
          "InstanceType": "ml.m4.xlarge",
          "InstanceCount": 1,
          "VolumeSizeInGB": 30
        },
        "RoleArn": "arn:aws:iam::123456789012:role/SageMakerRole",
        "StoppingCondition": {
          "MaxRuntimeInSeconds": 3600
        }
      }
    }
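The step above reads the job name from $.training_job_name, and SageMaker requires each training job name to be unique within an account and region, at most 63 characters, and limited to alphanumerics and hyphens. A small helper can build the name before the execution starts. This is a sketch: the helper name and the timestamp format are assumptions, and two jobs started within the same second would still collide.

```python
import re
from datetime import datetime, timezone

# Pattern SageMaker applies to training job names (at most 63 characters).
NAME_PATTERN = re.compile(r"^[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}$")


def training_job_name(prefix, now=None):
    """Build a timestamped job name that satisfies SageMaker's naming rules."""
    now = now or datetime.now(timezone.utc)
    # Replace anything outside [a-zA-Z0-9] with hyphens and trim stray hyphens.
    cleaned = re.sub(r"[^a-zA-Z0-9]+", "-", prefix).strip("-")
    suffix = now.strftime("%Y%m%d-%H%M%S")
    name = f"{cleaned[:63 - len(suffix) - 1]}-{suffix}"
    if not NAME_PATTERN.match(name):
        raise ValueError(f"invalid training job name: {name!r}")
    return name


if __name__ == "__main__":
    # Pass the result as "training_job_name" in the execution input.
    print(training_job_name("xgboost churn model"))
```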

Managing Hyperparameter Tuning Workflows

Hyperparameter tuning often requires running multiple training jobs with different parameter combinations. Step Functions excels at managing these complex workflows by orchestrating SageMaker hyperparameter tuning jobs and handling the results systematically.

You can create workflows that automatically start tuning jobs when new data arrives, monitor progress across multiple experiments, and select the best performing models based on your defined metrics. The service integration with SageMaker makes this seamless.

A typical hyperparameter tuning workflow includes these components:

- Parameter space definition: Define ranges and categories for hyperparameters

- Objective metric specification: Set the metric to optimize (accuracy, F1-score, etc.)

- Resource management: Control instance types and job concurrency

- Results processing: Extract best parameters and model artifacts
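The first three components map onto the HyperParameterTuningJobConfig block of a SageMaker tuning job request, which a Task state would pass in its Parameters. Here is a minimal sketch of that block as a Python dictionary. The hyperparameter names (eta, max_depth), their ranges, and the function name are illustrative assumptions, not recommendations.

```python
def tuning_job_config(metric_name, max_jobs=20, max_parallel=2):
    """Build the HyperParameterTuningJobConfig block for a tuning Task state."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        "Strategy": "Bayesian",
        # Objective metric specification.
        "HyperParameterTuningJobObjective": {"Type": "Maximize", "MetricName": metric_name},
        # Resource management: total jobs and how many run at once.
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        # Parameter space definition; bounds are strings in the SageMaker API.
        "ParameterRanges": {
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.05", "MaxValue": "0.5"}
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10"}
            ],
        },
    }


if __name__ == "__main__":
    print(tuning_job_config("validation:auc"))
```

Results processing then typically happens in a later state that reads the best training job from the tuning job's description.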

Step Functions can also implement custom tuning strategies beyond SageMaker’s built-in options, which already include Bayesian optimization, random search, grid search, and Hyperband. You might create a workflow that runs grid search with custom logic, or multi-stage tuning where early results influence later parameter choices.

Implementing Model Validation and Testing Stages

Model validation becomes critical in production ML workflow management. Step Functions enables you to build comprehensive validation pipelines that test models across multiple dimensions before deployment.

Your validation workflow can include:

- Data quality checks: Validate input data distribution and quality

- Model performance evaluation: Test against holdout datasets and benchmarks

- Bias and fairness testing: Check for discriminatory patterns

- Integration testing: Verify API endpoints and response formats

- A/B testing preparation: Set up experiments for production comparison

Each validation stage can have its own pass/fail criteria, and Step Functions can route the workflow based on these results. Failed validations can trigger notifications, rollback procedures, or alternative deployment paths.

    {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.validation_score",
          "NumericGreaterThan": 0.85,
          "Next": "DeployToProduction"
        },
        {
          "Variable": "$.validation_score",
          "NumericGreaterThan": 0.75,
          "Next": "DeployToStaging"
        }
      ],
      "Default": "RejectModel"
    }
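Choice rules are evaluated in order and the first match wins, which is why the stricter 0.85 threshold has to come before 0.75. The routing logic of the definition above can be mirrored in a few lines of Python to sanity-check boundary values; route_model is a hypothetical helper for illustration only.

```python
def route_model(validation_score):
    """Mirror the Choice state: rules are tested in order, first match wins."""
    rules = [(0.85, "DeployToProduction"), (0.75, "DeployToStaging")]
    for threshold, next_state in rules:
        if validation_score > threshold:   # NumericGreaterThan is a strict comparison
            return next_state
    return "RejectModel"                   # the Default branch


if __name__ == "__main__":
    for score in (0.90, 0.85, 0.80, 0.75):
        print(score, route_model(score))
```

Note the boundary behavior: a score of exactly 0.85 goes to staging and exactly 0.75 is rejected, because the comparison is strict.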

Streamlining Deployment to Multiple Environments

Modern ML systems require deployment across multiple environments – development, staging, and production. Step Functions simplifies this by orchestrating deployments in sequence or parallel, handling environment-specific configurations automatically.

Your deployment workflow can manage:

- Environment-specific endpoints: Different instance types and scaling policies per environment

- Configuration management: Environment variables, security settings, and resource allocations

- Rollback capabilities: Automatic reversion if deployment validation fails

- Blue-green deployments: Zero-downtime updates with traffic shifting

Parallel deployment branches allow you to deploy to non-production environments simultaneously while maintaining strict controls for production deployments. Each environment can have its own validation steps and approval gates.

Version Control Integration for Model Artifacts

Integrating version control for model artifacts ensures reproducibility and enables reliable rollbacks. Step Functions can automatically tag model versions, store metadata, and maintain lineage between training data, code versions, and resulting models.

Key version control practices include:

- Automated tagging: Generate version numbers based on training timestamps or Git commits

- Metadata storage: Track training parameters, data sources, and performance metrics

- Artifact management: Store models in S3 with consistent naming conventions

- Lineage tracking: Maintain connections between data versions and model outputs
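Automated tagging and consistent artifact naming can be as simple as two small functions. This sketch assumes a version format of UTC timestamp plus short commit hash and a models/<name>/<version>/ key layout; both are illustrative conventions rather than anything Step Functions or SageMaker prescribes.

```python
from datetime import datetime, timezone


def model_version(git_commit, trained_at=None):
    """Version tag combining the training time and a short commit hash."""
    trained_at = trained_at or datetime.now(timezone.utc)
    return f"{trained_at:%Y%m%dT%H%M%SZ}-{git_commit[:7]}"


def artifact_key(model_name, version):
    """S3 key following a hypothetical models/<name>/<version>/ layout."""
    return f"models/{model_name}/{version}/model.tar.gz"


if __name__ == "__main__":
    version = model_version("9fceb02d0ae598e95dc970b74767f19372d61af8")
    print(artifact_key("churn-classifier", version))
```

Because the timestamp comes first, versions sort chronologically in S3 listings, and the commit suffix links each artifact back to the code that produced it.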

Your Step Functions workflow can integrate with Git repositories to link model versions with code changes, ensuring complete traceability from source code modifications through model training to deployment.

Model registry integration allows you to maintain approved model versions, track deployment status across environments, and implement governance policies for model promotion between stages.

Real-time Inference and Batch Processing Workflows

Building Responsive Prediction Pipelines

Modern machine learning applications demand systems that can handle varying inference loads while maintaining consistent performance. AWS Step Functions excels at orchestrating these responsive prediction pipelines by integrating multiple AWS services into cohesive workflows.

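When building real-time ML inference pipelines, Step Functions acts as the traffic controller, routing prediction requests through different processing stages. You can configure your pipeline to preprocess incoming data, invoke multiple model versions, and aggregate results before returning predictions. In the definition here, the model step calls an existing endpoint through the SageMaker runtime; the function name, endpoint name, and $.body field are placeholders.

    {
      "Comment": "Real-time inference pipeline",
      "StartAt": "ValidateInput",
      "States": {
        "ValidateInput": {
          "Type": "Task",
          "Resource": "arn:aws:states:::lambda:invoke",
          "Parameters": {
            "FunctionName": "validate-input",
            "Payload.$": "$"
          },
          "OutputPath": "$.Payload",
          "Next": "InvokeModel"
        },
        "InvokeModel": {
          "Type": "Task",
          "Resource": "arn:aws:states:::aws-sdk:sagemakerruntime:invokeEndpoint",
          "Parameters": {
            "EndpointName": "my-endpoint",
            "ContentType": "application/json",
            "Body.$": "$.body"
          },
          "End": true
        }
      }
    }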

The key advantage lies in the pipeline’s ability to handle failures gracefully. When a model endpoint becomes unavailable, Step Functions can automatically retry requests or route them to backup models. This resilience ensures your prediction service maintains high availability even during peak traffic periods.

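Express Workflows work particularly well for real-time scenarios: they can be invoked synchronously, run for at most five minutes, and are built for high-volume, short-lived executions. Standard Workflows, which can run for up to a year, better suit complex inference tasks requiring longer processing times, and they are required for integration patterns that wait for a job (.sync) or for a callback.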

Scaling Inference Workloads Based on Demand

Serverless machine learning architectures shine when dealing with unpredictable traffic patterns. Step Functions itself scales with incoming request volume without manual intervention, while inference capacity is scaled by the services behind it, such as Lambda concurrency or SageMaker endpoint auto scaling.

The scaling strategy depends on your specific requirements:

| Scaling Method | Best For | Response Time | Cost Efficiency |
|---|---|---|---|
| Lambda Functions | Variable loads | < 1 second | High |
| SageMaker Endpoints | Consistent traffic | < 100ms | Medium |
| Batch Transform | Large datasets | Minutes to hours | Very High |

For dynamic scaling, a CloudWatch alarm can start a workflow (for example through EventBridge) that performs the scaling action. When request volume increases, the workflow can provision additional SageMaker endpoint instances or raise Lambda concurrency limits.

    # Example scaling configuration
    scaling_config = {
        "target_capacity": 10,
        "max_capacity": 100,
        "scale_in_cooldown": 300,
        "scale_out_cooldown": 60,
    }

Cold start optimization becomes critical in scaling scenarios. Pre-warm your Lambda functions and maintain minimum endpoint capacity to ensure consistent response times. Step Functions can orchestrate these warm-up procedures during low-traffic periods, preparing your infrastructure for upcoming demand spikes.

Batch processing lets you handle large inference jobs efficiently. When dealing with thousands of predictions, Step Functions can partition the workload across multiple parallel branches, process each batch independently, and aggregate results.

Implementing A/B Testing for Model Comparison

A/B testing reveals which model performs better in production environments with real user traffic. A Step Functions workflow can split incoming requests between different model versions systematically.

The workflow splits traffic using weighted routing rules. You can direct 90% of requests to your current production model while sending 10% to a new candidate model. A Choice state cannot weight traffic by itself, so an upstream step must assign each request to a group (the experiment_group field in the Choice rules), and the workflow should record performance metrics for both versions to provide the data needed for statistical comparison.

    {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.experiment_group",
          "StringEquals": "control",
          "Next": "ProductionModel"
        },
        {
          "Variable": "$.experiment_group",
          "StringEquals": "treatment",
          "Next": "CandidateModel"
        }
      ],
      "Default": "ProductionModel"
    }
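The experiment_group value that the Choice state reads has to be assigned before the routing step. One common approach, sketched here as an assumption rather than the only option, is to hash a stable identifier so the same user always lands in the same group. The function name and the use of a user ID are illustrative.

```python
import hashlib


def experiment_group(user_id, treatment_percent=10):
    """Deterministically assign a user to 'control' or 'treatment'."""
    digest = hashlib.sha256(str(user_id).encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100          # stable bucket in the range 0..99
    return "treatment" if bucket < treatment_percent else "control"


if __name__ == "__main__":
    groups = [experiment_group(f"user-{i}") for i in range(10_000)]
    print(groups.count("treatment") / len(groups))   # close to 0.10
```

Hash-based assignment needs no stored state and keeps each user's experience consistent across requests, which matters when you later compare engagement between the two groups.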

Multi-armed bandit algorithms work well within Step Functions workflows. The system can dynamically adjust traffic allocation based on real-time performance feedback. When the new model demonstrates superior accuracy or speed, the workflow gradually increases its traffic share.

Feature flagging integrates seamlessly with your A/B testing framework. Step Functions can evaluate feature flags to determine experiment participation, allowing you to control which users see which model version. This granular control helps you roll out new models safely to specific user segments.

Performance tracking requires comprehensive logging. Your Step Functions workflow should capture prediction latency, accuracy metrics, and user engagement data for both model versions. Integration with CloudWatch and custom metrics enables detailed analysis of experiment results.

The testing framework should handle edge cases gracefully. When model comparison reveals significant performance differences, Step Functions can automatically pause experiments and route all traffic to the better-performing model, protecting user experience while you investigate issues.

Monitoring, Debugging and Performance Optimization

CloudWatch Integration for Pipeline Visibility

CloudWatch serves as your command center for monitoring AWS Step Functions ML workflows, providing comprehensive visibility into every aspect of your pipeline execution. When you set up CloudWatch integration, you gain access to detailed execution logs, performance metrics, and real-time status updates that make tracking your machine learning workflows straightforward.

The integration automatically captures execution events, state transitions, and duration metrics for each step in your ML pipeline. You can create custom dashboards that display key performance indicators like processing times, success rates, and resource utilization across your entire workflow. This visibility becomes crucial when managing complex ML pipelines that might involve data preprocessing, model training, validation, and deployment stages.

When logging is enabled on a state machine, CloudWatch Logs capture detailed execution traces, optionally including input and output data for each state, making it easy to track data flow through your pipeline. You can set up log groups specifically for your Step Functions workflows and use CloudWatch Logs Insights to query and analyze execution patterns. For example, you might query for all failed executions in the past week or identify bottlenecks by analyzing state duration metrics.

Setting up alarms based on execution metrics helps you proactively monitor your ML workflows. You can create alarms for failed executions, long-running processes, or unusual patterns in your data processing pipeline. These alarms can trigger automated responses or notifications to your team when issues arise.
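As an example, an alarm on failed executions can be described with the parameters that CloudWatch's PutMetricAlarm operation accepts. The sketch below only builds the keyword arguments. The alarm name, the five-minute period, and the threshold of one failure are assumptions to adapt.

```python
def failed_execution_alarm(state_machine_arn, sns_topic_arn):
    """Keyword arguments for CloudWatch put_metric_alarm on failed executions."""
    return {
        "AlarmName": "ml-pipeline-executions-failed",   # hypothetical name
        "Namespace": "AWS/States",
        "MetricName": "ExecutionsFailed",
        "Dimensions": [{"Name": "StateMachineArn", "Value": state_machine_arn}],
        "Statistic": "Sum",
        "Period": 300,                 # evaluate in five-minute windows
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",   # no executions means no alarm
        "AlarmActions": [sns_topic_arn],
    }


if __name__ == "__main__":
    print(failed_execution_alarm(
        "arn:aws:states:us-east-1:123456789012:stateMachine:ml-pipeline",
        "arn:aws:sns:us-east-1:123456789012:ml-alerts",
    ))
```

With boto3 installed and credentials configured, the dictionary can be unpacked into `boto3.client("cloudwatch").put_metric_alarm(**kwargs)`.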

Error Handling Strategies and Retry Mechanisms

Robust error handling transforms fragile ML pipelines into resilient production systems. Step Functions provides several built-in error handling mechanisms that you can leverage to create fault-tolerant machine learning workflows.

Retry configurations allow you to automatically recover from transient failures common in ML workflows, such as temporary API throttling, network timeouts, or resource unavailability. You can configure retry attempts with exponential backoff and jitter to avoid overwhelming downstream services. For example, when calling SageMaker training jobs or Lambda functions for data preprocessing, temporary failures shouldn’t derail your entire pipeline.

    {
      "Retry": [
        {
          "ErrorEquals": ["Lambda.ServiceException", "Lambda.AWSLambdaException"],
          "IntervalSeconds": 2,
          "MaxAttempts": 3,
          "BackoffRate": 2.0,
          "MaxDelaySeconds": 30,
          "JitterStrategy": "FULL"
        }
      ]
    }
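To see what a retrier like this means in practice, the wait before each retry can be computed from IntervalSeconds, BackoffRate, and MaxAttempts. The helper below is a sketch that ignores jitter; retry_delays is a hypothetical name, and the optional cap mirrors the idea of a maximum delay.

```python
def retry_delays(interval_seconds=2, max_attempts=3, backoff_rate=2.0,
                 max_delay_seconds=None):
    """Delay in seconds before each retry attempt, without jitter."""
    delays = []
    for attempt in range(max_attempts):
        # Each retry waits backoff_rate times longer than the previous one.
        delay = interval_seconds * backoff_rate ** attempt
        if max_delay_seconds is not None:
            delay = min(delay, max_delay_seconds)
        delays.append(delay)
    return delays


if __name__ == "__main__":
    delays = retry_delays()
    print(delays, "total wait:", sum(delays))
```

With the values shown in the retrier, the task runs at most four times (the original attempt plus three retries) and waits 2, 4, and 8 seconds between attempts before the error falls through to any Catch block.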

Catch blocks provide a way to handle specific error types gracefully. In ML workflows, you might want different error handling strategies for different types of failures. Data validation errors might require immediate pipeline termination and human intervention, while resource allocation failures might trigger alternative processing paths or resource scaling.

The Choice state can implement conditional error handling based on the type of error or the current state of your workflow. You can route failed training jobs to alternative model architectures or switch to batch processing when real-time inference encounters capacity issues. This approach ensures your ML pipelines remain operational even when individual components fail.
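The Catch and Choice ideas above can be combined as in the following sketch, which builds an Amazon States Language definition as a Python dictionary. A single catcher stores the error under `$.error`, and a Choice state routes on `$.error.Error`. The Lambda function name, the error names, and the state names are all hypothetical.

```python
import json

# Hypothetical error names raised by a hypothetical Lambda function.
DATA_ERROR = "DataValidationError"
CAPACITY_ERROR = "ResourceUnavailableError"

states = {
    "TrainModel": {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": "start-training", "Payload.$": "$"},
        # Catch every error and keep it next to the original input.
        "Catch": [
            {"ErrorEquals": ["States.ALL"], "ResultPath": "$.error", "Next": "RouteError"}
        ],
        "Next": "Done",
    },
    "RouteError": {
        "Type": "Choice",
        "Choices": [
            {"Variable": "$.error.Error", "StringEquals": DATA_ERROR,
             "Next": "StopForReview"},
            {"Variable": "$.error.Error", "StringEquals": CAPACITY_ERROR,
             "Next": "FallbackTraining"},
        ],
        "Default": "StopForReview",
    },
    # Placeholder for an alternative processing path.
    "FallbackTraining": {"Type": "Pass", "Next": "Done"},
    "StopForReview": {"Type": "Fail", "Error": "PipelineStopped",
                      "Cause": "Needs human intervention"},
    "Done": {"Type": "Succeed"},
}
definition = {"StartAt": "TrainModel", "States": states}


def next_targets(state):
    """Collect every state name that a state can transition to."""
    targets = [state[key] for key in ("Next", "Default") if key in state]
    for rule in state.get("Catch", []) + state.get("Choices", []):
        targets.append(rule["Next"])
    return targets


# Sanity check: every transition points at a state that exists.
dangling = [t for s in states.values() for t in next_targets(s) if t not in states]
asl_json = json.dumps(definition, indent=2)
```

Routing through one Choice state keeps the error policy in a single place; separate catchers with different `Next` targets would work equally well.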

Performance Tuning for Faster Execution Times

Optimizing Step Functions performance requires understanding the execution model and identifying bottlenecks in your ML workflow. The key areas for performance improvement include state transition optimization, parallel processing, and efficient resource utilization.

Parallel states dramatically reduce execution time for independent ML tasks. Instead of running feature engineering steps sequentially, you can process different feature sets simultaneously. Similarly, model validation across multiple datasets or hyperparameter combinations can run in parallel, significantly reducing overall pipeline duration.
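A Parallel state for two independent feature sets might look like the sketch below, again written as a Python dictionary that serializes to Amazon States Language. The Lambda function names and state names are assumptions chosen for illustration.

```python
import json


def lambda_branch(state_name, function_name):
    """Build a one-state branch that invokes a Lambda function."""
    return {
        "StartAt": state_name,
        "States": {
            state_name: {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
                "OutputPath": "$.Payload",  # keep only the function's result
                "End": True,
            }
        },
    }


parallel_state = {
    "Type": "Parallel",
    # Every branch receives the same input and runs concurrently.
    "Branches": [
        lambda_branch("NumericFeatures", "build-numeric-features"),
        lambda_branch("TextFeatures", "build-text-features"),
    ],
    # The result is an array with one entry per branch, in branch order.
    "ResultPath": "$.features",
    "Next": "TrainModel",
}

parallel_json = json.dumps(parallel_state, indent=2)
```

The state finishes when the slowest branch finishes, so the gain is largest when the branches take similar amounts of time.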

Map states excel at processing large datasets by distributing work across multiple concurrent iterations. When handling batch ML inference or data preprocessing tasks, an inline Map state runs up to 40 iterations concurrently, while a Distributed Map state can run up to 10,000 parallel child workflow executions. You can set `MaxConcurrency` to balance performance with resource constraints and downstream service capacity.
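As a rough illustration, the sketch below defines an inline Map state with a concurrency limit and a small helper that groups records into batches so each iteration does a meaningful amount of work. The function name `score-batch`, the `$.batches` path, and the batch size are assumptions.

```python
import json


def chunk(items, size):
    """Split a list into consecutive batches of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


map_state = {
    "Type": "Map",
    "ItemsPath": "$.batches",  # one iteration per batch
    "MaxConcurrency": 10,      # protect downstream services (assumed value)
    "ItemProcessor": {
        "ProcessorConfig": {"Mode": "INLINE"},
        "StartAt": "ScoreBatch",
        "States": {
            "ScoreBatch": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": "score-batch", "Payload.$": "$"},
                "OutputPath": "$.Payload",
                "End": True,
            }
        },
    },
    "ResultPath": "$.predictions",
    "End": True,
}

# Hypothetical execution input: 250 record IDs grouped into batches of 100.
execution_input = {"batches": chunk(list(range(250)), 100)}
map_json = json.dumps(map_state, indent=2)
```

Batching trades per-item visibility for fewer iterations and state transitions, which is usually a reasonable exchange for bulk inference.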

State transition overhead adds up in complex workflows. Minimizing the number of states and combining related operations into single Lambda functions or container tasks reduces execution time. For example, instead of separate states for data validation, transformation, and feature extraction, consider combining these into a single, well-optimized processing step.

Express workflows offer improved performance for high-frequency, short-duration ML tasks like real-time feature engineering or model serving. These workflows provide faster execution and lower latency compared to standard workflows, making them ideal for real-time ML inference patterns.

Resource right-sizing plays a crucial role in performance optimization. Ensure your Lambda functions have adequate memory allocation for ML processing tasks, and configure SageMaker instances appropriately for your model training requirements. Under-provisioned resources create unnecessary bottlenecks in your pipeline.

Cost Monitoring and Optimization Techniques

Managing costs effectively in Step Functions ML workflows requires understanding the pricing model and implementing optimization strategies across your entire pipeline. Standard workflows are billed per state transition, while Express workflows are billed by the number of executions, their duration, and the memory they consume, so workflow design choices directly affect your costs.

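Express workflows can cost significantly less than Standard workflows for high-volume, short-duration tasks. If your ML pipeline includes frequent feature preprocessing or real-time inference orchestration, Express workflows can reduce costs by up to 90% compared to Standard workflows, depending on execution count, duration, and memory use. However, they run for at most five minutes, do not keep execution history in Step Functions (you rely on CloudWatch Logs instead), and do not support the `.sync` or `.waitForTaskToken` integration patterns, so they cannot wait on a SageMaker training job or a human approval.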

Optimizing state transitions reduces the primary cost driver for Standard workflows. Combine related operations into single states where possible, and avoid unnecessary Pass states or overly granular state machines. Each state transition in a Standard workflow incurs a charge, and retries count as additional transitions, so efficient workflow design directly translates to cost savings.
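A back-of-the-envelope comparison can make these trade-offs concrete. The sketch below models Standard cost as a price per state transition and Express cost as a price per request plus a price per GB-second. The default prices are illustrative assumptions only, and the model ignores free tiers and billing increments, so check the current AWS pricing page for your region before relying on the numbers.

```python
def standard_cost(executions, transitions_per_execution,
                  price_per_transition=0.000025):  # assumed price, USD
    """Estimate Standard workflow cost from state transitions."""
    return executions * transitions_per_execution * price_per_transition


def express_cost(executions, avg_duration_seconds, memory_mb=64,
                 price_per_request=0.000001,       # assumed price, USD
                 price_per_gb_second=0.00001667):  # assumed price, USD
    """Estimate Express workflow cost from requests and duration."""
    gb_seconds = executions * avg_duration_seconds * (memory_mb / 1024)
    return executions * price_per_request + gb_seconds * price_per_gb_second


# Hypothetical workload: one million short executions per month.
executions = 1_000_000
before = standard_cost(executions, transitions_per_execution=10)
after = standard_cost(executions, transitions_per_execution=6)
consolidation_saving = 1 - after / before  # share saved by merging states

express = express_cost(executions, avg_duration_seconds=1.0)
```

Merging ten states into six cuts the Standard estimate by 40% in this model, while the Express estimate depends almost entirely on how long each execution runs.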

| Optimization Strategy | Cost Impact | Use Case |
|---|---|---|
| Express Workflows | 90% reduction | High-frequency ML tasks |
| State Consolidation | 30-50% reduction | Complex preprocessing pipelines |
| Parallel Processing | Faster execution = lower duration costs | Independent ML operations |
| Right-sized Resources | Variable savings | Lambda and SageMaker optimization |

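Resource scheduling helps minimize costs for training workflows. Scheduling expensive model training for off-peak hours can reduce contention for instance quotas and shared resources, but on-demand pricing does not change by time of day. The larger savings usually come from SageMaker Managed Spot Training, which suits pipelines that can tolerate interruptions and resume from checkpoints.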

Monitor your Step Functions costs through AWS Cost Explorer and set up billing alerts for unexpected cost spikes. Create cost allocation tags for different ML projects or environments to track spending patterns and identify optimization opportunities. Regular cost reviews help you spot trends and adjust your workflow design for better cost efficiency.

CloudWatch metrics help you identify cost optimization opportunities by revealing underutilized resources or inefficient execution patterns. Look for workflows with high failure rates, excessive retry attempts, or long idle times that might indicate resource misallocation or workflow design issues.

Advanced Patterns and Best Practices for Production

Implementing Human-in-the-Loop Workflows

Human-in-the-loop workflows become essential when your machine learning models need human oversight for critical decisions. AWS Step Functions provides several patterns to seamlessly integrate human approval or review steps into your automated ML pipelines.

The most common approach pauses the workflow at a Task state that uses the wait-for-callback integration pattern (`.waitForTaskToken`), combined with Amazon SNS notifications or AWS Lambda functions that start the approval process. A plain Wait state only delays for a fixed time and cannot resume on a reviewer’s decision. When your ML model produces predictions below a confidence threshold, the workflow can pause and send notifications to subject matter experts through email, Slack, or custom dashboards.

For sensitive applications like medical diagnosis or financial fraud detection, you can implement a callback pattern where the Step Functions task token gets stored in DynamoDB. Human reviewers access a web interface, review the model’s predictions alongside relevant data, and make approval decisions. Once they approve or reject, the interface returns the decision together with the task token (for example through the `SendTaskSuccess` API), which resumes the Step Functions execution.

Key implementation strategies include:

- Conditional branching: Use `Choice` states to route high-confidence predictions directly to production while sending uncertain cases for human review

- Timeout handling: Set reasonable timeouts for human review steps to prevent workflows from hanging indefinitely

- Parallel processing: Process multiple review requests simultaneously using `Parallel` states

- Audit trails: Log all human decisions in CloudWatch or DynamoDB for compliance and model improvement
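The strategies above might be wired together as in this sketch: a Choice state checks model confidence, a Task state sends the task token to an SQS queue and waits for a callback, and a timeout routes stalled reviews to an escalation path. The queue URL, the 0.9 threshold, the 24-hour timeout, and the field names (`$.prediction.confidence`, `approved`) are assumptions.

```python
import json

REVIEW_QUEUE_URL = "https://sqs.us-east-1.amazonaws.com/123456789012/review-queue"  # placeholder

review_states = {
    "CheckConfidence": {
        "Type": "Choice",
        "Choices": [
            # High-confidence predictions skip human review.
            {"Variable": "$.prediction.confidence",
             "NumericGreaterThanEquals": 0.9, "Next": "Publish"}
        ],
        "Default": "RequestReview",
    },
    "RequestReview": {
        "Type": "Task",
        # Pauses here until the token is returned via SendTaskSuccess/Failure.
        "Resource": "arn:aws:states:::sqs:sendMessage.waitForTaskToken",
        "Parameters": {
            "QueueUrl": REVIEW_QUEUE_URL,
            "MessageBody": {
                "taskToken.$": "$$.Task.Token",
                "prediction.$": "$.prediction",
            },
        },
        "TimeoutSeconds": 86400,  # give reviewers 24 hours (assumed value)
        "ResultPath": "$.review",
        "Catch": [{"ErrorEquals": ["States.Timeout"], "Next": "Escalate"}],
        "Next": "ReviewDecision",
    },
    "ReviewDecision": {
        "Type": "Choice",
        "Choices": [
            {"Variable": "$.review.approved", "BooleanEquals": True, "Next": "Publish"}
        ],
        "Default": "Reject",
    },
    "Escalate": {"Type": "Fail", "Error": "ReviewTimedOut",
                 "Cause": "No reviewer responded in time"},
    "Reject": {"Type": "Fail", "Error": "ReviewRejected",
               "Cause": "Reviewer rejected the prediction"},
    "Publish": {"Type": "Succeed"},
}
review_definition = {"StartAt": "CheckConfidence", "States": review_states}
review_json = json.dumps(review_definition, indent=2)

# Output a reviewer tool would send back with the token, for example via
# boto3: sfn.send_task_success(taskToken=token, output=reviewer_output)
reviewer_output = json.dumps({"approved": True})
```

Because this relies on `.waitForTaskToken`, it needs a Standard workflow rather than an Express one.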

Consider implementing tiered approval systems where different types of decisions require different levels of human oversight. Junior reviewers handle routine cases while escalating complex scenarios to senior experts.

Multi-region Deployment Strategies

Production ML workflows often require multi-region deployment for disaster recovery, latency optimization, and regulatory compliance. AWS Step Functions supports several patterns for building resilient, geographically distributed machine learning workflows.

Active-passive deployment represents the most straightforward approach. Your primary region handles all ML workflow execution while maintaining a standby region with identical infrastructure. Use AWS CloudFormation StackSets or AWS CDK to deploy consistent Step Functions state machines across regions. Store your ML models in S3 with cross-region replication enabled, ensuring model artifacts remain synchronized.

Active-active deployment provides better performance by distributing workloads across multiple regions based on user location or data residency requirements. Amazon Route 53 health checks can automatically redirect traffic to healthy regions when issues arise. Each region runs independent Step Functions workflows while sharing common resources like model registries through services like Amazon SageMaker Model Registry.

Data locality considerations play a crucial role in multi-region strategies. Processing data close to its origin reduces latency and transfer costs. Design your Step Functions workflows to detect data location and automatically route processing tasks to the appropriate region.
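One simple way to express this routing is a lookup from the data's region to the state machine deployed there, as sketched below. The ARNs, the region list, and the `residency_required` flag are hypothetical. In practice the data region might come from the S3 bucket location or from metadata in the triggering event.

```python
# Hypothetical state machine ARNs, one per deployed region.
STATE_MACHINES = {
    "us-east-1": "arn:aws:states:us-east-1:123456789012:stateMachine:ml-pipeline",
    "eu-west-1": "arn:aws:states:eu-west-1:123456789012:stateMachine:ml-pipeline",
}


def pick_state_machine(data_region, residency_required=False,
                       fallback_region="us-east-1"):
    """Choose the state machine closest to the data.

    If the data must stay in its region and nothing is deployed there,
    fail loudly instead of silently moving data across regions.
    """
    if data_region in STATE_MACHINES:
        return STATE_MACHINES[data_region]
    if residency_required:
        raise ValueError(f"no state machine deployed in {data_region}")
    return STATE_MACHINES[fallback_region]


local_arn = pick_state_machine("eu-west-1")
fallback_arn = pick_state_machine("ap-south-1")  # falls back to us-east-1
```

Failing on residency violations rather than falling back is a deliberate choice here, since a silent cross-region transfer can be a compliance problem.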

Cross-region coordination patterns:

| Pattern | Use Case | Benefits | Complexity |
|---|---|---|---|
| Independent execution | Regional data processing | Low latency, simple | Low |
| Cross-region callbacks | Global model updates | Consistency across regions | Medium |
| Federated workflows | Distributed training | Resource optimization | High |

Monitor cross-region workflows using CloudWatch dashboards that aggregate metrics from all regions. Set up alerts for region-specific failures and implement automatic failover mechanisms where appropriate.

Security Considerations for Sensitive ML Data

Protecting sensitive data throughout your ML workflows requires implementing security controls at every stage. AWS Step Functions provides multiple mechanisms to secure your machine learning workflows while maintaining operational efficiency.

Data encryption at rest and in transit forms the foundation of any secure ML pipeline. Enable encryption for all S3 buckets storing training data, model artifacts, and intermediate results using AWS KMS keys. Configure Step Functions to use encrypted parameters in AWS Systems Manager Parameter Store for sensitive configuration values like API keys or database credentials.

IAM roles and policies should follow the principle of least privilege. Create specific IAM roles for different workflow stages – data preprocessing roles only need read access to raw data buckets, while model training roles require broader permissions. Use resource-based policies to restrict access to specific S3 prefixes or DynamoDB tables based on the execution context.
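For the preprocessing role described above, a least-privilege policy could be generated like this. The sketch grants `s3:GetObject` on a single prefix and `s3:ListBucket` limited to that prefix; the bucket name and prefix are placeholders.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """Build an IAM policy allowing read-only access to one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
            {
                "Sid": "ListRawPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is restricted to keys under the same prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
        ],
    }


# Placeholder bucket and prefix for illustration only.
policy = read_only_prefix_policy("ml-raw-data", "ingest/2024/")
policy_json = json.dumps(policy, indent=2)
```

A training role would get its own, separate policy rather than widening this one.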

Secrets management becomes critical when workflows interact with external systems or databases. Store database passwords, API tokens, and other sensitive credentials in AWS Secrets Manager. Retrieve these secrets at runtime inside the task that needs them (for example, within the Lambda function) rather than hardcoding values or passing them through state input and output, which can appear in execution history and logs.

Network security patterns:

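- Run the tasks your workflow calls (Lambda functions, SageMaker jobs) inside a VPC when processing highly sensitive data; Step Functions itself is a managed service and has no VPC mode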

- Use VPC endpoints to keep traffic within AWS network boundaries

- Implement security groups that restrict access to only necessary ports and protocols

- Consider AWS PrivateLink for secure access to AWS services

Data masking and tokenization techniques help protect sensitive information during processing. Implement these transformations early in your workflow, replacing personally identifiable information with tokens that can be safely processed by downstream ML models.
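A minimal sketch of this step is shown below. It swaps in keyed hashing (HMAC-SHA256) as the tokenization technique, which gives stable tokens that cannot be reversed, so it is pseudonymization rather than a vault-based scheme that can restore the original values. The field names and the inline key are assumptions; a real key would come from a secrets store.

```python
import hashlib
import hmac


def tokenize(value, key):
    """Return a stable, non-reversible token for a sensitive value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record, pii_fields, key):
    """Copy a record, replacing the listed PII fields with tokens."""
    masked = dict(record)
    for field in pii_fields:
        if masked.get(field) is not None:
            masked[field] = tokenize(str(masked[field]), key)
    return masked


# Illustrative only: never hardcode a real key.
demo_key = b"demo-key-not-for-production"
raw = {"email": "jane@example.com", "age": 41, "purchase_total": 129.5}
safe = mask_record(raw, pii_fields=["email"], key=demo_key)
```

Because the same input always yields the same token under a given key, downstream models can still join or group on the tokenized field.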

Audit and compliance requirements often mandate detailed logging of data access and processing activities. CloudTrail records Step Functions API calls such as `StartExecution`, so make sure a trail captures them, and configure CloudWatch Logs to capture detailed execution history. Use AWS Config to monitor configuration changes and ensure compliance with organizational policies.

Regular security assessments and penetration testing help identify vulnerabilities in your ML workflows. Implement automated security scanning using tools like Amazon Inspector or third-party solutions that integrate with your Step Functions workflows through APIs.

AWS Step Functions transforms machine learning workflows from chaotic, error-prone processes into reliable, automated pipelines. We’ve covered everything from setting up your first ML pipeline to orchestrating complex model training and deployment workflows. The platform’s visual workflow designer makes it easy to map out data processing steps, automate feature engineering, and handle both real-time inference and batch processing with confidence. When issues arise, the built-in monitoring and debugging tools help you quickly identify bottlenecks and optimize performance.

Start small with a simple data processing workflow and gradually build complexity as your team gets comfortable with the service. The investment in learning Step Functions pays off quickly when you’re managing multiple models, handling different data sources, and need rock-solid reliability in production. Your ML projects will run smoother, your team will spend less time firefighting, and you’ll have the scalability to handle whatever your business throws at you next.