Amazon Bedrock AgentCore is Amazon’s centralized platform for deploying and governing AI agents at enterprise scale. This guide is written for IT leaders, cloud architects, and AI engineers who need to understand how AgentCore streamlines agent deployment while keeping governance controls in place.

AgentCore addresses the growing challenge of managing multiple AI agents across enterprise environments. Traditional AI deployments often struggle with consistency, security, and scalability issues. Amazon Bedrock AgentCore solves these problems by providing a unified architecture that standardizes how organizations build, deploy, and monitor their AI agents.

This tutorial walks through the core architecture behind Amazon Bedrock AI agents and explains how AgentCore’s built-in controls simplify enterprise AI governance. It also includes a step-by-step deployment guide for getting your first agents running in production.

Understanding Amazon Bedrock AgentCore Architecture

Core Components and Service Integration

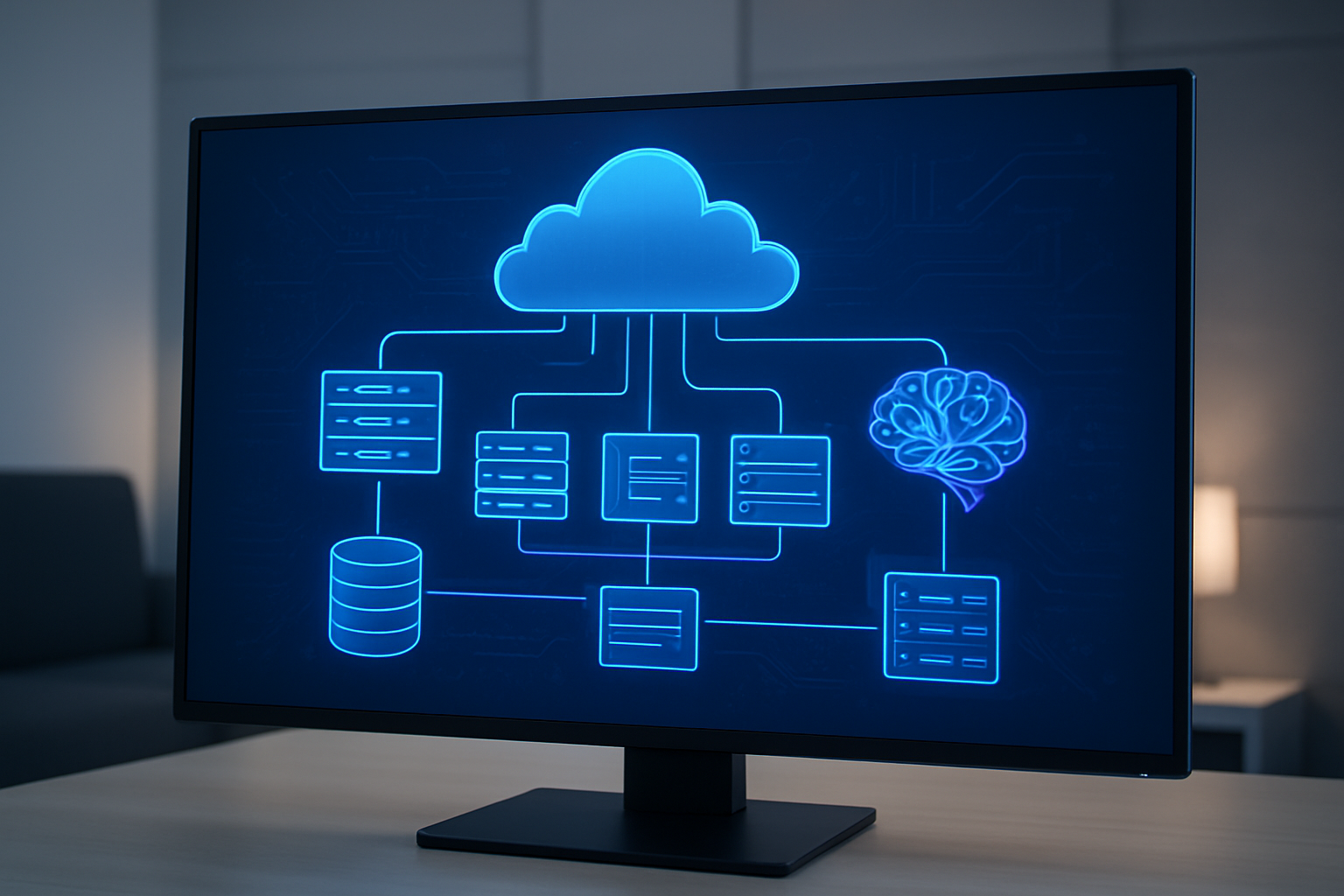

Amazon Bedrock AgentCore operates as a sophisticated orchestration layer that brings together multiple AWS services to create a unified AI agent platform. The architecture centers around three primary components: the Agent Runtime Engine, the Knowledge Base Integration Layer, and the Action Execution Framework.

The Agent Runtime Engine serves as the brain of the operation, handling natural language processing, reasoning chains, and decision-making workflows. This engine connects directly to the foundation models available through Amazon Bedrock, such as Anthropic Claude and Amazon Titan, so agents can use whichever model suits the task.

Knowledge Base Integration forms the second pillar, enabling agents to access and process information from various data sources. This component integrates with Amazon OpenSearch Service, Amazon S3, and other AWS storage services, creating a retrieval system that keeps agents informed and contextually aware.

The Action Execution Framework represents the hands-on component of Amazon Bedrock AgentCore, translating agent decisions into real-world actions. This framework connects with AWS Lambda functions, API Gateway endpoints, and external systems, allowing agents to perform tasks like sending emails, updating databases, or triggering business workflows.

Service integration extends beyond these core components to include Amazon CloudWatch for monitoring, AWS IAM for security management, and Amazon EventBridge for event-driven architectures. This comprehensive integration creates a robust ecosystem where AI agents can operate securely and efficiently at enterprise scale.

Relationship with the Amazon Bedrock Ecosystem

Amazon Bedrock AgentCore is the part of the broader Amazon Bedrock ecosystem designed specifically for building and managing intelligent agents. While Amazon Bedrock provides the foundational layer for accessing large language models and managing AI workloads, AgentCore extends these capabilities to autonomous AI agents.

The two services divide the work: AgentCore relies on Bedrock’s model management capabilities and adds its own layer of agent-specific functionality. Bedrock handles model provisioning, fine-tuning, and inference optimization, while AgentCore focuses on agent orchestration, memory management, and task execution.

This tight integration means that Amazon Bedrock AI agents built through AgentCore automatically benefit from Bedrock’s security features, compliance controls, and model governance capabilities. Organizations can apply consistent policies across their AI infrastructure, whether they’re running simple model inference tasks or complex multi-step agent workflows.

The ecosystem approach also enables seamless scaling and resource optimization. When an agent requires additional computational resources, AgentCore can dynamically allocate capacity through Bedrock’s infrastructure, ensuring consistent performance without manual intervention.

Key Differences from Traditional AI Frameworks

Traditional AI frameworks typically require extensive custom development to create agent-like behaviors, often resulting in brittle systems that struggle with real-world complexity. Amazon Bedrock AgentCore takes a fundamentally different approach by providing pre-built agent capabilities that organizations can configure rather than code from scratch.

Memory management represents one of the most significant differences. While traditional frameworks often require developers to manually implement conversation history and context retention, AgentCore provides built-in memory systems that automatically track user interactions, maintain context across sessions, and learn from previous encounters.

The planning and reasoning capabilities of AgentCore agents operate at a higher level of abstraction compared to traditional rule-based systems. Instead of programming specific if-then logic, developers define goals and constraints, allowing the underlying AI to determine the best approach for achieving objectives.

Error handling and recovery in traditional frameworks often requires extensive exception handling code. AgentCore agents can adapt to unexpected situations, recover from errors, and even learn from failures to improve future performance. This resilience comes built-in rather than requiring custom implementation.

Integration complexity also differs dramatically. Traditional AI frameworks often require significant effort to connect with external systems and data sources. AgentCore provides native connectors and integration patterns that simplify the process of building agents that can interact with existing business systems.

Technical Requirements and Prerequisites

Organizations planning to deploy Amazon Bedrock AgentCore need to meet specific technical requirements that ensure optimal performance and security. The foundation starts with appropriate AWS account permissions and IAM roles configured for agent operations.

Compute requirements vary based on agent complexity and expected workload. Basic agents can operate effectively on standard EC2 instances, while more sophisticated agents with complex reasoning chains may require higher-performance compute resources. The architecture supports both on-demand scaling and reserved capacity planning.

Network configuration plays a critical role in agent deployment. Organizations need properly configured VPCs, security groups, and subnet arrangements that allow secure communication between AgentCore components and external systems. API Gateway endpoints require specific routing configurations to enable agent actions.

Data storage requirements include Amazon S3 buckets for knowledge base storage, Amazon OpenSearch Service for vector search, and appropriate backup and versioning strategies. The storage architecture should support both the structured and unstructured data formats that agents might need to access.

Security prerequisites encompass encryption at rest and in transit, proper key management through AWS KMS, and comprehensive audit logging through CloudTrail. Organizations must also implement appropriate access controls and monitoring systems to maintain security standards across their AI agent infrastructure.

Development environment setup requires AWS CLI configuration, appropriate SDK installations, and testing frameworks that support agent development workflows. Teams should also establish CI/CD pipelines that can handle agent deployment and version management processes.

Governance Benefits for Enterprise AI Management

Enhanced Security and Compliance Controls

Amazon Bedrock AgentCore supports enterprise AI governance by providing security frameworks that protect sensitive data while maintaining operational flexibility. The platform implements multi-layered security protocols, including encrypted data transmission, secure API endpoints, and granular access controls that align with standards and regulations such as SOC 2, HIPAA, and GDPR.

Organizations can configure role-based permissions that restrict AI agent access to specific datasets, ensuring that only authorized personnel can deploy or modify agents handling critical business information. The system automatically encrypts all data at rest and in transit, while providing detailed logging of every interaction for comprehensive audit trails.

Compliance teams benefit from built-in policy templates that automatically configure agents to meet regulatory requirements. These templates include data retention policies, privacy controls, and automated data masking capabilities that protect personally identifiable information without disrupting AI operations.

Centralized AI Agent Monitoring and Oversight

The centralized monitoring capabilities of Amazon Bedrock AgentCore give administrators complete visibility into AI agent performance, resource usage, and operational patterns across the entire organization. Real-time dashboards display critical metrics including response times, success rates, and resource consumption, allowing teams to identify bottlenecks before they impact business operations.

The platform aggregates logs from multiple AI agents into a unified interface, making it easy to track agent behavior patterns and identify anomalies. Administrators can set custom alerts for specific events, such as unusual query volumes or failed authentication attempts, enabling proactive response to potential issues.

Version control features track all agent modifications, creating an auditable history of changes that helps teams understand how AI behavior evolves over time. This centralized approach eliminates the complexity of managing individual agent instances while providing the detailed oversight necessary for enterprise-grade AI governance.

Cost Optimization Through Resource Management

Amazon Bedrock AgentCore delivers significant cost savings through intelligent resource allocation and automated scaling capabilities. The platform continuously monitors AI agent usage patterns and automatically adjusts compute resources to match actual demand, preventing over-provisioning that leads to unnecessary expenses.

Budget controls allow organizations to set spending limits for different departments or projects, with automatic alerts when usage approaches defined thresholds. The system provides detailed cost breakdowns by agent, team, or business unit, giving finance teams the visibility needed for accurate budgeting and cost allocation.
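The threshold logic behind such budget alerts can be sketched in a few lines. This is a minimal illustration, not AgentCore's own mechanism: the function name `budget_alerts`, the team names, and the 80% warning ratio are all assumptions made for the example.

```python
def budget_alerts(spend_by_team, budgets, warn_ratio=0.8):
    """Return {team: status} for teams at or near their budget.

    spend_by_team and budgets map a team name to an amount in the same
    currency. warn_ratio is an assumed warning threshold (80% by default).
    """
    alerts = {}
    for team, budget in budgets.items():
        spent = spend_by_team.get(team, 0.0)
        if spent >= budget:
            alerts[team] = "over_budget"
        elif spent >= warn_ratio * budget:
            alerts[team] = "approaching_limit"
    return alerts
```

Teams that are comfortably under budget are simply omitted from the result, so an empty dictionary means nothing needs attention.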

Resource pooling capabilities enable multiple AI agents to share compute infrastructure efficiently, maximizing utilization while minimizing idle resources. The platform automatically schedules resource-intensive tasks during off-peak hours when compute costs are lower, further reducing operational expenses.

Automated Policy Enforcement Capabilities

Policy enforcement automation eliminates the manual overhead of ensuring AI agents comply with organizational standards and regulatory requirements. Amazon Bedrock AgentCore automatically applies predefined policies to new agent deployments, preventing non-compliant configurations from reaching production environments.

The system includes customizable policy templates covering data access restrictions, output filtering, and behavioral constraints that align with specific industry regulations. Automated policy validation checks run continuously, flagging any agents that drift from approved configurations and triggering immediate remediation actions.
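Configuration drift detection of this kind comes down to comparing an approved baseline with what is actually deployed. The sketch below assumes both are available as plain dictionaries; `find_drift` and the setting names in the usage note are hypothetical.

```python
def find_drift(approved, actual):
    """Return {setting: (approved_value, actual_value)} for each mismatch.

    A setting that is missing from `actual` is reported with None as its
    actual value. Extra settings in `actual` are ignored in this sketch.
    """
    drift = {}
    for key, expected in approved.items():
        found = actual.get(key)
        if found != expected:
            drift[key] = (expected, found)
    return drift
```

For example, comparing `{"guardrail": "strict", "logging": True}` against a deployed `{"guardrail": "relaxed", "logging": True}` reports only the `guardrail` setting, which a remediation job could then reset.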

Integration with existing enterprise governance tools ensures that AI agent policies remain synchronized with broader organizational security standards. The platform can automatically update agent configurations when policy changes occur, maintaining compliance without requiring manual intervention from IT teams.

Risk Mitigation and Audit Trail Features

Comprehensive audit trails capture every AI agent interaction, configuration change, and policy enforcement action, creating an immutable record for compliance reporting and risk assessment. These detailed logs include user authentication data, query parameters, response content, and system performance metrics that provide complete visibility into AI operations.

Risk assessment tools analyze agent behavior patterns to identify potential security threats or operational risks before they impact business operations. The system flags unusual activity patterns, such as unexpected data access requests or abnormal response generation, enabling security teams to respond quickly to potential issues.

Automated backup and recovery features protect against data loss while maintaining business continuity during system updates or unexpected failures. The platform creates regular snapshots of agent configurations and training data, ensuring that critical AI capabilities can be restored quickly if problems occur.

How Amazon Bedrock AgentCore Functions

Agent Lifecycle Management Process

Amazon Bedrock AgentCore manages AI agents through a comprehensive lifecycle that spans from initial creation to eventual retirement. The platform automatically handles agent initialization, configuration, and deployment without requiring extensive manual intervention. When you create a new agent, AgentCore provisions the necessary resources and establishes secure communication channels with your selected foundation models.

The lifecycle begins with agent registration, where you define the agent’s purpose, capabilities, and operational parameters. AgentCore then validates these configurations against enterprise governance policies before moving to the deployment phase. During runtime, the platform continuously monitors agent performance, resource consumption, and response quality through built-in telemetry systems.

Version control plays a crucial role in agent management. AgentCore maintains multiple agent versions simultaneously, enabling seamless rollbacks when issues arise. The platform automatically archives older versions while keeping active deployments running smoothly. Health checks run continuously, detecting anomalies in agent behavior or performance degradation.

When agents reach end-of-life, AgentCore orchestrates graceful shutdowns, ensuring no data loss or service interruptions. The platform archives conversation logs and performance metrics for compliance purposes while safely decommissioning resources.

Model Integration and Selection Mechanisms

Amazon Bedrock AgentCore provides model selection capabilities that adapt to specific use cases and performance requirements. The platform supports multiple foundation model families, including Anthropic Claude, Amazon Titan, and AI21 Labs Jurassic, allowing organizations to choose the most suitable model for each agent.

The model selection process operates through intelligent routing algorithms that consider factors like query complexity, response time requirements, and cost optimization. AgentCore analyzes incoming requests and automatically directs them to the most appropriate model based on predefined criteria and real-time performance metrics.
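To make the routing idea concrete, here is a minimal rule-based selector. It is only a sketch of the general technique: the `ModelProfile` fields, the model names, and every number are invented for illustration and do not describe AgentCore's actual routing logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical description of a candidate model (values are illustrative)."""
    name: str
    max_complexity: int        # highest query complexity (1-10) handled well
    typical_latency_ms: int    # assumed typical response latency
    cost_per_1k_tokens: float  # assumed relative cost


def choose_model(profiles, complexity, latency_budget_ms):
    """Pick the cheapest profile that meets both complexity and latency limits.

    If no profile meets both limits, fall back to the most capable one.
    """
    eligible = [
        p for p in profiles
        if p.max_complexity >= complexity
        and p.typical_latency_ms <= latency_budget_ms
    ]
    if eligible:
        return min(eligible, key=lambda p: p.cost_per_1k_tokens)
    return max(profiles, key=lambda p: p.max_complexity)


PROFILES = [
    ModelProfile("small-fast-model", 3, 300, 0.2),
    ModelProfile("mid-model", 6, 800, 1.0),
    ModelProfile("large-model", 10, 2000, 5.0),
]
```

With these profiles, a simple query with a one-second budget goes to `small-fast-model`, while a complex query that no model can answer within the latency budget falls back to `large-model`.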

Dynamic model switching represents a key feature of AgentCore’s architecture. The platform can switch between different models mid-conversation if performance thresholds aren’t met or if the query complexity changes. This flexibility ensures optimal response quality while maintaining cost efficiency.

Integration happens through standardized APIs that abstract the complexity of different model interfaces. Developers don’t need to learn model-specific implementations – AgentCore handles the translation between your agent logic and the underlying foundation models. The platform manages authentication, rate limiting, and error handling across all integrated models.

Custom model integration is also supported for organizations with specialized requirements. AgentCore provides extension points for connecting proprietary models while maintaining the same governance and monitoring capabilities available for native integrations.

Real-time Processing and Response Generation

AgentCore processes requests through a sophisticated pipeline designed for low-latency response generation. When users submit queries, the platform immediately analyzes the input for intent classification, entity extraction, and context understanding. This preprocessing happens in parallel with security validation and governance checks.

The processing engine maintains conversation context across multiple turns, enabling natural dialogue flows. AgentCore stores relevant conversation history in memory-optimized structures that provide instant access during response generation. The platform intelligently manages context windows to balance response quality with processing efficiency.
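One common way to manage a context window is to keep only the most recent turns that fit a token budget. The sketch below assumes a crude estimate of one token per four characters; both helper names are hypothetical, and a real deployment would use the model's own tokenizer.

```python
def estimate_tokens(text):
    """Rough token estimate: about one token per four characters (assumption)."""
    return max(1, len(text) // 4)


def trim_history(turns, max_tokens):
    """Keep the newest turns whose combined estimated tokens fit max_tokens.

    `turns` is a list of {"role": ..., "content": ...} dicts, oldest first.
    The returned list preserves the original order.
    """
    kept = []
    used = 0
    for turn in reversed(turns):
        cost = estimate_tokens(turn["content"])
        if used + cost > max_tokens:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    return kept
```

Dropping whole turns from the oldest end is the simplest policy; summarizing older turns instead is a frequent refinement when early context matters.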

Response generation uses streaming to deliver partial results as they become available. Users see responses appear in real time rather than waiting for complete processing, which significantly improves perceived performance and user satisfaction.

Caching mechanisms accelerate responses for frequently asked questions or similar queries. AgentCore identifies patterns in user interactions and precomputes responses for common scenarios. The cache invalidation system ensures users always receive current information while benefiting from improved response times.
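A response cache with time-based invalidation can be sketched as follows. This is a generic in-memory example rather than AgentCore's caching layer; the `ResponseCache` class and its normalization rule are assumptions.

```python
import time


class ResponseCache:
    """In-memory cache keyed by normalized query text, with time-based expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}   # normalized query -> (expires_at, response)

    @staticmethod
    def _normalize(query):
        # Collapse case and whitespace so trivially different queries share a key.
        return " ".join(query.lower().split())

    def get(self, query):
        key = self._normalize(query)
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, response = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired entry is invalidated on read
            return None
        return response

    def put(self, query, response):
        key = self._normalize(query)
        self._entries[key] = (self._clock() + self._ttl, response)
```

A short time-to-live trades some cache hits for fresher answers, which is usually the safer default when the underlying knowledge base changes often.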

Load balancing distributes processing across multiple compute instances based on current demand. AgentCore automatically scales resources up or down to maintain consistent performance during traffic spikes. The platform includes circuit breakers that prevent cascading failures and maintain service availability even when individual components experience issues.
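The circuit breaker pattern mentioned above is easy to illustrate in isolation. The following is a minimal single-threaded sketch with assumed defaults (three failures, a 30-second cool-down); it is not the platform's implementation.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency until a cool-down period has passed."""

    def __init__(self, failure_threshold=3, reset_after_seconds=30.0,
                 clock=time.monotonic):
        self._threshold = failure_threshold
        self._reset_after = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise RuntimeError("circuit open: call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure from here reopens the circuit.
            self._opened_at = None
            self._failures = self._threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success fully closes the circuit
        return result
```

While the circuit is open, callers fail fast with `RuntimeError` instead of piling more load onto a dependency that is already struggling.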

Step-by-Step Deployment Guide for AI Agents

Initial Setup and Configuration Requirements

Before deploying Amazon Bedrock AI agents, you need to establish the proper foundation in your AWS environment. Start by ensuring your AWS account has the necessary permissions for Amazon Bedrock AgentCore services. Create an IAM role with policies that grant access to Bedrock runtime APIs, S3 buckets for knowledge bases, and CloudWatch for monitoring.
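As a rough sketch of what such a role might contain, the helpers below build a trust policy and a permissions policy as plain dictionaries. The `bedrock.amazonaws.com` service principal, the bucket name, and the choice of actions are assumptions for illustration; check the current AWS documentation for the principal and permissions your agent type actually needs.

```python
import json


def build_trust_policy(service_principal="bedrock.amazonaws.com"):
    """Trust policy that lets the given AWS service assume the role.

    The default principal is an assumption; confirm it for your service.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_permissions_policy(kb_bucket, region="us-east-1"):
    """Permissions for model invocation, knowledge base reads, and logging."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeModels",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/*",
            },
            {
                "Sid": "ReadKnowledgeBaseBucket",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{kb_bucket}",
                    f"arn:aws:s3:::{kb_bucket}/*",
                ],
            },
            {
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": "*",  # narrow this to specific log groups in practice
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_permissions_policy("example-kb-bucket"), indent=2))
```

The resulting documents can be serialized with `json.dumps` and supplied to IAM through the console, the CLI, or an infrastructure-as-code template.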

Your AWS region selection matters significantly for performance and compliance. Choose a region where Bedrock services are fully available and align with your data residency requirements. Configure your VPC settings if you plan to deploy agents within a private network environment.

Set up the required dependencies including:

- AWS CLI version 2 or later

- AWS SDK for Python (boto3), in a release recent enough to include the Bedrock clients

- Appropriate security groups and network ACLs

- S3 buckets for storing agent artifacts and knowledge bases

- CloudWatch log groups for monitoring agent activities

Environment variables should hold your region settings and any custom configuration parameters. For credentials, prefer IAM roles or named AWS CLI profiles over long-lived access keys in environment variables, and use AWS Secrets Manager to store sensitive information such as API keys or database credentials that your agents might need.
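A small loader that validates configuration at startup helps catch missing settings early. In this sketch the variable names `AGENT_ID` and `AGENT_LOG_GROUP`, and the default log group, are hypothetical; substitute whatever your deployment actually uses.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSettings:
    region: str
    agent_id: str
    log_group: str


def load_settings(env=None):
    """Read agent settings from a mapping (defaults to os.environ).

    Raises ValueError listing every required variable that is missing or empty.
    """
    env = os.environ if env is None else env
    required = ("AWS_REGION", "AGENT_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise ValueError("missing required settings: " + ", ".join(missing))
    return AgentSettings(
        region=env["AWS_REGION"],
        agent_id=env["AGENT_ID"],
        log_group=env.get("AGENT_LOG_GROUP", "/agents/default"),
    )
```

Passing a dictionary instead of relying on `os.environ` keeps the loader easy to test and makes the required settings explicit.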

Agent Creation and Customization Process

Creating effective Amazon Bedrock AI agents starts with defining clear objectives and use cases. Begin in the AWS console by navigating to the Bedrock service and selecting “Agents” from the sidebar menu. Click “Create Agent” to launch the configuration wizard.

Name your agent descriptively and provide a clear purpose statement. This helps with organization when managing multiple agents across your enterprise AI governance framework. Select the appropriate foundation model based on your specific requirements – Claude, Titan, or other available models each have different strengths.

The instruction field requires careful attention. Write clear, specific instructions that define how your agent should behave, what tone to use, and any constraints it should follow. Include examples of desired responses to help the model understand your expectations.

Knowledge base integration transforms your agent from a general AI assistant into a domain-specific expert. Upload relevant documents to S3 and create a knowledge base that your agent can reference. Supported formats include PDF, Word documents, and text files. The system automatically chunks and indexes this content for retrieval.

Action groups enable your agents to perform specific tasks beyond conversation. Define functions your agent can call, such as database queries, API calls, or file operations. Each action group needs a clear description and defined parameters.
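Action group functions are commonly backed by AWS Lambda. The handler below is a sketch that assumes the event and response shapes used by Bedrock Agents for function-based action groups (a `parameters` list on input, a `functionResponse` body on output); verify these against the current documentation before relying on them. The order lookup and its data are invented for the example.

```python
import json

# Hypothetical in-memory data standing in for a real order system.
_ORDERS = {"1001": "shipped", "1002": "processing"}


def lambda_handler(event, context):
    """Look up an order status and return it in the assumed agent response shape."""
    # Parameters are assumed to arrive as a list of {"name", "type", "value"} dicts.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    order_id = params.get("order_id", "")
    status = _ORDERS.get(order_id, "unknown")
    body = json.dumps({"order_id": order_id, "status": status})
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {
                "responseBody": {"TEXT": {"body": body}},
            },
        },
    }
```

Keeping each function small and returning a structured body makes it easier for the agent to turn the result into a natural-language answer.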

Configure guardrails to ensure responsible AI behavior. Set content filters, topic restrictions, and response guidelines that align with your enterprise AI governance policies.

Testing and Validation Procedures

Comprehensive testing ensures your Amazon Bedrock AI agents perform reliably before production deployment. Start with unit testing individual components like knowledge base retrieval and action group functions. Use the built-in test console to validate basic conversational capabilities.

Create test scenarios that cover:

- Happy path conversations where everything works as expected

- Edge cases with unusual or ambiguous user inputs

- Error handling when external systems are unavailable

- Knowledge base accuracy for domain-specific queries

- Action group execution with various parameter combinations
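Scenarios like these can be captured in a small table-driven harness. The sketch below runs against a stub so that it stays self-contained; `stub_agent`, the prompts, and the expected phrases are all hypothetical, and in practice you would swap the stub for a function that invokes your deployed agent.

```python
def run_scenarios(agent, scenarios):
    """Run each scenario against `agent` and return a list of failure messages."""
    failures = []
    for scenario in scenarios:
        try:
            reply = agent(scenario["prompt"])
        except Exception as exc:
            failures.append(f"{scenario['name']}: raised {type(exc).__name__}")
            continue
        missing = [
            phrase for phrase in scenario["must_contain"]
            if phrase.lower() not in reply.lower()
        ]
        if missing:
            failures.append(f"{scenario['name']}: missing {missing}")
    return failures


def stub_agent(prompt):
    """Stand-in for a real agent call; replace with your invocation code."""
    if not prompt.strip():
        return "Could you clarify what you need help with?"
    if "refund" in prompt.lower():
        return "Refunds are processed within 5 business days."
    return "I'm not sure, let me connect you with support."


SCENARIOS = [
    {"name": "happy path", "prompt": "How long do refunds take?",
     "must_contain": ["5 business days"]},
    {"name": "empty input", "prompt": "   ",
     "must_contain": ["clarify"]},
    {"name": "unknown topic", "prompt": "Tell me about quantum gravity",
     "must_contain": ["support"]},
]
```

An empty list from `run_scenarios(stub_agent, SCENARIOS)` means every scenario passed. Substring checks are deliberately loose because model output varies between runs.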

Performance testing reveals how your agent handles concurrent users and complex queries. Monitor response times, token usage, and resource consumption during load testing, and consult the Amazon Bedrock documentation for quota and benchmarking guidance relevant to your use case.

Security validation confirms your agent respects access controls and data privacy requirements. Test with different user roles to ensure proper authorization. Verify that sensitive information doesn’t leak through responses and that guardrails function correctly.

Document all test results and create a validation checklist for future agent deployments. The checklist lets you roll out additional agents without repeating the same discovery work.

Production Deployment Best Practices

Deploying Amazon Bedrock AI agents to production requires careful orchestration and monitoring setup. Use infrastructure as code tools like CloudFormation or Terraform to ensure consistent deployments across environments. This approach also supports governance, because every change is version-controlled and leaves an audit trail.

Implement gradual rollouts using feature flags or traffic splitting. Start with a small percentage of users to validate performance in real-world conditions. Monitor key metrics including response latency, error rates, and user satisfaction scores.
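Percentage-based traffic splitting is often implemented by hashing a stable user identifier into a bucket. The helper names below are hypothetical; the technique itself is a standard way to keep each user's assignment consistent between requests.

```python
import hashlib


def rollout_bucket(user_id):
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def use_new_agent(user_id, rollout_percent):
    """Return True if this user falls inside the current rollout percentage."""
    return rollout_bucket(user_id) < rollout_percent
```

Because the bucket depends only on the user ID, raising the percentage from 10 to 50 keeps every user who already had the new agent and adds more, rather than reshuffling everyone.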

Set up comprehensive monitoring using CloudWatch dashboards that track:

- Agent invocation frequency and success rates

- Knowledge base query performance

- Action group execution times

- Cost metrics for foundation model usage

- User engagement patterns

Configure alerts for abnormal behavior like sudden spikes in errors or unusual response patterns. Create runbooks for common issues so your team can respond quickly to problems.
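An error-count alert could be expressed as parameters for CloudWatch's `PutMetricAlarm` API. In the sketch below the parameter keys follow that API, but the namespace, metric name, and dimension are hypothetical placeholders for whatever metrics your agents actually emit.

```python
def error_count_alarm(agent_name, threshold_errors, sns_topic_arn):
    """Build keyword arguments for a CloudWatch metric alarm.

    Namespace, MetricName, and Dimensions are illustrative assumptions.
    """
    return {
        "AlarmName": f"{agent_name}-high-error-count",
        "Namespace": "Custom/Agents",      # hypothetical custom namespace
        "MetricName": "InvocationErrors",  # hypothetical metric name
        "Dimensions": [{"Name": "AgentName", "Value": agent_name}],
        "Statistic": "Sum",
        "Period": 300,                     # evaluate in 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": float(threshold_errors),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }
```

With boto3 installed and credentials configured, the dictionary can be passed as `boto3.client("cloudwatch").put_metric_alarm(**params)`.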

Establish regular maintenance windows for updating agent configurations, refreshing knowledge bases, and applying security patches. Include automated backups of agent configurations and their associated data in the same routine.

Plan for scaling by monitoring usage patterns and adjusting provisioned capacity accordingly. Amazon Bedrock AgentCore automatically handles many scaling requirements, but understanding your specific usage patterns helps optimize costs and performance.

Implement proper logging that captures both technical metrics and business-relevant data while respecting privacy regulations. This data becomes valuable for improving agent performance and understanding user needs over time.

Optimizing Performance and Scalability

Performance Monitoring and Analytics Tools

Amazon Bedrock AgentCore provides monitoring capabilities that help you track agent performance in real time. The platform integrates with Amazon CloudWatch, giving you detailed metrics on response times, throughput rates, and error frequencies. You can set up custom dashboards to visualize key performance indicators like agent invocation counts, model inference latency, and resource utilization patterns.

The built-in analytics tools offer deeper insights into agent behavior and user interactions. Track conversation flows, identify bottlenecks in your agent workflows, and monitor the effectiveness of different knowledge bases. Amazon Bedrock AgentCore automatically logs detailed execution traces, making it easy to debug issues and optimize agent responses. These logs capture everything from initial user queries to final agent outputs, including intermediate reasoning steps.

Setting up alerts becomes straightforward with CloudWatch integration. Configure notifications for threshold breaches, unusual traffic spikes, or performance degradation. The platform also provides cost optimization recommendations based on usage patterns, helping you balance performance requirements with budget constraints.

Scaling Strategies for High-Volume Workloads

Scaling agents for enterprise workloads takes deliberate planning. Amazon Bedrock AgentCore supports both horizontal and vertical scaling approaches to handle varying workload demands. Auto-scaling capabilities adjust resources based on incoming request volumes, ensuring consistent performance during traffic spikes.

Load balancing distributes requests across multiple agent instances, preventing any single instance from becoming overwhelmed. The platform supports regional deployment strategies, allowing you to position AI agents closer to your users for reduced latency. Consider implementing caching mechanisms for frequently accessed knowledge base content to improve response times and reduce computational overhead.

For high-volume deployments, implement request queuing and rate limiting to manage traffic flow effectively. Amazon Bedrock AgentCore provides built-in throttling mechanisms that protect your agents from being overwhelmed while maintaining service availability. Batch processing capabilities allow you to handle multiple requests simultaneously, improving overall throughput.
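Client-side rate limiting is often built on a token bucket, which allows short bursts while capping the sustained request rate. The class below is a generic single-threaded sketch, not the platform's built-in throttling.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` and a sustained `rate_per_second`."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self._rate = rate_per_second
        self._capacity = capacity
        self._clock = clock  # injectable so refill can be tested without sleeping
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        """Consume one token if available and report whether the request may run."""
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

Requests that are refused can be queued and retried after a short delay, which pairs naturally with the request queuing described above.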

Memory management becomes critical at scale. Configure appropriate memory allocation for your agents based on knowledge base size and expected concurrent users. The platform offers guidance on optimal configurations for different use cases, from lightweight customer service bots to complex enterprise assistants.

Integration with Existing AWS Services

Amazon Bedrock AgentCore works smoothly with your existing AWS infrastructure, creating a unified AI ecosystem. Direct integration with Amazon S3 allows your agents to access vast knowledge repositories stored in data lakes. Connect to Amazon DynamoDB for real-time data retrieval, enabling agents to provide up-to-date information about inventory, customer records, or business metrics.

API Gateway integration enables secure exposure of your AI agents to external applications and third-party systems. This creates opportunities for embedding agent capabilities into existing workflows, mobile applications, or web platforms. The platform supports both REST and WebSocket protocols for different interaction patterns.

Lambda functions can extend agent capabilities with custom business logic. Trigger specific Lambda functions based on agent decisions, integrate with proprietary systems, or perform complex calculations that supplement agent responses. Amazon Bedrock AgentCore also connects with Amazon Comprehend for enhanced natural language processing and Amazon Translate for multi-language support.

Security integration with AWS Identity and Access Management (IAM) ensures proper access controls across all connected services. Use AWS Secrets Manager to securely store API keys and connection strings needed for external integrations. CloudTrail integration provides comprehensive audit trails for compliance and security monitoring.

Amazon EventBridge enables event-driven architectures where agent actions can trigger downstream processes across your AWS environment. This allows agent decisions to initiate workflows, update databases, or notify other systems in real time.
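An agent action can be published as an EventBridge event by building an entry for the `PutEvents` API. The entry keys below follow that API, while the `custom.agents` source and the detail-type naming are assumptions chosen for the example.

```python
import json


def build_agent_event(agent_name, action, detail, bus_name="default"):
    """Build one entry for EventBridge PutEvents describing an agent action."""
    payload = {"agent": agent_name}
    payload.update(detail)
    return {
        "Source": "custom.agents",       # hypothetical event source name
        "DetailType": f"agent.{action}",  # hypothetical naming scheme
        "Detail": json.dumps(payload),    # Detail must be a JSON string
        "EventBusName": bus_name,
    }
```

With boto3 installed and credentials configured, the entry can be sent with `boto3.client("events").put_events(Entries=[entry])`, and EventBridge rules can then match on `Source` and `DetailType` to route it.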

Amazon Bedrock AgentCore brings powerful capabilities to enterprises looking to manage their AI initiatives with better control and oversight. The platform’s architecture gives you the foundation to build reliable AI agents while maintaining proper governance standards. You get clear benefits like enhanced security, streamlined compliance, and centralized management that makes scaling your AI operations much easier.

Getting your AI agents up and running doesn’t have to be complicated when you follow the right deployment steps and optimization practices. Start small with a pilot project to test the waters, then expand your AgentCore implementation as your team becomes more comfortable with the platform. The key is to focus on your specific business needs and take advantage of the built-in governance features that will save you headaches down the road.