Managing multiple AWS environments quickly turns messy without standardization. Terraform offers a clear path from ad hoc, hand-built infrastructure to organized, repeatable systems that your entire team can understand and maintain.

This guide is designed for DevOps engineers, cloud architects, and infrastructure teams who want to implement AWS environment standardization using Infrastructure as Code principles. Whether you’re managing development, staging, and production environments or handling multi-client deployments, you’ll learn practical strategies to streamline your AWS operations.

We’ll walk through creating reusable infrastructure modules that eliminate configuration drift and reduce deployment errors across your environments. You’ll also discover how to build automated AWS deployment pipelines that make infrastructure changes as simple as pushing code to your repository. Finally, we’ll cover Terraform best practices for managing multiple AWS environments without losing your sanity or breaking your budget.

Benefits of Terraform for AWS Environment Management

Eliminate Configuration Drift Across Multiple Environments

Configuration drift becomes a nightmare when managing AWS environments manually. One developer makes a quick security group change in production, another adjusts load balancer settings in staging, and suddenly your environments look completely different. Terraform addresses this by defining your infrastructure in code, so every environment is built from the same blueprint and terraform plan surfaces any out-of-band change as a difference to reconcile.

When you use Infrastructure as Code with Terraform, your development, staging, and production environments stay identical in structure. The same Terraform configuration that creates your VPC, subnets, and security groups in development will create them exactly the same way in production. This consistency eliminates those frustrating “it works on my machine” moments that plague teams using manual provisioning.

Reduce Manual Provisioning Errors and Deployment Time

Manual AWS console clicks are error-prone and time-consuming. A typical web application setup might involve creating dozens of resources – VPCs, subnets, route tables, security groups, load balancers, EC2 instances, RDS databases, and S3 buckets. Doing this manually for multiple environments means hours of repetitive clicking and high chances of misconfiguration.

Terraform reduces this process to a reviewable plan followed by a single apply. Work that took hours by hand typically completes in minutes, and because the same code runs every time, the click-path mistakes of manual provisioning disappear. Errors in the code itself are still possible, which is why plans get reviewed before they are applied. Your infrastructure gets provisioned as specified in your Terraform modules, every single time, and the faster deployments can save development teams days of work each month.

Enable Version-Controlled Infrastructure Changes

Treating infrastructure like application code changes how teams manage AWS environments. Every infrastructure change gets committed to version control, creating a complete audit trail of who changed what and when. You can see exactly how your production environment evolved over time, roll back problematic changes by reverting the commit and re-applying, and review infrastructure modifications through pull requests.

This version control approach brings the same collaboration benefits that developers enjoy with application code to infrastructure management. Team members can propose infrastructure changes through pull requests, discuss modifications, and ensure changes meet standards before applying them to AWS environments.

Scale Infrastructure Consistently Across Teams

Growing organizations struggle with infrastructure inconsistency across different teams and projects. One team might use t3.micro instances while another uses t2.small for similar workloads. Security configurations vary between projects, making compliance audits difficult and security vulnerabilities more likely.

AWS infrastructure automation through Terraform modules solves this scaling challenge. Teams share standardized modules for common patterns like three-tier web applications, microservices architectures, or data processing pipelines. When your organization has fifty projects, all using the same vetted, secure infrastructure patterns, management becomes straightforward rather than chaotic.

Terraform best practices encourage creating reusable modules that encode your organization’s standards and security requirements. New projects start with proven infrastructure patterns instead of reinventing the wheel, accelerating development while maintaining consistency.

Essential Terraform Components for AWS Standardization

Configure AWS provider and authentication methods

Setting up the AWS provider correctly forms the foundation of any successful Terraform AWS implementation. The required_providers block pins which version of the AWS provider plugin Terraform downloads, and the provider block configures how Terraform connects to your AWS account, such as the region and credentials. Start by specifying a version constraint to ensure consistency across your team and environments.

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    provider "aws" {
      region = var.aws_region
    }

Authentication methods vary based on your deployment environment. For local development, AWS CLI credentials work well, while production environments benefit from IAM roles. EC2 instances can use instance profiles, and CI/CD pipelines should leverage temporary credentials through assumed roles. Environment variables offer another secure option for containerized deployments.
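For the CI/CD case, one possible shape is a provider that assumes a deployment role instead of relying on long-lived keys. This is a minimal sketch that would stand in for the provider block shown earlier; the variable deploy_role_arn and the session name are illustrative assumptions, not names from an existing setup.

```hcl
# Hypothetical inputs; the names are illustrative.
variable "aws_region" {
  description = "Region to deploy into"
  type        = string
}

variable "deploy_role_arn" {
  description = "ARN of an existing IAM role that the pipeline is allowed to assume"
  type        = string
}

provider "aws" {
  region = var.aws_region

  # Terraform exchanges its base credentials (CLI profile, environment
  # variables, or instance profile) for temporary credentials on this role.
  assume_role {
    role_arn     = var.deploy_role_arn
    session_name = "terraform-deploy"
  }
}
```

Keeping the role ARN in a variable lets each environment point at its own role without changing the configuration itself.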

Configure multiple provider aliases when working across different AWS regions or accounts. This approach enables you to deploy resources in multiple locations from a single Terraform configuration:

    provider "aws" {
      alias  = "us-east-1"
      region = "us-east-1"
    }

    provider "aws" {
      alias  = "eu-west-1"
      region = "eu-west-1"
    }
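Defining an alias does nothing until a resource or module selects it. The sketch below shows both forms, assuming the aliases above and a default (un-aliased) provider; the bucket names and the module path are hypothetical.

```hcl
# Uses the default (un-aliased) provider configuration.
resource "aws_s3_bucket" "primary" {
  bucket = "example-app-assets-primary" # hypothetical bucket name
}

# Explicitly selects the eu-west-1 alias for a single resource.
resource "aws_s3_bucket" "replica" {
  provider = aws.eu-west-1
  bucket   = "example-app-assets-replica" # hypothetical bucket name
}

# Passes an aliased provider to a module, which sees it as its default "aws".
module "eu_networking" {
  source = "./modules/networking" # hypothetical local module path

  providers = {
    aws = aws.eu-west-1
  }
}
```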

Structure modules for reusable infrastructure components

Terraform modules serve as the building blocks for AWS infrastructure standardization. Think of modules as blueprints that define common patterns your organization uses repeatedly. A well-structured module contains three essential files: main.tf for resources, variables.tf for inputs, and outputs.tf for return values.

Create focused modules that handle specific infrastructure components rather than monolithic configurations. For example, separate your VPC module from your database module. This granular approach makes modules easier to test, maintain, and reuse across different projects.

    modules/
    ├── networking/
    │   ├── main.tf
    │   ├── variables.tf
    │   └── outputs.tf
    ├── database/
    │   ├── main.tf
    │   ├── variables.tf
    │   └── outputs.tf
    └── compute/
        ├── main.tf
        ├── variables.tf
        └── outputs.tf

Module inputs should be flexible enough to accommodate different use cases while maintaining sensible defaults. Use validation blocks to catch configuration errors early and provide clear error messages. Document your modules thoroughly with descriptions for each variable and output.

Publish stable modules to a private registry or version control system with proper tagging. This practice allows teams to reference specific module versions, preventing unexpected changes from breaking existing infrastructure. Module versioning becomes especially important when multiple teams share common infrastructure patterns.
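Pinning a module call to a tagged release might look like the sketch below. The repository URL, registry address, version numbers, and input names are all placeholders rather than references to a real module.

```hcl
module "networking" {
  # Hypothetical repository URL; "ref" pins the call to a tagged release,
  # and the double slash selects a subdirectory of the repository.
  source = "git::https://git.example.com/platform/terraform-modules.git//networking?ref=v1.4.0"

  # Illustrative inputs; the real names depend on the module's variables.tf.
  environment = "staging"
  vpc_cidr    = "10.20.0.0/16"
}

# Alternative for a private registry (hypothetical address), where the
# version argument accepts a constraint instead of an exact tag:
#
# module "networking" {
#   source  = "app.terraform.io/example-org/networking/aws"
#   version = "~> 1.4"
# }
```

Upgrading then becomes an explicit, reviewable change to the ref or version value rather than something that happens whenever the module's main branch moves.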

Implement variable management for environment-specific configurations

Variable management separates configuration from code, enabling the same Terraform modules to work across development, staging, and production environments. Structure your variables hierarchically, starting with global defaults, then environment-specific overrides, and finally component-specific values.

Use terraform.tfvars files for each environment to maintain clean separation:

    environments/
    ├── dev/
    │   └── terraform.tfvars
    ├── staging/
    │   └── terraform.tfvars
    └── production/
        └── terraform.tfvars

Variable types and validation rules prevent configuration mistakes before deployment. Define complex variables using objects and maps to group related settings together. For instance, group all database configuration into a single variable rather than scattering individual properties throughout your code.

    variable "database_config" {
      description = "Database configuration settings"

      type = object({
        instance_class    = string
        allocated_storage = number
        multi_az          = bool
      })

      validation {
        condition     = var.database_config.allocated_storage >= 20
        error_message = "Database storage must be at least 20 GB."
      }
    }

Sensitive variables require special handling to prevent accidental exposure. Marking a variable with sensitive = true redacts it from plan and apply output, but the value is still written to the state file in plain text, so the state backend itself must be encrypted and access-controlled. Use AWS Parameter Store or Secrets Manager for truly confidential data like database passwords or API keys. This approach keeps secrets out of your Terraform code and version control while maintaining infrastructure automation capabilities.
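A minimal sketch of both techniques follows: a variable flagged as sensitive, and a secret read from Secrets Manager at plan time. The variable names and the assumed JSON layout of the secret are illustrative.

```hcl
variable "api_token" {
  description = "Third-party API token, supplied at run time (hypothetical)"
  type        = string
  sensitive   = true # redacted in CLI output, but still stored in state
}

variable "db_secret_name" {
  description = "Name of an existing Secrets Manager secret (hypothetical)"
  type        = string
}

# Reads the current version of the secret; nothing secret lives in the code.
data "aws_secretsmanager_secret_version" "db" {
  secret_id = var.db_secret_name
}

locals {
  # Assumes the secret stores JSON such as {"username": "...", "password": "..."}.
  db_credentials = jsondecode(data.aws_secretsmanager_secret_version.db.secret_string)
}
```

Values read through the data source also end up in state, which is one more reason to encrypt the state bucket and restrict who can read it.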

Environment-specific variable files should include resource naming conventions, sizing parameters, and feature flags. Use consistent naming patterns across environments to make configurations predictable and maintainable. Consider using data sources to pull environment-specific information dynamically rather than hardcoding values wherever possible.

Creating Reusable Infrastructure Modules

Design modular VPC configurations with standardized networking

Building a solid foundation starts with creating standardized VPC modules that can be reused across different environments. Your Terraform modules should define consistent network architectures that include public and private subnets, route tables, internet gateways, and NAT gateways. When you design these modules, think about making them flexible enough to handle different subnet counts and CIDR blocks while maintaining the same basic structure.

A well-designed VPC module accepts variables for environment names, availability zones, and subnet configurations. This approach lets you deploy the same network pattern for development, staging, and production environments without copying code. Your module should automatically handle subnet calculations, create appropriate route tables, and establish security group rules that follow your organization’s standards.

Consider implementing naming conventions within your modules that include environment tags and resource types. This makes it easy to identify resources and manage costs across different AWS environments. Your VPC module can also include outputs that other modules can reference, creating a clear dependency chain in your Terraform AWS infrastructure.
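The following is a pared-down sketch of such a VPC module, not a complete network. It shows the input variables, subnet calculation with cidrsubnet, and outputs; internet gateways, NAT gateways, and route tables are omitted for brevity. All names are illustrative.

```hcl
variable "environment" {
  description = "Environment name used in resource names and tags"
  type        = string
}

variable "vpc_cidr" {
  description = "CIDR block for the VPC (the subnet math below assumes a /16)"
  type        = string
  default     = "10.0.0.0/16"
}

variable "availability_zones" {
  description = "Availability zones to spread subnets across"
  type        = list(string)
}

resource "aws_vpc" "this" {
  cidr_block           = var.vpc_cidr
  enable_dns_hostnames = true

  tags = {
    Name        = "${var.environment}-vpc"
    Environment = var.environment
  }
}

# One /24 per zone: public subnets take network numbers 0, 1, 2, ...
resource "aws_subnet" "public" {
  count                   = length(var.availability_zones)
  vpc_id                  = aws_vpc.this.id
  availability_zone       = var.availability_zones[count.index]
  cidr_block              = cidrsubnet(var.vpc_cidr, 8, count.index)
  map_public_ip_on_launch = true

  tags = {
    Name = "${var.environment}-public-${var.availability_zones[count.index]}"
  }
}

# Private subnets start at network number 100 to leave room for growth.
resource "aws_subnet" "private" {
  count             = length(var.availability_zones)
  vpc_id            = aws_vpc.this.id
  availability_zone = var.availability_zones[count.index]
  cidr_block        = cidrsubnet(var.vpc_cidr, 8, count.index + 100)

  tags = {
    Name = "${var.environment}-private-${var.availability_zones[count.index]}"
  }
}

output "vpc_id" {
  value = aws_vpc.this.id
}

output "private_subnet_ids" {
  value = aws_subnet.private[*].id
}
```

With the default CIDR, cidrsubnet yields 10.0.0.0/24, 10.0.1.0/24, and so on for public subnets, and 10.0.100.0/24 onward for private ones.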

Build standardized compute resource templates

Creating reusable compute templates eliminates the guesswork when launching EC2 instances across environments. Your Terraform modules should define standard instance types, AMI selections, and security configurations that align with your organization’s requirements. Build templates for different workload types like web servers, application servers, and database instances.

Each compute module should include user data scripts for initial configuration, security patching schedules, and monitoring agent installation. Make your modules flexible by accepting parameters for instance size, key pairs, and environment-specific configurations while keeping security settings consistent.

Auto Scaling Groups work well within standardized templates, allowing your modules to handle capacity planning automatically. Include load balancer configurations and health check settings as part of your compute modules to ensure consistent application deployment patterns across all environments.
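A compute module along these lines could pair a launch template with an Auto Scaling Group. The sketch below is one way to arrange it under assumed inputs; the variable names, capacity numbers, and bootstrap script are placeholders.

```hcl
variable "environment" {
  type = string
}

variable "ami_id" {
  description = "AMI to launch (hypothetical input)"
  type        = string
}

variable "instance_type" {
  type    = string
  default = "t3.micro"
}

variable "subnet_ids" {
  description = "Subnets the group may launch into"
  type        = list(string)
}

variable "target_group_arns" {
  description = "Load balancer target groups to register with, if any"
  type        = list(string)
  default     = []
}

resource "aws_launch_template" "web" {
  name_prefix   = "${var.environment}-web-"
  image_id      = var.ami_id
  instance_type = var.instance_type

  # Placeholder bootstrap script; launch templates expect base64 user data.
  user_data = base64encode(<<-EOT
    #!/bin/bash
    echo "bootstrapping ${var.environment}" > /var/log/bootstrap.log
  EOT
  )

  metadata_options {
    http_tokens = "required" # security setting kept constant across environments
  }
}

resource "aws_autoscaling_group" "web" {
  name_prefix         = "${var.environment}-web-"
  min_size            = 1
  max_size            = 4
  desired_capacity    = 1
  vpc_zone_identifier = var.subnet_ids
  target_group_arns   = var.target_group_arns

  # Use load balancer health checks only when a target group is attached.
  health_check_type         = length(var.target_group_arns) > 0 ? "ELB" : "EC2"
  health_check_grace_period = 120

  launch_template {
    id      = aws_launch_template.web.id
    version = "$Latest"
  }

  tag {
    key                 = "Environment"
    value               = var.environment
    propagate_at_launch = true
  }
}
```

Instance size and subnets vary per environment through variables, while the metadata and health check settings stay fixed inside the module.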

Establish consistent security group and IAM role patterns

Security consistency across environments becomes manageable when you create standardized security group and IAM role modules. Design security group templates for common patterns like web tier access, database connections, and internal service communication. Your modules should define the minimum required access while allowing customization for specific use cases.

IAM role modules should follow the principle of least privilege, creating roles for specific functions like EC2 instance profiles, Lambda execution roles, and cross-account access patterns. Build these roles with policy attachments that can be customized per environment while maintaining core security requirements.

Document your security patterns within the module code using clear variable descriptions and examples. This helps team members understand the intended use and reduces the chance of security misconfigurations when deploying Infrastructure as Code across multiple environments.
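As an illustration of these patterns, the sketch below defines a web-tier security group that accepts HTTPS only from a load balancer, plus a narrowly scoped EC2 instance role. Input names are hypothetical, and the attached managed policy is just one example of a least-privilege choice.

```hcl
variable "environment" {
  type = string
}

variable "vpc_id" {
  type = string
}

variable "alb_security_group_id" {
  description = "Security group of the load balancer in front of the web tier"
  type        = string
}

resource "aws_security_group" "web" {
  name_prefix = "${var.environment}-web-"
  description = "Web tier: HTTPS from the load balancer only"
  vpc_id      = var.vpc_id

  ingress {
    description     = "HTTPS from the load balancer"
    from_port       = 443
    to_port         = 443
    protocol        = "tcp"
    security_groups = [var.alb_security_group_id]
  }

  egress {
    description = "Allow all outbound traffic"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Trust policy: only the EC2 service may assume this role.
data "aws_iam_policy_document" "ec2_assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "web" {
  name_prefix        = "${var.environment}-web-"
  assume_role_policy = data.aws_iam_policy_document.ec2_assume.json
}

# AWS managed policy that allows Systems Manager access instead of SSH keys.
resource "aws_iam_role_policy_attachment" "ssm" {
  role       = aws_iam_role.web.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
}

resource "aws_iam_instance_profile" "web" {
  name_prefix = "${var.environment}-web-"
  role        = aws_iam_role.web.name
}
```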

Package database and storage configurations

Database and storage modules require careful planning to balance standardization with performance requirements. Create modules for RDS instances that include backup schedules, maintenance windows, and security group configurations. Your database modules should handle parameter groups, option groups, and subnet group creation automatically.

Storage modules should cover S3 bucket configurations with appropriate access policies, versioning settings, and lifecycle rules. Include EBS volume templates with encryption enabled by default and snapshot scheduling configured based on environment requirements.

Consider creating separate modules for different database engines and storage types while maintaining consistent backup and security practices. Your modules can accept variables for storage size, performance requirements, and retention policies while ensuring all databases follow your organization’s compliance requirements.
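A database module might wrap an RDS instance roughly as follows. This is a sketch under assumptions: PostgreSQL is picked arbitrarily as the engine, and the identifiers, windows, and input names are illustrative.

```hcl
variable "environment" {
  type = string
}

variable "database_config" {
  type = object({
    instance_class    = string
    allocated_storage = number
    multi_az          = bool
  })
}

variable "db_subnet_ids" {
  type = list(string)
}

variable "db_security_group_ids" {
  type = list(string)
}

variable "backup_retention_days" {
  type    = number
  default = 7
}

resource "aws_db_subnet_group" "this" {
  name       = "${var.environment}-db"
  subnet_ids = var.db_subnet_ids
}

resource "aws_db_instance" "this" {
  identifier        = "${var.environment}-app-db"
  engine            = "postgres" # assumed engine for the example
  instance_class    = var.database_config.instance_class
  allocated_storage = var.database_config.allocated_storage
  multi_az          = var.database_config.multi_az

  db_subnet_group_name   = aws_db_subnet_group.this.name
  vpc_security_group_ids = var.db_security_group_ids

  # Let RDS generate and store the master password in Secrets Manager.
  username                    = "app_admin"
  manage_master_user_password = true

  # Settings held constant across environments.
  storage_encrypted       = true
  backup_retention_period = var.backup_retention_days
  backup_window           = "03:00-04:00"
  maintenance_window      = "sun:05:00-sun:06:00"

  # Protect production; allow quick teardown elsewhere.
  deletion_protection       = var.environment == "production"
  skip_final_snapshot       = var.environment != "production"
  final_snapshot_identifier = var.environment == "production" ? "${var.environment}-app-db-final" : null
}
```

Sizing comes from variables, while encryption, backups, and maintenance windows are fixed in the module so every database inherits them.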

Managing Multiple AWS Environments Effectively

Implement workspace strategies for development, staging, and production

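Terraform workspaces provide a lightweight mechanism for managing multiple AWS environments within a single configuration. Each workspace maintains its own state file, allowing you to deploy identical infrastructure across development, staging, and production while keeping their state separate. Note that workspaces share one backend and one set of credentials, so environments that need hard isolation are usually split across separate AWS accounts or backend configurations as well.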

Creating dedicated workspaces starts with understanding your environment hierarchy. Most organizations follow a three-tier approach: development for feature testing, staging for pre-production validation, and production for live workloads. Initialize workspaces using terraform workspace new dev, terraform workspace new staging, and terraform workspace new prod commands.

The real power emerges when you reference the current workspace within your Terraform configurations. Use terraform.workspace to conditionally set resource parameters:

    resource "aws_instance" "web" {
      # An AMI is required; var.ami_id is a placeholder input for this example.
      ami           = var.ami_id
      instance_type = terraform.workspace == "prod" ? "t3.large" : "t3.micro"

      tags = {
        Environment = terraform.workspace
        Name        = "${terraform.workspace}-web-server"
      }
    }

This approach ensures your production environment receives appropriately sized resources while development and staging environments use cost-effective alternatives. Workspace-aware configurations also enable automatic scaling adjustments, where production environments might deploy multiple availability zones while development environments use single-zone deployments.
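Once more than one or two settings depend on the workspace, a lookup table tends to read better than chained conditionals. The sketch below is one way to arrange that; the sizes and zone counts are illustrative values.

```hcl
locals {
  # Per-workspace settings gathered in one place; values are illustrative.
  workspace_settings = {
    dev     = { instance_type = "t3.micro", az_count = 1 }
    staging = { instance_type = "t3.small", az_count = 2 }
    prod    = { instance_type = "t3.large", az_count = 3 }
  }

  # Fall back to dev sizing for any unlisted workspace, including "default".
  settings = lookup(local.workspace_settings, terraform.workspace, local.workspace_settings["dev"])
}

output "selected_instance_type" {
  value = local.settings.instance_type
}

output "selected_az_count" {
  value = local.settings.az_count
}
```

Resources can then reference local.settings.instance_type or local.settings.az_count, and adding an environment means adding one row to the map.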

Configure environment-specific variable files and backends

Environment-specific variable files eliminate hardcoded values and make your Terraform AWS configurations truly portable. Create separate .tfvars files for each environment: dev.tfvars, staging.tfvars, and prod.tfvars. These files contain environment-specific values like instance counts, database sizes, and region preferences.

Structure your variable files logically:

    # prod.tfvars
    environment       = "production"
    instance_count    = 3
    db_instance_class = "db.t3.large"
    multi_az          = true
    backup_retention  = 30

    # dev.tfvars
    environment       = "development"
    instance_count    = 1
    db_instance_class = "db.t3.micro"
    multi_az          = false
    backup_retention  = 7
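Each value in these files needs a matching variable declaration in the configuration. A sketch of what that variables.tf could contain is shown here; the validation bounds are assumptions about sensible limits rather than requirements from the original setup.

```hcl
variable "environment" {
  description = "Environment name, for example development or production"
  type        = string
}

variable "instance_count" {
  description = "Number of application instances to run"
  type        = number

  validation {
    condition     = var.instance_count >= 1
    error_message = "instance_count must be at least 1."
  }
}

variable "db_instance_class" {
  description = "RDS instance class"
  type        = string
}

variable "multi_az" {
  description = "Whether the database runs in multiple availability zones"
  type        = bool
  default     = false
}

variable "backup_retention" {
  description = "Days to retain automated database backups"
  type        = number

  validation {
    # RDS accepts retention periods from 0 to 35 days.
    condition     = var.backup_retention >= 0 && var.backup_retention <= 35
    error_message = "backup_retention must be between 0 and 35 days."
  }
}
```

Select the file at run time with the -var-file flag, for example terraform plan -var-file=prod.tfvars.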

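Backend configuration requires special attention for AWS infrastructure automation. Backend blocks cannot reference variables or terraform.workspace, so the state path cannot be interpolated. Instead, the S3 backend automatically stores each non-default workspace’s state under its own prefix, which workspace_key_prefix controls. For stronger isolation, use a separate S3 bucket per environment and supply it at initialization with terraform init -backend-config.

    terraform {
      backend "s3" {
        bucket = "company-terraform-state"
        key    = "terraform.tfstate"
        region = "us-west-2"

        # Non-default workspaces store state at
        # environments/<workspace>/terraform.tfstate
        workspace_key_prefix = "environments"
        dynamodb_table       = "terraform-state-locks"
      }
    }

The DynamoDB lock table prevents concurrent modifications to any one state, while each environment keeps its own state file. This setup supports your Terraform best practices by ensuring each environment maintains independent state management.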

Establish naming conventions and tagging standards

Consistent naming conventions and comprehensive tagging standards form the backbone of effective AWS environment standardization. Develop naming patterns that immediately identify the environment, application, and resource type. A typical pattern follows: {environment}-{application}-{resource-type}-{identifier}.

Implement naming conventions through Terraform locals:

    locals {
      name_prefix = "${var.environment}-${var.application}"

      common_tags = {
        Environment = var.environment
        Application = var.application
        ManagedBy   = "terraform"
        Owner       = var.team_name
        CostCenter  = var.cost_center
        Project     = var.project_name
      }
    }

Apply these standards consistently across all resources. EC2 instances become prod-webapp-ec2-01, RDS databases transform into staging-api-rds-primary, and S3 buckets follow dev-assets-s3-storage patterns. This systematic approach simplifies resource identification during troubleshooting and cost analysis.
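One way to apply the locals above everywhere is the AWS provider’s default_tags block, which adds the common tags to every taggable resource the provider creates. The sketch assumes the name_prefix and common_tags locals and an aws_region variable already exist; the bucket is only an example resource.

```hcl
provider "aws" {
  region = var.aws_region

  # Every taggable resource created through this provider receives these tags.
  default_tags {
    tags = local.common_tags
  }
}

resource "aws_s3_bucket" "assets" {
  # Follows the {environment}-{application}-{resource-type}-{identifier} pattern.
  bucket = "${local.name_prefix}-s3-assets"

  # Resource-level tags are merged with the provider defaults.
  tags = {
    Name = "${local.name_prefix}-s3-assets"
  }
}
```

Setting the tags once at the provider level means individual resources cannot forget them.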

Tagging standards should capture both technical and business metadata. Technical tags include Environment, Application, and ManagedBy, while business tags cover Owner, CostCenter, and Project. Mandatory tags enforced through Terraform modules ensure no resource escapes without proper classification.

Create tag validation rules within your Terraform modules to enforce compliance:

    variable "required_tags" {
      description = "Required tags for all resources"
      type        = map(string)
      # No default: an empty map would always fail the validation below.

      validation {
        condition     = contains(keys(var.required_tags), "Environment")
        error_message = "Environment tag is required."
      }
    }

These Infrastructure as Code practices create self-documenting infrastructure where every resource clearly identifies its purpose, owner, and environment context.
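The same idea extends to several mandatory keys and to allowed values. The sketch below is an alternative version of the required_tags variable; the list of required keys and the permitted environment names are assumptions chosen to match the tags used in this guide.

```hcl
variable "required_tags" {
  description = "Required tags for all resources"
  type        = map(string)

  # Every mandatory key must be present.
  validation {
    condition = alltrue([
      for key in ["Environment", "Owner", "CostCenter"] :
      contains(keys(var.required_tags), key)
    ])
    error_message = "Tags must include Environment, Owner, and CostCenter."
  }

  # The Environment value must come from a fixed list.
  validation {
    condition = contains(
      ["development", "staging", "production"],
      lookup(var.required_tags, "Environment", "")
    )
    error_message = "Environment must be development, staging, or production."
  }
}
```

Because validation runs before any plan is produced, a missing tag stops the run before anything reaches AWS.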

Implementing Infrastructure as Code Best Practices

Structure Terraform projects with proper file organization

Creating a clean, logical project structure sets the foundation for maintainable Infrastructure as Code. Start with a consistent directory layout that separates concerns and makes navigation intuitive for your team. Place environment-specific configurations in dedicated folders like environments/dev, environments/staging, and environments/prod, while keeping shared modules in a modules/ directory.

Your root directory should contain essential files like main.tf for primary resource definitions, variables.tf for input parameters, outputs.tf for return values, and terraform.tfvars for variable assignments. Break complex configurations into logical files – separate networking resources into network.tf, security components into security.tf, and compute resources into compute.tf.

Establish naming conventions early and stick to them religiously. Use descriptive names that clearly indicate purpose and scope. For AWS resources, include the environment and resource type in names like prod-web-server-sg for security groups or dev-app-vpc for VPCs.

Version control depends on a proper .gitignore. Never commit .tfstate files, .terraform/ directories, or files containing sensitive data. Do commit the .terraform.lock.hcl dependency lock file so everyone resolves the same provider versions. Use .tfvars.example files to show required variables without exposing actual values.
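With that layout, each environment folder holds a thin root configuration that wires the shared modules together. The sketch below imagines environments/prod/main.tf; the module input and output names are hypothetical and depend on how the modules are actually written.

```hcl
# environments/prod/main.tf (illustrative)

module "networking" {
  source = "../../modules/networking"

  environment        = "prod"
  vpc_cidr           = "10.0.0.0/16"
  availability_zones = ["us-west-2a", "us-west-2b", "us-west-2c"]
}

module "database" {
  source = "../../modules/database"

  environment = "prod"

  # Outputs from one module feed inputs of the next, which also tells
  # Terraform to create the network before the database.
  subnet_ids = module.networking.private_subnet_ids
}
```

The dev and staging folders contain the same module calls with different values, so differences between environments stay visible in a handful of lines.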

Validate configurations using automated testing frameworks

Terraform configurations need rigorous testing to catch errors before they impact your AWS infrastructure. Implement multiple validation layers, starting with terraform validate for syntax and internal consistency and terraform fmt -check to enforce consistent formatting.

Static analysis tools like tflint catch common mistakes and enforce best practices specific to AWS resources. Configure tflint rules to check for deprecated arguments, invalid instance types, and security misconfigurations. Integrate these checks into your development workflow through pre-commit hooks that run automatically before code commits.

For deeper validation, use testing frameworks like Terratest or kitchen-terraform. These tools provision actual AWS resources in isolated test environments, verify expected outcomes, and clean up afterwards. Write tests that validate resource creation, proper networking configurations, and security group rules.
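Alongside those external frameworks, Terraform 1.6 and later include a native terraform test command whose test files are written in HCL. This sketch uses that built-in framework rather than Terratest or kitchen-terraform. It assumes a module that declares a resource named aws_vpc.this and counted aws_subnet.private resources; those names, the file name, and the input variables are hypothetical.

```hcl
# tests/vpc.tftest.hcl (hypothetical file name)

# Input values used by every run block in this file.
variables {
  environment        = "test"
  vpc_cidr           = "10.99.0.0/16"
  availability_zones = ["us-west-2a", "us-west-2b"]
}

run "vpc_matches_inputs" {
  # Plan only: nothing is created, so the test is fast and free of cost,
  # but it can only assert on values known at plan time.
  command = plan

  assert {
    condition     = aws_vpc.this.cidr_block == "10.99.0.0/16"
    error_message = "VPC CIDR did not match the input variable."
  }

  assert {
    condition     = length(aws_subnet.private) == 2
    error_message = "Expected one private subnet per availability zone."
  }
}
```

Running terraform test from the module directory executes every run block. Switching command to apply creates real resources and destroys them after the test finishes.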

Policy validation with tools like Open Policy Agent (OPA) checks your Terraform plans against organizational standards before anything is deployed, while AWS Config rules evaluate the resources that already exist in your accounts. Define policies that prevent oversized EC2 instances, enforce encryption requirements, or mandate specific tagging strategies.

Consider implementing continuous validation in your CI/CD pipeline. Run terraform plan on every pull request to show proposed changes, making code reviews more effective. Use tools like Atlantis or Terraform Cloud to automate this process while maintaining security through proper IAM controls.

Implement state file management and remote backend storage

Terraform state files contain sensitive information about your AWS infrastructure and require careful management. Never store state files locally in production environments – they become single points of failure and create security risks.

Configure remote backends using AWS S3 with DynamoDB for state locking. This setup prevents concurrent modifications that could corrupt your infrastructure state. Create dedicated S3 buckets with versioning enabled and strict access controls. Use server-side encryption with AWS KMS keys to protect state data at rest.

    terraform {
      backend "s3" {
        bucket         = "your-terraform-state-bucket"
        key            = "environments/prod/terraform.tfstate"
        region         = "us-west-2"
        dynamodb_table = "terraform-state-locks"
        encrypt        = true
      }
    }

Implement state file isolation by environment. Each environment should have separate state files to prevent accidental cross-environment impacts. Use workspace functionality or different backend configurations to achieve this separation.

Regular state file backups protect against corruption or accidental deletion. S3 versioning provides automatic backups, but consider additional backup strategies for critical environments. Document state recovery procedures and test them periodically.
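The bucket and lock table themselves have to exist before the backend can use them. They are typically created once from a small bootstrap configuration like the sketch below, which reuses the names from the backend example above and otherwise makes illustrative choices.

```hcl
resource "aws_s3_bucket" "state" {
  bucket = "your-terraform-state-bucket"

  # Guard against accidental deletion of the bucket that holds all state.
  lifecycle {
    prevent_destroy = true
  }
}

# Versioning keeps earlier copies of each state file for recovery.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state at rest with KMS.
resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

# State must never be publicly readable.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket = aws_s3_bucket.state.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend expects a string hash key named LockID.
resource "aws_dynamodb_table" "locks" {
  name         = "terraform-state-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

This bootstrap configuration usually keeps its own state locally or in a separate, manually created bucket, since it cannot store state in a bucket that does not exist yet.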

Monitor state file access and modifications through CloudTrail logs. Set up alerts for unusual access patterns or unauthorized state modifications. This monitoring helps maintain security and provides audit trails for compliance requirements.

Create documentation and runbooks for team adoption

Documentation transforms Infrastructure as Code from individual knowledge to team capability. Create comprehensive README files for each Terraform project explaining purpose, prerequisites, and deployment procedures. Include examples of common operations and troubleshooting steps.

Develop standardized runbooks covering routine operations like environment provisioning, scaling procedures, and disaster recovery steps. Document variable definitions, their expected values, and the impact of changes. Use clear, step-by-step instructions that team members can follow without prior Terraform experience.

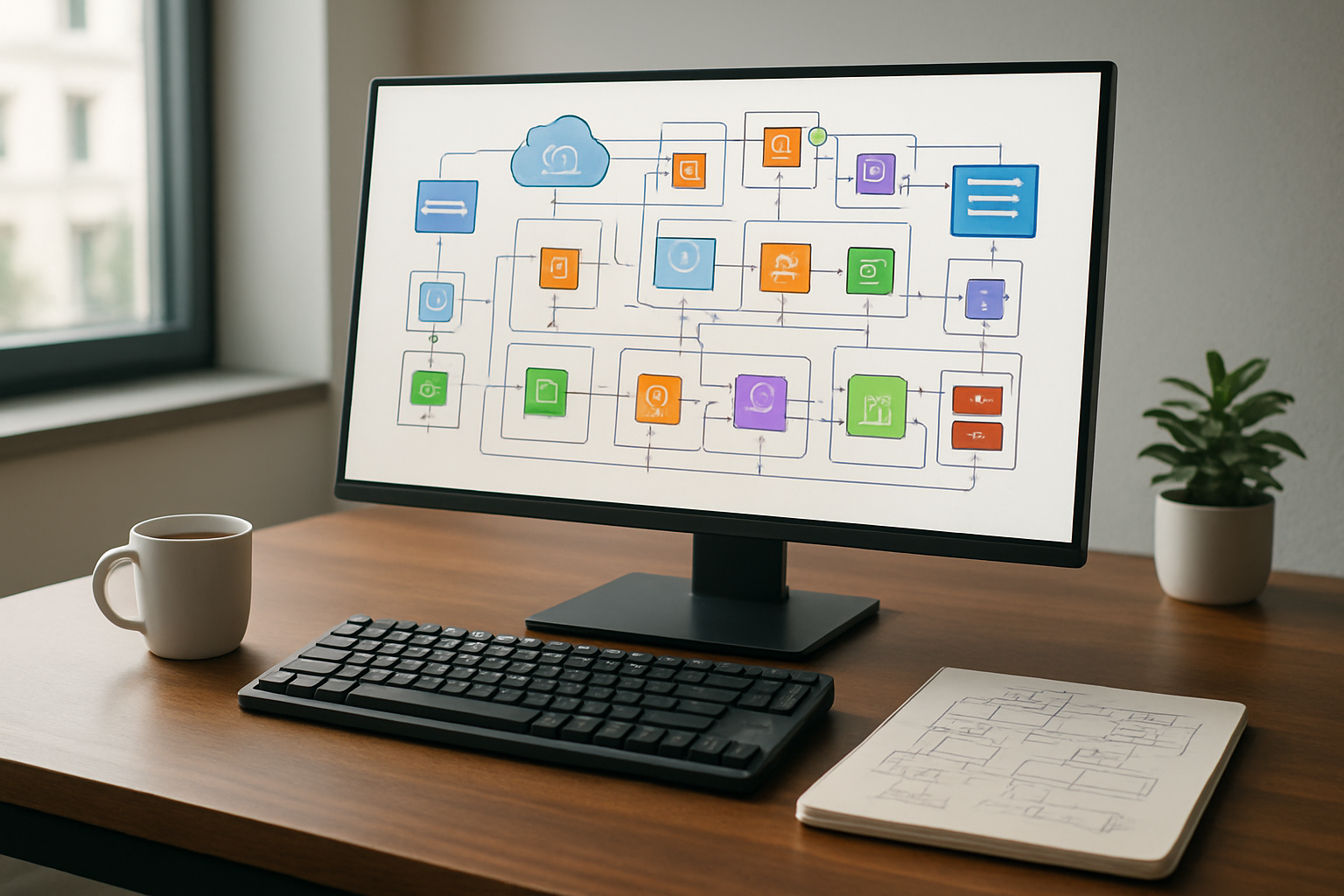

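Architecture diagrams help team members understand infrastructure relationships and dependencies. Tools like terraform-docs generate reference documentation for module inputs and outputs, and terraform graph emits a resource dependency graph in DOT format that can be rendered as a diagram. Maintain higher-level diagrams manually for complex setups. Include network diagrams, security boundaries, and data flows.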

Create onboarding guides for new team members covering your Terraform AWS management practices, coding standards, and approval processes. Include information about required tools, access procedures, and common pitfalls to avoid.

Maintain change logs documenting infrastructure modifications, their rationale, and any operational impacts. This historical record helps with troubleshooting and provides context for future changes. Use semantic versioning for infrastructure releases to track compatibility and breaking changes.

Establish knowledge sharing sessions where team members present new patterns, lessons learned, and improvements to your Terraform best practices. Regular reviews of documentation ensure it stays current with evolving requirements and team needs.

Automating Deployment Pipelines with Terraform

Integrate Terraform with CI/CD platforms for automated deployments

Terraform delivers the most value when it runs inside your CI/CD pipeline rather than from individual laptops. Popular platforms like GitHub Actions, GitLab CI/CD, and Jenkins provide robust frameworks for automating your Infrastructure as Code deployments.

Setting up GitHub Actions for Terraform workflows starts with creating workflow files that trigger on pull requests and merges. Your pipeline should include steps for initializing Terraform, running validation checks, and executing plans. Store your Terraform state files in remote backends like AWS S3 with DynamoDB locking to prevent concurrent modifications.

For GitLab CI/CD users, leverage built-in Terraform integration features that automatically handle state management and provide visual diff outputs directly in merge requests. Configure your .gitlab-ci.yml file with appropriate stages for different environments, ensuring production deployments require manual approval.

Jenkins users benefit from the Terraform plugin ecosystem, which provides dedicated build steps and integration with HashiCorp Vault for secrets management. Create parameterized builds that accept environment variables, making your pipeline flexible across different AWS regions and account configurations.

Container-based CI/CD approaches using Docker images with pre-installed Terraform tools ensure consistent execution environments. This eliminates version conflicts and provides reproducible builds across different team members’ local environments.

Implement approval workflows for production changes

Production AWS environments require careful change management to prevent costly mistakes. Terraform deployment pipeline automation should include multi-stage approval processes that protect critical infrastructure while maintaining development velocity.

Branch protection rules form the foundation of your approval workflow. Require pull requests for all changes to main branches, and enforce code reviews from designated infrastructure team members. Configure branch policies that prevent direct pushes and require status checks to pass before merging.

Implement environment-specific approval gates where development and staging changes can proceed automatically, but production deployments pause for human review. Use conditional logic in your pipeline configuration to trigger different approval flows based on target environments.

Slack or Microsoft Teams integration enhances your approval process by notifying relevant stakeholders when production changes await approval. Include Terraform plan summaries in these notifications, showing exactly what resources will be created, modified, or destroyed.

Role-based access controls ensure only authorized personnel can approve production changes. Define clear approval hierarchies where junior team members can approve minor changes, but significant modifications require senior engineer or architect approval.

Emergency deployment procedures should bypass normal approval flows when critical fixes are needed. Document these processes clearly and include automated notifications to ensure proper audit trails even during urgent situations.

Configure automated plan and apply processes

Automated plan and apply steps work best when they run without manual intervention yet keep safety guardrails in place. Your AWS deployment pipeline should balance speed with reliability through well-configured automation.

Automated planning triggers on every pull request, providing immediate feedback about proposed changes. Configure your CI/CD system to run terraform plan against the target environment and post results as pull request comments. This visibility helps reviewers understand infrastructure changes before code merges.

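Plan artifacts should be stored securely between pipeline stages so that the changes applied are exactly the ones that were reviewed. Save the plan with terraform plan -out=<file>, pass that file to terraform apply, and use your CI/CD platform’s artifact storage or AWS S3 to preserve it with appropriate retention policies. Treat plan files as sensitive, since they can contain secret values.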

Conditional apply logic prevents accidental deployments by checking branch names, commit messages, or manual triggers. Production applies should only execute after successful deployment to staging environments and completion of automated testing suites.

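Error handling becomes critical in automated processes. Configure your pipeline to capture detailed logs and send failure notifications. Terraform does not roll back automatically when an apply fails partway; resources created before the failure remain in state, so recovery means fixing the cause and re-applying, or applying a reverted commit. Implement retry logic for transient failures while avoiding infinite loops.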

Terraform AWS best practices include using workspace-specific variable files and remote state configurations. Your automation should dynamically select appropriate variable files based on target environments and validate all required variables before execution.

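State locking mechanisms prevent concurrent modifications that could corrupt your infrastructure state. A pipeline run that is killed mid-operation can leave a stale lock behind. Release it with terraform force-unlock only after confirming that no other run is still active, because removing a live lock reintroduces the very corruption risk that locking exists to prevent.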

Terraform transforms how teams manage AWS infrastructure by turning complex cloud environments into manageable, repeatable code. By creating reusable modules and following infrastructure as code best practices, organizations can eliminate configuration drift, reduce manual errors, and ensure consistent deployments across development, staging, and production environments. The ability to version control infrastructure changes and automate deployment pipelines makes scaling AWS resources both predictable and reliable.

Start small by identifying a single AWS service or environment that could benefit from Terraform automation. Build your first module, test it thoroughly, and gradually expand your infrastructure as code approach across your organization. The investment in learning Terraform and establishing these standardized practices will pay dividends as your AWS footprint grows and your team gains confidence in managing infrastructure through code rather than clicking through consoles.