Building a solid test automation architecture can make or break your development workflow. This guide is for software engineers, QA professionals, and development teams who want to create reliable testing strategies that catch bugs early and ship code with confidence.

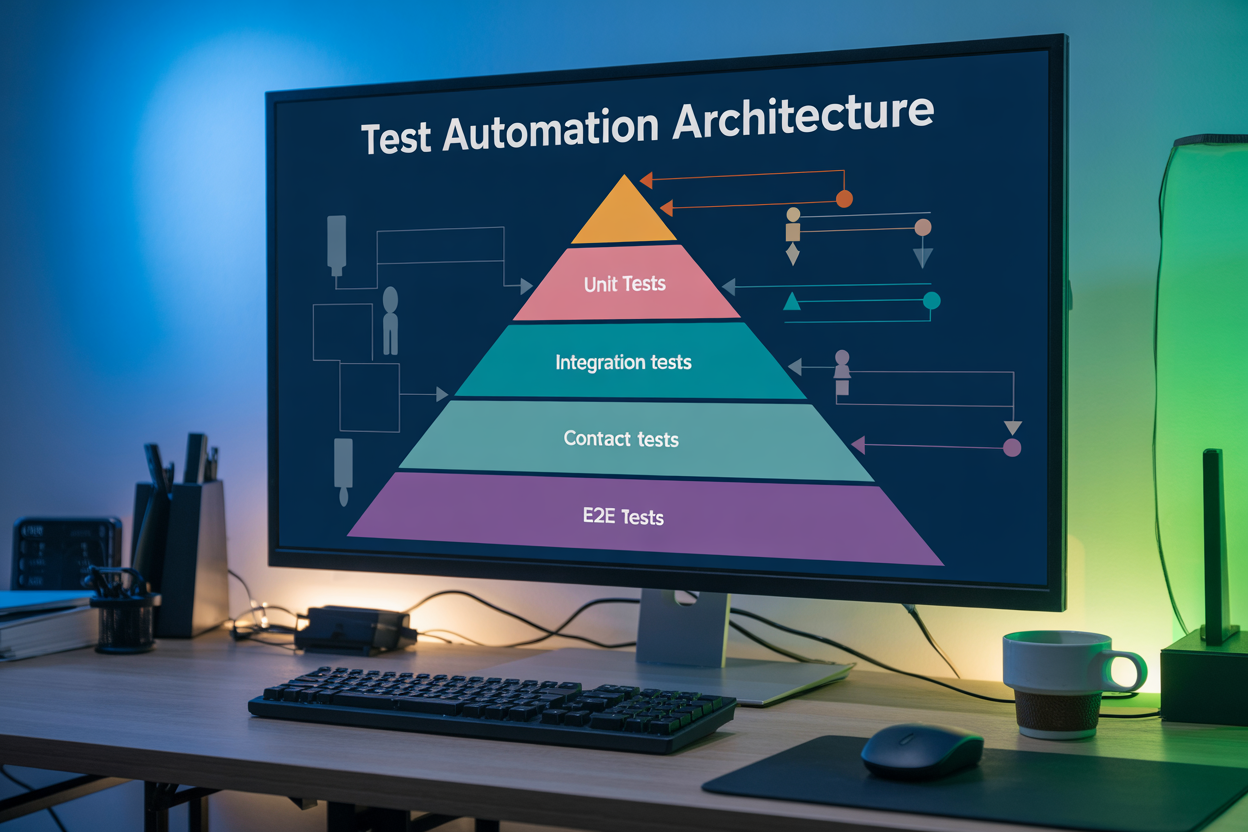

A well-designed test automation framework combines four essential testing layers that work together like a safety net. Unit testing forms your foundation by validating individual components quickly. Integration testing ensures your services communicate properly. End-to-end testing verifies complete user workflows. Contract testing keeps microservices in sync without breaking changes.

We’ll walk through building each layer of the test pyramid, starting with unit testing best practices that speed up your development cycles. You’ll learn how to design an integration testing strategy that catches system-level issues before they reach production. Finally, we’ll cover how contract testing for microservices prevents the communication breakdowns that plague distributed systems.

Build a Robust Test Automation Foundation

Design Scalable Test Automation Patterns

Building a successful test automation architecture starts with choosing the right patterns that grow with your application. The Page Object Model stands as one of the most effective patterns, encapsulating page elements and behaviors in dedicated classes. This separation keeps test logic clean and makes maintenance much easier when UI changes occur.

Consider implementing the Builder pattern for complex test data creation. Instead of constructing objects with numerous parameters, builders provide a fluent interface that’s both readable and flexible. When testing an e-commerce checkout flow, you can chain methods like OrderBuilder.withProduct("laptop").withQuantity(2).withShippingAddress(address).build().
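As a rough illustration of the builder idea, here is a minimal Python sketch. The `Order` fields, the default values, and the snake_case method names are assumptions made for this example; they mirror the chained call above but are not taken from any real codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    product: str
    quantity: int
    shipping_address: str


class OrderBuilder:
    """Fluent builder with safe defaults, so a test states only what matters."""

    def __init__(self) -> None:
        # Hypothetical defaults chosen for illustration.
        self._product = "notebook"
        self._quantity = 1
        self._shipping_address = "1 Test Street"

    def with_product(self, product: str) -> "OrderBuilder":
        self._product = product
        return self

    def with_quantity(self, quantity: int) -> "OrderBuilder":
        self._quantity = quantity
        return self

    def with_shipping_address(self, address: str) -> "OrderBuilder":
        self._shipping_address = address
        return self

    def build(self) -> Order:
        return Order(self._product, self._quantity, self._shipping_address)


# Only the product and quantity matter to this test; the address keeps its default.
order = OrderBuilder().with_product("laptop").with_quantity(2).build()
```

Returning `self` from each `with_*` method is what makes the chain possible, and the defaults keep unrelated fields out of the test body.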

The Factory pattern works exceptionally well for creating different test environments and configurations. A TestDriverFactory can instantiate the appropriate browser driver based on configuration settings, while keeping your tests environment-agnostic.
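A factory along these lines might look like the sketch below. The two driver classes are hypothetical stand-ins rather than real browser drivers; in practice the factory would return whatever driver objects your UI testing library provides.

```python
class FakeChromeDriver:
    """Hypothetical stand-in for a real browser driver."""
    name = "chrome"


class FakeFirefoxDriver:
    """Hypothetical stand-in for a real browser driver."""
    name = "firefox"


class TestDriverFactory:
    __test__ = False  # the name starts with "Test", so tell pytest not to collect it

    _drivers = {"chrome": FakeChromeDriver, "firefox": FakeFirefoxDriver}

    @classmethod
    def create(cls, browser: str):
        """Instantiate the driver registered for the configured browser name."""
        try:
            return cls._drivers[browser.lower()]()
        except KeyError:
            raise ValueError(f"Unsupported browser: {browser!r}") from None


driver = TestDriverFactory.create("Chrome")
```

Tests ask the factory for a driver and never name a concrete class, so switching browsers becomes a configuration change.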

| Pattern | Use Case | Benefits |
|---|---|---|
| Page Object Model | UI test organization | Reduced duplication, better maintainability |
| Builder Pattern | Complex test data | Improved readability, flexible construction |
| Factory Pattern | Environment setup | Configuration flexibility, easier scaling |

Establish Clear Testing Boundaries and Responsibilities

Defining what each test layer should and shouldn’t do prevents overlap and confusion. Unit tests focus exclusively on individual components in isolation, using mocks and stubs for dependencies. They should run in milliseconds and never touch external systems like databases or APIs.

Integration tests verify that multiple components work together correctly. These tests can interact with real databases and external services, but should be limited to specific integration points. A payment processing integration test might verify that your service correctly communicates with a payment gateway, but shouldn’t test the gateway’s internal logic.

End-to-end tests validate complete user workflows from the browser perspective. They’re expensive to maintain and slow to execute, so reserve them for critical user journeys that represent your application’s core value proposition.

Contract testing sits between services, ensuring APIs maintain their agreements without requiring full system integration. Provider services define contracts that consumer services can verify against, catching breaking changes early in the development cycle.

Implement Effective Test Data Management Strategies

Test data management often becomes the biggest headache in test automation architecture. Smart strategies here save countless hours of debugging and maintenance.

Create separate datasets for different test scenarios rather than sharing data across tests. Shared test data leads to brittle tests that fail when other tests modify the data unexpectedly. Each test should either create its own data or use read-only reference data that never changes.

Database seeding scripts should be idempotent and version-controlled. Your test environment should start with a known, consistent state every time. Use database migrations to manage test schema changes, and consider using Docker containers to ensure consistent database states across different environments.
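To make "idempotent" concrete, the sketch below seeds an in-memory SQLite database twice and ends up with the same rows. The `users` table and its contents are invented for the example; a real project would apply its own migrations first.

```python
import sqlite3

# Hypothetical reference data for the example.
SEED_USERS = [(1, "alice@example.test"), (2, "bob@example.test")]


def seed(conn: sqlite3.Connection) -> None:
    """Create the schema and reference rows; safe to run repeatedly."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"
    )
    # INSERT OR IGNORE skips rows whose primary key already exists.
    conn.executemany(
        "INSERT OR IGNORE INTO users (id, email) VALUES (?, ?)", SEED_USERS
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
seed(conn)
seed(conn)  # the second run leaves the data unchanged
user_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```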

For API testing, implement data factories that generate realistic test data programmatically. JSON Schema Faker or similar tools can create varied, realistic datasets based on your API schemas. This approach scales better than maintaining static test files and catches edge cases you might miss with hand-crafted data.
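A data factory can be as small as the following sketch. It uses only the Python standard library rather than JSON Schema Faker, and the `name`, `email`, and `age` fields are assumptions standing in for whatever your API schema defines.

```python
import random
import string


def make_user(rng: random.Random, **overrides) -> dict:
    """Build a user payload with varied but reproducible values."""
    name = "".join(rng.choices(string.ascii_lowercase, k=rng.randint(3, 12)))
    user = {
        "name": name,
        "email": f"{name}@example.test",
        "age": rng.randint(18, 90),
    }
    user.update(overrides)  # a test pins only the fields it cares about
    return user


rng = random.Random(42)  # a fixed seed keeps failures reproducible
users = [make_user(rng) for _ in range(3)]
minor = make_user(rng, age=17)  # edge case forced through an override
```

Passing in the random generator rather than using the global one means a failing run can be replayed with the same seed.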

Consider implementing test data cleanup strategies. Some teams prefer cleaning data after each test, while others reset entire environments between test runs. Choose the approach that balances test isolation with execution speed for your specific context.

Create Maintainable Test Code Structures

Well-structured test code follows the same principles as production code. Apply DRY (Don’t Repeat Yourself) principles by extracting common setup and teardown logic into reusable utilities. Create helper methods for repetitive actions like user authentication or data creation.

Organize tests in a logical folder structure that mirrors your application architecture. Group related tests together and use descriptive naming conventions. A test named should_create_order_when_valid_payment_provided tells you exactly what it does, while test_order_1 tells you nothing.

Keep test methods focused on single behaviors. Long tests that verify multiple scenarios become difficult to debug when they fail. Break complex workflows into smaller, focused tests that each verify one specific aspect of the behavior.

Implement consistent error handling and logging in your test framework. When tests fail in CI/CD pipelines, good error messages and logs make the difference between quick fixes and hours of investigation. Capture screenshots for UI tests, log API responses for integration tests, and include relevant context in failure messages.

Version control your test automation framework alongside your application code. Test code deserves the same code review standards and refactoring attention as production code. Regular refactoring keeps technical debt from accumulating and makes the test suite easier to maintain as your application evolves.

Master Unit Testing for Faster Development Cycles

Write focused tests that validate individual components

Unit testing forms the foundation of any solid test automation architecture by targeting the smallest testable parts of your application. Each unit test should examine one specific behavior or function, making failures easy to identify and fix. When writing unit tests, focus on testing public methods and their expected outcomes rather than internal implementation details.

The key to effective unit testing lies in the Arrange-Act-Assert pattern. First, set up your test data and conditions. Next, execute the specific functionality you’re testing. Finally, verify the results match your expectations. This approach creates readable tests that clearly communicate their purpose to other developers.
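The three phases are easiest to see in a small test. The `apply_discount` function below is a made-up unit under test; the tests are written as plain functions with `assert`, the style pytest collects.

```python
def apply_discount(total: float, percent: float) -> float:
    """Hypothetical unit under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


def test_apply_discount_reduces_total():
    # Arrange: set up inputs
    total, percent = 200.0, 15
    # Act: run the one behavior being tested
    result = apply_discount(total, percent)
    # Assert: check the outcome
    assert result == 170.0


def test_apply_discount_rejects_negative_percent():
    # Arrange
    total, percent = 200.0, -5
    # Act
    try:
        apply_discount(total, percent)
        raised = False
    except ValueError:
        raised = True
    # Assert
    assert raised
```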

Consider testing edge cases and error conditions alongside happy path scenarios. A well-designed unit test suite catches bugs before they reach integration testing phases, saving valuable development time. Your tests should run quickly—ideally under a few milliseconds each—enabling rapid feedback during development.

Achieve optimal code coverage without over-testing

Code coverage metrics provide valuable insights into your testing effectiveness, but chasing 100% coverage often leads to diminishing returns. Aim for 80-90% coverage on critical business logic while accepting lower coverage on simple getters, setters, and configuration code.

Focus your testing efforts on:

- Complex business logic that drives core functionality

- Conditional branches where bugs commonly hide

- Error handling and exception scenarios

- Public APIs that other components depend on

Avoid testing trivial code like simple property assignments or framework-generated methods. These tests add maintenance overhead without meaningful protection against defects. Instead, prioritize testing code paths that directly impact user experience and business outcomes.

Quality trumps quantity in unit testing. Ten well-crafted tests covering critical scenarios provide more value than fifty tests checking every minor detail. Review coverage reports regularly, but use them as guides rather than absolute targets.

Mock dependencies for isolated test execution

Mocking external dependencies ensures your unit tests remain fast, reliable, and focused on the component under test. When your code interacts with databases, web services, file systems, or other components, replace these dependencies with mock objects that simulate expected behaviors.

Popular mocking frameworks simplify this process:

| Framework | Language | Key Features |
|---|---|---|
| Mockito | Java | Annotation-based mocking, argument matching |
| Jest | JavaScript | Built-in mocking, snapshot testing |
| Moq | C# | Fluent API, LINQ-based setup |
| unittest.mock | Python | Standard library, flexible patching |

Mock objects should return predictable responses that match real-world scenarios. Create different mock configurations to test various conditions—successful responses, network timeouts, authentication failures, and data validation errors. This approach helps you verify how your code handles different situations without relying on external systems.
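Here is one way this can look with Python's `unittest.mock`. The `PaymentService` class and its `charge` gateway method are hypothetical; the point is that one mock is configured three different ways to exercise success, decline, and timeout paths.

```python
from unittest.mock import Mock


class PaymentService:
    """Hypothetical component under test; the gateway is its only dependency."""

    def __init__(self, gateway):
        self._gateway = gateway

    def pay(self, amount: int) -> str:
        try:
            response = self._gateway.charge(amount)
        except TimeoutError:
            return "retry_later"
        return "paid" if response["status"] == "ok" else "declined"


def test_successful_charge():
    gateway = Mock()
    gateway.charge.return_value = {"status": "ok"}
    assert PaymentService(gateway).pay(500) == "paid"
    gateway.charge.assert_called_once_with(500)


def test_declined_charge():
    gateway = Mock()
    gateway.charge.return_value = {"status": "insufficient_funds"}
    assert PaymentService(gateway).pay(500) == "declined"


def test_gateway_timeout():
    gateway = Mock()
    gateway.charge.side_effect = TimeoutError("gateway did not respond")
    assert PaymentService(gateway).pay(500) == "retry_later"
```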

Remember that mocking adds complexity to your tests. Mock only what you need to isolate the component under test. Over-mocking can make tests brittle and harder to maintain, defeating the purpose of a reliable unit testing foundation.

Implement Integration Testing for System Reliability

Verify component interactions and data flow

Integration testing sits at the heart of any robust test automation architecture, bridging the gap between isolated unit tests and comprehensive end-to-end scenarios. Unlike unit tests that examine individual components in isolation, integration tests focus on the communication pathways between different modules and services.

When testing component interactions, start by mapping out the critical data flows in your application. Identify the key handoff points where one component passes information to another. These integration points represent your highest-risk areas and deserve the most attention in your testing strategy.

Data transformation becomes a major concern during integration testing. Components might format, validate, or enrich data differently, leading to unexpected behaviors when systems connect. Create test scenarios that specifically target these transformation points, ensuring data maintains its integrity as it moves through your application layers.

Memory management and resource sharing between components can reveal issues that never surface in unit tests. Monitor how components handle shared resources like database connections, file handles, or cache entries when working together.

Test database connections and external service calls

Database integration testing requires a different approach than testing business logic. Your integration testing strategy must account for connection pooling, transaction boundaries, and data consistency across multiple operations.

Set up dedicated test databases that mirror your production schema but contain controlled datasets. This approach lets you test real database interactions without risking production data or dealing with unpredictable external dependencies.

External service calls present unique challenges in integration testing. Network latency, service availability, and API changes can make tests flaky and unreliable. Use service virtualization or mock services to simulate external dependencies while maintaining predictable test conditions.

| Testing Approach | Pros | Cons | Best Use Case |
|---|---|---|---|
| Real Services | Most accurate | Slow, unreliable | Critical integrations |
| Mock Services | Fast, predictable | May miss real issues | Development phase |
| Service Virtualization | Realistic, controllable | Setup complexity | Pre-production testing |

Connection timeout scenarios deserve special attention in your test suite. Simulate slow network conditions and service outages to verify your application handles these situations gracefully.

Validate API endpoints and message queues

API endpoint validation goes beyond simple request-response testing. Your integration tests should verify authentication mechanisms, rate limiting, error handling, and data validation across different endpoint combinations.

Test API versioning scenarios where multiple versions might coexist in your system. Ensure backward compatibility works correctly and that version negotiation happens as expected between components.

Message queue testing requires understanding both the producer and consumer sides of your messaging system. Test message ordering, delivery guarantees, and error handling when messages can’t be processed successfully.

Dead letter queues and retry mechanisms need specific test scenarios. Simulate message processing failures and verify that your system handles poison messages appropriately without blocking the entire queue.
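The poison-message scenario can be rehearsed against an in-memory model before involving a real broker. The sketch below is a simplified simulation, not the API of any messaging system; `MAX_ATTEMPTS` and the handler are assumptions chosen for the example.

```python
from collections import deque

MAX_ATTEMPTS = 3  # hypothetical retry limit


def process_queue(messages, handler):
    """Drain the queue; move messages that keep failing to a dead letter list."""
    queue = deque((msg, 0) for msg in messages)
    processed, dead_letters = [], []
    while queue:
        msg, attempts = queue.popleft()
        try:
            handler(msg)
            processed.append(msg)
        except Exception:
            if attempts + 1 >= MAX_ATTEMPTS:
                dead_letters.append(msg)  # give up so the queue keeps moving
            else:
                queue.append((msg, attempts + 1))  # requeue for a later retry
    return processed, dead_letters


def handler(msg):
    if msg == "poison":
        raise ValueError("cannot parse message")


processed, dead_letters = process_queue(["a", "poison", "b"], handler)
```

The assertion worth making in a real test is the same as here: healthy messages behind the poison one still get processed, and the poison message ends up in the dead letter store after the retry limit.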

Asynchronous processing adds complexity to integration testing. Your tests must account for eventual consistency and timing issues that don’t exist in synchronous systems.
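One common way to test eventually consistent behavior is to poll for the expected state with a deadline instead of sleeping for a fixed time. The helper below is a minimal sketch; the background timer stands in for a hypothetical asynchronous worker.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll until condition() is truthy or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return bool(condition())  # one last check at the deadline


results = []
# Simulated async worker that writes its result a little later.
worker = threading.Timer(0.05, lambda: results.append("order-created"))
worker.start()

became_consistent = wait_until(lambda: "order-created" in results)
worker.join()
```

The test returns as soon as the condition holds, so it stays fast in the common case while tolerating slow runs up to the timeout.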

Handle test environment configuration challenges

Test environment configuration often becomes the biggest bottleneck in integration testing implementation. Different environments require different connection strings, service endpoints, and security configurations.

Environment-specific configuration should be externalized and easily swappable. Use environment variables, configuration files, or configuration management tools to avoid hardcoding environment details in your test code.
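A small sketch of externalized configuration using environment variables follows. The variable names (`TEST_BASE_URL`, `TEST_DB_URL`, `TEST_TIMEOUT_SECONDS`) and the defaults are assumptions for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class EnvConfig:
    base_url: str
    db_url: str
    timeout_seconds: float


def load_config(env: Mapping[str, str] = os.environ) -> EnvConfig:
    """Read settings from the environment, falling back to local defaults."""
    return EnvConfig(
        base_url=env.get("TEST_BASE_URL", "http://localhost:8080"),
        db_url=env.get("TEST_DB_URL", "sqlite:///:memory:"),
        timeout_seconds=float(env.get("TEST_TIMEOUT_SECONDS", "5")),
    )


local = load_config({})  # nothing set: local defaults apply
staging = load_config(
    {"TEST_BASE_URL": "https://staging.example.test", "TEST_TIMEOUT_SECONDS": "30"}
)
```

Accepting the mapping as a parameter keeps the loader itself easy to test without touching the real process environment.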

Test data management across environments requires careful planning. Develop strategies for seeding test data, cleaning up after tests, and maintaining data consistency between test runs.

Database migration and schema changes need consideration in your test environment setup. Your tests should run against the correct schema version and handle migration scenarios appropriately.

Containerization tools like Docker can simplify environment configuration by packaging dependencies and ensuring consistent runtime environments across different testing phases. This approach reduces the “works on my machine” problems that plague integration testing efforts.

Deploy End-to-End Testing for Complete User Journeys

Automate Critical User Workflows and Business Processes

Start with the most important user journeys that directly impact your business. Think about what your customers do most often – signing up, making purchases, logging in, or completing key tasks in your application. These workflows should be your priority for an end-to-end testing framework because they represent real value to your users and your business.

Map out each workflow step by step, including all the different paths users might take. Don’t just focus on the happy path where everything goes perfectly. Users will encounter errors, they’ll change their minds halfway through, and they’ll interact with your system in ways you never expected. Your test automation architecture needs to account for these scenarios too.

Create user personas for your automated tests. A new user behaves differently than a power user, and your tests should reflect this. Build workflows that represent different user types, from first-time visitors to returning customers with complex needs. This approach gives you better coverage of real-world usage patterns.

Select Appropriate E2E Testing Tools and Frameworks

Your tool choice depends heavily on your application’s technology stack and team expertise. Selenium WebDriver remains popular for web applications, but newer tools like Playwright and Cypress often offer a better developer experience and more reliable test execution. Mobile applications need specialized tools like Appium or platform-specific solutions.

Consider these factors when choosing your end-to-end testing framework:

- Programming language alignment with your development team’s skills

- Browser and platform support for your target audience

- Maintenance overhead and learning curve for your team

- Integration capabilities with your existing CI/CD pipeline

- Debugging and reporting features for troubleshooting failures

Don’t lock yourself into a single tool. Different parts of your application might benefit from different testing approaches. A hybrid strategy often works best, where you use the right tool for each specific testing need.

Manage Test Execution Time and Flaky Test Issues

Long-running E2E tests can kill your development velocity. Break down large test suites into smaller, focused groups that can run in parallel. Use techniques like test sharding to distribute tests across multiple machines or containers. This parallel execution dramatically reduces overall test run time.
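Sharding needs a rule that every machine can apply independently and that yields the same answer everywhere. One possible approach, sketched below, hashes the test ID; the test names are invented, and many test runners ship their own sharding options that you would normally prefer.

```python
import hashlib


def shard_for(test_id: str, total_shards: int) -> int:
    """Map a test ID to a shard deterministically.

    hashlib is used because Python's built-in hash() for strings is salted
    per process, so it would differ between machines.
    """
    digest = hashlib.sha256(test_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % total_shards


def select_tests(test_ids, shard_index, total_shards):
    """Return the subset of tests this shard is responsible for."""
    return [t for t in test_ids if shard_for(t, total_shards) == shard_index]


all_tests = [f"test_checkout_{i}" for i in range(20)]
shards = [select_tests(all_tests, i, 4) for i in range(4)]
```

Hash-based sharding balances test counts, not durations; if a few tests dominate the run time, a duration-aware split is a better fit.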

Flaky tests are the biggest enemy of a reliable automated testing workflow. They fail intermittently without any code change, causing developers to lose trust in the entire test suite. Combat flakiness by:

- Adding explicit waits instead of fixed delays

- Stabilizing test data before each test run

- Implementing retry mechanisms with proper failure analysis

- Isolating tests to prevent interference between them

- Using stable locators that don’t change with minor UI updates
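For the retry item in the list above, the important part is keeping a record of every failed attempt so that a retry never hides a problem. The decorator below is a hand-written sketch, not a specific plugin's API, and `check_banner_visible` is a hypothetical flaky step.

```python
import functools


def retry(times):
    """Re-run a flaky step, keeping every failure for later analysis."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            wrapper.failures = []
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except AssertionError as exc:
                    wrapper.failures.append((attempt, str(exc)))
            raise AssertionError(f"failed {times} times: {wrapper.failures}")
        wrapper.failures = []
        return wrapper
    return decorator


calls = {"count": 0}


@retry(times=3)
def check_banner_visible():
    calls["count"] += 1
    # Simulated flakiness: the first attempt fails, the second passes.
    assert calls["count"] >= 2, "banner not rendered yet"
    return "visible"


outcome = check_banner_visible()
recorded_failures = check_banner_visible.failures
```

A step that passes only on retry is still worth reporting; the recorded failures are the raw material for that report.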

Track flaky test patterns over time. Tests that fail intermittently often indicate underlying issues in your application or infrastructure that need attention.

Implement Cross-Browser and Cross-Device Compatibility Testing

Your users access your application from dozens of different browsers, operating systems, and devices. Your E2E tests need to reflect this reality. Create a test matrix that covers the most popular combinations your users actually use – don’t try to test every possible configuration.

Use cloud-based testing platforms like BrowserStack, Sauce Labs, or AWS Device Farm to access a wide range of real devices and browsers without maintaining your own device lab. These platforms integrate well with most test automation frameworks and provide detailed logs and screenshots for debugging.

Focus your cross-platform testing on critical user flows rather than trying to run every test on every platform. Different browsers handle JavaScript, CSS, and user interactions slightly differently, so prioritize the workflows that are most sensitive to these variations.

Create Realistic Test Data Scenarios

Test data quality makes or breaks your E2E testing strategy. Real-world data is messy, incomplete, and constantly changing. Your test data should reflect this complexity. Create data sets that include edge cases like special characters, very long text strings, empty fields, and international characters.

Build data generation utilities that create realistic test scenarios on demand. This approach is better than using static test data files that become outdated or corrupted over time. Dynamic data generation also helps prevent tests from becoming dependent on specific data values.

Consider data privacy regulations when working with test data. Never use real customer data in your test environments. Create synthetic data that mimics production patterns without exposing sensitive information. Libraries such as Faker can generate realistic but fake data for testing purposes.

Set up proper data cleanup procedures to reset your test environment between runs. Tests should start with a known, clean state to ensure consistent results. This cleanup process is just as important as the test execution itself for maintaining a reliable test automation architecture.

Leverage Contract Testing for Microservices Architecture

Define and maintain service contracts effectively

Contract testing establishes clear communication boundaries between services by documenting expected interactions through formal API contracts. These contracts serve as blueprints that specify request and response formats, data types, and expected behaviors between microservices.

Effective contract definition starts with choosing the right contract format. OpenAPI specifications work well for REST APIs, while Protocol Buffers (.proto) definitions describe gRPC services. The contract becomes your source of truth, describing exactly what data each service expects and returns.

Version your contracts alongside your code. When service interfaces evolve, contracts must reflect these changes immediately. Store contracts in a centralized repository where all teams can access the latest versions. This prevents the common problem of outdated documentation causing integration failures.

Contract Management Best Practices:

- Use semantic versioning for contract changes

- Document breaking vs non-breaking modifications

- Implement contract validation in development environments

- Create mock servers from contract definitions

- Maintain backwards compatibility when possible

Prevent breaking changes in distributed systems

Breaking changes in a microservices architecture can cascade through multiple services, causing widespread system failures. Contract testing catches these issues before they reach production by validating service interactions against predefined agreements.

Set up automated validation that runs whenever someone modifies a service interface. This validation checks if changes break existing contracts with dependent services. The system should flag potential problems immediately, allowing developers to address compatibility issues during development rather than after deployment.
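The core of such a check is a comparison between the old and new shape of a response. The sketch below works on a deliberately simplified schema, a plain mapping of field name to type name, which is an assumption for the example; real contract formats such as OpenAPI carry far more detail.

```python
def find_breaking_changes(old: dict, new: dict) -> list:
    """Compare two {field: type} response schemas from the consumer's view."""
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field: {field}")
        elif new[field] != old_type:
            problems.append(f"type changed: {field} ({old_type} -> {new[field]})")
    # Fields that exist only in `new` are additive, so they are not reported.
    return problems


v1 = {"id": "int", "email": "str"}
v2_additive = {"id": "int", "email": "str", "nickname": "str"}
v2_breaking = {"id": "str"}

additive_report = find_breaking_changes(v1, v2_additive)
breaking_report = find_breaking_changes(v1, v2_breaking)
```

In a pipeline, a non-empty report would fail the build for the interface change.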

Implement contract evolution strategies that minimize disruption. Support multiple contract versions simultaneously during transition periods. This approach lets dependent services migrate at their own pace while maintaining system stability.

Breaking Change Prevention Strategies:

| Strategy | Description | Impact Level |
|---|---|---|
| Additive Changes | Add new fields without removing existing ones | Low |
| Optional Fields | Make new requirements optional initially | Medium |
| Deprecation Periods | Gradually phase out old contract versions | Medium |
| Parallel Versioning | Run multiple contract versions simultaneously | High |

Implement consumer-driven contract testing

Consumer-driven contract testing flips the traditional approach by letting service consumers define what they expect from providers. This method ensures that services deliver exactly what their consumers need, reducing over-engineering and unnecessary features.

Start by having each consumer service create contract tests that specify their exact requirements. These tests define the minimum viable interface needed for successful integration. The provider service then implements functionality that satisfies all consumer contracts.

Popular tools like Pact facilitate this approach by generating contracts from consumer tests and verifying them against provider implementations. The consumer writes tests describing their expected interactions, and Pact creates shareable contracts that providers can validate against.

Consumer-Driven Testing Workflow:

- Consumer defines expected interactions in test code

- Framework generates contract files from consumer tests

- Provider validates implementation against all consumer contracts

- Failed validations prevent deployment of breaking changes

- Contract broker manages and versions all agreements

This approach prevents providers from making changes that break consumer expectations while ensuring consumers only test against realistic provider capabilities.
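The workflow can be sketched without any tooling to show the moving parts. The code below is a hand-rolled illustration and does not use Pact's API; the service names, the `/users/1` route, and the contract layout are all assumptions.

```python
# Consumer side: each consumer records only the fields it actually reads.
CONSUMER_CONTRACTS = {
    "billing-service": {
        "request": ("GET", "/users/1"),
        "response_fields": {"id": int, "email": str},
    },
    "email-service": {
        "request": ("GET", "/users/1"),
        "response_fields": {"email": str},
    },
}


def provider_handle(method, path):
    """Hypothetical provider implementation under verification."""
    if (method, path) == ("GET", "/users/1"):
        return {"id": 1, "email": "alice@example.test", "name": "Alice"}
    raise LookupError(f"no route for {method} {path}")


def verify_provider(contracts, handle):
    """Replay every consumer's request and check the fields it relies on."""
    failures = []
    for consumer, contract in contracts.items():
        body = handle(*contract["request"])
        for field, expected_type in contract["response_fields"].items():
            if not isinstance(body.get(field), expected_type):
                failures.append(
                    f"{consumer}: {field} should be {expected_type.__name__}"
                )
    return failures


failures = verify_provider(CONSUMER_CONTRACTS, provider_handle)
```

The provider returns an extra `name` field that no consumer asked for, and verification still passes: consumers constrain only what they use, which leaves the provider free to evolve everything else.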

Integrate contract tests into CI/CD pipelines

Automated contract validation in CI/CD pipelines catches compatibility issues before they affect production systems. Set up pipeline stages that verify contracts during both consumer and provider deployments.

Configure your pipeline to run contract tests whenever someone pushes code changes. Consumer pipelines should generate updated contracts and publish them to a central broker. Provider pipelines must validate implementations against all published consumer contracts before allowing deployment.

Implement can-i-deploy checks that verify compatibility across service versions. These checks prevent deploying services that would break existing integrations. The pipeline should block deployments when contract violations exist and provide clear feedback about required fixes.
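Conceptually, a can-i-deploy check is a lookup in a matrix of verification results. The sketch below models that idea with in-memory data; it is not the Pact Broker's interface, and the service names and versions are invented.

```python
# (consumer, consumer_version, provider, provider_version) pairs that passed verification.
VERIFIED = {
    ("web", "2.1", "orders", "5.0"),
    ("web", "2.1", "orders", "5.1"),
    ("mobile", "1.4", "orders", "5.0"),
}

# Consumer versions currently running in production (hypothetical).
DEPLOYED = {"web": "2.1", "mobile": "1.4"}


def can_i_deploy(provider, version, consumers):
    """Return (ok, blockers): blockers are consumers not verified against `version`."""
    blockers = [
        consumer
        for consumer in consumers
        if (consumer, DEPLOYED[consumer], provider, version) not in VERIFIED
    ]
    return (not blockers, blockers)


safe = can_i_deploy("orders", "5.0", ["web", "mobile"])
blocked = can_i_deploy("orders", "5.1", ["web", "mobile"])
```

Returning the list of blockers rather than just a boolean gives the pipeline something specific to report when it refuses a deployment.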

Pipeline Integration Steps:

- Pre-commit hooks: Validate local contract changes

- Build stage: Generate contracts from consumer tests

- Test stage: Verify provider compliance with contracts

- Pre-deployment: Run can-i-deploy compatibility checks

- Post-deployment: Update contract broker with new versions

Align your contract testing strategy with your deployment patterns. Blue-green deployments can validate contracts against both environments before switching traffic. Rolling deployments should verify contracts incrementally as new instances come online.

Contract brokers like Pact Broker or PactFlow centralize contract management and provide deployment safety nets. These tools track which service versions are compatible and prevent incompatible deployments from reaching production environments.

Optimize Test Execution Strategy and Performance

Balance test coverage across all testing levels

Getting your test automation architecture right means finding the sweet spot between comprehensive coverage and execution efficiency. The test pyramid is your guide here: unit tests form the foundation (70-80% of total tests), integration tests sit in the middle layer (15-20%), and end-to-end tests sit at the top (5-10%).

Start by mapping your application’s critical paths and business logic to determine where each type of test adds the most value. Unit tests should cover complex algorithms, edge cases, and business rules within individual components. Integration tests focus on data flow between services, API contracts, and database interactions. Reserve end-to-end tests for core user journeys that directly impact revenue or user satisfaction.

Monitor test coverage metrics across all levels to identify gaps and redundancies. A robust automated testing workflow includes coverage reports that show not just what code is tested, but which scenarios and user paths are validated at each level.

| Test Level | Coverage Target | Focus Areas |
|---|---|---|
| Unit | 80-90% | Business logic, edge cases, algorithms |
| Integration | 60-70% | Service interactions, data flow |
| E2E | 40-50% | Critical user journeys, workflows |

Implement parallel test execution for faster feedback

Speed matters in modern development cycles, and your test automation framework must deliver results fast. Parallel execution transforms testing from a bottleneck into an accelerator by running tests simultaneously across multiple environments, browsers, or devices.

Design your test suite with parallelization in mind from day one. Break tests into independent, atomic units that don’t share state or resources. Use containerization technologies like Docker to create isolated test environments that can spin up and down quickly. Cloud-based testing platforms offer elastic scaling capabilities that let you run hundreds of tests simultaneously without maintaining expensive infrastructure.

Implement smart test distribution algorithms that balance load across available resources. Group tests by execution time, complexity, or resource requirements to maximize throughput. In a microservices testing strategy, assign dedicated test runners to different service boundaries to avoid resource conflicts.
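One simple distribution rule is greedy longest-first scheduling: sort tests by recorded duration and always hand the next one to the least-loaded worker. The sketch below assumes you already have per-test timings from earlier runs; the names and numbers are invented.

```python
import heapq


def distribute(durations: dict, workers: int) -> list:
    """Greedy longest-first assignment to the currently least-loaded worker."""
    heap = [(0.0, i) for i in range(workers)]  # (total seconds, worker index)
    buckets = [[] for _ in range(workers)]
    by_duration = sorted(durations.items(), key=lambda kv: kv[1], reverse=True)
    for name, seconds in by_duration:
        load, idx = heapq.heappop(heap)  # least-loaded worker so far
        buckets[idx].append(name)
        heapq.heappush(heap, (load + seconds, idx))
    return buckets


# Hypothetical timings in seconds, taken from a previous run.
durations = {"checkout": 90, "search": 40, "login": 30, "profile": 20, "cart": 20}
buckets = distribute(durations, workers=2)
loads = [sum(durations[t] for t in bucket) for bucket in buckets]
```

This heuristic does not guarantee the optimal split, but it is cheap and usually keeps the slowest worker close to the average load.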

Consider these parallel execution patterns:

- Test class parallelization: Run entire test classes on separate threads

- Test method parallelization: Execute individual test methods concurrently

- Browser parallelization: Run tests across multiple browser instances

- Environment parallelization: Execute tests on different staging environments simultaneously

Create efficient test reporting and monitoring systems

Your testing architecture isn’t complete without comprehensive visibility into test results and system health. Build reporting systems that provide actionable insights, not just pass/fail statistics.

Real-time dashboards should display test execution progress, failure rates, and performance trends across all test levels. Implement intelligent alerting that notifies teams immediately when critical tests fail or when failure rates exceed acceptable thresholds. Include screenshots, logs, and stack traces in failure reports to speed up debugging.

Track key metrics that matter to your team:

- Test execution time trends

- Flaky test identification and rates

- Code coverage evolution over time

- Test maintenance overhead

- Mean time to detection (MTTD) for bugs
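As an example of turning one of these metrics into a number, the sketch below flags a test as flaky when it both passed and failed on the same commit. That definition, and the shape of the run records, are assumptions for illustration; teams define flakiness in different ways.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(passed)
    return sorted(
        {test for (_, test), seen in outcomes.items() if seen == {True, False}}
    )


# Hypothetical run history: (commit, test name, passed?)
runs = [
    ("abc123", "test_login", True),
    ("abc123", "test_login", False),     # same code, different result
    ("abc123", "test_checkout", False),
    ("abc123", "test_checkout", False),  # consistently failing, not flaky
    ("def456", "test_search", True),
]

flaky = flaky_tests(runs)
flaky_rate = len(flaky) / len({test for _, test, _ in runs})
```

Keying on the commit separates genuine regressions, which fail consistently, from tests whose result changes without a code change.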

Integrate reporting with your development workflow through tools like Slack, Microsoft Teams, or custom webhooks. Automated test reports should include contextual information like the git commit hash, branch name, and deployment environment to help developers quickly identify the root cause of failures.

Set up monitoring for test infrastructure health, including resource utilization, queue depths, and execution environment status. This proactive approach prevents test failures caused by infrastructure issues rather than actual code problems, maintaining trust in your test automation architecture.

Building a solid test automation architecture isn’t just about checking boxes—it’s about creating a safety net that lets your team move fast without breaking things. When you combine unit tests for quick feedback, integration tests for system confidence, end-to-end tests for user experience validation, and contract tests for service boundaries, you’re setting up a testing strategy that actually works. Each layer serves a specific purpose, and together they give you the coverage and speed you need to ship quality software.

The real magic happens when you optimize how these tests run together. Start small with a strong unit testing foundation, then gradually build up your integration and contract testing capabilities. Save the heavy end-to-end tests for your most critical user paths, and always keep performance in mind. Your future self will thank you when deployments become routine instead of nail-biting experiences, and your team can focus on building features instead of firefighting bugs.