Ever spent hours optimizing your system only to realize you’ve been chasing the wrong metric? That was me last month, knee-deep in server logs, optimizing for throughput when latency was actually killing our user experience.

System design isn’t just about making things work; it’s about making the right things work right. Understanding the difference between latency and throughput can make or break your architecture.

I’m going to break this down in plain English: latency is about speed (how fast), throughput is about volume (how much). Sounds simple, but the implications are huge.

And here’s where most engineers get it wrong: optimizing one often comes at the expense of the other. But does it have to? The answer might surprise you.

Fundamentals of System Performance Metrics

A. Defining Latency: The Wait Time Explained

Latency is that frustrating pause between action and response in your system. Think of it as waiting for your coffee order – you want it now, not in five minutes. In technical terms, it’s the time delay between initiating a request and receiving the first response. Lower latency means a snappier, more responsive system that keeps users happy.
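To make the definition concrete, here is a minimal sketch of timing a single request in Python. The `fake_request` function is a hypothetical stand-in for whatever call you actually care about (an HTTP request, a database query), and the 10 ms sleep is an arbitrary assumption.

```python
import time


def measure_latency(fn, *args, **kwargs):
    """Return (result, elapsed_seconds) for a single call to fn."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def fake_request():
    # Hypothetical stand-in for a network call or database query.
    time.sleep(0.01)
    return "ok"


result, seconds = measure_latency(fake_request)
print(f"{result} in {seconds * 1000:.1f} ms")
```

One measurement tells you very little on its own; in practice you would collect many samples and look at their distribution.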

B. Understanding Throughput: Measuring System Capacity

Throughput is all about volume – how much work your system completes in a given timeframe. It’s like comparing a two-lane country road to an eight-lane highway. Measured in operations per second (or data per second), throughput is the rate your system actually sustains; the maximum rate it can sustain before falling apart is its capacity. Higher throughput means your system can serve more users or process more data in the same amount of time.

C. The Relationship: How Latency and Throughput Interact

The relationship between latency and throughput isn’t straightforward – they often pull in opposite directions. Crank up throughput by processing more requests, and you might increase latency as queues build up. Focus solely on reducing latency, and you might limit how many requests you can handle. The sweet spot? Finding the balance that delivers acceptable response times while maximizing processing capacity for your specific use case.
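One way to see why queues make latency climb as you push throughput is a simple queueing model. The sketch below uses the textbook M/M/1 formula for mean time in the system, W = 1 / (μ − λ), where λ is the arrival rate and μ is the service rate. Real systems rarely match M/M/1 assumptions exactly, and the 100 requests/second server is a made-up number, so treat this as an illustration of the shape of the curve rather than a prediction.

```python
def mm1_mean_latency(arrival_rate, service_rate):
    """Mean time in system (waiting + service) for an M/M/1 queue, in seconds."""
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable: arrivals meet or exceed capacity")
    return 1.0 / (service_rate - arrival_rate)


SERVICE_RATE = 100.0  # hypothetical server: at most 100 requests/second

for load in (10, 50, 90, 99):
    latency_ms = mm1_mean_latency(load, SERVICE_RATE) * 1000
    print(f"{load:>3} req/s -> {latency_ms:7.1f} ms mean latency")
```

Under this model, going from 10 to 99 requests per second takes mean latency from about 11 ms to a full second, even though the server never exceeds its capacity. That is the trade-off in one number: the last bit of throughput is the most expensive in latency.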

D. Why These Metrics Matter in Modern System Design

These metrics aren’t just technical jargon – they directly impact user experience and business outcomes. Users abandon slow websites. Financial transactions demand ultra-low latency. Streaming services require sustained high throughput. Modern systems must be designed with both metrics in mind, especially as applications grow more distributed and user expectations continue to rise. Getting these fundamentals right lays the groundwork for everything else.

Latency Deep Dive: Beyond the Basics

A. Types of Latency in Computing Systems

Network latency, processing latency, disk latency, and memory latency all impact your system differently. Network latency happens when data travels across networks, while processing latency occurs during CPU operations. Disk latency shows up when reading or writing to storage, and memory latency creeps in when accessing RAM. Each type creates unique bottlenecks that require specific optimization approaches.

B. Measuring Latency: Tools and Methodologies

When measuring latency, you need the right tools in your arsenal. Ping and traceroute help diagnose network issues, while application performance monitoring (APM) platforms like New Relic or Datadog dive deeper into system performance. For web applications, browser developer tools track page load times and identify bottlenecks. Load-testing tools like Apache JMeter simulate traffic to measure response times under pressure. Always gather data over time to spot trends, and look at percentiles (p95, p99) as well as averages, because a healthy-looking mean can hide a slow tail.
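Whatever tool collects the numbers, you’ll usually want to summarize them as percentiles. Here is a small sketch using only Python’s standard library (`statistics.quantiles`, available since Python 3.8). The sample data is invented: 95 fast requests and 5 slow ones.

```python
import statistics


def latency_summary(samples_ms):
    """Summarize latency samples (milliseconds) as mean, median, p95 and p99."""
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean": statistics.mean(samples_ms),
        "p50": statistics.median(samples_ms),
        "p95": cuts[94],
        "p99": cuts[98],
    }


# Hypothetical samples: most requests are fast, a few are very slow.
samples = [10.0] * 95 + [400.0] * 5
for name, value in latency_summary(samples).items():
    print(f"{name}: {value:.1f} ms")
```

The point of the made-up data is that the mean and the median both look fine while the p99 exposes the slow tail that some users actually experience.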

C. Common Causes of High Latency

High latency often stems from network congestion, where too much traffic creates digital traffic jams. Poorly optimized code forces systems to work harder than necessary. Insufficient resources—whether CPU, memory, or disk space—create bottlenecks. Database queries without proper indexing drag performance down. Geographic distance between servers and users adds unavoidable physics-based delays. Hardware limitations can also cap your system’s potential.
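The “physics-based delay” is easy to estimate. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km/s. The sketch below uses that approximation to compute a lower bound on round-trip time. The 6,000 km distance is an arbitrary example, and real routes are longer than the straight-line distance and add routing and processing delays on top.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximation: about two-thirds of c


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time over fiber, ignoring routing and processing."""
    one_way_s = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_s * 1000


print(f"{min_round_trip_ms(6_000):.0f} ms")  # hypothetical 6,000 km path
```

For a 6,000 km path this floor works out to about 60 ms per round trip, before your code has done anything at all. No amount of server tuning removes it; only moving the data closer does.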

D. Real-world Impact of Latency on User Experience

Ever abandoned a website because it took too long to load? That’s latency killing conversions. A widely cited 2017 Akamai study reported that a 100ms delay in load time can reduce conversion rates by 7%. In gaming, high latency makes the difference between victory and defeat. For financial trading systems, milliseconds can mean millions in lost opportunities. An often-repeated figure attributed to Amazon puts the cost at roughly 1% of sales for every 100ms of added delay. The exact numbers vary by site and study, but the direction doesn’t: users notice, and they’re not patient.

E. Latency Optimization Strategies

Content delivery networks (CDNs) place your assets closer to users, instantly cutting geographic latency. Implement caching at multiple levels—browser, application, and database—to avoid repetitive work. Asynchronous processing lets your system handle operations without making users wait. Database optimization through proper indexing and query tuning eliminates costly bottlenecks. Code profiling helps identify and fix slow functions before they impact users.

Throughput Explained: Maximizing System Efficiency

A. Components That Determine Throughput

Think of throughput as your highway’s capacity to move cars. Your system’s throughput depends on processing power (CPU), memory bandwidth, network capacity, and storage I/O speeds. These components work together like a relay team – if one runner drops the baton, the whole team slows down.

B. Calculating and Benchmarking Throughput

Measuring throughput is straightforward: divide the amount of work by the time it takes. Want to know your database’s throughput? Count transactions per second. Testing tools like JMeter, LoadRunner, and Apache Bench give you real numbers to work with instead of guessing. Without benchmarks, you’re flying blind.
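The “work divided by time” calculation looks like this in a minimal Python sketch. The workload here (`sum(range(1000))`) is just a placeholder; a single-threaded loop like this measures one worker’s rate, whereas dedicated load-testing tools drive many concurrent clients.

```python
import time


def measure_throughput(fn, operations):
    """Run fn `operations` times and return completed operations per second."""
    start = time.perf_counter()
    for _ in range(operations):
        fn()
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse clocks.
    return operations / max(elapsed, 1e-9)


rate = measure_throughput(lambda: sum(range(1000)), operations=10_000)
print(f"{rate:,.0f} ops/sec")
```

Run it a few times and you’ll see the number move around, which is a good reminder to benchmark repeatedly and under realistic load before trusting any single figure.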

C. Bottlenecks That Limit Throughput Performance

Bottlenecks are the party poopers of system design. Your entire system moves only as fast as its slowest component. Common culprits? Overloaded CPUs, maxed-out memory, network congestion, and disk I/O limitations. Finding these chokepoints requires monitoring each component’s utilization during peak loads.
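For a pipeline where every request passes through each stage in turn, the “slowest component” rule reduces to taking a minimum. The stage names and capacities below are invented for illustration; in practice you would fill them in from your own monitoring.

```python
def find_bottleneck(stage_capacity):
    """Return (stage_name, capacity) for the slowest stage of a serial pipeline."""
    name = min(stage_capacity, key=stage_capacity.get)
    return name, stage_capacity[name]


# Hypothetical capacities in requests/second for each stage.
pipeline = {
    "load_balancer": 50_000,
    "app_server": 8_000,
    "database": 1_200,
    "disk": 3_000,
}

stage, capacity = find_bottleneck(pipeline)
print(f"System throughput is capped at {capacity} req/s by the {stage}")
```

In this made-up setup, doubling the app servers would change nothing: the database still caps the whole system at 1,200 requests per second.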

D. Scaling Strategies to Improve Throughput

Hitting throughput limits? You’ve got options. Vertical scaling (bigger machines) works for immediate needs but gets expensive fast. Horizontal scaling (more machines) lets you distribute the load and often costs less long-term. Smart caching, load balancing, and database sharding can multiply your throughput without breaking the bank.
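As a rough illustration of sharding, here is a sketch that routes each key to one of N shards by hashing it. The key format and the shard count are assumptions. Note that simple modulo routing like this reshuffles most keys whenever the shard count changes, which is why production systems often use consistent hashing instead.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a string key to a shard index in [0, shard_count)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer; the hash is stable across runs.
    return int.from_bytes(digest[:8], "big") % shard_count


SHARDS = 4  # hypothetical number of database shards
counts = [0] * SHARDS
for user_id in range(10_000):
    counts[shard_for(f"user:{user_id}", SHARDS)] += 1
print(counts)  # keys per shard; should be roughly even
```

Because each shard handles only its own slice of the keys, total throughput can grow with the number of shards, as long as no single key or shard becomes a hotspot.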

The Critical Trade-offs Between Latency and Throughput

A. When to Prioritize Latency Over Throughput

In real-time systems like trading platforms or online gaming, every millisecond counts. Users bail when pages load slowly. Gaming companies know this—they’ll sacrifice some throughput capacity to ensure players experience smooth gameplay without frustrating delays. The same applies to emergency response systems where immediate feedback literally saves lives.

B. When Throughput Matters More Than Response Time

Batch processing systems don’t need lightning-fast responses. Think overnight data analysis, video rendering farms, or scientific computing. These systems thrive on processing massive volumes efficiently. Cloud providers often optimize for throughput in their storage solutions, letting customers process enormous datasets even if individual operations take longer.
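Batching is the classic way these systems buy throughput with latency. The sketch below uses a deliberately simple cost model: every batch pays a fixed overhead (connection setup, a disk seek, a commit) plus a per-item cost. The 10 ms and 1 ms figures are assumptions chosen to make the arithmetic easy, and the model ignores the time items spend waiting for a batch to fill.

```python
def batch_metrics(batch_size, fixed_overhead_ms, per_item_ms):
    """Return (time to finish one batch in ms, throughput in items/second)."""
    batch_time_ms = fixed_overhead_ms + batch_size * per_item_ms
    throughput_per_s = batch_size / batch_time_ms * 1000
    return batch_time_ms, throughput_per_s


# Hypothetical costs: 10 ms of overhead per batch, 1 ms of work per item.
for size in (1, 10, 100, 1000):
    latency_ms, rate = batch_metrics(size, fixed_overhead_ms=10.0, per_item_ms=1.0)
    print(f"batch={size:>4}  completes in {latency_ms:7.1f} ms  {rate:6.1f} items/s")
```

Under these assumptions, a batch of 100 delivers about ten times the throughput of item-at-a-time processing, but each item now waits 110 ms instead of 11 ms for its batch to finish. For an overnight job that is a bargain; for a checkout button it is not.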

C. Balancing Both Metrics in Different System Types

The sweet spot differs across systems. E-commerce platforms need fast product page loads (latency) during browsing but high transaction processing (throughput) during checkout. Modern architectures achieve this balance through caching, load distribution, and prioritization techniques. Streaming services like Netflix use adaptive bitrate streaming: when the network struggles, the player drops to a lower video quality, which needs less throughput, so playback continues without rebuffering.
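The streaming trade-off can be sketched as a small selection rule: pick the highest video bitrate that fits within the bandwidth you are currently measuring, with some headroom. To be clear, this is not Netflix’s actual algorithm; the bitrate ladder and the 0.8 safety factor below are made-up values for illustration.

```python
# Hypothetical bitrate ladder in kilobits per second, lowest quality first.
BITRATE_LADDER_KBPS = [235, 750, 1750, 3000, 5800]


def pick_bitrate(measured_kbps, safety_factor=0.8):
    """Choose the highest bitrate that fits within a fraction of measured bandwidth."""
    budget = measured_kbps * safety_factor
    candidates = [b for b in BITRATE_LADDER_KBPS if b <= budget]
    # If even the lowest rung does not fit, fall back to it rather than stall.
    return max(candidates) if candidates else BITRATE_LADDER_KBPS[0]


for bandwidth in (10_000, 4_000, 1_000, 100):
    print(f"{bandwidth:>6} kbps available -> stream at {pick_bitrate(bandwidth)} kbps")
```

The design choice is the interesting part: the player gives up picture quality first, because a blurry frame that arrives on time is a better experience than a sharp one that arrives after a buffering spinner.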

Practical Applications in Modern System Design

A. Cloud Infrastructure Optimization Techniques

Modern cloud platforms live and die by their performance metrics. When architecting cloud solutions, you’ll constantly juggle CPU allocation, memory distribution, and storage configurations. The key? Design auto-scaling systems that monitor both latency spikes and throughput demands, then dynamically adjust resources before users notice any slowdown.
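Here is a rough sketch of an auto-scaling rule that watches both metrics. It is not any cloud provider’s API; the function name, the 200 ms latency target, and the 500 requests/second per replica are all assumptions you would replace with numbers from your own load tests.

```python
import math


def desired_replicas(
    current,
    p95_latency_ms,
    requests_per_s,
    latency_target_ms=200,     # assumed latency objective
    capacity_per_replica=500,  # assumed req/s one replica handles comfortably
    min_replicas=1,
    max_replicas=20,
):
    """Suggest a replica count from current throughput demand and latency."""
    # Throughput signal: enough replicas to carry the current request rate.
    needed = max(math.ceil(requests_per_s / capacity_per_replica), min_replicas)
    # Latency signal: if we are over target, add at least one replica.
    if p95_latency_ms > latency_target_ms:
        needed = max(needed, current + 1)
    return min(needed, max_replicas)


print(desired_replicas(current=2, p95_latency_ms=150, requests_per_s=2_400))
print(desired_replicas(current=4, p95_latency_ms=350, requests_per_s=1_000))
```

In the first call, throughput demand alone drives the scale-up. In the second, the request rate looks comfortable but latency is over target, so the rule still adds a replica. A production version would also need cool-down periods so it doesn’t flap between sizes.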

B. Database Systems: Finding the Right Balance

Database performance isn’t just about raw speed. It’s about delivering consistent results under pressure. In-memory databases like Redis deliver very low latency, but durability depends on how persistence (snapshots or an append-only file) is configured. Distributed systems like Cassandra offer high write throughput and horizontal scale, with tunable consistency that is often run as eventually consistent. Your data access patterns should dictate which tradeoff makes sense.

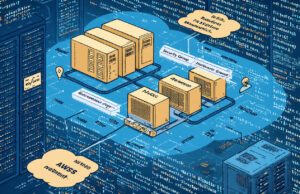

C. Microservices Architecture Considerations

Breaking monoliths into microservices introduces communication overhead that can torpedo latency. But here’s the thing—properly designed service boundaries create isolation that dramatically improves throughput under load. Smart teams use circuit breakers, bulkheads, and asynchronous messaging to maintain responsiveness even when specific services struggle.
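To show what a circuit breaker does, here is a minimal single-threaded sketch. The class, its thresholds, and the injectable `clock` are illustrative choices rather than any particular library’s API; real implementations add thread safety, metrics, and richer half-open handling.

```python
import time


class CircuitBreaker:
    """Fail fast after repeated errors instead of waiting on a struggling service."""

    def __init__(self, failure_threshold=3, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock      # injectable so the cool-down can be tested
        self.failures = 0
        self.opened_at = None   # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise RuntimeError("circuit open: failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip the breaker
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


breaker = CircuitBreaker(failure_threshold=3, reset_after_s=30.0)
print(breaker.call(lambda: "healthy response"))
```

The latency benefit comes from the fast failure: callers get an immediate error they can handle with a fallback, instead of tying up threads and connections waiting on a service that is already down.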

D. Network Design for Optimal Performance

Network design makes or breaks system performance. Content delivery networks (CDNs) slash latency by positioning assets closer to users, while load balancers distribute traffic to maximize throughput. The biggest gains come from routing policies that adapt to changing conditions, such as least-connections or latency-based routing, which help keep performance consistent through traffic spikes.

E. Edge Computing: The New Frontier for Latency Reduction

Edge computing flips the traditional model on its head. Instead of centralizing processing, it pushes computation closer to data sources. The results? Large latency improvements for applications where milliseconds matter, like autonomous vehicles, industrial IoT, and augmented reality. This approach trades away some throughput capacity, since edge nodes are typically much smaller than a central data center, in exchange for dramatically faster response times.

Industry-Specific Optimization Approaches

A. E-commerce Platforms: Converting Sales Through Speed

Ever notice how Amazon loads almost instantly? That’s no accident. E-commerce giants have seen the studies suggesting that a 100ms delay can cut conversion rates by as much as 7%. They prioritize lightning-fast product pages and checkout processes while maintaining enough throughput to handle flash sales and holiday rushes. When millions shop simultaneously, that balance becomes pure profit.

B. Financial Systems: Where Milliseconds Equal Millions

In high-frequency trading, being 1ms faster than competitors can mean capturing millions in profit opportunities. Financial systems are the ultimate example of latency obsession. Trading platforms use specialized hardware, dedicated fiber lines, and co-location services to shave microseconds off transaction times. Meanwhile, their back-end systems must process enormous transaction volumes for clearing and settlement.

C. Gaming and Media Streaming: Enhancing User Experience

Gaming and streaming services face a unique challenge – they need both low latency and high throughput simultaneously. A 50ms lag in an online shooter game means missing your shot, while insufficient throughput causes buffering in 4K streams. Companies like Netflix pre-position content in local servers to reduce latency while optimizing compression algorithms to maintain quality with less bandwidth.

D. IoT Networks: Managing Massive Data Throughput

IoT networks are throughput beasts. Think about it – billions of connected devices continuously sending sensor readings. While individual messages are tiny, the collective data tsunami is enormous. Smart city implementations prioritize throughput for environmental sensors while demanding low latency for critical infrastructure monitoring. Edge computing helps by processing data locally before sending only essential information to central systems.
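“Processing data locally before sending only essential information” might look something like the sketch below: an edge node collapses a window of raw sensor readings into one summary record and forwards any out-of-range values right away. The field names, the temperature readings, and the threshold of 80 are all hypothetical.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a window of raw readings to one summary plus any urgent values."""
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }
    # Readings above the threshold are forwarded immediately (low-latency path).
    alerts = [r for r in readings if r > alert_threshold]
    return summary, alerts


# Hypothetical temperature readings collected over one window.
window = [20.0, 21.0, 22.0, 95.0]
summary, alerts = summarize_window(window, alert_threshold=80.0)
print(summary)
print(alerts)
```

This splits traffic by what it needs: routine data travels as a compact summary, which saves upstream throughput, while the rare critical reading takes the fast path, which protects latency.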

Future Trends in Performance Optimization

A. AI-driven Performance Tuning

Performance optimization is entering a new era. Machine learning models are increasingly used to balance latency and throughput with less human intervention, adjusting system parameters based on real-time workloads. Predictive autoscaling, for example, forecasts traffic patterns and scales resources ahead of demand, which can outperform static, hand-tuned thresholds when workloads are variable.

B. Quantum Computing’s Impact on Traditional Metrics

Quantum computing may change parts of our performance rulebook, though not all of it. Superposition and interference let quantum algorithms solve certain classes of problems, such as factoring large integers or simulating quantum systems, in far fewer steps than the best known classical algorithms. That is a speedup for specific workloads, not a general boost: today’s quantum hardware is still small and noisy, and latency and throughput remain the right metrics for the classical systems that serve everyday requests.

C. New Measurement Standards on the Horizon

Latency and throughput don’t tell the whole story. Some organizations are exploring more holistic measurements, with names like Experience Delivery Index (XDI) and Sustainable Computing Efficiency (SCE). The idea is to account for user perception, energy consumption, and adaptive performance across varying conditions, factors that raw latency and throughput numbers don’t capture on their own.

D. Emerging Hardware Solutions for Next-gen Systems

Hardware innovation is accelerating rapidly. Neuromorphic computing chips mimic brain structures for unprecedented efficiency. Photonic computing uses light instead of electricity, potentially increasing data transfer speeds by orders of magnitude. These technologies promise to deliver both ultralow latency and massive throughput, reducing the historical tradeoffs that system designers have faced for decades.

Understanding the intricate relationship between latency and throughput is crucial for any system designer aiming to build efficient applications. As we’ve explored, these two performance metrics often exist in a delicate balance, where optimizing for one frequently impacts the other. From examining the fundamentals of these metrics to diving deep into their trade-offs and practical applications across various industries, it’s clear that context-specific optimization is key to successful system design.

Whether you’re developing real-time financial trading platforms that prioritize latency, streaming services that emphasize throughput, or complex systems requiring a careful balance of both, remember that performance optimization is an ongoing journey. As technology continues to evolve with innovations in distributed systems, edge computing, and AI-driven optimizations, the approaches to managing latency and throughput will likewise transform. By maintaining a clear understanding of your specific use case requirements and applying the principles discussed in this guide, you’ll be well-equipped to make informed architectural decisions that deliver the optimal performance your users demand.